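Copyright notice: this blog is for study and note-taking, recording what I have learned and my reflections in note form. Various materials may be referenced along the way; if you feel anything here is quoted excessively, please let me know so it can be dealt with quickly. Corrections are welcome. https://blog.csdn.net/qq_34021712/article/details/79330028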

Environment

| Server | Master-eligible | Data node |
| --- | --- | --- |
| 192.168.8.101 | true | true |
| 192.168.8.103 | true | true |
| 192.168.8.104 | true | true |

Setup

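The prerequisite is a Java environment. ELK 6.2 requires JDK 1.8; Oracle JDK is the officially recommended distribution, and it is best to avoid OpenJDK. For installing the JDK, see:

"Installing the JDK on Linux" (linux安装jdk); just swap the installation package for the 1.8 one.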

Installing Elasticsearch

① Extract the archive

tar -zxvf elasticsearch-6.2.1.tar.gz

② Rename (move it into place)

mv elasticsearch-6.2.1 /usr/local/elk/elasticsearch

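③ Create the data directory (the data directory should be configured to live outside the Elasticsearch home directory, so that the home directory can be deleted without deleting the data!)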

mkdir /usr/local/elk/elasticsearch/data

④ Create the log directory (skip this if it already exists)

mkdir /usr/local/elk/elasticsearch/logs

⑤ Create a user and grant it ownership (Elasticsearch cannot be run as root)

chown -R es:es /usr/local/elk/elasticsearch
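A minimal sketch of this step, assuming the user and group are both named `es` (as the `chown` above implies). The `run` and `setup_es_user` helpers and the `DRY_RUN` switch are hypothetical names introduced for illustration; only `id`, `groupadd`, `useradd` and `chown` are real commands.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

ES_USER=es                               # assumed user/group name
ES_HOME=/usr/local/elk/elasticsearch     # install path used in this post

# Create the group and user if the user does not exist yet,
# then hand the installation directory over to that user.
setup_es_user() {
  if ! id -u "$ES_USER" >/dev/null 2>&1; then
    run groupadd "$ES_USER"
    run useradd -g "$ES_USER" "$ES_USER"
  fi
  run chown -R "$ES_USER:$ES_USER" "$ES_HOME"
}

# Usage (as root):   setup_es_user
# Preview only:      DRY_RUN=1 setup_es_user
```

Running it with `DRY_RUN=1` first is a cheap way to see what would be executed before touching the system.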

⑥ Edit the Elasticsearch configuration file

vim /usr/local/elk/elasticsearch/config/elasticsearch.yml and change the following settings:

path.data: /usr/local/elk/elasticsearch/data

path.logs: /usr/local/elk/elasticsearch/logs

bootstrap.memory_lock: true

discovery.zen.ping.unicast.hosts: ["192.168.8.101:9300", "192.168.8.103:9300", "192.168.8.104:9300"]

discovery.zen.minimum_master_nodes: 3

After editing, use the following command to see exactly which values are set:

grep '^[a-z]' /usr/local/elk/elasticsearch/config/elasticsearch.yml

⑦ Adjust the JVM heap size

vim /usr/local/elk/elasticsearch/config/jvm.options
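As a rough sketch of how the heap values might be chosen: the usual guidance is to set `-Xms` and `-Xmx` to the same value and to give the heap no more than about half of physical RAM. The helper names below are hypothetical, and the 26 GB cap is an assumption chosen to stay under the compressed-pointers threshold; adjust it for your machines.

```shell
#!/bin/sh
# heap_mb TOTAL_MB [CAP_MB]: suggest a heap size in MB (half of RAM, capped).
heap_mb() {
  total_mb=$1
  cap_mb=${2:-26624}          # assumed cap of 26 GB, not taken from the post
  half=$(( total_mb / 2 ))
  if [ "$half" -gt "$cap_mb" ]; then
    half=$cap_mb
  fi
  echo "$half"
}

# Total physical RAM in MB, read from /proc/meminfo (Linux only).
total_ram_mb() {
  awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo
}

# Print the two jvm.options lines for a machine with TOTAL_MB of RAM.
jvm_heap_lines() {
  mb=$(heap_mb "$1")
  printf '%s\n' "-Xms${mb}m" "-Xmx${mb}m"
}

# Usage: jvm_heap_lines "$(total_ram_mb)"
```

The printed lines can then be set by hand in jvm.options, replacing the default `-Xms` and `-Xmx` entries.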

Start Elasticsearch on each of the three servers

Note: start it as the es user (su - es)

/usr/local/elk/elasticsearch/bin/elasticsearch -d

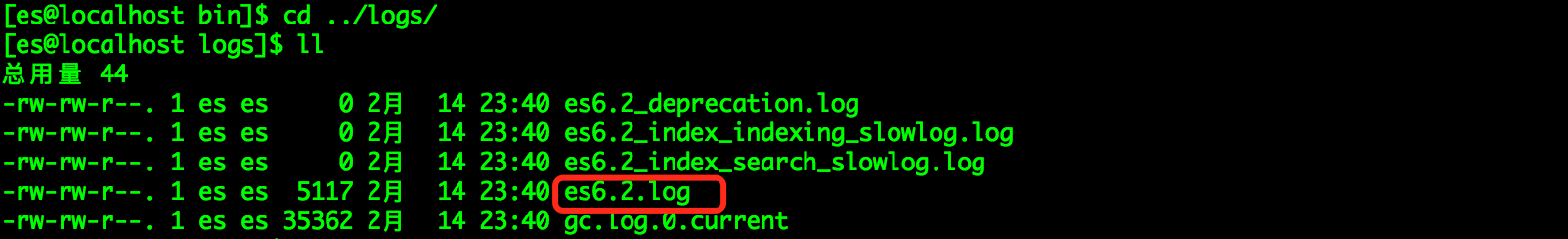

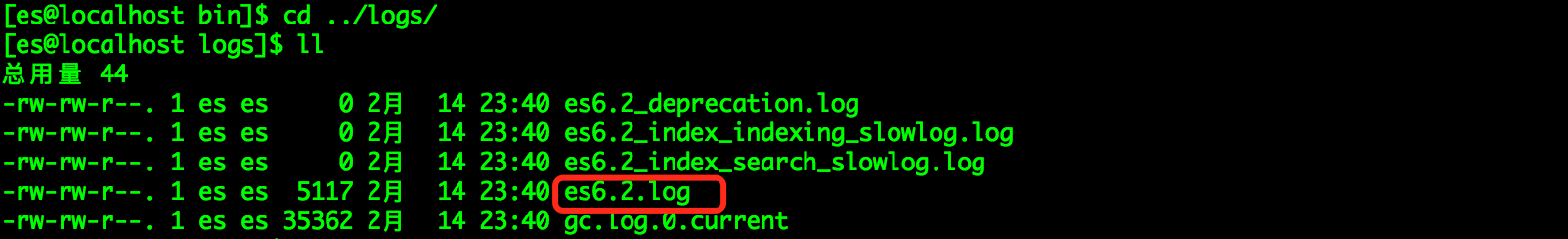

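Use ps -ef|grep elasticsearch to check whether the process is running. It is not. Why? Look at the logs under the log path we configured:

Pitfall 1: the log file is named after the cluster name. Open es6.2.log; it reports the following exception: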

[2018-02-14T23:40:16,908][ERROR][o.e.b.Bootstrap ] [node-1] node validation exception

[3] bootstrap checks failed

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

[2]: memory locking requested for elasticsearch process but memory is not locked

[3]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

[2018-02-14T23:40:16,910][INFO ][o.e.n.Node ] [node-1] stopping ...

[2018-02-14T23:40:17,016][INFO ][o.e.n.Node ] [node-1] stopped

[2018-02-14T23:40:17,016][INFO ][o.e.n.Node ] [node-1] closing ...

[2018-02-14T23:40:17,032][INFO ][o.e.n.Node ] [node-1] closed
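The three failed checks map to limits that can be inspected before starting the node. The following is a small sketch, assuming a Linux host and a shell whose `ulimit` supports `-n` and `-l` (bash does); `check_min` and `check_bootstrap_limits` are hypothetical helper names, and the thresholds are the ones quoted in the log above.

```shell
#!/bin/sh
# check_min NAME ACTUAL REQUIRED: pass if ACTUAL >= REQUIRED ("unlimited" always passes).
check_min() {
  name=$1
  actual=$2
  required=$3
  if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ]; then
    echo "OK   $name=$actual"
  else
    echo "FAIL $name=$actual (need >= $required)"
    return 1
  fi
}

# Run this as the user that starts Elasticsearch, since limits are per user.
check_bootstrap_limits() {
  rc=0
  check_min "max file descriptors" "$(ulimit -n)" 65536 || rc=1
  check_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 262144 || rc=1
  # With bootstrap.memory_lock: true the process must be allowed to lock memory.
  locked=$(ulimit -l)
  if [ "$locked" = "unlimited" ]; then
    echo "OK   max locked memory=unlimited"
  else
    echo "FAIL max locked memory=$locked (need unlimited)"
    rc=1
  fi
  return $rc
}

# Usage: su - es, then run check_bootstrap_limits
```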

Solution:

Switch back to the root user (su - root) and change the following settings

① vim /etc/security/limits.conf
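A minimal sketch of the usual fixes for the three failed checks, assuming the `es` user from step ⑤. The function name `render_limits` is hypothetical, and the values simply mirror the thresholds reported in the log; treat them as a starting point rather than the exact entries used here.

```shell
#!/bin/sh
# render_limits USER: print limits.conf entries raising the open-file limit
# and allowing unlimited memory locking for USER.
render_limits() {
  user=$1
  cat <<EOF
$user soft nofile 65536
$user hard nofile 65536
$user soft memlock unlimited
$user hard memlock unlimited
EOF
}

# Usage (as root):
#   render_limits es >> /etc/security/limits.conf
#   echo 'vm.max_map_count=262144' >> /etc/sysctl.conf
#   sysctl -p          # reload kernel parameters from /etc/sysctl.conf
```

The limits.conf entries only apply to new login sessions, so log in again as `es` before restarting Elasticsearch.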

After applying the changes and starting again, ps -ef|grep elasticsearch shows the process running, and netstat -ntlp shows that port 9200 is in use.

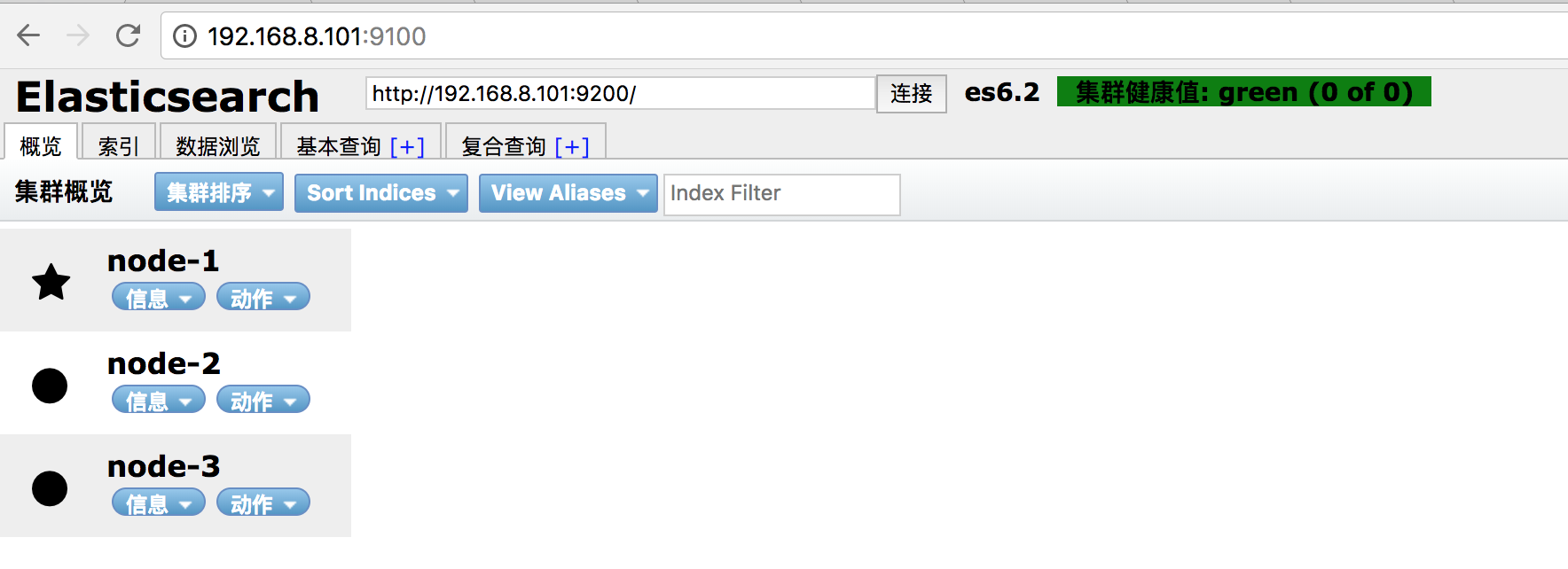

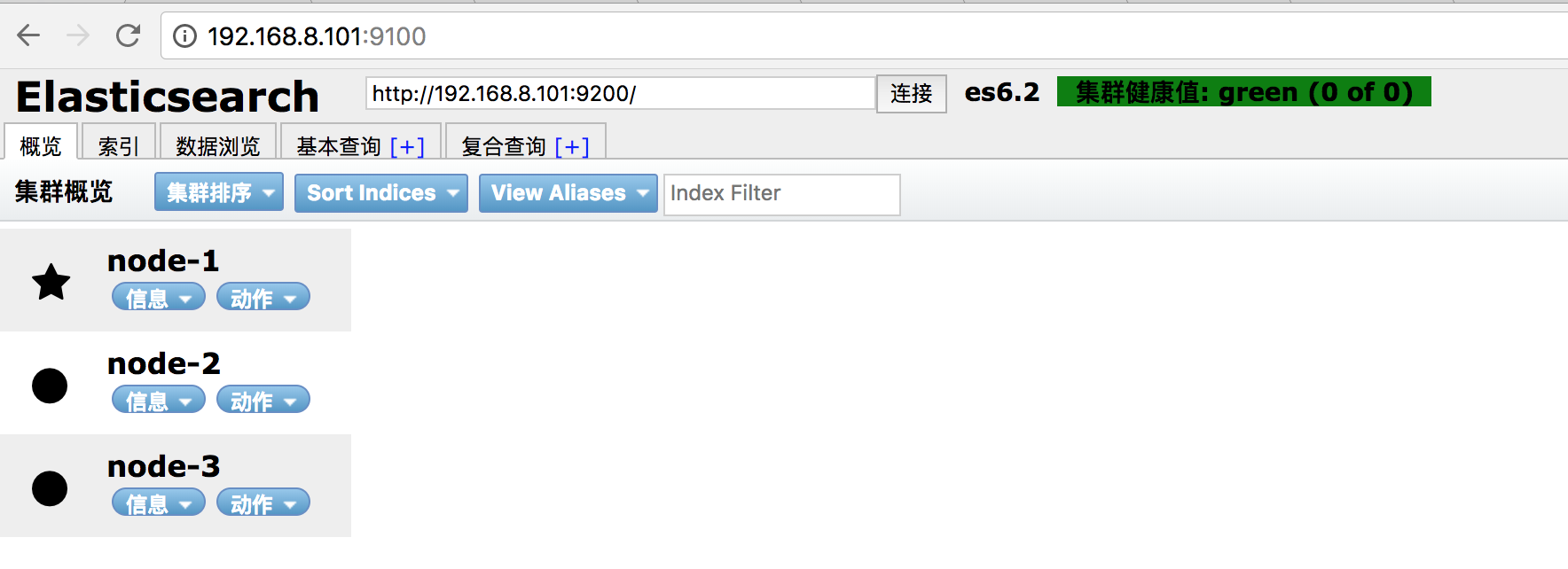

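However, although all three nodes reported started, they did not form a cluster, and the Elasticsearch Head plugin could not connect to the cluster.

For installing the Head plugin, see "Elasticsearch-head plugin installation" (Elasticsearch-head插件安装).

Pitfall 2: the log shows the following error: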

[2018-02-15T21:15:06,352][INFO ][rest.suppressed ] /_cat/health Params: {h=node.total}

MasterNotDiscoveredException[waited for [30s]]

at org.elasticsearch.action.support.master.TransportMasterNodeAction$4.onTimeout(TransportMasterNodeAction.java:160)

at org.elasticsearch.cluster.ClusterStateObserver$ObserverClusterStateListener.onTimeout(ClusterStateObserver.java:239)

at org.elasticsearch.cluster.service.InternalClusterService$NotifyTimeout.run(InternalClusterService.java:630)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

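Cause: discovery.zen.minimum_master_nodes was set to 3. With only three master-eligible nodes in total, that requires all three to be visible before a master can be elected, which does not work. Change discovery.zen.minimum_master_nodes to 2, which is a majority of three, i.e. (3 / 2) + 1.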
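The majority rule behind that value can be sketched in a line of shell arithmetic. The function name `quorum` is hypothetical; the formula is (master-eligible nodes / 2) + 1 with integer division.

```shell
#!/bin/sh
# quorum N: smallest majority of N master-eligible nodes, using integer division.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Usage: print the setting for a three-node cluster like the one in this post.
#   echo "discovery.zen.minimum_master_nodes: $(quorum 3)"
```

For the three servers here `quorum 3` gives 2, so the cluster can still elect a master with one node down, while two isolated halves can never both elect one.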

For an introduction to the discovery.zen.minimum_master_nodes parameter, see:

Start again

Pitfall 3: the Head plugin shows only 192.168.8.101, as the master node; the other two nodes have not joined. The log reports the following exception:

[2018-02-15T21:53:58,084][INFO ][o.e.d.z.ZenDiscovery ] [node-3] failed to send join request to master [{node-1}{SVrW6URqRsi3SShc1PBJkQ}{y2eFQNQ_TRenpAPyv-EnVg}{192.168.8.101}{192.168.8.101:9300}], reason [RemoteTransportException[[node-1][192.168.8.101:9300][internal:discovery/zen/join]]; nested: IllegalArgumentException[can't add node {node-3}{SVrW6URqRsi3SShc1PBJkQ}{uqoktM6XTgOnhh5r27L5Xg}{192.168.8.104}{192.168.8.104:9300}, found existing node {node-1}{SVrW6URqRsi3SShc1PBJkQ}{y2eFQNQ_TRenpAPyv-EnVg}{192.168.8.101}{192.168.8.101:9300} with the same id but is a different node instance]; ]

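Cause: the log shows node-3 and node-1 carrying the same node ID (SVrW6URqRsi3SShc1PBJkQ). The node ID is stored in the data directory, so the directory must contain state that belongs to another node; possibly the earlier failed startups left such data behind. Clear the data directory on all three nodes.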
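A cautious sketch of clearing a data directory, assuming the node is stopped first and that nothing in the directory needs to be kept. `clear_data_dir` is a hypothetical helper; it relies on the `-mindepth` and `-delete` options of GNU find, and it removes the contents but keeps the directory itself.

```shell
#!/bin/sh
# clear_data_dir DIR: delete everything inside DIR but keep DIR itself.
clear_data_dir() {
  dir=$1
  if [ -z "$dir" ] || [ ! -d "$dir" ]; then
    echo "not a directory: $dir" >&2
    return 1
  fi
  # -mindepth 1 skips DIR itself; -delete removes children depth-first.
  find "$dir" -mindepth 1 -delete
}

# Usage on each node, after stopping Elasticsearch:
#   clear_data_dir /usr/local/elk/elasticsearch/data
```

Keeping the directory in place preserves the ownership set in step ⑤, so the es user can write to it again on the next start.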

Restart once more: success!
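Besides the Head plugin, the result can be checked from the command line with the `_cat/nodes` API. This is a sketch only: `all_nodes_joined` is a hypothetical helper that counts the non-empty lines it receives on standard input, and the `curl` call in the usage comment assumes the HTTP port 9200 seen earlier is reachable on 192.168.8.101.

```shell
#!/bin/sh
# all_nodes_joined EXPECTED: read one node address per line on stdin and
# succeed only if the number of non-empty lines equals EXPECTED.
all_nodes_joined() {
  expected=$1
  actual=$(grep -c . || true)   # grep exits non-zero when nothing matches
  [ "$actual" -eq "$expected" ]
}

# Usage:
#   curl -s 'http://192.168.8.101:9200/_cat/nodes?h=ip' | all_nodes_joined 3 \
#     && echo "all three nodes joined"
```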