Embedding 方法层出不穷,但我们于此提出一个统一框架(encoder-decoder)以整合众多方法。

Encoder-Decoder Framework

Encoder-Decoder 框架下的不同方法仅仅是设计了如下的四个方面:

注意,similarity function 和 decoder function 可以是对称的也可非对称

下表会介绍一些有名的 shallow embedding embedding algorithms

| Type | Method | Decoder | Similarity | Loss function |

|---|---|---|---|---|

| 矩阵分解 | LaplacianEigenmaps[4] | 二范数平方 | general | \(DEC(Z_u,Z_v) \cdot S_G(u,v)\) |

| 矩阵分解 | GraphFactorization[1] | 线性核 | \(A_{u, v}\) | \(\Vert DEC(Z_u,Z_v) - S_G(u,v)\Vert _2^2\) |

| 矩阵分解 | GraRep[9] | 线性核 | \(A^k_{u, v}\) | \(\Vert DEC(Z_u,Z_v) - S_G(u,v)\Vert _2^2\) |

| 矩阵分解 | HOPE[45] | 线性核 | general | \(\Vert DEC(Z_u,Z_v) - S_G(u,v)\Vert _2^2\) |

| 随机漫步 | DeepWalk[47] | 高斯核 | \(p_G(u\vert v)\) | \(-S_G(u, v)\log (Dec(u, v))\) |

| 随机漫步 | node2vec [28] | 高斯核 | \(p_G(u\vert v)\) | \(-S_G(u, v)\log (Dec(u, v))\) |

这类方法的 Encoder function 只是简单的 Embedding Lookup:\(Enc(u) = Zu\),这些方法一般都是受了经典的降维矩阵分解的启发。

这类方法就是上表的前四行所述的方法,它们有个共同的特点就是,它们的损失函数都是 \(L \approx \Vert Z^TZ - S \Vert _2^2\) 的形式。其中 \(S\) 中包含了相似性信息,\(Z\) 是 latent space 的 Node Representation

DeepWalk and node2vec.

Large-scale information network embeddings (LINE)

LINE 在概念上与 node2vec 和 DeepWalk 有关,因为它使用了概率解码器和损失,但它明确地分解了一阶和二阶相似性,而不是将它们组合成固定长度的随机游走。

HARP: Extending random-walk embeddings via graph pre-processing

在这种方法中,将 G 中的相关节点折叠在一起成为一个超节点从而实现图的 “粗糙化”,然后在该粗化图上运行 DeepWalk,node2vec 或 LINE

Additional variants of the random-walk idea

随机游走的想法也有许多进一步的扩展

shallow embedding 的缺陷:

自编码器方法的介绍

Deep Neural Graph Representations (DNGR) [10] 和 Structural Deep Network Embeddings (SDNE) [59] 解决上述三个缺陷的第一个。

DNGR 与 SDNE 的共同点

DNGR 与 SDNE 的不同点

自编码器方法的优点

编码器依赖的 \(s_v\) 向量包含节点 \(v\) 的局部邻域的信息。这样可以让 SDNE 和 DNGR 将一个节点的局部邻域的结构信息以正则化项的形式直接纳入编码器中。此功效是 shallow embedding 无法达到的,因为它的编码器仅仅依赖于节点 id

自编码器方法的局限性

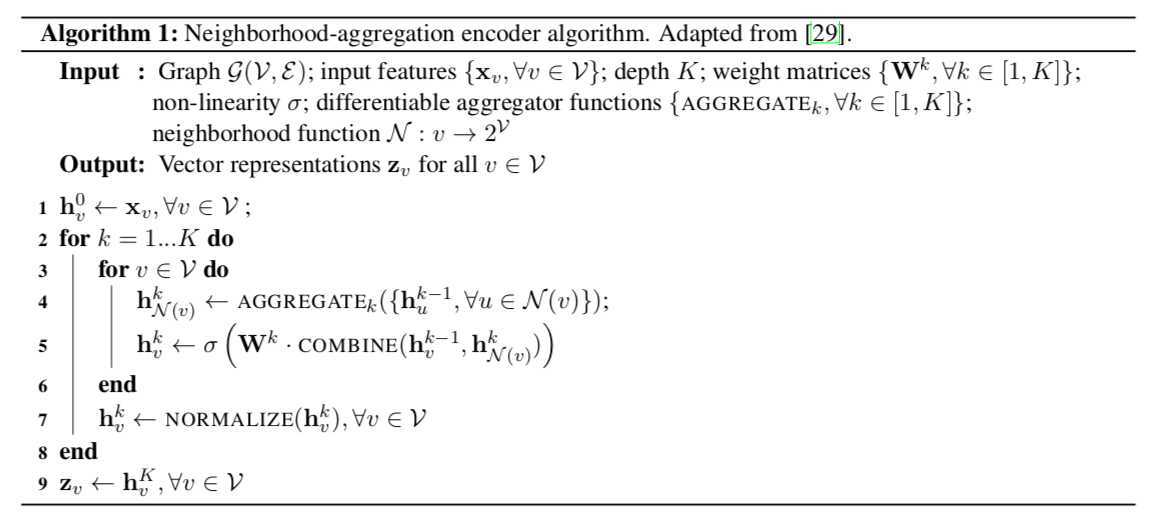

Neighborhood Aggregation(NA) 通过聚合一个节点的局部邻域的信息来产生一个节点的嵌入,它依赖于节点特征或属性来生成嵌入。

算法思路

在编码阶段,NA 会通过一种迭代的方式来生成 Node Representation

有这几个方法遵循上述算法框架:Graph Convolutional Networks (GCN)[35, 36, 53, 56], Column Networks[50], GraphSAGE[29]

算法 1 中的可训练参数是:一组 aggregation functions 和 一组权重矩阵(\(W_k, k \in [1,K]\)),这些参数是可以被所有节点共享的

相同的 aggregation function 和 weight matrix 被用以生成所有节点的 embeddings

GraphSAGE,Column Networks 和各种GCN方法均遵循算法1,但主要区别在于 Aggregation(算法1第4行)和 Vector Combination(算法1第5行)的方式:

[1] A.Ahmed,N.Shervashidze,S.Narayanamurthy,V.Josifovski,andA.J.Smola.Distributedlarge-scalenaturalgraph factorization. In WWW, 2013.

[2] R. Angles and C. Gutierrez. Survey of graph database models. ACM Computing Surveys, 40(1):1, 2008.

[3] L.BackstromandJ.Leskovec.Supervisedrandomwalks:predictingandrecommendinglinksinsocialnetworks.In WSDM, 2011.

[4] M.BelkinandP.Niyogi.Laplacianeigenmapsandspectraltechniquesforembeddingandclustering.InNIPS,2002.

[5] A.R. Benson, D.F. Gleich, and J. Leskovec. Higher-order organization of complex networks. Science, 353(6295):163–166, 2016.

[6] S. Bhagat, G. Cormode, and S. Muthukrishnan. Node classification in social networks. In Social Network Data Analytics, pages 115–148. 2011.

[7] M. M. Bronstein, J. Bruna, Y. LeCun, A. Szlam, and P. Vandergheynst. Geometric deep learning: Going beyond euclidean data. IEEE Signal Processing Magazine, 34(4):18–42, 2017.

[8] J. Bruna, W. Zaremba, and Y. Szlam, A.and LeCun. Spectral networks and locally connected networks on graphs. In ICLR, 2014.

[9] S.Cao,W.Lu,andQ.Xu.Grarep:Learninggraphrepresentationswithglobalstructuralinformation.InKDD,2015.

[10] S. Cao, W. Lu, and Q. Xu. Deep neural networks for learning graph representations. In AAAI, 2016.

[11] B.P.Chamberlain,J.Clough,andM.P.Deisenroth.Neuralembeddingsofgraphsinhyperbolicspace.arXivpreprint arXiv:1705.10359, 2017.

[12] S. Chang, W. Han, J. Tang, G. Qi, C.C. Aggarwal, and T.S. Huang. Heterogeneous network embedding via deep architectures. In KDD, 2015.

[13] H. Chen, B. Perozzi, Y. Hu, and S. Skiena. Harp: Hierarchical representation learning for networks. arXiv preprint arXiv:1706.07845, 2017.

[14] K. Cho, B. Van Merrie ?nboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio. Learning phrase representations using rnn encoder-decoder for statistical machine translation. In EMNLP, 2014.

[15] Fan RK Chung. Spectral Graph Theory. Number 92. American Mathematical Soc., 1997.

[16] H. Dai, B. Dai, and L. Song. Discriminative embeddings of latent variable models for structured data. In ICML, 2016.

[17] M.C.F. De Oliveira and H. Levkowitz. From visual data exploration to visual data mining: a survey. IEEE Transactions on Visualization and Computer Graphics, 9(3):378–394, 2003.

[18] M. Defferrard and P. Bresson, X.and Vandergheynst. Convolutional neural networks on graphs with fast localized spectral filtering. In NIPS, 2016.

[19] Y. Dong, N.V. Chawla, and A. Swami. metapath2vec: Scalable representation learning for heterogeneous networks. In KDD, 2017.

[20] C.Donnat,M.Zitnik,D.Hallac,andJ.Leskovec.Learningstructuralnodeembeddingsviadiffusionwavelets.arXiv preprint arXiv:1710.10321, 2017.

[21] D. Duvenaud, D. Maclaurin, J. Iparraguirre, R. Bombarell, T. Hirzel, A. Aspuru-Guzik, and R.P. Adams. Convolu- tional networks on graphs for learning molecular fingerprints. In NIPS, 2015.

[22] M. Ester, H. Kriegel, J. Sander, X. Xu, et al. A density-based algorithm for discovering clusters in large spatial databases with noise. In KDD, 1996.

[23] S. Fortunato. Community detection in graphs. Physics Reports, 486(3):75–174, 2010.

[24] L. Getoor and B. Taskar. Introduction to Statistical Relational Learning. MIT press, 2007.

[25] J. Gilmer, S.S. Schoenholz, P.F. Riley, O. Vinyals, G.E. Dahl. Neural Message Passing for Quantum Chemistry. In ICML, 2017.

[26] M. Gori, G. Monfardini, and F. Scarselli. A new model for learning in graph domains. In IEEE International Joint Conference on Neural Networks, 2005.

[27] P. Goyal and E. Ferrara. Graph embedding techniques, applications, and performance: A survey. arXiv preprint arXiv:1605.09096, 2017.

[28] A. Grover and J. Leskovec. node2vec: Scalable feature learning for networks. In KDD, 2016.

[29] W.L. Hamilton, R. Ying, and J. Leskovec. Inductive representation learning on large graphs. arXiv preprint, arXiv:1603.04467, 2017.

[30] K. Henderson, B. Gallagher, T. Eliassi-Rad, H. Tong, S. Basu, L. Akoglu, D. Koutra, C. Faloutsos, and L. Li. Rolx: structural role extraction & mining in large graphs. In KDD, 2012.

[31] G.HintonandR.Salakhutdinov.Reducingthedimensionalityofdatawithneuralnetworks.Science,313(5786):504–507, 2006.

[32] S. Hochreiter and J. Schmidhuber. Long short-term memory. Neural Computation, 9(8):1735–1780, 1997.

[33] P.Hoff,A.E.Raftery,andM.S.Handcock.Latentspaceapproachestosocialnetworkanalysis.JASA,97(460):1090– 1098, 2002.

[34] S. Kearnes, K. McCloskey, M. Berndl, V. Pande, and P. Riley. Molecular graph convolutions: moving beyond fingerprints. Journal of Computer-Aided Molecular Design, 30(8):595–608, 2016.

[35] T.N. Kipf and M. Welling. Semi-supervised classification with graph convolutional networks. In ICLR, 2016.

[36] T.N. Kipf and M. Welling. Variational graph auto-encoders. In NIPS Workshop on Bayesian Deep Learning, 2016.

[37] J.B. Kruskal. Multidimensional scaling by optimizing goodness of fit to a nonmetric hypothesis. Psychometrika, 29(1):1–27, 1964.

[38] J.A. Lee and M. Verleysen. Nonlinear dimensionality reduction. Springer Science & Business Media, 2007.

[39] Y. Li, D. Tarlow, M. Brockschmidt, and R. Zemel. Gated graph sequence neural networks. In ICLR, 2015.

[40] D. Liben-Nowell and J. Kleinberg. The link-prediction problem for social networks. Journal of the Association for Information Science and Technology, 58(7):1019–1031, 2007.

[41] Q. Lu and L. Getoor. Link-based classification. In ICML, volume 3, pages 496–503, 2003.

[42] K. Murphy, Y. Weiss, and M. Jordan. Loopy belief propagation for approximate inference: An empirical study. In UAI, 1999.

[43] M. Nickel, K. Murphy, V. Tresp, and E. Gabrilovich. A review of relational machine learning for knowledge graphs. Proceedings of the IEEE, 104(1):11–33, 2016.

[44] M. Niepert, M. Ahmed, and K. Kutzkov. Learning convolutional neural networks for graphs. In ICML, 2016.

[45] M. Ou, P. Cui, J. Pei, Z. Zhang, and W. Zhu. Asymmetric transitivity preserving graph embedding. In KDD, 2016.

[46] A. Paranjape, A. R. Benson, and J. Leskovec. Motifs in temporal networks. In WSDM, 2017.

[47] B. Perozzi, R. Al-Rfou, and S. Skiena. Deepwalk: Online learning of social representations. In KDD, 2014.

[48] B. Perozzi, V. Kulkarni, and S. Skiena. Walklets: Multiscale graph embeddings for interpretable network classifica- tion. arXiv preprint arXiv:1605.02115, 2016.

[49] Bryan Perozzi. Local Modeling of Attributed Graphs: Algorithms and Applications. PhD thesis, Stony Brook University, 2016.

[50] T. Pham, T. Tran, D.Q. Phung, and S. Venkatesh. Column networks for collective classification. In AAAI, 2017.

[51] L.F.R. Ribeiro, P.H.P. Saverese, and D.R. Figueiredo. struc2vec: Learning node representations from structural

identity. In KDD, 2017.

[52] F. Scarselli, M. Gori, A.C. Tsoi, M. Hagenbuchner, and G. Monfardini. The graph neural network model. IEEE

Transactions on Neural Networks, 20(1):61–80, 2009.

[53] M.Schlichtkrull,T.N.Kipf,P.Bloem,R.vandenBerg,I.Titov,andM.Welling.Modelingrelationaldatawithgraph

convolutional networks. arXiv preprint arXiv:1703.06103, 2017.

[54] J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, and Q. Mei. Line: Large-scale information network embedding. In WWW, 2015.

[55] J. Tenenbaum, V. De Silva, and J. Langford. A global geometric framework for nonlinear dimensionality reduction. Science, 290(5500):2319–2323, 2000.

[56] R. van den Berg, T.N. Kipf, and M. Welling. Graph convolutional matrix completion. arXiv preprint arXiv:1706.02263, 2017.

[57] L. van der Maaten and G. Hinton. Visualizing data using t-sne. JMLR, 9:2579–2605, 2008.

[58] S.V.N. Vishwanathan, N.N. Schraudolph, R. Kondor, and K.M. Borgwardt. Graph kernels. JMLR, 11:1201–1242, 2010.

[59] D. Wang, P. Cui, and W. Zhu. Structural deep network embedding. In KDD, 2016.

[60] Z. Yang, W. Cohen, and R. Salakhutdinov. Revisiting semi-supervised learning with graph embeddings. In ICML, 2016.

[61] M. Zitnik and J. Leskovec. Predicting multicellular function through multi-layer tissue networks. Bioinformatics, 2017.

Representation Learning on Graphs: Methods and Applications

原文:https://www.cnblogs.com/luyunan/p/12430750.html