Installation

Prerequisites:

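Getting Started with Deep Learning: Installing Ubuntu on a GPU Workstation (one post that covers the common problems)

Getting Started with Deep Learning: Installing CUDA, cuDNN, Anaconda, TensorFlow-GPU, and PyTorch on Ubuntu

Getting Started with Deep Learning: Verifying That PyTorch and TensorFlow Can Use the GPU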
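For the PyTorch side, the last prerequisite comes down to a check along the following lines. This is only a minimal sketch, not the procedure from the linked post: the helper name `describe_gpu_support` is made up for illustration, and it relies only on `torch.cuda.is_available()` and `torch.cuda.get_device_name()`.

```python
def describe_gpu_support():
    """Return a short summary of whether PyTorch can see a CUDA GPU."""
    try:
        # Imported lazily so the script still runs when PyTorch is missing.
        import torch
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "PyTorch {} is installed, but no CUDA device is visible".format(
            torch.__version__
        )

    # Device 0 is the first GPU that CUDA exposes to this process.
    name = torch.cuda.get_device_name(0)
    return "PyTorch {} can use GPU 0: {}".format(torch.__version__, name)


if __name__ == "__main__":
    print(describe_gpu_support())
```

If the summary reports that no CUDA device is visible, evaluation and inference will most likely still run, just much more slowly on the CPU.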

Download the source code and install the dependencies:

$ git clone https://github.com/eriklindernoren/PyTorch-YOLOv3
$ cd PyTorch-YOLOv3/
$ sudo pip3 install -r requirements.txt

Download the pretrained weights (starting from the repository root):

$ cd weights/
$ bash download_weights.sh

Download the COCO dataset (again starting from the repository root):

$ cd data/
$ bash get_coco_dataset.sh
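Before running the scripts, it can help to confirm that the downloads landed where the scripts look for them. The sketch below assumes the default paths that `test.py` and `detect.py` print in their `Namespace(...)` lines later in this post; the helper name `missing_files` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Default paths as reported in the Namespace(...) output of test.py and detect.py.
EXPECTED_FILES = [
    "weights/yolov3.weights",
    "config/yolov3.cfg",
    "config/coco.data",
    "data/coco.names",
]


def missing_files(repo_root, expected=EXPECTED_FILES):
    """Return the expected files that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    # Run from the repository root, e.g. inside PyTorch-YOLOv3/.
    missing = missing_files(".")
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected files are present.")
```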

Testing

Evaluate the model on the COCO dataset (run from the repository root):

$ python3 test.py --weights_path weights/yolov3.weights

Output:

ubuntu@ubuntu:~/dev/PyTorch-YOLOv3-master$ python3 test.py --weights_path weights/yolov3.weights
Namespace(batch_size=8, class_path='data/coco.names', conf_thres=0.001, data_config='config/coco.data', img_size=416, iou_thres=0.5, model_def='config/yolov3.cfg', n_cpu=8, nms_thres=0.5, weights_path='weights/yolov3.weights')
Compute mAP...
Detecting objects: 100%|██████████████████████| 625/625 [04:08<00:00, 2.51it/s]
Computing AP: 100%|█████████████████████████████| 80/80 [00:01<00:00, 75.66it/s]
Average Precisions:
+ Class '0' (person) - AP: 0.69071601970752
+ Class '1' (bicycle) - AP: 0.4686961863448047
+ Class '2' (car) - AP: 0.584785409652401
+ Class '3' (motorbike) - AP: 0.6173425471546101
+ Class '4' (aeroplane) - AP: 0.7368216071089109
+ Class '5' (bus) - AP: 0.7522709365644746
+ Class '6' (train) - AP: 0.754366135549987
+ Class '7' (truck) - AP: 0.4188454158138422
+ Class '8' (boat) - AP: 0.4055367699507446
+ Class '9' (traffic light) - AP: 0.44435250125992093
+ Class '10' (fire hydrant) - AP: 0.7803236133317674
+ Class '11' (stop sign) - AP: 0.7203250980406222
+ Class '12' (parking meter) - AP: 0.5318708513711929
+ Class '13' (bench) - AP: 0.33347708090637457
+ Class '14' (bird) - AP: 0.4441360921558241
+ Class '15' (cat) - AP: 0.7303504067363646
+ Class '16' (dog) - AP: 0.7319887348116905
+ Class '17' (horse) - AP: 0.77512155236337
+ Class '18' (sheep) - AP: 0.5984679238272702
+ Class '19' (cow) - AP: 0.5233874581223704
+ Class '20' (elephant) - AP: 0.8563788399614207
+ Class '21' (bear) - AP: 0.7462024921293304
+ Class '22' (zebra) - AP: 0.7870769691158629
+ Class '23' (giraffe) - AP: 0.8227873134751092
+ Class '24' (backpack) - AP: 0.32451636624665287
+ Class '25' (umbrella) - AP: 0.5271238663832635
+ Class '26' (handbag) - AP: 0.20446396737325406
+ Class '27' (tie) - AP: 0.49596217809096577
+ Class '28' (suitcase) - AP: 0.569835653931444
+ Class '29' (frisbee) - AP: 0.6356266022474135
+ Class '30' (skis) - AP: 0.40624013441992135
+ Class '31' (snowboard) - AP: 0.4548600158139028
+ Class '32' (sports ball) - AP: 0.5431383703116072
+ Class '33' (kite) - AP: 0.4099711653381243
+ Class '34' (baseball bat) - AP: 0.5038339063455582
+ Class '35' (baseball glove) - AP: 0.47781969136825725
+ Class '36' (skateboard) - AP: 0.6849120730914782
+ Class '37' (surfboard) - AP: 0.6221252845246673
+ Class '38' (tennis racket) - AP: 0.68764570668767
+ Class '39' (bottle) - AP: 0.4228582945038891
+ Class '40' (wine glass) - AP: 0.5107649160534952
+ Class '41' (cup) - AP: 0.4708999794256628
+ Class '42' (fork) - AP: 0.44107168135464947
+ Class '43' (knife) - AP: 0.288951366082318
+ Class '44' (spoon) - AP: 0.21264460558898557
+ Class '45' (bowl) - AP: 0.4882936721018784
+ Class '46' (banana) - AP: 0.27481021398716976
+ Class '47' (apple) - AP: 0.17694573390321539
+ Class '48' (sandwich) - AP: 0.4595098054471395
+ Class '49' (orange) - AP: 0.2861568847973789
+ Class '50' (broccoli) - AP: 0.34978362407336433
+ Class '51' (carrot) - AP: 0.22371776472064184
+ Class '52' (hot dog) - AP: 0.3702692586995472
+ Class '53' (pizza) - AP: 0.5297757751733385
+ Class '54' (donut) - AP: 0.5068384767127795
+ Class '55' (cake) - AP: 0.476632708387989
+ Class '56' (chair) - AP: 0.3980449296511249
+ Class '57' (sofa) - AP: 0.5214086539073353
+ Class '58' (pottedplant) - AP: 0.4239751120301045
+ Class '59' (bed) - AP: 0.6338351737747959
+ Class '60' (diningtable) - AP: 0.4138012499478281
+ Class '61' (toilet) - AP: 0.7377284037968452
+ Class '62' (tvmonitor) - AP: 0.6991588571748895
+ Class '63' (laptop) - AP: 0.68712851664284
+ Class '64' (mouse) - AP: 0.7214480416511962
+ Class '65' (remote) - AP: 0.4789729416954784
+ Class '66' (keyboard) - AP: 0.6644829934265277
+ Class '67' (cell phone) - AP: 0.39743578548434444
+ Class '68' (microwave) - AP: 0.6423763095621656
+ Class '69' (oven) - AP: 0.48313299304876195
+ Class '70' (toaster) - AP: 0.16233766233766234
+ Class '71' (sink) - AP: 0.5075074098080213
+ Class '72' (refrigerator) - AP: 0.6862896780296917
+ Class '73' (book) - AP: 0.17111744621852634
+ Class '74' (clock) - AP: 0.6886459682881512
+ Class '75' (vase) - AP: 0.44157962279267704
+ Class '76' (scissors) - AP: 0.3437987832196098
+ Class '77' (teddy bear) - AP: 0.5859590979304399
+ Class '78' (hair drier) - AP: 0.11363636363636365
+ Class '79' (toothbrush) - AP: 0.2643722437438991
mAP: 0.5145225242055336
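The final mAP figure appears to be the plain mean of the per-class AP values listed above it. The sketch below shows one way to recover those values from a saved copy of the log and average them. The function names are illustrative and not part of the repository, and the sample text contains only the first three classes, so its mean differs from the full 80-class mAP.

```python
import re

# \W around the class index tolerates both ASCII and typographic quote marks.
AP_LINE = re.compile(r"\+ Class \W(\d+)\W \((.+?)\) - AP: ([0-9.]+)")


def parse_average_precisions(log_text):
    """Map class name -> AP for every '+ Class ...' entry in the log."""
    return {name: float(ap) for _, name, ap in AP_LINE.findall(log_text)}


def mean_average_precision(aps):
    """Unweighted mean of the per-class AP values."""
    if not aps:
        raise ValueError("no AP entries found")
    return sum(aps.values()) / len(aps)


def weakest_classes(aps, n=5):
    """Names of the n classes with the lowest AP, worst first."""
    return sorted(aps, key=aps.get)[:n]


# Excerpt of the log above: first three classes only.
sample_log = """
+ Class '0' (person) - AP: 0.69071601970752
+ Class '1' (bicycle) - AP: 0.4686961863448047
+ Class '2' (car) - AP: 0.584785409652401
"""

if __name__ == "__main__":
    aps = parse_average_precisions(sample_log)
    print("classes parsed:", len(aps))
    print("mean AP over the excerpt:", mean_average_precision(aps))
    print("weakest class in the excerpt:", weakest_classes(aps, 1))
```

Running the same functions over the complete log is a quick way to check the reported mAP and to list the classes on which the model does worst.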

Inference

Use the pretrained model to detect which objects appear in a folder of images:

$ python3 detect.py --image_folder data/samples/

Output:

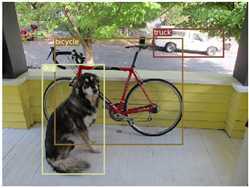

ubuntu@ubuntu:~/dev/PyTorch-YOLOv3-master$ python3 detect.py --image_folder data/samples/
Namespace(batch_size=1, checkpoint_model=None, class_path='data/coco.names', conf_thres=0.8, image_folder='data/samples/', img_size=416, model_def='config/yolov3.cfg', n_cpu=0, nms_thres=0.4, weights_path='weights/yolov3.weights')
Performing object detection:
+ Batch 0, Inference Time: 0:00:00.804839
+ Batch 1, Inference Time: 0:00:00.029886
+ Batch 2, Inference Time: 0:00:00.043221
+ Batch 3, Inference Time: 0:00:00.034414
+ Batch 4, Inference Time: 0:00:00.034508
+ Batch 5, Inference Time: 0:00:00.054059
+ Batch 6, Inference Time: 0:00:00.024002
+ Batch 7, Inference Time: 0:00:00.037496
+ Batch 8, Inference Time: 0:00:00.024113
Saving images:
(0) Image: 'data/samples/dog.jpg'
+ Label: dog, Conf: 0.99335
+ Label: bicycle, Conf: 0.99981
+ Label: truck, Conf: 0.94229
(1) Image: 'data/samples/eagle.jpg'
+ Label: bird, Conf: 0.99703
(2) Image: 'data/samples/field.jpg'
+ Label: person, Conf: 0.99996
+ Label: horse, Conf: 0.99977
+ Label: dog, Conf: 0.99409
(3) Image: 'data/samples/giraffe.jpg'
+ Label: giraffe, Conf: 0.99959
+ Label: zebra, Conf: 0.97958
(4) Image: 'data/samples/herd_of_horses.jpg'
+ Label: horse, Conf: 0.99459
+ Label: horse, Conf: 0.99352
+ Label: horse, Conf: 0.96845
+ Label: horse, Conf: 0.99478
(5) Image: 'data/samples/messi.jpg'
+ Label: person, Conf: 0.99993
+ Label: person, Conf: 0.99984
+ Label: person, Conf: 0.99996
(6) Image: 'data/samples/person.jpg'
+ Label: person, Conf: 0.99883
+ Label: dog, Conf: 0.99275
(7) Image: 'data/samples/room.jpg'
+ Label: chair, Conf: 0.99906
+ Label: chair, Conf: 0.96942
+ Label: clock, Conf: 0.99971
(8) Image: 'data/samples/street.jpg'
+ Label: car, Conf: 0.99977
+ Label: car, Conf: 0.99402
+ Label: car, Conf: 0.99841
+ Label: car, Conf: 0.99785
+ Label: car, Conf: 0.97907
+ Label: car, Conf: 0.95370
+ Label: traffic light, Conf: 0.99995
+ Label: car, Conf: 0.62254
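The detection log is regular enough to post-process, for example to count how often each label occurs or to keep only high-confidence detections. The following is a rough sketch that assumes the log has been saved with one entry per line. The helper names are hypothetical, and the sample text is just the first two images from the output above.

```python
import re
from collections import Counter

# \W tolerates ASCII or typographic quotes around the image path.
IMAGE_LINE = re.compile(r"\(\d+\) Image: \W(.+?)\W$")
LABEL_LINE = re.compile(r"\+ Label: (.+?), Conf: ([0-9.]+)")


def parse_detections(log_text):
    """Map image path -> list of (label, confidence) in log order."""
    detections = {}
    current = None
    for raw in log_text.splitlines():
        line = raw.strip()
        image = IMAGE_LINE.match(line)
        if image:
            current = image.group(1)
            detections[current] = []
            continue
        label = LABEL_LINE.match(line)
        if label and current is not None:
            detections[current].append((label.group(1), float(label.group(2))))
    return detections


def count_labels(detections, min_conf=0.0):
    """Count labels across all images, ignoring detections below min_conf."""
    return Counter(
        label
        for dets in detections.values()
        for label, conf in dets
        if conf >= min_conf
    )


# Excerpt of the log above: first two images only.
sample_log = """
(0) Image: 'data/samples/dog.jpg'
+ Label: dog, Conf: 0.99335
+ Label: bicycle, Conf: 0.99981
+ Label: truck, Conf: 0.94229
(1) Image: 'data/samples/eagle.jpg'
+ Label: bird, Conf: 0.99703
"""

if __name__ == "__main__":
    found = parse_detections(sample_log)
    for path, dets in found.items():
        print(path, dets)
    print(count_labels(found, min_conf=0.95))
```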

Detection results:

References:

https://github.com/eriklindernoren/PyTorch-YOLOv3

https://github.com/cocodataset/cocoapi

Original post: https://www.cnblogs.com/ratels/p/12451876.html