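Copyright notice: This is an original article by the blogger, licensed under CC 4.0 BY-SA. When reposting, please include a link to the original source and this notice.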

Article link: https://blog.csdn.net/huitailangyz/article/details/85015611

————————————————————————————————

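Recently, while using batch_norm in TensorFlow, I misused it because I did not understand how it works internally, and the results differed from what I expected. Afterwards I investigated the problem, and this post summarizes what I found.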

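At first I only knew about the TensorFlow function tf.layers.batch_normalization, so I simply called it directly. The function has a parameter named training, which I set to True during training and False during testing. After training finished, however, something strange happened: with training set to True, test accuracy was normal, but with training set to False, test accuracy was very low. The incorrect usage described above boils down to the following code: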

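```python
import tensorflow as tf  # TensorFlow 1.x API

is_training = tf.placeholder(dtype=tf.bool)
inputs = tf.ones([1, 2, 2, 3])
output = tf.layers.batch_normalization(inputs, training=is_training)
loss = ...  # model loss (elided in the original)
# `optimizer` is some tf.train optimizer (left unspecified in the original).
# Note: minimize() does not run the moving_mean/moving_variance update ops.
train_op = optimizer.minimize(loss)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    # The bool placeholder must be fed, otherwise sess.run raises an error.
    sess.run(train_op, feed_dict={is_training: True})
```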
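To see how this setup can produce poor accuracy with `training=False`, here is a minimal NumPy sketch (not TensorFlow code) of one plausible cause: the moving statistics were never updated during training, so at test time they still hold their initial values (tf.layers.batch_normalization initializes moving_mean to zeros and moving_variance to ones). The 2-D `[batch, channel]` input, the helper `normalize`, and the synthetic data are assumptions for illustration; gamma and beta are fixed at 1 and 0.

```python
import numpy as np


def normalize(x, mean, variance, epsilon=1e-3):
    # Batch-norm arithmetic with gamma = 1 and beta = 0 (illustrative helper).
    return (x - mean) / np.sqrt(variance + epsilon)


rng = np.random.default_rng(0)
# Hypothetical test batch: 64 samples, 4 channels, mean 5 and std 3 per channel.
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))

# training=True: statistics come from the current batch.
train_mode = normalize(x, x.mean(axis=0), x.var(axis=0))

# training=False with never-updated moving statistics (still 0 and 1).
stale_mode = normalize(x, np.zeros(4), np.ones(4))

print(train_mode.mean(), train_mode.std())  # close to 0 and 1
print(stale_mode.mean(), stale_mode.std())  # close to 5 and 3: input barely changed
```

With stale statistics the layer hardly normalizes its input, so the activations seen at test time differ from those the following layers were trained on.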

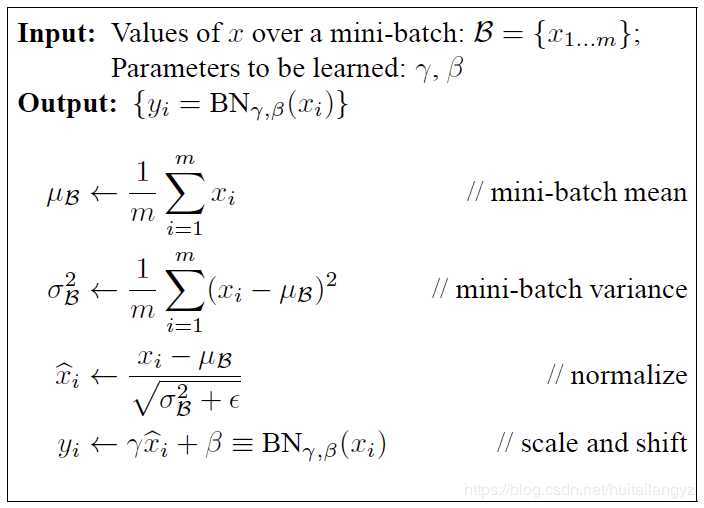

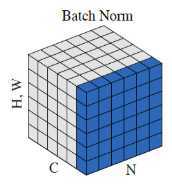

Let me first briefly introduce batch_normalization. The schematic and the algorithm of this normalization method are shown in the figures.

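In short, given a batch of inputs of shape [batch_num, height, width, channel], batch normalization computes, channel by channel, the mean and variance over all the data in the batch, normalizes the input with that mean and variance, and finally applies a linear scale and shift (scale and offset) to produce the final batch_norm output. Written out per channel: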

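```python
import numpy as np

def batch_norm(inputs, scale, offset, epsilon=1e-3):
    """inputs: [batch_num, height, width, channel]; scale, offset: [channel]."""
    output = np.empty(inputs.shape, dtype=np.float64)
    for i in range(inputs.shape[-1]):
        x = inputs[:, :, :, i]
        mean = x.mean()      # mean over batch, height and width
        variance = x.var()   # variance over the same axes
        x = (x - mean) / np.sqrt(variance + epsilon)  # epsilon avoids division by zero
        output[:, :, :, i] = scale[i] * x + offset[i]  # per-channel scale and shift
    return output
```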
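The per-channel loop can also be written without a Python loop by reducing over every axis except the channel axis, which is what `tf.nn.moments(x, axes=[0, 1, 2])` does for an NHWC tensor. The NumPy sketch below is illustrative only; the function name `batch_norm_vectorized` and the epsilon value are assumptions.

```python
import numpy as np


def batch_norm_vectorized(inputs, scale, offset, epsilon=1e-3):
    """inputs: [batch_num, height, width, channel]; scale, offset: [channel]."""
    # Reduce over every axis except the last (channel) axis.
    axes = tuple(range(inputs.ndim - 1))
    mean = inputs.mean(axis=axes)      # shape [channel]
    variance = inputs.var(axis=axes)   # shape [channel]
    # Broadcasting aligns the [channel] vectors with the last axis of inputs.
    normalized = (inputs - mean) / np.sqrt(variance + epsilon)
    return scale * normalized + offset


rng = np.random.default_rng(42)
inputs = rng.normal(loc=2.0, scale=5.0, size=(8, 4, 4, 3))
out = batch_norm_vectorized(inputs, scale=np.ones(3), offset=np.zeros(3))

# Each channel now has (approximately) zero mean and unit variance.
print(out.mean(axis=(0, 1, 2)))
print(out.var(axis=(0, 1, 2)))
```

Broadcasting over the trailing channel axis gives the same result as the explicit loop, one channel at a time.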

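In practice, the average mean and variance of the training data are recorded during training as moving_mean and moving_variance, and at test time these recorded moving_mean and moving_variance values are used instead of the batch statistics; switching between the two is exactly what the training parameter does. Implementations usually update moving_mean and moving_variance gradually with a decay coefficient: `moving_mean = moving_mean * decay + new_batch_mean * (1 - decay)`, and likewise for moving_variance.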
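A small pure-Python sketch of the decay update, assuming (hypothetically) that every training batch has mean 5.0 and variance 4.0 and that the moving statistics start at 0 and 1. It shows how slowly the moving values approach the batch statistics when decay is 0.99.

```python
def update_moving(moving, batch_value, decay=0.99):
    # moving = moving * decay + batch_value * (1 - decay)
    return moving * decay + batch_value * (1 - decay)


moving_mean, moving_variance = 0.0, 1.0  # common initial values
for step in range(1, 1001):
    moving_mean = update_moving(moving_mean, 5.0)
    moving_variance = update_moving(moving_variance, 4.0)
    if step in (10, 100, 1000):
        print(step, round(moving_mean, 3), round(moving_variance, 3))
```

Each update shrinks the gap to the batch value by a factor of decay, so after n updates with a constant batch value b and a starting value m0, the moving value is b + (m0 - b) * decay**n. With decay = 0.99, 100 steps close only about 63% of the gap.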

TensorFlow currently offers three ways to implement batch_norm.

```python
tf.nn.batch_normalization(x, mean, variance, offset, scale, variance_epsilon, name=None)
```

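This function is the lowest-level implementation: you must pass in and update mean, variance, scale, offset, and the other arguments yourself, so in practice you have to wrap it in your own function. It is generally not recommended for direct use, but it is very helpful for understanding how batch_norm works.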
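Because the low-level function only applies the normalization formula, its arithmetic is easy to mirror outside TensorFlow. The NumPy function below is a sketch of the computation documented for tf.nn.batch_normalization, `scale * (x - mean) / sqrt(variance + variance_epsilon) + offset`, where offset and scale may be None. It is not TensorFlow's own code, and the name `nn_batch_normalization` is hypothetical.

```python
import numpy as np


def nn_batch_normalization(x, mean, variance, offset, scale, variance_epsilon):
    """Sketch of the formula: scale * (x - mean) / sqrt(variance + eps) + offset.

    offset and scale may be None, in which case they are treated as 0 and 1.
    """
    inv = 1.0 / np.sqrt(variance + variance_epsilon)
    if scale is not None:
        inv = inv * scale
    out = (x - mean) * inv
    if offset is not None:
        out = out + offset
    return out


x = np.array([[1.0, 2.0], [3.0, 6.0]])
mean, variance = x.mean(axis=0), x.var(axis=0)  # [2, 4] and [1, 4]
out = nn_batch_normalization(x, mean, variance, offset=None, scale=None,
                             variance_epsilon=0.0)
print(out)  # values: [[-1, -1], [1, 1]]
```

Note that the function never computes or stores any statistics itself; the caller supplies mean and variance, which is why a wrapper is needed.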

An example wrapper:

```python
import os
import tensorflow as tf  # TensorFlow 1.x API


def batch_norm(x, name_scope, training, epsilon=1e-3, decay=0.99):
    """Assume an n-D [batch, N1, N2, ..., Nm, Channel] tensor."""
    with tf.variable_scope(name_scope):
        size = x.get_shape().as_list()[-1]
        # Learned per-channel scale and offset.
        scale = tf.get_variable('scale', [size], initializer=tf.constant_initializer(0.1))
        offset = tf.get_variable('offset', [size])
        # Population statistics used at test time; not trained by the optimizer.
        pop_mean = tf.get_variable('pop_mean', [size], initializer=tf.zeros_initializer(), trainable=False)
        pop_var = tf.get_variable('pop_var', [size], initializer=tf.ones_initializer(), trainable=False)
        # Per-channel batch statistics over every axis except the last one.
        batch_mean, batch_var = tf.nn.moments(x, list(range(len(x.get_shape()) - 1)))

        def batch_statistics():
            # Create the assign ops inside the branch: ops created outside the
            # branch functions but needed by a branch run whichever branch is taken.
            train_mean_op = tf.assign(pop_mean, pop_mean * decay + batch_mean * (1 - decay))
            train_var_op = tf.assign(pop_var, pop_var * decay + batch_var * (1 - decay))
            with tf.control_dependencies([train_mean_op, train_var_op]):
                return tf.nn.batch_normalization(x, batch_mean, batch_var, offset, scale, epsilon)

        def population_statistics():
            return tf.nn.batch_normalization(x, pop_mean, pop_var, offset, scale, epsilon)

        return tf.cond(training, batch_statistics, population_statistics)


is_training = tf.placeholder(dtype=tf.bool, shape=[])
inputs = tf.ones([1, 2, 2, 3])
output = batch_norm(inputs, name_scope='batch_norm_nn', training=is_training)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    saver = tf.train.Saver()
    os.makedirs("batch_norm_nn", exist_ok=True)  # make sure the checkpoint directory exists
    saver.save(sess, "batch_norm_nn/Model")
```

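In batch_norm, the per-channel mean and var of x are computed first. Then, depending on whether we are in the training or the testing phase, either the mean and var of the current batch (after pop_mean and pop_var have been updated with them) or the mean and var saved during training are passed, together with the newly defined variables scale and offset, to tf.nn.batch_normalization.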
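The control flow of this wrapper can be traced without building a graph. The NumPy class below is a hypothetical analogue: the `training=True` path updates the population statistics and then normalizes with the batch statistics, and the `training=False` path only reads the population statistics. The class name, the synthetic data, the initial scale of 1.0, and decay=0.9 (chosen so the demo converges quickly) are assumptions.

```python
import numpy as np


class NumpyBatchNorm:
    """Hypothetical NumPy analogue of the batch_norm wrapper (channels last)."""

    def __init__(self, channels, epsilon=1e-3, decay=0.99):
        self.scale = np.ones(channels)      # the TF wrapper starts scale at 0.1
        self.offset = np.zeros(channels)
        self.pop_mean = np.zeros(channels)  # like pop_mean's zeros initializer
        self.pop_var = np.ones(channels)    # like pop_var's ones initializer
        self.epsilon, self.decay = epsilon, decay

    def _normalize(self, x, mean, var):
        return self.scale * (x - mean) / np.sqrt(var + self.epsilon) + self.offset

    def __call__(self, x, training):
        if training:  # counterpart of batch_statistics()
            axes = tuple(range(x.ndim - 1))
            batch_mean, batch_var = x.mean(axis=axes), x.var(axis=axes)
            # Update first, mirroring the control dependency in the wrapper.
            self.pop_mean = self.pop_mean * self.decay + batch_mean * (1 - self.decay)
            self.pop_var = self.pop_var * self.decay + batch_var * (1 - self.decay)
            return self._normalize(x, batch_mean, batch_var)
        # Counterpart of population_statistics(): no update at all.
        return self._normalize(x, self.pop_mean, self.pop_var)


rng = np.random.default_rng(1)
bn = NumpyBatchNorm(channels=3, decay=0.9)
for _ in range(200):
    bn(rng.normal(loc=5.0, scale=2.0, size=(32, 3)), training=True)

test_batch = rng.normal(loc=5.0, scale=2.0, size=(32, 3))
before = bn.pop_mean.copy()
out = bn(test_batch, training=False)
print(bn.pop_mean)  # close to 5 for every channel
```

Inference never touches the stored statistics, which is the behavior the `tf.cond` branches are meant to give.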

[Repost] An investigation of batch_norm in TensorFlow, tf.control_dependencies, and tf.GraphKeys.UPDATE_OPS

Original: https://www.cnblogs.com/devilmaycry812839668/p/12459803.html