OpenStack Installation Guide

Configure the time synchronization service (NTP)

Controller node

[root@controller ~]# yum install chrony -y

[root@controller ~]# sed -i '26a allow 10.0.0.0/24' /etc/chrony.conf   # insert after line 26; the allow directive works anywhere in chrony.conf

[root@controller ~]# systemctl enable chronyd.service

[root@controller ~]# systemctl start chronyd.service

Other nodes

[root@compute ~]# sed -i 's/^server/#server/' /etc/chrony.conf

[root@compute ~]# sed -i '6a server controller' /etc/chrony.conf

[root@compute ~]# systemctl enable chronyd.service

[root@compute ~]# systemctl start chronyd.service

[root@storage ~]# sed -i 's/^server/#server/' /etc/chrony.conf

[root@storage ~]# sed -i '6a server controller' /etc/chrony.conf

[root@storage ~]# systemctl enable chronyd.service

[root@storage ~]# systemctl start chronyd.service

[root@compute ~]# date -s '2001-09-11 11:30:00'   # deliberately set a wrong time to test synchronization

[root@compute ~]# systemctl restart chronyd

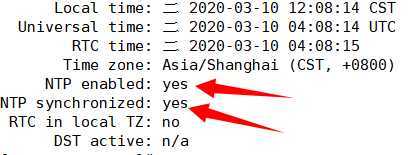

[root@compute ~]# timedatectl   # after the restart it can take a few minutes before the clock is synchronized
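Because the sed commands above insert at fixed line numbers, running them twice adds duplicate lines. A minimal idempotent sketch, assuming GNU sed and one directive per line in chrony.conf; ensure_line and comment_out are hypothetical helper names, not part of chrony:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for editing chrony.conf idempotently (GNU sed assumed).

# ensure_line FILE LINE: append LINE unless an identical line already exists.
ensure_line() {
  local file=$1 line=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# comment_out PREFIX FILE: put '#' in front of every line starting with PREFIX.
# Lines already commented start with '#', so a second run changes nothing.
comment_out() {
  sed -i "s/^$1/#&/" "$2"
}
```

On controller this would be ensure_line /etc/chrony.conf 'allow 10.0.0.0/24'; on compute and storage, comment_out server /etc/chrony.conf followed by ensure_line /etc/chrony.conf 'server controller'. Appending is safe here because the position of these directives in chrony.conf does not matter.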

Install the OpenStack packages and the database (all nodes)

Controller node

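Install the OpenStack repository, the OpenStack client, the SELinux policy package, MariaDB and the PyMySQL driver:

[root@controller ~]# yum install centos-release-openstack-ocata -y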

[root@controller ~]# yum install python-openstackclient openstack-selinux \

> mariadb mariadb-server python2-PyMySQL -y

[root@controller ~]# tee /etc/my.cnf.d/openstack.cnf <<-'EOF'

> [mysqld]

> bind-address = 10.0.0.10

> default-storage-engine = innodb

> innodb_file_per_table = on

> max_connections = 4096

> collation-server = utf8_general_ci

> character-set-server = utf8

> EOF

[root@controller ~]# systemctl start mariadb.service

[root@controller ~]# systemctl enable mariadb.service

Initialize (secure) the database:

[root@controller ~]# mysql_secure_installation

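Enter current password for root (enter for none):   # press Enter

Set root password? [Y/n] y   # set the root password (the commands below use 123123)

Remove anonymous users? [Y/n] y   # remove the anonymous users

Disallow root login remotely? [Y/n] n   # keep remote root login allowed

Remove test database and access to it? [Y/n] y   # remove the test database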

Reload privilege tables now? [Y/n] y

Thanks for using MariaDB!

[root@controller ~]# yum -y install memcached python-memcached

[root@controller ~]# sed -i 's/::1/::1,10.0.0.10/' /etc/sysconfig/memcached

[root@controller ~]# systemctl start memcached.service

[root@controller ~]# systemctl enable memcached.service

Other nodes

[root@compute ~]# yum install centos-release-openstack-ocata -y

[root@compute ~]# yum upgrade

[root@storage ~]# yum install centos-release-openstack-ocata -y

[root@storage ~]# yum upgrade

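Install the RabbitMQ message queue

Install the service and enable it at boot. Do this on all three nodes; the steps are the same, so only the controller is shown: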

[root@controller ~]# yum -y install erlang rabbitmq-server.noarch

[root@controller ~]# systemctl start rabbitmq-server.service

[root@controller ~]# systemctl enable rabbitmq-server.service

[root@controller ~]# ss -ntulp |grep 5672

tcp LISTEN 0 128 *:25672 *:* users:(("beam.smp",pid=1046,fd=8))

tcp LISTEN 0 128 [::]:5672 [::]:* users:(("beam.smp",pid=1046,fd=16))
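The ss check above can be turned into a pass/fail test. A sketch that reads ss -ntl or ss -ntulp output on stdin, assuming the column layout shown above (with or without the leading Netid column); is_listening is a hypothetical name:

```shell
#!/usr/bin/env bash
# is_listening PORT: read `ss -ntl` / `ss -ntulp` output on stdin and succeed
# if some socket is in LISTEN state on PORT (any local address).
is_listening() {
  awk -v port="$1" '
    $1 == "LISTEN" { addr = $4 }   # ss -ntl: State Recv-Q Send-Q Local ...
    $2 == "LISTEN" { addr = $5 }   # ss -ntulp: Netid State Recv-Q Send-Q Local ...
    addr != "" {
      n = split(addr, part, ":")   # the port is after the last colon
      if (part[n] == port) found = 1
      addr = ""
    }
    END { exit !found }'
}
```

For example, ss -ntl | is_listening 5672 && echo "RabbitMQ is listening"; the same check works for MariaDB (3306) and memcached (11211).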

[root@controller ~]# rabbitmqctl cluster_status

[root@controller ~]# tee /etc/rabbitmq/rabbitmq-env.conf <<-'EOF'

> RABBITMQ_NODE_PORT=5672

> ulimit -S -n 4096

> RABBITMQ_SERVER_ERL_ARGS="+K true +A30 +P 1048576 -kernel inet_default_connect_options [{nodelay,true},{raw,6,18,<<5000:64/native>>}] -kernel inet_default_listen_options [{raw,6,18,<<5000:64/native>>}]"

> RABBITMQ_NODE_IP_ADDRESS=10.0.0.10

> EOF

[root@controller ~]# scp /etc/rabbitmq/rabbitmq-env.conf root@compute:/etc/rabbitmq/rabbitmq-env.conf

rabbitmq-env.conf 100% 285 117.2KB/s 00:00

[root@controller ~]# scp /etc/rabbitmq/rabbitmq-env.conf root@storage:/etc/rabbitmq/rabbitmq-env.conf

rabbitmq-env.conf 100% 285 82.4KB/s 00:00

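[root@compute ~]# sed -i 's/^RABBITMQ_NODE_IP_ADDRESS=.*/RABBITMQ_NODE_IP_ADDRESS=10.0.0.20/' /etc/rabbitmq/rabbitmq-env.conf

[root@storage ~]# sed -i 's/^RABBITMQ_NODE_IP_ADDRESS=.*/RABBITMQ_NODE_IP_ADDRESS=10.0.0.30/' /etc/rabbitmq/rabbitmq-env.conf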

[root@controller ~]# rabbitmq-plugins enable rabbitmq_management

Check whether the plugin is enabled:

[root@controller ~]# /usr/lib/rabbitmq/bin/rabbitmq-plugins list

[E] rabbitmq_management 3.3.5   # an E inside the brackets means the plugin is enabled

[root@controller ~]# systemctl restart rabbitmq-server.service

[root@controller ~]# systemctl status rabbitmq-server.service

[root@controller ~]# rabbitmqctl change_password guest admin

Changing password for user "guest" ...

...done.

[root@controller ~]# rabbitmqctl add_user openstack openstack

Creating user "openstack" ...

...done.

[root@controller ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

...done.

[root@controller ~]# rabbitmqctl set_user_tags openstack administrator

Setting tags for user "openstack" to [administrator] ...

...done

[root@controller ~]# scp /var/lib/rabbitmq/.erlang.cookie compute:/var/lib/rabbitmq/.erlang.cookie

.erlang.cookie 100% 20 7.7KB/s 00:00

[root@controller ~]# scp /var/lib/rabbitmq/.erlang.cookie storage:/var/lib/rabbitmq/.erlang.cookie

.erlang.cookie 100% 20 5.1KB/s 00:00

[root@compute ~]# systemctl restart rabbitmq-server.service

[root@compute ~]# rabbitmqctl stop_app

Stopping node rabbit@compute ...

...done.

[root@compute ~]# rabbitmqctl join_cluster --ram rabbit@controller

Clustering node rabbit@compute with rabbit@controller ...

...done.

[root@compute ~]# rabbitmqctl start_app

Starting node rabbit@compute ...

...done.

Run the same restart, stop_app, join_cluster --ram and start_app commands on storage; the cluster status below lists both compute and storage as RAM nodes.

[root@controller ~]# rabbitmqctl cluster_status

Cluster status of node rabbit@controller ...

[{nodes,[{disc,[rabbit@controller]},{ram,[rabbit@storage,rabbit@compute]}]},

{running_nodes,[rabbit@compute,rabbit@storage,rabbit@controller]},

{cluster_name,<<"rabbit@controller">>},

{partitions,[]}]

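The RabbitMQ cluster is now set up. If the status differs, check whether any of the steps above was skipped.

Web management UI: http://10.0.0.10:15672/ (log in as openstack / openstack, the user tagged administrator above)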
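To check the cluster from a script instead of reading the output by eye, the running_nodes list can be extracted. A sketch assuming the RabbitMQ 3.3-style Erlang-term output shown above (newer RabbitMQ releases print a different format); running_nodes and cluster_ok are hypothetical names:

```shell
#!/usr/bin/env bash
# running_nodes: read `rabbitmqctl cluster_status` output (Erlang terms, as in
# RabbitMQ 3.3) on stdin and print one running node per line.
running_nodes() {
  # Join everything into one line first, since the term may wrap.
  { tr -d ' \n'; echo; } |
    sed -n 's/.*{running_nodes,\[\([^]]*\)]}.*/\1/p' |
    tr ',' '\n'
}

# cluster_ok NODE...: succeed only if every given node is in running_nodes.
cluster_ok() {
  local running node
  running=$(running_nodes)
  for node in "$@"; do
    grep -qxF "$node" <<<"$running" || { echo "not running: $node" >&2; return 1; }
  done
}
```

Usage: rabbitmqctl cluster_status | cluster_ok rabbit@controller rabbit@compute rabbit@storage && echo "all nodes running".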

---------------------------------------------------------------------------------------------------------------------------------

The message queue is now deployed!

Install the Keystone identity service

[root@controller ~]# mysql -uroot -p123123 -e "CREATE DATABASE keystone;

> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';"

[root@controller ~]# yum -y install openstack-keystone httpd mod_wsgi

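The line numbers in the next two commands match the stock keystone.conf on this system. provider = fernet must land in the [token] section and connection in the [database] section, so check the numbers against your copy first:

[root@controller ~]# sed -i '2790a provider = fernet' /etc/keystone/keystone.conf

[root@controller ~]# sed -i '686a connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone' /etc/keystone/keystone.conf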
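Line-number edits break as soon as the packaged file changes. A sketch of a section-aware alternative in awk, assuming section headers have no surrounding whitespace and KEY contains no regex metacharacters; ini_set is a hypothetical helper, not an OpenStack tool:

```shell
#!/usr/bin/env bash
# ini_set FILE SECTION KEY VALUE
# Make sure [SECTION] holds exactly one active "KEY = VALUE" line: replace an
# existing one, otherwise add it at the end of the section (or a new section).
ini_set() {
  local file=$1 section=$2 key=$3 value=$4 tmp
  tmp=$(mktemp) || return 1
  awk -v sec="[$section]" -v key="$key" -v val="$value" '
    function emit() { print key " = " val; done = 1 }
    /^\[/ {
      # Leaving the target section without having seen KEY: add it here.
      if (insec && !done) emit()
      insec = ($0 == sec)
      seen = seen || insec
    }
    # Inside the target section: replace the first active KEY line, drop repeats.
    insec && $0 ~ ("^[ \t]*" key "[ \t]*=") { if (!done) emit(); next }
    { print }
    END {
      if (insec && !done) emit()
      if (!seen) { print sec; emit() }
    }' "$file" > "$tmp" && cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example, ini_set /etc/keystone/keystone.conf token provider fernet. Writing back with cat keeps the file's owner and mode (keystone.conf is readable by the keystone group). Where the crudini package is available, crudini --set FILE SECTION KEY VALUE does the same job.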

[root@controller ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone

[root@controller ~]# mysql -uroot -p123123 -e "use keystone; show tables;"

[root@controller ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@controller ~]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

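[root@controller ~]# keystone-manage bootstrap --bootstrap-password admin \

> --bootstrap-admin-url http://controller:35357/v3/ \

> --bootstrap-internal-url http://controller:5000/v3/ \

> --bootstrap-public-url http://controller:5000/v3/ \

> --bootstrap-region-id RegionOne

The three URLs are the admin, internal and public endpoints of the identity service, and RegionOne is the region ID. Do not put comments after the trailing backslashes: in "\ #comment" the backslash escapes the space instead of the newline, so the shell ends the command on that line.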

[root@controller ~]# sed -i '95a ServerName controller' /etc/httpd/conf/httpd.conf

[root@controller ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

[root@controller ~]# systemctl start httpd.service

[root@controller ~]# systemctl enable httpd.service

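[root@controller ~]# tee /root/openrc <<-'EOF'

> export OS_USERNAME=admin

> export OS_PASSWORD=admin

> export OS_PROJECT_NAME=admin

> export OS_USER_DOMAIN_NAME=Default

> export OS_PROJECT_DOMAIN_NAME=Default

> export OS_AUTH_URL=http://controller:35357/v3

> export OS_IDENTITY_API_VERSION=3

> export OS_IMAGE_API_VERSION=2

> EOF

Keep the demo user's credentials in a separate file. If they are appended to /root/openrc, the later OS_USERNAME, OS_PASSWORD, OS_PROJECT_NAME and OS_AUTH_URL exports override the admin ones, and the admin commands below would run as demo, a user that does not exist yet at this point:

[root@controller ~]# tee /root/demo-openrc <<-'EOF'

> export OS_USERNAME=demo

> export OS_PASSWORD=demo

> export OS_PROJECT_NAME=demo

> export OS_USER_DOMAIN_NAME=Default

> export OS_PROJECT_DOMAIN_NAME=Default

> export OS_AUTH_URL=http://controller:5000/v3

> export OS_IDENTITY_API_VERSION=3

> export OS_IMAGE_API_VERSION=2

> EOF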

[root@controller ~]# source /root/openrc

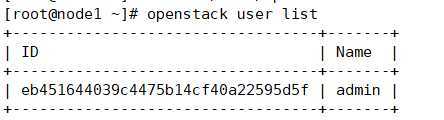

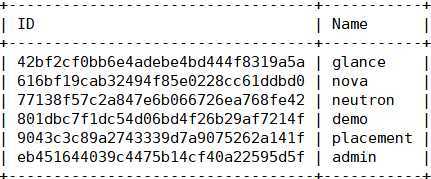

[root@controller ~]# openstack user list   # if the users are listed, the steps above worked

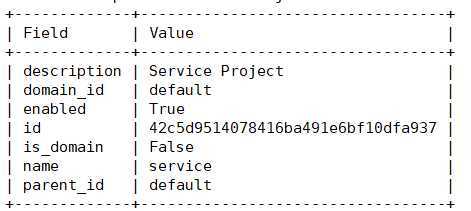
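Keystone can also be checked without the openstack client by requesting a token from the v3 API directly. A sketch that builds the password-authentication request body, assuming user and project live in the Default domain and that the values contain no double quotes or backslashes (they are inserted verbatim); token_request_body is a hypothetical name:

```shell
#!/usr/bin/env bash
# token_request_body USER PASSWORD PROJECT [DOMAIN]
# Print a Keystone v3 password-auth request body scoped to PROJECT.
token_request_body() {
  local user=$1 pass=$2 project=$3 domain=${4:-Default}
  printf '{"auth":{"identity":{"methods":["password"],"password":{"user":{"name":"%s","domain":{"name":"%s"},"password":"%s"}}},"scope":{"project":{"name":"%s","domain":{"name":"%s"}}}}}\n' \
    "$user" "$domain" "$pass" "$project" "$domain"
}
```

Usage: curl -si -H 'Content-Type: application/json' -d "$(token_request_body admin admin admin)" http://controller:5000/v3/auth/tokens | grep -i '^X-Subject-Token'. A 201 response carrying an X-Subject-Token header means the admin credentials work.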

[root@controller ~]# openstack project create --domain default \

> --description "Service Project" service

[root@controller ~]# openstack project create --domain default \

> --description "Demo Project" demo

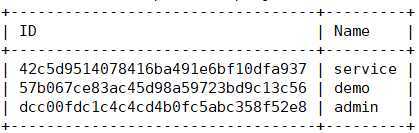

[root@controller ~]# openstack project list

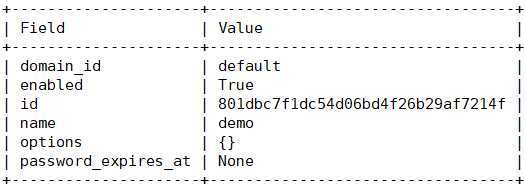

[root@controller ~]# openstack user create --domain default \

> --password-prompt demo

User Password:

Repeat User Password:

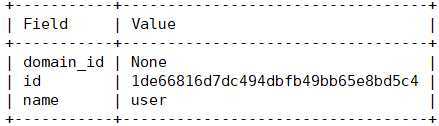

[root@controller ~]# openstack role create user

[root@controller ~]# openstack role add --project demo --user demo user

---------------------------------------------------------------------------------------------------------------------------------

Keystone is now deployed!

Install the Glance image service

[root@controller ~]# mysql -uroot -p123123 -e "create database glance;

> grant all privileges on glance.* to 'glance'@'localhost' identified by 'GLANCE_DBPASS';"

[root@controller ~]# mysql -uroot -p123123 -e "grant all privileges on glance.* to 'glance'@'%' \

> identified by 'GLANCE_DBPASS';"

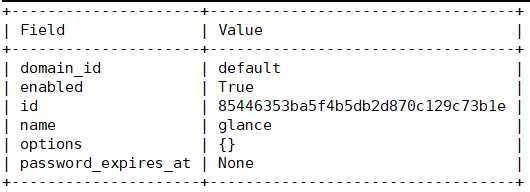

[root@controller ~]# openstack user create --domain default --password=glance glance

[root@controller ~]# openstack role add --project service --user glance admin

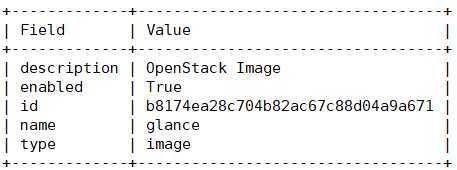

[root@controller ~]# openstack service create --name glance \

> --description "OpenStack Image" image

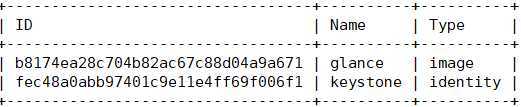

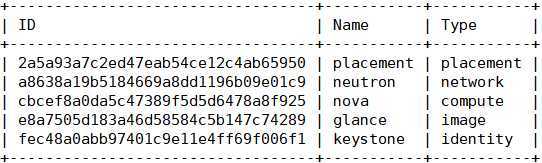

[root@controller ~]# openstack service list

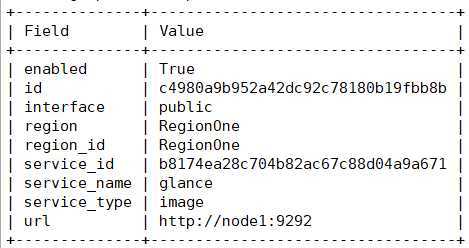

[root@controller ~]# openstack endpoint create --region RegionOne \

> image public http://controller:9292

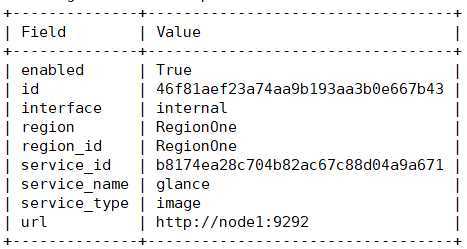

[root@controller ~]# openstack endpoint create --region RegionOne \

> image internal http://controller:9292

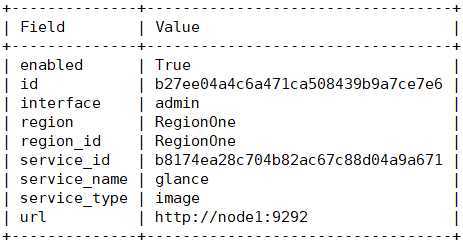

[root@controller ~]# openstack endpoint create --region RegionOne \

> image admin http://controller:9292

[root@controller ~]# openstack endpoint list |grep glance

[root@controller ~]# yum -y install openstack-glance

[root@controller ~]# cp /etc/glance/glance-api.conf{,.bak}

[root@controller ~]# echo "[DEFAULT]

> [cors]

> [cors.subdomain]

> [database]

> connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

> [glance_store]

> stores = file,http

> default_store = file

> filesystem_store_datadir = /var/lib/glance/images/

> [image_format]

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = glance

> password = glance

> [matchmaker_redis]

> [oslo_concurrency]

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_middleware]

> [oslo_policy]

> [paste_deploy]

> flavor = keystone

> [profiler]

> [store_type_location_strategy]

> [task]

> [taskflow_executor]" > /etc/glance/glance-api.conf

[root@controller ~]# cp /etc/glance/glance-registry.conf{,.bak}

[root@controller ~]# echo "[DEFAULT]

> [database]

> connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = glance

> password = glance

> [matchmaker_redis]

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_policy]

> [paste_deploy]

> flavor = keystone

> [profiler]" > /etc/glance/glance-registry.conf
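The [keystone_authtoken] section is identical in glance-api.conf, glance-registry.conf and the nova and neutron configs below, apart from the service username and password. A sketch that prints it from one template, assuming the controller hostname and endpoints used throughout this guide; authtoken_block is a hypothetical name:

```shell
#!/usr/bin/env bash
# authtoken_block USER PASSWORD
# Print the [keystone_authtoken] section used in this guide for one service.
authtoken_block() {
  cat <<EOF
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = $1
password = $2
EOF
}
```

For example, authtoken_block nova nova prints the block used in nova.conf, and authtoken_block neutron neutron the one in neutron.conf.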

[root@controller ~]# su -s /bin/sh -c "glance-manage db_sync" glance

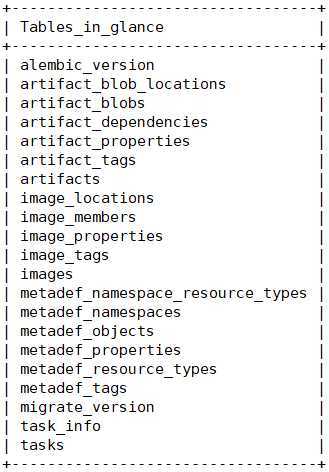

[root@controller ~]# mysql -uroot -p123123 -e "use glance;show tables;"

[root@controller ~]# systemctl enable openstack-glance-api.service \

> openstack-glance-registry.service

[root@controller ~]# systemctl start openstack-glance-api.service \

> openstack-glance-registry.service

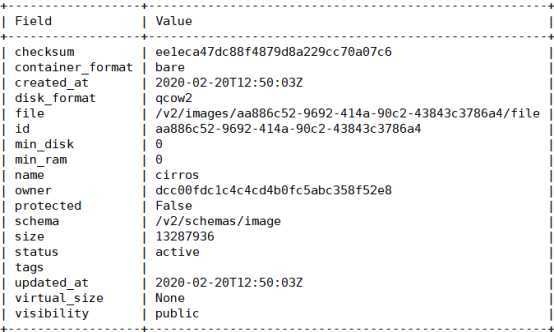

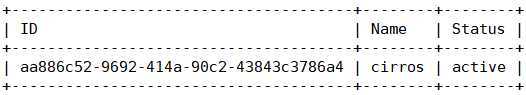

[root@controller images]# openstack image create "cirros" \

> --file /var/lib/glance/images/cirros-0.3.4-x86_64-disk.img \

> --disk-format qcow2 --container-format bare \

> --public
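--disk-format qcow2 has to match the file's real format. A minimal check, assuming only that qcow2 images begin with the four magic bytes "QFI\xfb"; is_qcow2 is a hypothetical name:

```shell
#!/usr/bin/env bash
# is_qcow2 FILE: succeed if FILE starts with the qcow2 magic "QFI\xfb" (51 46 49 fb).
is_qcow2() {
  [ "$(od -An -tx1 -N4 -- "$1" 2>/dev/null | tr -d ' \n')" = "514649fb" ]
}
```

Usage: is_qcow2 /var/lib/glance/images/cirros-0.3.4-x86_64-disk.img && echo qcow2. qemu-img info on the same file reports the format in more detail.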

---------------------------------------------------------------------------------------------------------------------------------

The Glance image service is now deployed!

Deploy the Nova compute service

[root@controller ~]# mysql -uroot -p123123 -e "CREATE DATABASE nova_api;

> CREATE DATABASE nova;CREATE DATABASE nova_cell0;"

[root@controller ~]# mysql -uroot -p123123 -e "

> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

> IDENTIFIED BY 'NOVA_DBPASS';

> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

> IDENTIFIED BY 'NOVA_DBPASS';

> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

> IDENTIFIED BY 'NOVA_DBPASS';

> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

> IDENTIFIED BY 'NOVA_DBPASS';

> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' \

> IDENTIFIED BY 'NOVA_DBPASS';

> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' \

> IDENTIFIED BY 'NOVA_DBPASS';"
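Every service repeats the same pattern: create the database, then grant to 'localhost' and '%'. A sketch that generates these statements, assuming MariaDB accepts GRANT ... IDENTIFIED BY as used above; db_grants is a hypothetical name, and IF NOT EXISTS is an addition not in the original commands:

```shell
#!/usr/bin/env bash
# db_grants USER PASSWORD DB...
# Print CREATE DATABASE and GRANT statements for each DB, for both hosts.
db_grants() {
  local user=$1 pass=$2 db host
  shift 2
  for db in "$@"; do
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    for host in localhost %; do
      printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
        "$db" "$user" "$host" "$pass"
    done
  done
}
```

Usage: db_grants nova NOVA_DBPASS nova_api nova nova_cell0 | mysql -uroot -p123123. Generating the SQL first makes it easy to review before piping it into mysql.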

[root@controller ~]# source /root/openrc

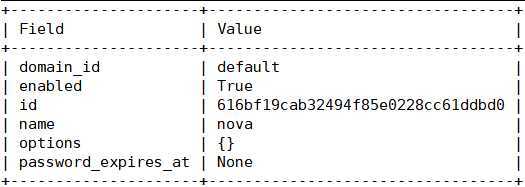

[root@controller ~]# openstack user create --domain default --password=nova nova

[root@controller ~]# openstack role add --project service --user nova admin

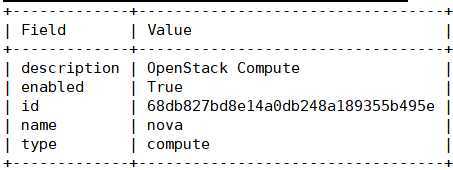

[root@controller ~]# openstack service create --name nova \

> --description "OpenStack Compute" compute

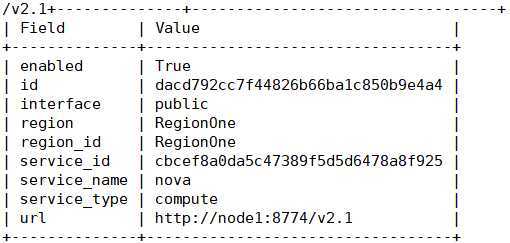

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute public http://controller:8774/v2.1

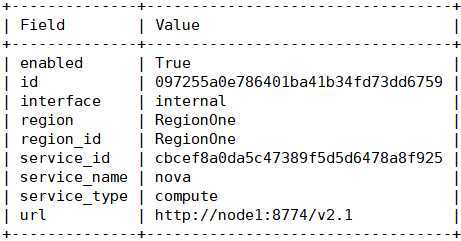

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute internal http://controller:8774/v2.1

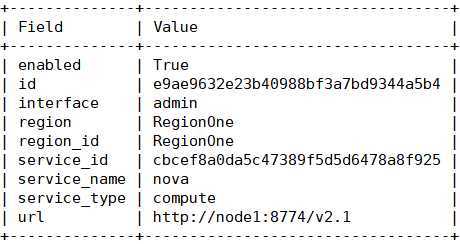

[root@controller ~]# openstack endpoint create --region RegionOne \

> compute admin http://controller:8774/v2.1

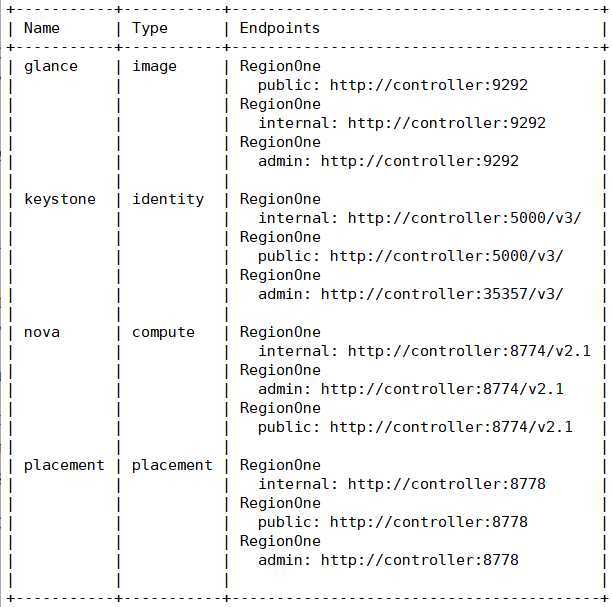

Check the endpoints deployed so far:

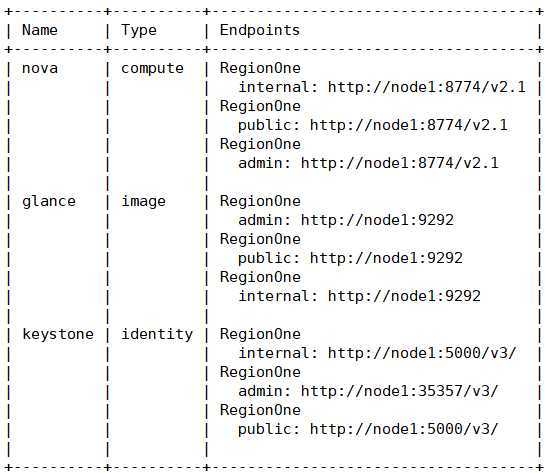

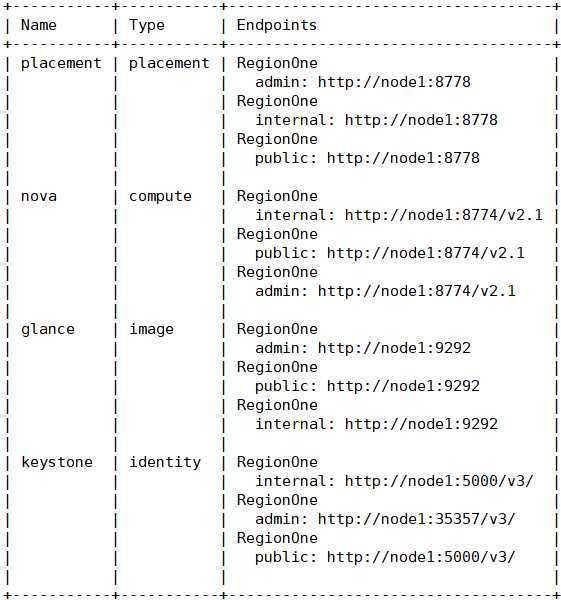

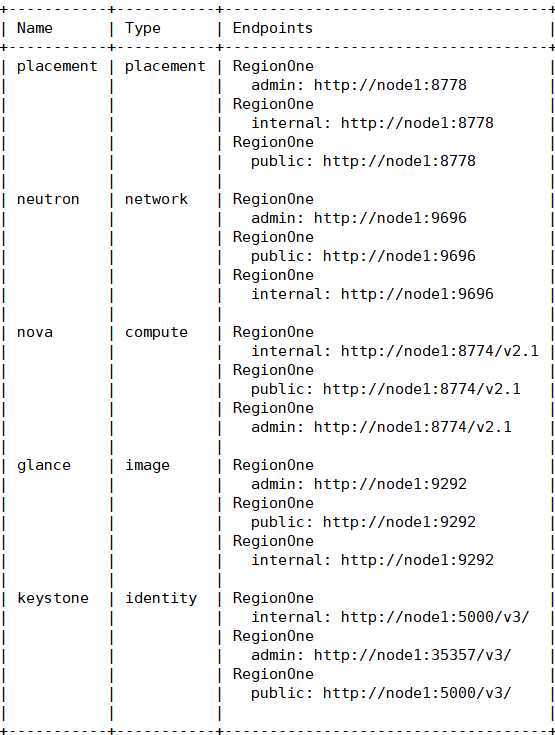

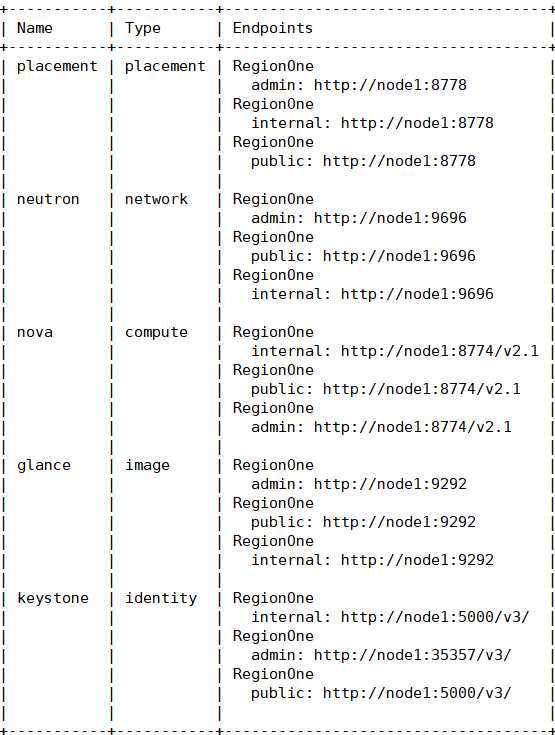

[root@controller ~]# openstack catalog list

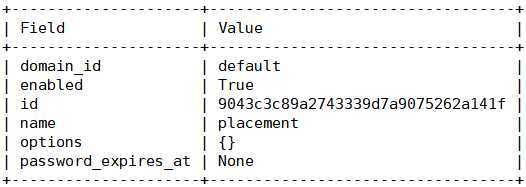

[root@controller ~]# openstack user create --domain default --password=placement placement

[root@controller ~]# openstack role add --project service --user placement admin

[root@controller ~]# openstack service create --name placement --description "Placement API" placement

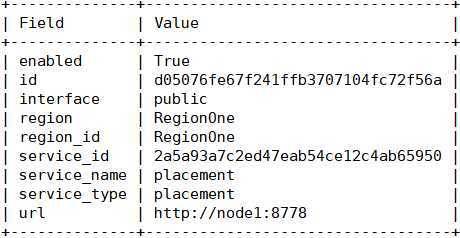

[root@controller ~]# openstack endpoint create --region RegionOne placement public http://controller:8778

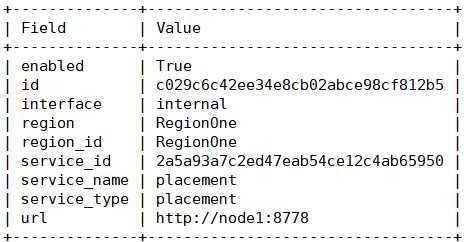

[root@controller ~]# openstack endpoint create --region RegionOne placement internal http://controller:8778

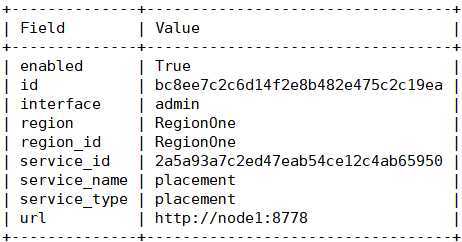

[root@controller ~]# openstack endpoint create --region RegionOne placement admin http://controller:8778

[root@controller ~]# openstack catalog list   # list the deployed services and their endpoints
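Each service above gets three endpoint create calls that differ only in the interface. A sketch of that loop, assuming every interface uses the same URL as in this guide; create_endpoints is a hypothetical name, and setting OPENSTACK=echo prints the commands instead of running them:

```shell
#!/usr/bin/env bash
# create_endpoints SERVICE URL [REGION]
# Register public, internal and admin endpoints for SERVICE at URL.
# Set OPENSTACK=echo for a dry run that only prints the commands.
create_endpoints() {
  local service=$1 url=$2 region=${3:-RegionOne} iface
  for iface in public internal admin; do
    ${OPENSTACK:-openstack} endpoint create --region "$region" \
      "$service" "$iface" "$url" || return 1
  done
}
```

For example, create_endpoints placement http://controller:8778 after source /root/openrc; the function stops at the first failing call.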

[root@controller ~]# yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api

[root@controller ~]# cp /etc/nova/nova.conf{,.bak}

[root@controller ~]# tee /etc/nova/nova.conf <<-'EOF'

> [DEFAULT]

> my_ip = 10.0.0.10

> use_neutron = True

> firewall_driver = nova.virt.firewall.NoopFirewallDriver

> enabled_apis = osapi_compute,metadata

> transport_url = rabbit://openstack:openstack@controller

> [api]

> auth_strategy = keystone

> [api_database]

> connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

> [barbican]

> [cache]

> [cells]

> [cinder]

> [cloudpipe]

> [conductor]

> [console]

> [consoleauth]

> [cors]

> [cors.subdomain]

> [crypto]

> [database]

> connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

> [ephemeral_storage_encryption]

> [filter_scheduler]

> [glance]

> api_servers = http://controller:9292

> [guestfs]

> [healthcheck]

> [hyperv]

> [image_file_url]

> [ironic]

> [key_manager]

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = nova

> password = nova

> [libvirt]

> [matchmaker_redis]

> [metrics]

> [mks]

> [neutron]

>#url = http://controller:9696

>#auth_url = http://controller:35357

>#auth_type = password

>#project_domain_name = default

>#user_domain_name = default

>#region_name = RegionOne

>#project_name = service

>#username = neutron

>#password = neutron

>#service_metadata_proxy = true

>#metadata_proxy_shared_secret = METADATA_SECRET

> [notifications]

> [osapi_v21]

> [oslo_concurrency]

> lock_path = /var/lib/nova/tmp

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_middleware]

> [oslo_policy]

> [pci]

> [placement]

> os_region_name = RegionOne

> project_domain_name = Default

> project_name = service

> auth_type = password

> user_domain_name = Default

> auth_url = http://controller:35357/v3

> username = placement

> password = placement

> [quota]

> [rdp]

> [remote_debug]

> [scheduler]

> [serial_console]

> [service_user]

> [spice]

> [ssl]

> [trusted_computing]

> [upgrade_levels]

> [vendordata_dynamic_auth]

> [vmware]

> [vnc]

> enabled = true

> vncserver_listen = $my_ip

> vncserver_proxyclient_address = $my_ip

> [workarounds]

> [wsgi]

> [xenserver]

> [xvp]

> EOF

[root@controller ~]# cp /etc/httpd/conf.d/00-nova-placement-api.conf{,.bak}

[root@controller ~]# echo "

><Directory /usr/bin>

> <IfVersion >= 2.4>

> Require all granted

> </IfVersion>

> <IfVersion < 2.4>

> Order allow,deny

> Allow from all

> </IfVersion>

> </Directory>" >> /etc/httpd/conf.d/00-nova-placement-api.conf

[root@controller ~]# systemctl restart httpd

[root@controller ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

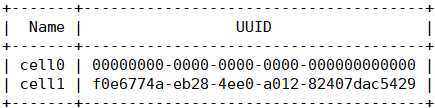

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

f0e6774a-eb28-4ee0-a012-82407dac5429

[root@controller ~]# su -s /bin/sh -c "nova-manage db sync" nova

[root@controller ~]# nova-manage cell_v2 list_cells

[root@controller ~]# systemctl start openstack-nova-api.service \

> openstack-nova-consoleauth.service openstack-nova-scheduler.service \

> openstack-nova-conductor.service openstack-nova-novncproxy.service

[root@controller ~]# systemctl enable openstack-nova-api.service \

> openstack-nova-consoleauth.service openstack-nova-scheduler.service \

> openstack-nova-conductor.service openstack-nova-novncproxy.service

On compute:

[root@compute opt]# mkdir -p /openstack/nova   # create a directory for the downloaded packages

Download the dependency packages:

[root@compute ~]# wget -O /openstack/nova/qemu-img-ev-2.12.0-18.el7_6.3.1.x86_64.rpm \

> https://cbs.centos.org/kojifiles/packages/qemu-kvm-ev/2.12.0/18.el7_6.3.1/x86_64/qemu-img-ev-2.12.0-18.el7_6.3.1.x86_64.rpm

[root@compute ~]# wget -O /openstack/nova/qemu-kvm-ev-2.12.0-18.el7_6.3.1.x86_64.rpm \

> https://cbs.centos.org/kojifiles/packages/qemu-kvm-ev/2.12.0/18.el7_6.3.1/x86_64/qemu-kvm-ev-2.12.0-18.el7_6.3.1.x86_64.rpm

[root@compute ~]# wget -O /openstack/nova/qemu-kvm-common-ev-2.12.0-18.el7_6.3.1.x86_64.rpm \

> https://cbs.centos.org/kojifiles/packages/qemu-kvm-ev/2.12.0/18.el7_6.3.1/x86_64/qemu-kvm-common-ev-2.12.0-18.el7_6.3.1.x86_64.rpm

Install the downloaded packages and the other required dependencies:

[root@compute ~]# yum -y localinstall /openstack/nova/*

[root@compute ~]# yum -y install openstack-nova-compute

[root@compute ~]# cp /etc/nova/nova.conf{,.bak}

[root@compute ~]# tee /etc/nova/nova.conf <<-'EOF'

> [DEFAULT]

> enabled_apis = osapi_compute,metadata

> transport_url = rabbit://openstack:openstack@controller

> my_ip = 192.168.0.20

> use_neutron = True

> firewall_driver = nova.virt.firewall.NoopFirewallDriver

> [api]

> auth_strategy = keystone

> [api_database]

> [barbican]

> [cache]

> [cells]

> [cinder]

> [cloudpipe]

> [conductor]

> [console]

> [consoleauth]

> [cors]

> [cors.subdomain]

> [crypto]

> [database]

> [ephemeral_storage_encryption]

> [filter_scheduler]

> [glance]

> api_servers = http://controller:9292

> [guestfs]

> [healthcheck]

> [hyperv]

> [image_file_url]

> [ironic]

> [key_manager]

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = nova

> password = nova

> [libvirt]

> virt_type = qemu

> [matchmaker_redis]

> [metrics]

> [mks]

> [neutron]

> #url = http://controller:9696

> #auth_url = http://controller:35357

> #auth_type = password

> #project_domain_name = default

> #user_domain_name = default

> #region_name = RegionOne

> #project_name = service

> #username = neutron

> #password = neutron

> [notifications]

> [osapi_v21]

> [oslo_concurrency]

> lock_path = /var/lib/nova/tmp

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_middleware]

> [oslo_policy]

> [pci]

> [placement]

> os_region_name = RegionOne

> project_domain_name = Default

> project_name = service

> auth_type = password

> user_domain_name = Default

> auth_url = http://controller:35357/v3

> username = placement

> password = placement

> [quota]

> [rdp]

> [remote_debug]

> [scheduler]

> [serial_console]

> [service_user]

> [spice]

> [ssl]

> [trusted_computing]

> [upgrade_levels]

> [vendordata_dynamic_auth]

> [vmware]

> [vnc]

> enabled = True

> vncserver_listen = 0.0.0.0

> vncserver_proxyclient_address = $my_ip

> novncproxy_base_url = http://controller:6080/vnc_auto.html

> [workarounds]

> [wsgi]

> [xenserver]

> [xvp]

> EOF

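[root@compute ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

2   # 1 or more: the CPU supports hardware virtualization; 0: libvirt must use virt_type = qemu, as configured above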
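The egrep count can be turned directly into a virt_type value. A sketch assuming only the vmx/svm CPU flags matter; kvm additionally needs the kvm kernel modules loaded, which this does not check. pick_virt_type is a hypothetical name:

```shell
#!/usr/bin/env bash
# pick_virt_type [CPUINFO]: print "kvm" if the CPU flags include vmx (Intel VT-x)
# or svm (AMD-V), otherwise "qemu". Defaults to /proc/cpuinfo.
pick_virt_type() {
  if grep -Ewq 'vmx|svm' "${1:-/proc/cpuinfo}"; then
    echo kvm
  else
    echo qemu
  fi
}
```

Inside a VM without nested virtualization the flags are usually absent, which is why this guide sets virt_type = qemu.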

[root@compute ~]# systemctl enable libvirtd.service openstack-nova-compute.service

[root@compute ~]# systemctl start libvirtd.service openstack-nova-compute.service

On controller (the commands below also run nova-compute on the controller, so openstack-nova-compute must be installed there as well, as on compute):

[root@controller nova]# cp /etc/nova/nova.conf{,.compute.bak}   # back up first

[root@controller nova]# sed -i '/vncserver_proxyclient_address/a \

novncproxy_base_url = http://10.0.0.10:6080/vnc_auto.html' /etc/nova/nova.conf

[root@controller nova]# sed -i '/^\[libvirt\]/a virt_type = qemu' /etc/nova/nova.conf

[root@controller nova]# egrep -c '(vmx|svm)' /proc/cpuinfo

[root@controller nova]# systemctl start libvirtd.service openstack-nova-compute.service

[root@controller nova]# systemctl enable libvirtd.service openstack-nova-compute.service

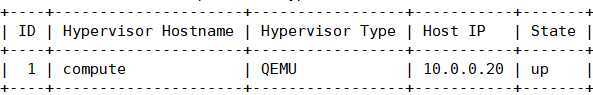

[root@controller nova]# openstack hypervisor list

[root@controller nova]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

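Add the following under [scheduler] so that newly added compute hosts are discovered automatically every 30 seconds:

[root@controller ~]# sed -i '/\[scheduler\]/a discover_hosts_in_cells_interval = 30' /etc/nova/nova.conf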

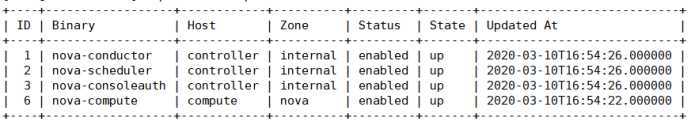

[root@controller nova]# openstack compute service list

[root@controller nova]# openstack catalog list

[root@controller nova]# openstack image list

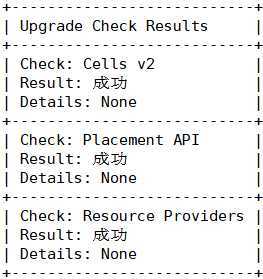

[root@controller nova]# nova-status upgrade check

---------------------------------------------------------------------------------------------------------------------------------

The Nova service is now deployed!

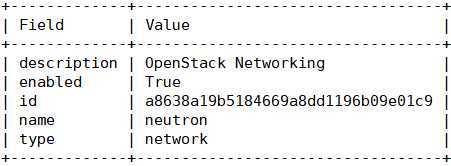

Deploy the Neutron networking service

[root@controller ~]# mysql -uroot -p123123 -e "

> CREATE DATABASE neutron;

> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

> IDENTIFIED BY 'NEUTRON_DBPASS';

> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

> IDENTIFIED BY 'NEUTRON_DBPASS'; "

[root@controller ~]# source openrc

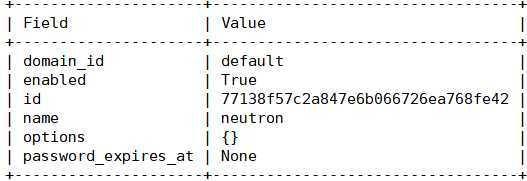

[root@controller ~]# openstack user create --domain default --password=neutron neutron

[root@controller ~]# openstack role add --project service --user neutron admin

[root@controller ~]# openstack service create --name neutron \

> --description "OpenStack Networking" network

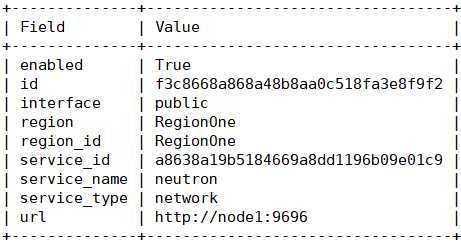

[root@controller ~]# openstack endpoint create --region RegionOne \

> network public http://controller:9696

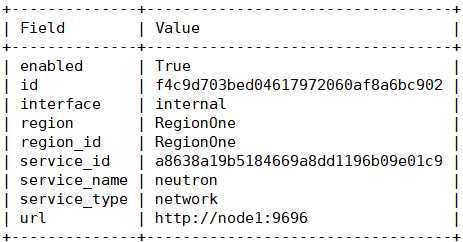

[root@controller ~]# openstack endpoint create --region RegionOne \

> network internal http://controller:9696

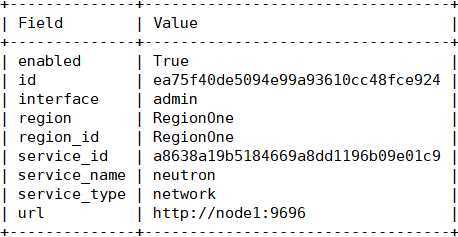

[root@controller ~]# openstack endpoint create --region RegionOne \

> network admin http://controller:9696

[root@controller ~]# openstack user list

[root@controller ~]# openstack service list

[root@controller ~]# openstack catalog list

[root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 \

> openstack-neutron-linuxbridge ebtables -y

[root@controller ~]# cp /etc/neutron/neutron.conf{,.bak}

[root@controller ~]# tee /etc/neutron/neutron.conf <<-'EOF'

> [DEFAULT]

> core_plugin = ml2

> service_plugins = router

> allow_overlapping_ips = true

> transport_url = rabbit://openstack:openstack@controller

> auth_strategy = keystone

> notify_nova_on_port_status_changes = true

> notify_nova_on_port_data_changes = true

> #dhcp_agent_notification = true

> [agent]

> [cors]

> [cors.subdomain]

> [database]

> connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = neutron

> password = neutron

> [matchmaker_redis]

> [nova]

> auth_url = http://controller:35357

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> region_name = RegionOne

> project_name = service

> username = nova

> password = nova

> [oslo_concurrency]

> lock_path = /var/lib/neutron/tmp

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_middleware]

> [oslo_policy]

> [qos]

> [quotas]

> [ssl]

> EOF

[root@controller ~]# cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

[root@controller ~]# tee /etc/neutron/plugins/ml2/ml2_conf.ini <<-'EOF'

> [DEFAULT]

> [ml2]

> type_drivers = flat,vlan,vxlan

> tenant_network_types = vxlan

> mechanism_drivers = linuxbridge,l2population

> extension_drivers = port_security

> [ml2_type_flat]

> flat_networks = provider

> [ml2_type_geneve]

> [ml2_type_gre]

> [ml2_type_vlan]

> [ml2_type_vxlan]

> vni_ranges = 1:1000

> [securitygroup]

> enable_ipset = true

> EOF

[root@controller ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

[root@controller ~]# tee /etc/neutron/plugins/ml2/linuxbridge_agent.ini <<-'EOF'

> [DEFAULT]

> [agent]

> [linux_bridge]

> physical_interface_mappings = provider:ens33

> [securitygroup]

> enable_security_group = true

> firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

> [vxlan]

> enable_vxlan = true

> local_ip = 10.0.0.10

> l2_population = true

> EOF

[root@controller ~]# cp /etc/neutron/l3_agent.ini{,.bak}

[root@controller ~]# tee /etc/neutron/l3_agent.ini <<-'EOF'

> [DEFAULT]

> interface_driver = linuxbridge

> #external_network_bridge = br-ex

> [agent]

> [ovs]

> EOF

[root@controller ~]# cp /etc/neutron/dhcp_agent.ini{,.bak}

[root@controller ~]# tee /etc/neutron/dhcp_agent.ini <<-'EOF'

> [DEFAULT]

> interface_driver = linuxbridge

> dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

> enable_isolated_metadata = true

> [agent]

> [ovs]

> EOF

[root@controller ~]# tee /etc/neutron/metadata_agent.ini <<-'EOF'

> [DEFAULT]

> nova_metadata_ip = controller

> metadata_proxy_shared_secret = METADATA_SECRET

> [agent]

> [cache]

> EOF

[root@controller ~]# cp /etc/nova/nova.conf{,.neutron.bak}

[root@controller ~]# sed -i 's/^#//' /etc/nova/nova.conf   # uncomment the options under [neutron]; in this nova.conf they are the only lines starting with #

[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

[root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

[root@controller ~]# systemctl restart openstack-nova-api.service

[root@controller ~]# systemctl restart neutron-linuxbridge-agent.service \

> neutron-server.service neutron-dhcp-agent.service neutron-metadata-agent.service

[root@controller ~]# systemctl enable neutron-linuxbridge-agent.service \

> neutron-server.service neutron-dhcp-agent.service neutron-metadata-agent.service

[root@controller ~]# nmcli connection show

NAME UUID TYPE DEVICE

有线连接 1 a05e21cf-0ce1-3fdb-97af-2aef41f56836 ethernet ens38

ens33 3a90c11e-a36f-401e-ba9d-e7961cea63ca ethernet ens33

ens37 526c9943-ba19-48db-80dc-bf3fe4d99505 ethernet ens37

[root@controller ~]# nmcli connection modify '有线连接 1' con-name ens38   # rename the connection "有线连接 1" ("Wired connection 1") to ens38

[root@controller ~]# nmcli connection show

NAME UUID TYPE DEVICE

ens38 a05e21cf-0ce1-3fdb-97af-2aef41f56836 ethernet ens38

ens33 3a90c11e-a36f-401e-ba9d-e7961cea63ca ethernet ens33

ens37 526c9943-ba19-48db-80dc-bf3fe4d99505 ethernet ens37

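The next two commands trim ifcfg-ens38 and switch BOOTPROTO from dhcp to none. The deleted line numbers match the file generated on this system; check yours before running them:

[root@controller ~]# sed -i '3,4d;6,12d;16d' /etc/sysconfig/network-scripts/ifcfg-ens38

[root@controller ~]# sed -i 's/dhcp/none/' /etc/sysconfig/network-scripts/ifcfg-ens38

[root@controller ~]# service network restart   # if the change does not take effect, reboot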
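Because the deleted lines depend on the generated file, the end state can also be written out directly. A sketch assuming ens38 should come up with no IP address and no DHCP, following the minimal provider-interface file in the upstream install guide (DEVICE, TYPE, ONBOOT, BOOTPROTO=none); NAME is an addition here to keep the con-name set above, and write_provider_ifcfg is a hypothetical name:

```shell
#!/usr/bin/env bash
# write_provider_ifcfg IFACE [DIR]
# Write a minimal ifcfg file that brings IFACE up without an IP address.
# An existing file is backed up to ifcfg-IFACE.bak first.
write_provider_ifcfg() {
  local iface=$1 dir=${2:-/etc/sysconfig/network-scripts}
  local f="$dir/ifcfg-$iface"
  [ -e "$f" ] && cp -p "$f" "$f.bak"
  cat > "$f" <<EOF
TYPE=Ethernet
NAME=$iface
DEVICE=$iface
ONBOOT=yes
BOOTPROTO=none
EOF
}
```

For example, write_provider_ifcfg ens38 followed by service network restart.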

[root@controller ~]# source openrc

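Note: ovs-vsctl comes from the openvswitch package, which none of the steps above installs, and with the linuxbridge interface driver configured here the L3 agent does not use br-ex (external_network_bridge is commented out in l3_agent.ini). The next two commands apply only if Open vSwitch is installed:

[root@controller ~]# ovs-vsctl add-br br-ex

[root@controller ~]# ovs-vsctl add-port br-ex ens38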

[root@controller ~]# systemctl start neutron-l3-agent.service

[root@controller ~]# systemctl enable neutron-l3-agent.service

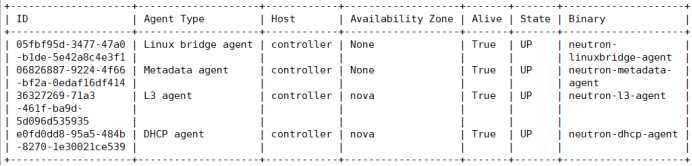

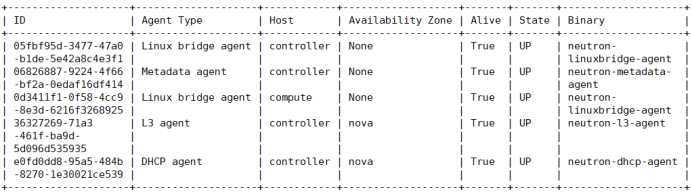

[root@controller ~]# neutron agent-list   # or: openstack network agent list

Deploy the Neutron service on the compute node (compute)

[root@compute ~]# yum install openstack-neutron-linuxbridge ebtables ipset -y

[root@compute ~]# cp /etc/neutron/neutron.conf{,.bak}

[root@compute ~]# tee /etc/neutron/neutron.conf <<-'EOF'

> [DEFAULT]

> #core_plugin = ml2

> #service_plugins = router

> #allow_overlapping_ips = true

> transport_url = rabbit://openstack:openstack@controller

> auth_strategy = keystone

> #notify_nova_on_port_status_changes = true

> #notify_nova_on_port_data_changes = true

> #dhcp_agent_notification = true

> [agent]

> [cors]

> [cors.subdomain]

> [database]

> #connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = neutron

> password = neutron

> [matchmaker_redis]

> [nova]

> #auth_url = http://controller:35357

> #auth_type = password

> #project_domain_name = default

> #user_domain_name = default

> #region_name = RegionOne

> #project_name = service

> #username = nova

> #password = nova

> [oslo_concurrency]

> lock_path = /var/lib/neutron/tmp

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_middleware]

> [oslo_policy]

> [qos]

> [quotas]

> [ssl]

> EOF

[root@compute ~]# cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

[root@compute ~]# tee /etc/neutron/plugins/ml2/linuxbridge_agent.ini <<-'EOF'

> [DEFAULT]

> [agent]

> [linux_bridge]

> physical_interface_mappings = provider:ens33

> [securitygroup]

> enable_security_group = true

> firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

> [vxlan]

> enable_vxlan = true

> local_ip = 10.0.0.20

> l2_population = true

> EOF
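local_ip in the [vxlan] section must be an address of the node the file sits on (10.0.0.10 on controller, 10.0.0.20 on compute). A sketch that reads a value back from an INI section so it can be compared against the host's addresses, assuming "key = value" lines and section headers without surrounding whitespace; ini_get is a hypothetical name:

```shell
#!/usr/bin/env bash
# ini_get FILE SECTION KEY: print the value of the first active KEY in [SECTION].
ini_get() {
  awk -v sec="[$2]" -v key="$3" '
    /^\[/ { insec = ($0 == sec); next }
    insec {
      line = $0
      sub(/^[ \t]+/, "", line)
      # KEY must be followed by optional blanks and "=", so "local" != "local_ip".
      if (index(line, key) == 1) {
        rest = substr(line, length(key) + 1)
        if (rest ~ /^[ \t]*=/) { sub(/^[ \t]*=[ \t]*/, "", rest); print rest; exit }
      }
    }' "$1"
}
```

Usage: ip -o -4 addr show | grep -qw "inet $(ini_get /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan local_ip)" || echo "local_ip is not an address of this host".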

[root@compute ~]# cp /etc/nova/nova.conf{,.nova}

[root@compute ~]# sed -i 's/^#//' /etc/nova/nova.conf

[root@compute ~]# systemctl restart openstack-nova-compute.service

[root@compute ~]# systemctl start neutron-linuxbridge-agent

[root@compute ~]# systemctl enable neutron-linuxbridge-agent

[root@controller ~]# openstack network agent list   # or: neutron agent-list

The Neutron service is now deployed!

Deploy the dashboard (Horizon web management) service

[root@controller ~]# yum install openstack-dashboard -y

[root@controller ~]# cp /etc/openstack-dashboard/local_settings{,.bak}

[root@controller ~]# sed -i '/^OPENSTACK_HOST/c OPENSTACK_HOST = "controller"' \

> /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i "s/localhost'/localhost','*'/" /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i "136,140s/^/#/" /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i "129,134s/^#//" /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i "128a SESSION_ENGINE = 'django.contrib.sessions.backends.cache'" \

> /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i "s/127.0.0.1:11211/controller:11211/" \

> /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i "s/v2.0/v3/" /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i 's/_ROLE = "_member_"/_ROLE = "user"/' \

> /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i '/^#OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT/c \

> OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True' \

> /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i ‘54s/#//;56,60s/#//‘ /etc/openstack-dashboard/local_settings

[root@controller ~]# sed -i '/^#OPENSTACK_KEYSTONE_DEFAULT_DOMAIN/c \

> OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default" ' \

> /etc/openstack-dashboard/local_settings

[root@controller ~]# systemctl restart httpd.service memcached.service
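Several of the edits above target fixed line numbers (54, 56-60, 128-140). Those numbers only match one particular version of the packaged local_settings, so it is worth confirming the result before logging in. The sketch below greps for the uncommented settings that those sed commands are meant to produce. The patterns are taken from the commands above, and `check_settings` is a hypothetical helper.

```shell
#!/bin/sh
# check_settings FILE: report which expected (uncommented) settings are
# missing from a Horizon local_settings file; fail if any are missing.
# The patterns mirror the sed edits in this guide; adjust them if needed.
check_settings() {
    missing=0
    for pattern in \
        '^OPENSTACK_HOST = "controller"' \
        "^SESSION_ENGINE = 'django.contrib.sessions.backends.cache'" \
        "^ *'LOCATION': 'controller:11211'" \
        '^OPENSTACK_KEYSTONE_URL = .*/v3"' \
        '^OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True' \
        '^OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"' \
        '^OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"'
    do
        if ! grep -q "$pattern" "$1"; then
            echo "missing: $pattern"
            missing=$((missing + 1))
        fi
    done
    [ "$missing" -eq 0 ]
}
```

Run it as `check_settings /etc/openstack-dashboard/local_settings`. If something is reported missing, `sed -n '125,140p' /etc/openstack-dashboard/local_settings` shows whether the line-number edits landed where intended.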

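The dashboard is reached at the controller node's IP address. With the CentOS packages the path is /dashboard, e.g. http://10.0.0.10/dashboard. The username and password are both admin. Because multi-domain support was enabled above, the login form also asks for a domain: use default.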

At this point, the dashboard service is deployed!

Deploy the Cinder block storage service (controller, storage)

[root@controller ~]# mysql -uroot -p123123 -e "CREATE DATABASE cinder;"

[root@controller ~]# mysql -uroot -p123123 -e "

> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \

> IDENTIFIED BY 'CINDER_DBPASS'; \

> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \

> IDENTIFIED BY 'CINDER_DBPASS';"
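Every service database in this guide follows the same pattern: a database, a user and a password named after the service, with grants from localhost and from any host ('%'). The sketch below is a hypothetical helper that prints that SQL for any service so it can be piped into mysql. `IF NOT EXISTS` is an addition here so that re-running the helper does not fail.

```shell
#!/bin/sh
# service_db_sql NAME PASSWORD  (hypothetical helper)
# Print the CREATE DATABASE / GRANT statements used above for one service.
service_db_sql() {
    cat <<EOF
CREATE DATABASE IF NOT EXISTS $1;
GRANT ALL PRIVILEGES ON $1.* TO '$1'@'localhost' IDENTIFIED BY '$2';
GRANT ALL PRIVILEGES ON $1.* TO '$1'@'%' IDENTIFIED BY '$2';
EOF
}
```

For example, `service_db_sql cinder CINDER_DBPASS | mysql -uroot -p123123` should have the same effect as the two mysql commands above.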

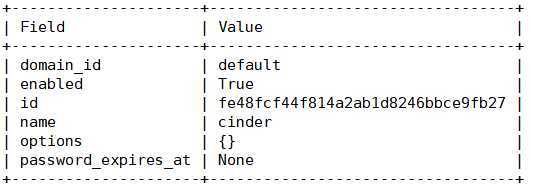

[root@controller ~]# openstack user create --domain default --password=cinder cinder

[root@controller ~]# openstack role add --project service --user cinder admin

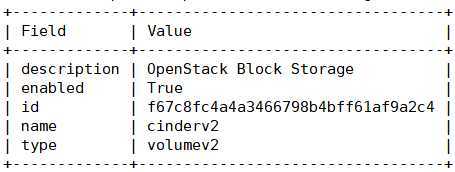

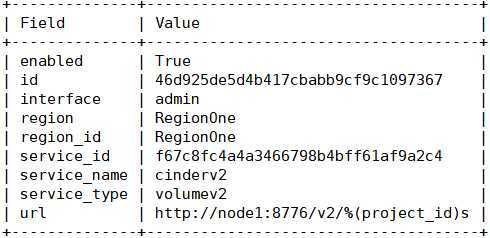

[root@controller ~]# openstack service create --name cinderv2 \

> --description "OpenStack Block Storage" volumev2

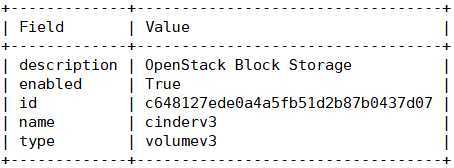

[root@controller ~]# openstack service create --name cinderv3 \

> --description "OpenStack Block Storage" volumev3

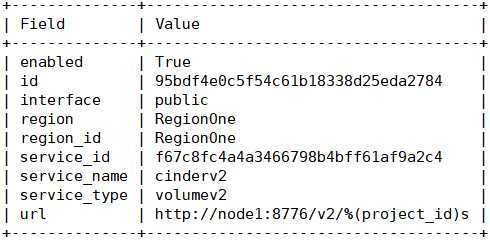

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev2 public http://controller:8776/v2/%\(project_id\)s

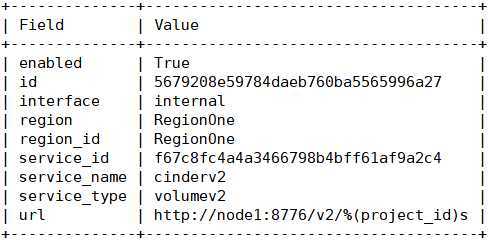

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev2 internal http://controller:8776/v2/%\(project_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev2 admin http://controller:8776/v2/%\(project_id\)s

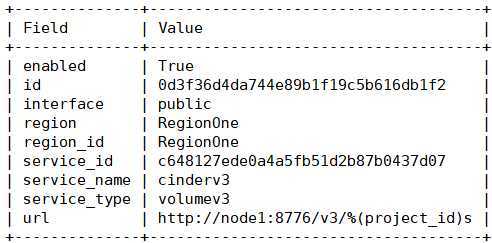

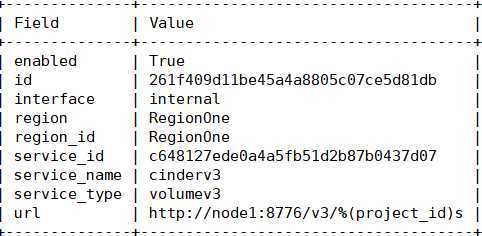

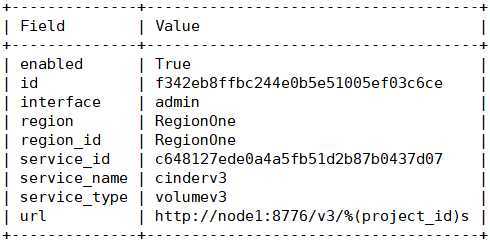

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev3 public http://controller:8776/v3/%\(project_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev3 internal http://controller:8776/v3/%\(project_id\)s

[root@controller ~]# openstack endpoint create --region RegionOne \

> volumev3 admin http://controller:8776/v3/%\(project_id\)s
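The six endpoint commands differ only in the API version and the interface. The sketch below expresses them as a loop, assuming the same admin credentials that the commands above use are loaded. Because the URL is quoted, the `\(` `\)` escapes are no longer needed. Setting `DRY_RUN=1` only prints the commands. The function name is illustrative.

```shell
#!/bin/sh
# create_cinder_endpoints: create volumev2/volumev3 x public/internal/admin
# endpoints. With DRY_RUN=1 the commands are printed instead of executed.
create_cinder_endpoints() {
    for ver in v2 v3; do
        for iface in public internal admin; do
            set -- openstack endpoint create --region RegionOne \
                "volume$ver" "$iface" "http://controller:8776/$ver/%(project_id)s"
            if [ "${DRY_RUN:-0}" = 1 ]; then
                echo "$*"
            else
                "$@" || return 1
            fi
        done
    done
}
```

Running `DRY_RUN=1 create_cinder_endpoints` first is a cheap way to review the six commands before creating anything.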

[root@controller ~]# yum -y install openstack-cinder

[root@controller ~]# cp /etc/cinder/cinder.conf{,.bak}

[root@controller ~]# tee /etc/cinder/cinder.conf <<-'EOF'

> [DEFAULT]

> my_ip = 10.0.0.10

> #glance_api_servers = http://controller:9292

> auth_strategy = keystone

> #enabled_backends = lvm

> transport_url = rabbit://openstack:openstack@controller

> [backend]

> [barbican]

> [brcd_fabric_example]

> [cisco_fabric_example]

> [coordination]

> [cors]

> [cors.subdomain]

> [database]

> connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

> [fc-zone-manager]

> [healthcheck]

> [key_manager]

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = cinder

> password = cinder

> [matchmaker_redis]

> [oslo_concurrency]

> lock_path = /var/lib/cinder/tmp

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_middleware]

> [oslo_policy]

> [oslo_reports]

> [oslo_versionedobjects]

> [profiler]

> [ssl]

> [lvm]

> #volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

> #volume_group = cinder-vg

> #volumes_dir = $state_path/volumes

> #iscsi_protocol = iscsi

> #iscsi_helper = lioadm

> #iscsi_ip_address = 10.0.0.10

> EOF
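Each config file in this guide is written with `tee FILE <<-'EOF'`, and the delimiter must be quoted with plain ASCII apostrophes. Quoting stops the shell from expanding `$`-expressions in the body, so text such as `$state_path/volumes` reaches cinder.conf unchanged, where oslo.config interpolates it later. `<<-` additionally strips leading tab characters. A small demonstration follows; `demo_heredoc` is illustrative only.

```shell
#!/bin/sh
# demo_heredoc DIR: write the same line through a quoted and an unquoted
# here-document delimiter into DIR/quoted.conf and DIR/unquoted.conf.
demo_heredoc() {
    unset state_path   # make sure no shell variable of that name is set
    cat > "$1/quoted.conf" <<'EOF'
volumes_dir = $state_path/volumes
EOF
    cat > "$1/unquoted.conf" <<EOF
volumes_dir = $state_path/volumes
EOF
}
```

After `demo_heredoc /tmp`, /tmp/quoted.conf keeps `$state_path/volumes` literally. /tmp/unquoted.conf contains `volumes_dir = /volumes` because the shell expanded the empty variable.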

[root@controller ~]# su -s /bin/sh -c "cinder-manage db sync" cinder

Option "logdir" from group "DEFAULT" is deprecated. Use option "log-dir" from group "DEFAULT".    # deprecation warning only; it can be ignored

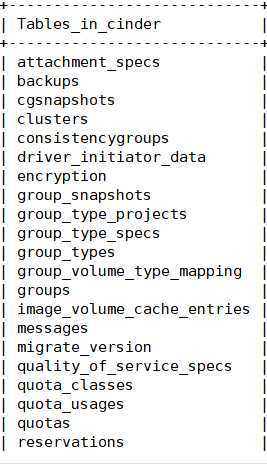

[root@controller ~]# mysql -uroot -p123123 -e "use cinder;show tables;"

[root@controller ~]# sed -i '/\[cinder\]/a os_region_name = RegionOne' /etc/nova/nova.conf

[root@compute ~]# sed -i '/\[cinder\]/a os_region_name = RegionOne' /etc/nova/nova.conf

[root@compute ~]# systemctl restart openstack-nova-compute.service

[root@controller ~]# systemctl restart openstack-nova-api.service

[root@controller ~]# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

[root@controller ~]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

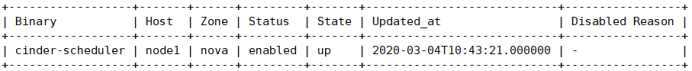

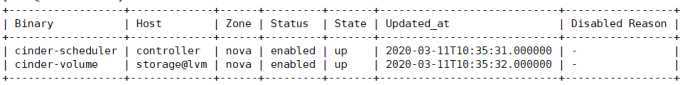

[root@controller ~]# cinder service-list
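The State column of `cinder service-list` should read `up` for every service. The sketch below parses the table output, finds the State column by its header name, and reports any service that is not up. `services_down` is a hypothetical helper, and it assumes the usual `|`-separated table layout.

```shell
#!/bin/sh
# services_down: read `cinder service-list` table output on stdin, print each
# service whose State is not "up", and fail if any were found or if no
# service rows were seen.
services_down() {
    awk -F'|' '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        /^[+]/ { next }                        # table borders
        !/^[|]/ { next }                       # warnings or other non-table lines
        state_col == 0 {                       # first table row is the header
            for (i = 2; i < NF; i++) {
                h = trim($i)
                if (h == "State") state_col = i
                if (h == "Binary") bin_col = i
                if (h == "Host") host_col = i
            }
            next
        }
        {
            rows++
            if (trim($state_col) != "up") {
                print trim($bin_col) "@" trim($host_col) " is " trim($state_col)
                bad++
            }
        }
        END { exit (rows == 0 || bad > 0) ? 1 : 0 }
    '
}
```

Typical use: `cinder service-list | services_down`. Once the storage node is added, the same check should also cover the cinder-volume service on host storage@lvm.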

At this point, Cinder on the controller node is deployed!

Deploy the Cinder service on the storage node

[root@storage ~]# yum install lvm2 -y

[root@storage ~]# systemctl start lvm2-lvmetad.service

[root@storage ~]# systemctl enable lvm2-lvmetad.service

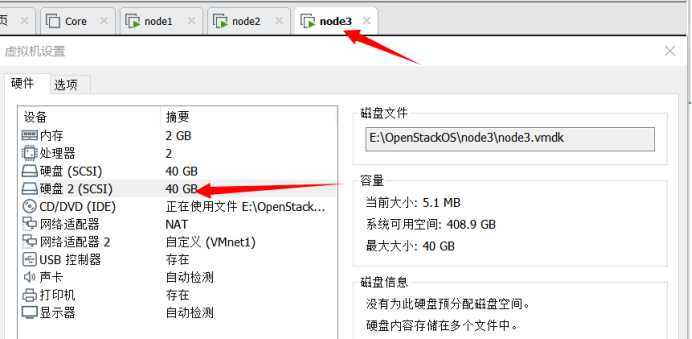

Open VMware --> right-click the storage VM and choose Settings --> click Add --> add a hard disk of type SCSI, 40 GB in size

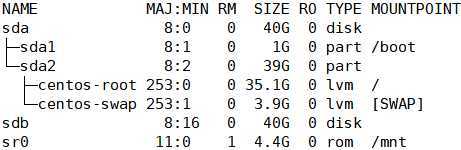

[root@storage ~]# lsblk

[root@storage ~]# pvcreate /dev/sdb

Physical volume "/dev/sdb" successfully created.

[root@storage ~]# vgcreate cinder-volumes /dev/sdb

Volume group "cinder-volumes" successfully created

[root@storage ~]# cp /etc/lvm/lvm.conf{,.bak}

[root@storage ~]# sed -i '/devices {/a filter = [ "a/sdb/", "r/.*/"]' /etc/lvm/lvm.conf
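This filter accepts only /dev/sdb and rejects every other device. The OpenStack installation guide notes that if the storage node's operating system disk also uses LVM, that disk must be accepted too. The default CentOS 7 layout does use LVM, but whether it applies to your storage node is an assumption to check with `pvs`. An example filter would be `filter = [ "a/sda/", "a/sdb/", "r/.*/"]`. The sketch below builds such a line for any list of devices. `lvm_filter` is a hypothetical helper, and sda/sdb are the device names used in this guide.

```shell
#!/bin/sh
# lvm_filter DEV...: print an lvm.conf "filter" line that accepts the listed
# devices (e.g. sda sdb) and rejects everything else.
lvm_filter() {
    line='filter = ['
    for dev in "$@"; do
        line="$line \"a/$dev/\","
    done
    printf '%s "r/.*/"]\n' "$line"
}
```

For example, `sed -i "/devices {/a $(lvm_filter sda sdb)" /etc/lvm/lvm.conf` would insert a filter that keeps the system disk visible.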

First configure the yum repositories. For the procedure, see: https://www.cnblogs.com/guarding/p/12321702.html

[root@storage ~]# yum install openstack-cinder targetcli python-keystone -y

If the installation still fails after the yum repositories are configured, run the following four commands and try again:

[root@storage ~]# yum -y install libtommath

[root@storage ~]# mkdir /cinder

[root@storage ~]# wget -O /cinder/libtomcrypt-1.17-33.20170623gitcd6e602.el7.x86_64.rpm https://cbs.centos.org/kojifiles/packages/libtomcrypt/1.17/33.20170623gitcd6e602.el7/x86_64/libtomcrypt-1.17-33.20170623gitcd6e602.el7.x86_64.rpm

[root@storage ~]# rpm -ivh /cinder/libtomcrypt-1.17-33.20170623gitcd6e602.el7.x86_64.rpm

[root@storage ~]# yum install openstack-cinder targetcli python-keystone -y

[root@storage ~]# cp /etc/cinder/cinder.conf{,.bak}

[root@storage ~]# tee /etc/cinder/cinder.conf <<-'EOF'

> [DEFAULT]

> my_ip = 192.168.0.30

> glance_api_servers = http://controller:9292

> auth_strategy = keystone

> enabled_backends = lvm

> transport_url = rabbit://openstack:openstack@controller

> [backend]

> [barbican]

> [brcd_fabric_example]

> [cisco_fabric_example]

> [coordination]

> [cors]

> [cors.subdomain]

> [database]

> connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

> [fc-zone-manager]

> [healthcheck]

> [key_manager]

> [keystone_authtoken]

> auth_uri = http://controller:5000

> auth_url = http://controller:35357

> memcached_servers = controller:11211

> auth_type = password

> project_domain_name = default

> user_domain_name = default

> project_name = service

> username = cinder

> password = cinder

> [matchmaker_redis]

> [oslo_concurrency]

> lock_path = /var/lib/cinder/tmp

> [oslo_messaging_amqp]

> [oslo_messaging_kafka]

> [oslo_messaging_notifications]

> [oslo_messaging_rabbit]

> [oslo_messaging_zmq]

> [oslo_middleware]

> [oslo_policy]

> [oslo_reports]

> [oslo_versionedobjects]

> [profiler]

> [ssl]

> [lvm]

> volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

> volume_group = cinder-volumes

> #volumes_dir = $state_path/volumes

> iscsi_protocol = iscsi

> iscsi_helper = lioadm

> #iscsi_ip_address = 192.168.0.30

> EOF
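A common source of errors is a value that should be identical on both nodes but differs between the controller's and the storage node's cinder.conf, such as the message-queue URL, the database connection or the Keystone password. The sketch below compares selected keys across two files. It assumes each key appears only once per file, which holds for the files written in this guide. It is section-agnostic, and the helper names are hypothetical.

```shell
#!/bin/sh
# first_value FILE KEY: value of the first uncommented "KEY = value" line.
first_value() {
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*//p" "$1" | head -n 1
}

# compare_keys FILE1 FILE2 KEY...: report keys whose values differ; fail if any do.
compare_keys() {
    f1=$1; f2=$2; shift 2
    rc=0
    for key in "$@"; do
        v1=$(first_value "$f1" "$key")
        v2=$(first_value "$f2" "$key")
        if [ "$v1" != "$v2" ]; then
            echo "$key differs: '$v1' vs '$v2'"
            rc=1
        fi
    done
    return $rc
}
```

For example, after `scp controller:/etc/cinder/cinder.conf /tmp/controller-cinder.conf` on the storage node, run `compare_keys /tmp/controller-cinder.conf /etc/cinder/cinder.conf transport_url connection auth_uri auth_url memcached_servers password`. my_ip is expected to differ between the two nodes and is left out.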

[root@storage ~]# systemctl enable openstack-cinder-volume.service target.service

[root@storage ~]# systemctl start openstack-cinder-volume.service target.service

[root@controller ~]# cinder service-list

At this point, the Cinder service is deployed!

At this point, all the basic OpenStack components are deployed!

Original article: https://www.cnblogs.com/guarding/p/12539801.html