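A regular expression (often abbreviated regex, regexp, or RE) is a concept from computer science: a single string that describes and matches a whole set of strings conforming to some syntactic rule. Many text editors use regular expressions to search for and replace text that fits a pattern. In short, a regular expression uses special characters to match specific text so that data can be extracted from it.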

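Greedy matching: match as much as possible while the overall pattern still succeeds. (.+, .?, .* ...)

Non-greedy matching: match as little as possible while the overall pattern still succeeds. (.+?, .??, .*? ...)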
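To make the difference concrete, here is a minimal sketch that runs the same pattern in its greedy and non-greedy form. The sample string `html` is made up for illustration.

```python
import re

html = '<b>one</b><b>two</b>'  # made-up sample text

# Greedy: .* runs on to the last '</b>' it can reach.
greedy = re.findall(r'<b>(.*)</b>', html)

# Non-greedy: .*? stops at the first '</b>' that lets the pattern succeed.
lazy = re.findall(r'<b>(.*?)</b>', html)

print(greedy)  # ['one</b><b>two']
print(lazy)    # ['one', 'two']
```

When extracting fields from HTML, the non-greedy form is usually the one you want, which is why the patterns later in this post use `(.*?)` throughout.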

Groups are used to extract specific parts of the text that has already been matched.

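import re

st = '<a href="/list,cjpl.html" class="balink">财经评论吧</a>'  # string to match

# Named group: the first group is named "name", and (?P=name) later refers back to it.
pa = r'<(?P<name>\w+) href="(.*?)" class="balink">(.*?)</(?P=name)>'  # regular expression
print(re.findall(pa, st))

# Second way to reference a group: by number.
# \1 is itself wrapped in a group here, so it also shows up in the result.
pa = r'<(\w+) href="(.*?)" class="balink">(.*?)</(\1)>'  # regular expression
print(re.findall(pa, st))

# (?:...) is a non-capturing group: it is neither returned nor numbered
# (typically used for groups that are not named or referenced).
# The tag name is no longer captured, so \1 now refers to the href group and nothing matches.
pa = r'<(?:\w+) href="(.*?)" class="balink">(.*?)</(\1)>'  # regular expression
print(re.findall(pa, st))

pa = r'<(?:\w+) href="(.*?)" class="balink">(?:.*?)</(\w+)>'  # regular expression
print(re.findall(pa, st))

# Output
# [('a', '/list,cjpl.html', '财经评论吧')]
# [('a', '/list,cjpl.html', '财经评论吧', 'a')]
# []
# [('/list,cjpl.html', 'a')]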

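- Non-negative integer: ^(?:[1-9]\d*|0)$

- Positive integer: ^[1-9]\d*$

- Phone number (11-digit mobile number): ^1[3-9]\d{9}$

- Date such as 1998-08-8: ^[1-9]\d{3}-(1[012]|0?[1-9])-([12]\d|0?[1-9]|3[01])$

- Advanced version, which accepts any non-digit separator as long as the same one is used both times: ^[1-9]\d{3}(?P<tu>\D+)(1[012]|0?[1-9])(?P=tu)([12]\d|0?[1-9]|3[01])$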

- ```
  import re

  st = '1998+1+1'
  rs = re.search(r'^[1-9]\d{3}(?P<tu>[^\d])(1[012]|0?[1-9])(?P=tu)([12]\d|0?[1-9]|3[01])', st)
  print(rs.group())

  # Output
  # 1998+1+1
  ```

- Email address: ^[-\w.]+@([a-zA-Z\d.-]+\.)+[a-zA-Z\d]{2,6}$

- Chinese characters: [\u4e00-\u9fa5]+
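Patterns like these are typically used to validate a whole input string. The sketch below shows one way to do that with `fullmatch`, which succeeds only when the entire string matches. The names `PATTERNS` and `is_valid` are hypothetical helpers introduced for illustration, not part of the `re` module.

```python
import re

# Patterns from the list above, compiled once for reuse.
# PATTERNS and is_valid are illustrative names, not part of the re module.
PATTERNS = {
    'phone': re.compile(r'1[3-9]\d{9}'),
    'email': re.compile(r'[-\w.]+@([a-zA-Z\d.-]+\.)+[a-zA-Z\d]{2,6}'),
    'chinese': re.compile(r'[\u4e00-\u9fa5]+'),
}


def is_valid(kind, text):
    """Return True when the whole of `text` matches the pattern named `kind`."""
    return PATTERNS[kind].fullmatch(text) is not None


print(is_valid('phone', '13812345678'))       # True
print(is_valid('phone', '12812345678'))       # False: second digit must be 3-9
print(is_valid('email', 'user@example.com'))  # True
print(is_valid('chinese', '财经评论吧'))        # True
```

Because `fullmatch` anchors both ends itself, the `^` and `$` anchors can be left out of the compiled patterns.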

The re module is the standard Python module for working with regular expressions.

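pattern = re.compile(
    r'regular expression',
    flags,  # matching mode; optional. By default the pattern is matched exactly as written.
)

Matching modes are the flag constants of the re module, such as re.I (ignore case), re.M (multi-line) and re.S (the dot also matches newlines).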
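As a rough illustration of how the matching mode changes the result, the following sketch applies three commonly used flags to a made-up two-line string. The variable names are arbitrary.

```python
import re

text = 'Hello\nworld'  # made-up sample text

# re.I: ignore case.
ignore_case = re.findall(r'hello', text, re.I)

# re.S: let '.' match newlines as well.
without_dotall = re.findall(r'Hello.world', text)
with_dotall = re.findall(r'Hello.world', text, re.S)

# re.M: '^' and '$' match at the start and end of every line.
per_line = re.findall(r'^\w+$', text, re.M)

# Flags can be combined with |.
combined = re.compile(r'^hello.world$', re.I | re.S)

print(ignore_case)                  # ['Hello']
print(without_dotall)               # []
print(with_dotall)                  # ['Hello\nworld']
print(per_line)                     # ['Hello', 'world']
print(bool(combined.match(text)))   # True
```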

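match_obj = re.match(
    pattern,  # regular expression
    string,   # target string to match against
    flags,    # matching mode (optional)
)
# re.match only matches at the beginning of the string.
# Start and end positions are accepted only by the method of a compiled pattern:
# compiled.match(string, pos, endpos)

s = re.match(r'\d\d\d', 'qwe123rty')
print(s)
s = re.match(r'\d\d\d', '123rty')
print(s)          # match object
print(s.group())  # the matched text
print(s.span())   # the position of the matched text

# None
# <re.Match object; span=(0, 3), match='123'>  (Python 3.7+; older versions show _sre.SRE_Match)
# 123
# (0, 3)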
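The optional start and end positions belong to the methods of a compiled pattern, not to the module-level functions. A minimal sketch, reusing the sample string from above (`digits` is just an illustrative variable name):

```python
import re

digits = re.compile(r'\d\d\d')
s = 'qwe123rty'

no_match = digits.match(s)       # None: the string starts with 'q'
m = digits.match(s, 3)           # start matching at index 3
cut_off = digits.match(s, 3, 5)  # None: only '12' is visible before endpos=5

print(no_match)
print(m.group(), m.span())       # 123 (3, 6)
print(cut_off)
```

The same `pos` and `endpos` arguments are accepted by the compiled pattern's `search`, `findall` and `finditer` methods.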

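match_obj = re.search(
    pattern,  # regular expression
    string,   # target string to match against
    flags,    # matching mode (optional)
)
# re.search scans the whole string and returns the first match, wherever it is.
# Start and end positions are accepted only by the method of a compiled pattern:
# compiled.search(string, pos, endpos)

s = re.search(r'\d\d\d', 'qwe123rty')
print(s)
print(s.group())
print(s.span())

# <re.Match object; span=(3, 6), match='123'>  (Python 3.7+; older versions show _sre.SRE_Match)
# 123
# (3, 6)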

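result_list = re.findall(
    pattern,  # regular expression
    string,   # target string to match against
    flags,    # matching mode (optional)
)
# Start and end positions are accepted only by the method of a compiled pattern:
# compiled.findall(string, pos, endpos)

s = 'qweor98e810243h8-0u0d1023-104321'
ret = re.findall(r'10(\d)', s)
print(ret)  # when the pattern contains a group, only the group contents are returned
ret = re.findall(r'10\d', s)
print(ret)

# ['2', '2', '4']
# ['102', '102', '104']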

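iterator = re.finditer(
    pattern,  # regular expression
    string,   # target string to match against
    flags,    # matching mode (optional)
)
# Start and end positions are accepted only by the method of a compiled pattern:
# compiled.finditer(string, pos, endpos)

s = re.finditer(r'[a-z]+', 'qwer1234zxcv')
print(next(s).group())
print(next(s).group())

# qwer
# zxcv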
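Calling `next()` by hand raises `StopIteration` once the matches run out, so in practice the iterator is usually consumed with a loop or a comprehension. A small sketch using the same sample string (`matches` is an arbitrary name):

```python
import re

# Each item yielded by finditer is a match object, so both the text and
# its position are available.
matches = [(m.group(), m.span()) for m in re.finditer(r'[a-z]+', 'qwer1234zxcv')]

for text, span in matches:
    print(text, span)
# qwer (0, 4)
# zxcv (8, 12)
```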

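new_string = re.sub(
    pattern,  # regular expression
    repl,     # what each match is replaced with (a string or a function)
    string,   # the string in which to replace
    count,    # maximum number of replacements; optional, all matches are replaced by default
)

s = 'qwer\n\t 123 9'
res = re.sub(r'\s+', '', s)
print(res)

# qwer1239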

# Add 1000 to every number in s
import re

s = 'zs:1000,ls:3000'


def func(m):
    # m is the match object for one number; the return value replaces it
    num = m.group()
    return str(int(num) + 1000)


s = re.sub(r'\d+', func, s)
print(s)

# zs:2000,ls:4000
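Two further options of `re.sub` are worth a quick sketch: limiting the number of replacements with `count`, and referring to named groups inside a replacement string with `\g<name>`. The group names `name` and `num` below are chosen for illustration.

```python
import re

s = 'zs:1000,ls:3000'

# count=1 replaces only the first match.
first_only = re.sub(r'\d+', '0', s, count=1)

# \g<name> in the replacement string refers to a named group of the pattern.
swapped = re.sub(r'(?P<name>\w+):(?P<num>\d+)', r'\g<num>:\g<name>', s)

print(first_only)  # zs:0,ls:3000
print(swapped)     # 1000:zs,3000:ls
```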

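parts = re.split(
    pattern,   # regular expression
    string,    # the string to split
    maxsplit,  # maximum number of splits; optional, splits at every match by default
)

s = re.split(r'\d\d\d', 'qwe123rty')
print(s)
# With groups in the pattern, the string is still split on the whole match,
# and the group contents are kept in the result.
s = re.split(r'(\d)(\d)(\d)', 'qwe123rty')
print(s)

# ['qwe', 'rty']
# ['qwe', '1', '2', '3', 'rty']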
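The effect of `maxsplit` can be sketched with a made-up string:

```python
import re

s = 'a1b2c3d'  # made-up sample text

everywhere = re.split(r'\d', s)         # split at every digit
once = re.split(r'\d', s, maxsplit=1)   # split at the first digit only

print(everywhere)  # ['a', 'b', 'c', 'd']
print(once)        # ['a', 'b2c3d']
```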

# Example: crawl the Maoyan board pages with a thread pool, parse them with a regex,
# and save the result as one JSON object per line.
from concurrent.futures import ThreadPoolExecutor
import re
import requests
from queue import PriorityQueue
from queue import Empty
import os
import json


class Maoyan():
    def __init__(self):
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36'
        }
        self.base_url = 'https://maoyan.com/board/4?offset={}'
        self.Quene = PriorityQueue()  # ordered by rank, so the saved file comes out sorted
        self.host = 'https://maoyan.com'
        self.path = './maoyan'

    def get_html(self, url):
        res = requests.get(url, headers=self.headers)
        res.encoding = res.apparent_encoding
        self.parse_content(res.text)

    def get_url(self):
        # Ten pages: offset 0, 10, ..., 90
        return [self.base_url.format(i * 10) for i in range(10)]

    def parse_content(self, content):
        # re.S lets '.' match newlines, so one pattern can span a whole <dd> block
        res = re.findall(
            r'<dd>.*?board-index-.*?">(.*?)</i>.*?<a\s+href="(.*?)"\s+title="(.*?)".*?主演:(.*?)</p>.*?上映时间:(.*?)</p>.*?integer">(.*?)</i>.*?fraction">(.*?)</i>',
            content, re.S)
        for item in res:
            dic = {
                'rank': int(item[0]),
                'url': self.host + item[1],
                'title': item[2],
                'star': re.sub(r'\s+', '', item[3]),
                'releasetime': item[4],
                'score': item[-2] + item[-1]  # integer part + fractional part
            }
            self.Quene.put((dic.get('rank'), dic))

    def save_file(self):
        if not os.path.exists(self.path):
            os.mkdir(self.path)
        filename = os.path.join(self.path, '猫眼.json')
        with open(filename, 'w', encoding='utf-8') as file:
            while True:
                try:
                    json.dump(self.Quene.get_nowait()[1], file, ensure_ascii=False)
                    file.write('\n')
                except Empty:
                    break

    def run(self):
        t_list = []
        tp = ThreadPoolExecutor(10)
        for i in self.get_url():
            s = tp.submit(self.get_html, i)
            t_list.append(s)
        # Wait for every page to be fetched and parsed before saving
        for i in t_list:
            i.result()
        self.save_file()


if __name__ == '__main__':
    my = Maoyan()
    my.run()

# Example: crawl the Eastmoney Guba listing pages with one thread per page,
# parse them with a compiled regex, and save the result as one JSON object per line.
from threading import Thread
import re
import requests
from queue import Queue, Empty
import os
import json


class Guba(object):
    def __init__(self, path='./'):
        self.headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36'
        }
        self.base_url = 'http://guba.eastmoney.com/default,99_{}.html'
        self.path = path
        self.Quene = Queue()

    def get_html(self, url):
        res = requests.get(url, headers=self.headers)
        res.encoding = res.apparent_encoding
        self.parse_content(res.text)

    def parse_content(self, content):
        # re.S lets '.' match newlines, so one pattern can span a whole <li> block
        p = re.compile(
            r'<li>\s+<cite>(.*?)</cite>\s+<cite>(.*?)</cite>.*?<span class="sub">.*?balink">(.*?)</a>.*?note">(.*?)</a></span>.*?<cite class="last">(.*?)</cite>',
            re.S)
        res = p.findall(content)
        for item in res:
            dic = {
                'read': int(re.sub(r'\s+', '', item[0])),
                'comment': int(re.sub(r'\s+', '', item[1])),
                'name': item[2],
                'title': item[3],
                'time': item[-1]
            }
            self.Quene.put(dic)

    def get_url(self):
        # Pages 1 to 12
        return [self.base_url.format(i) for i in range(1, 13)]

    def save_file(self):
        if not os.path.exists(self.path):
            os.mkdir(self.path)
        filename = os.path.join(self.path, '股吧.json')
        with open(filename, 'w', encoding='utf-8') as file:
            while True:
                try:
                    js = self.Quene.get_nowait()
                    print(js)
                    json.dump(js, file, ensure_ascii=False)
                    file.write('\n')
                except Empty:
                    break

    def run(self):
        th_list = []
        for url in self.get_url():
            th = Thread(target=self.get_html, args=(url,))
            th.start()
            th_list.append(th)
        # Wait for every page to be fetched and parsed before saving
        for i in th_list:
            i.join()
        r_h = Thread(target=self.save_file,)
        r_h.start()


if __name__ == '__main__':
    gb = Guba()
    gb.run()
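Long extraction patterns like the ones in these two crawlers are easier to debug offline against a saved snippet before any request is sent. The sketch below shows the idea with named groups and `groupdict()`. The HTML fragment is hypothetical and much simpler than the real pages, and the pattern is a cut-down variant written for this fragment only.

```python
import re

# Hypothetical fragment for illustration; the real page markup may differ.
html = '''
<li>
  <cite>120</cite>
  <cite>5</cite>
  <a class="note">First post</a>
</li>
<li>
  <cite>98</cite>
  <cite>0</cite>
  <a class="note">Second post</a>
</li>
'''

# Named groups turn each match into a ready-made dict via groupdict().
row = re.compile(
    r'<li>\s+<cite>(?P<read>\d+)</cite>\s+<cite>(?P<comment>\d+)</cite>'
    r'.*?note">(?P<title>.*?)</a>',
    re.S,
)

rows = [m.groupdict() for m in row.finditer(html)]
for r in rows:
    print(r)
```

Named groups also remove the need to index the result tuple by position (`item[0]`, `item[-1]`), which is easy to get wrong when a pattern has many groups.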

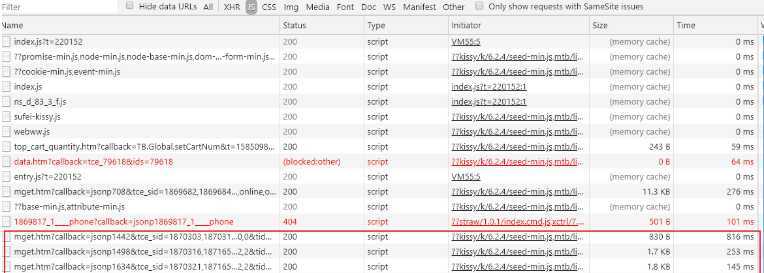

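Analysis shows that the product data on Taobao's electronics channel (淘宝电场) is all rendered from JS requests. Here I pick just one of those URLs and extract the product data from it.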

import requests
import re
import json


def get_content():
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36'
    }
    url = 'https://tce.taobao.com/api/mget.htm?callback=jsonp1495&tce_sid=1870256,1870299&tce_vid=0,1&tid=,&tab=,&topic=,&count=,&env=online,online'
    res = requests.get(url, headers=headers)
    # The response is JSONP: strip the callback wrapper and keep the JSON inside the parentheses
    json_str = re.findall(r'window\.jsonp1495&&jsonp1495\((.*)\)', res.text, re.S)[0].strip()
    # json.loads no longer accepts an encoding argument (removed in Python 3.9)
    dic_ = json.loads(json_str)
    return dic_['result']['1870299']['result']


def write_file(content):
    with open('./goods.json', 'w', encoding='utf-8') as file:
        for item in content:
            # Drop the internal field if present; pop avoids a KeyError when it is missing
            item.pop('_sys_point_local', None)
            json.dump(item, file, ensure_ascii=False)
            file.write('\n')
            print(item)


if __name__ == '__main__':
    content = get_content()
    write_file(content)
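The JSONP unwrapping step can be tried without touching the network. The following is a minimal sketch: `strip_jsonp` is a hypothetical helper, and `sample` is a made-up response body shaped like the one handled above, not real Taobao data.

```python
import json
import re


def strip_jsonp(text):
    """Return the parsed JSON payload of a JSONP response such as 'cb({...})'.

    strip_jsonp is an illustrative helper, not part of any library.
    """
    # Greedy (.*) spans from the first '(' to the last ')'.
    m = re.search(r'\((.*)\)', text, re.S)
    if m is None:
        raise ValueError('no JSONP wrapper found')
    return json.loads(m.group(1))


# Made-up response body for illustration.
sample = 'window.jsonp1495&&jsonp1495({"result": {"1870299": {"result": [{"id": 1}]}}})'
data = strip_jsonp(sample)
print(data['result']['1870299']['result'])  # [{'id': 1}]
```

The greedy `(.*)` is deliberate here: the payload itself may contain parentheses, so the match has to run to the final closing one.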

Original: https://www.cnblogs.com/hjnzs/p/12582604.html