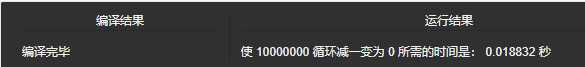

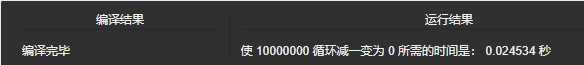

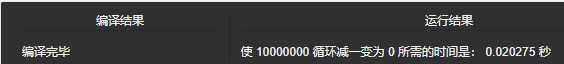

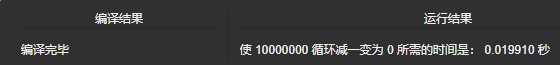

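1. `clock()` is the standard C/C++ timing function, and its associated data type is `clock_t`. It returns the processor time the program has used so far, in clock ticks, so the difference between two calls gives the time spent running the code between them. The program below measures how long it takes to decrement n from 10000000 down to 0.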

Note: the measured time may differ from run to run.

```cuda
#include "cuda_runtime.h"
#include "device_launch_parameters.h"
#include <stdio.h>
#include <time.h>

int main()
{
    // Test how clock_t is used
    clock_t start, end;
    // volatile stops the compiler from optimizing the empty loop away
    volatile long n = 10000000L;
    double duration;
    printf("Time needed to decrement %ld to 0 in a loop:", n);
    // Start time
    start = clock();
    // Decrement in a loop
    while (n--);
    // End time
    end = clock();
    // Elapsed time = end time - start time (in clock ticks).
    // CLOCKS_PER_SEC is a constant defined in <time.h> giving the number
    // of clock ticks per second (a tick is not necessarily a millisecond).
    duration = (double)(end - start) / CLOCKS_PER_SEC;
    printf(" %f seconds\n", duration);
    return 0;
}
```

2. Suppose we have two arrays and need to add their corresponding elements pairwise, storing the results in a third array.

```cuda
// CUDA headers
#include "cuda_runtime.h"
#include "device_launch_parameters.h"
// C headers
#include <stdio.h>
#include <time.h>

#define N 6000
#define thread_num 1024

// GPU function declaration
__global__ void add(int* a, int* b, int* c);
// CPU function declaration
void add_CPU(int *a, int *b, int *c);

int main()
{
    // Timing with CUDA events (results in milliseconds)
    float time_CPU, time_GPU;
    cudaEvent_t start_GPU, stop_GPU, start_CPU, stop_CPU;
    // Timing with the host clock() (converted to milliseconds below)
    float time_cpu, time_gpu;
    clock_t start_cpu, stop_cpu, start_gpu, stop_gpu;
    int a[N], b[N], c[N], c_CPU[N];
    int *dev_a, *dev_b, *dev_c;

    // Enough blocks of thread_num threads to cover all N elements (ceiling division)
    int block_num;
    block_num = (N + thread_num - 1) / thread_num;

    // Allocate memory on the GPU
    cudaMalloc((void**)&dev_a, N * sizeof(int));
    cudaMalloc((void**)&dev_b, N * sizeof(int));
    cudaMalloc((void**)&dev_c, N * sizeof(int));

    // Initialize the inputs on the CPU
    for (int i = 0; i < N; i++)
    {
        a[i] = -i;
        b[i] = i * i;
    }

    // Create the events
    cudaEventCreate(&start_CPU);
    cudaEventCreate(&stop_CPU);
    // Record the current time
    cudaEventRecord(start_CPU, 0);
    start_cpu = clock();

    add_CPU(a, b, c_CPU);

    stop_cpu = clock();
    // Record the current time
    cudaEventRecord(stop_CPU, 0);
    // Wait for the stop event; everything recorded before it has then completed
    cudaEventSynchronize(stop_CPU);
    // Compute the elapsed time
    cudaEventElapsedTime(&time_CPU, start_CPU, stop_CPU);
    printf("The time for CPU:\t%f(ms)\n", time_CPU);

    // Destroy the events
    cudaEventDestroy(start_CPU);
    cudaEventDestroy(stop_CPU);

    // Print the CPU results
    printf("\nResult from CPU:\n");
    for (int i = 0; i < N; i++)
    {
        printf("CPU:\t%d+%d=%d\n", a[i], b[i], c_CPU[i]);
    }

    // GPU computation: copy the inputs from host to device
    cudaMemcpy(dev_a, a, N * sizeof(int), cudaMemcpyHostToDevice);
    cudaMemcpy(dev_b, b, N * sizeof(int), cudaMemcpyHostToDevice);

    // Create the events
    cudaEventCreate(&start_GPU);
    cudaEventCreate(&stop_GPU);

    // Record the current time
    cudaEventRecord(start_GPU, 0);
    start_gpu = clock();
    // Launch the kernel
    add<<<block_num, thread_num>>>(dev_a, dev_b, dev_c);
    // Record the current time
    cudaEventRecord(stop_GPU, 0);
    // Kernel launches are asynchronous: wait for the kernel (and the stop event)
    // to finish, otherwise clock() would only capture the launch overhead
    cudaEventSynchronize(stop_GPU);
    stop_gpu = clock();
    cudaEventElapsedTime(&time_GPU, start_GPU, stop_GPU);
    printf("\nThe time for GPU:\t%f(ms)\n", time_GPU);

    // Copy the GPU results from device back to host (one copy is enough)
    cudaMemcpy(c, dev_c, N * sizeof(int), cudaMemcpyDeviceToHost);

    // Print the GPU results
    printf("\nResult from GPU:\n");
    for (int i = 0; i < N; i++)
    {
        printf("GPU:\t%d+%d=%d\n", a[i], b[i], c[i]);
    }
    cudaEventDestroy(start_GPU);
    cudaEventDestroy(stop_GPU);

    // Free the device memory
    cudaFree(dev_a);
    cudaFree(dev_b);
    cudaFree(dev_c);
    printf("\nThe time for CPU by event:\t%f(ms)\n", time_CPU);
    printf("The time for GPU by event:\t%f(ms)\n", time_GPU);

    // clock() returns ticks: convert to milliseconds to match the event timings
    time_cpu = (float)(stop_cpu - start_cpu) * 1000.0f / CLOCKS_PER_SEC;
    time_gpu = (float)(stop_gpu - start_gpu) * 1000.0f / CLOCKS_PER_SEC;
    printf("\nThe time for CPU by host:\t%f(ms)\n", time_cpu);
    printf("The time for GPU by host:\t%f(ms)\n", time_gpu);

    return 0;
}

// GPU function
__global__ void add(int *a, int *b, int *c)
{
    // Global index of this thread = the element it processes
    int tid = blockIdx.x * blockDim.x + threadIdx.x;
    // The last block may contain more threads than remaining elements
    if (tid < N)
    {
        c[tid] = a[tid] + b[tid];
    }
}

// CPU function
void add_CPU(int *a, int *b, int *c)
{
    for (int i = 0; i < N; i++)
    {
        c[i] = a[i] + b[i];
    }
}
```

Original article: https://www.cnblogs.com/lin1216/p/12677841.html