RAID (Redundant Array of Independent Disks)

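RAID combines several physical disks into a single array that is larger and more fault-tolerant than any one disk. Depending on the RAID level, it splits data into chunks that are stored on different physical disks (striping), so reads and writes run in parallel and overall array performance improves, and/or it keeps copies or parity information of the data on other disks, so the data survives the failure of a disk.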

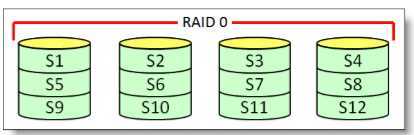

RAID 0

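RAID 0 combines two or more physical disks, in hardware or software, into one large array. Data is split into chunks that are written to the member disks in turn (striping). Ideally, read and write throughput grows roughly in proportion to the number of disks, but if any single disk fails, the data on the entire array is lost.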

RAID 0 effectively increases data throughput, but it provides no redundancy and no way to recover from a disk failure.
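The striping described above can be pictured with a small shell function that maps a logical chunk number to the member disk holding it. This is a simplified model, assuming equal-size member disks and plain round-robin placement of fixed-size chunks; `raid0_locate` is a hypothetical helper, not part of mdadm.

```bash
#!/usr/bin/env bash
# Toy model of RAID 0 striping: map a logical chunk number to the member disk
# that stores it and to the chunk's position on that disk.
# Assumes equal-size disks and plain round-robin placement (illustration only).

raid0_locate() {
    # $1 = logical chunk index (0-based), $2 = number of member disks
    local chunk=$1 ndisks=$2
    local disk=$(( chunk % ndisks ))     # chunks go to disk 0, 1, ..., N-1, then wrap around
    local offset=$(( chunk / ndisks ))   # which chunk slot on that disk
    echo "disk=$disk offset=$offset"
}

# Where the first six chunks of a file land on a two-disk RAID 0
for c in 0 1 2 3 4 5; do
    echo "chunk $c -> $(raid0_locate "$c" 2)"
done
```

Chunks 0, 2 and 4 land on disk 0 and chunks 1, 3 and 5 on disk 1, which is why both disks can work on one large read at the same time, and also why losing either disk leaves holes in every file.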

RAID 1

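RAID 1 binds two or more disks together and writes every piece of data to all of them at once, so each disk is a mirror (a complete copy) of the others. If one disk fails, no data is lost: the array keeps running on the remaining mirror, and the data is copied back once the failed disk is replaced. Data safety improves, but disk utilization drops, because the usable capacity equals that of a single disk (50% with two disks).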

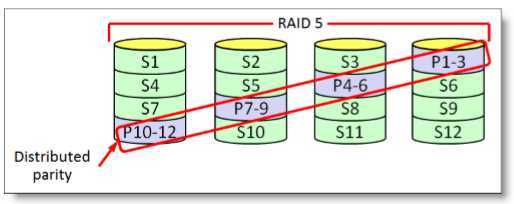
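A toy model of the RAID 1 behaviour described above, using ordinary files in a temporary directory as stand-ins for disks. `mirror_write` and `mirror_read` are hypothetical helpers for illustration only; real mirroring is done by the md driver at the block level.

```bash
#!/usr/bin/env bash
# Toy model of RAID 1: each write goes to every member "disk" (plain files here),
# so a single surviving member still holds all of the data.

mirror_write() {
    # $1 = data; remaining arguments = member "disk" files
    local data=$1 disk
    shift
    for disk in "$@"; do
        printf '%s' "$data" > "$disk"    # the same data is written to every member
    done
}

mirror_read() {
    # Read from the first member that still exists; fail if none are left
    local disk
    for disk in "$@"; do
        if [ -f "$disk" ]; then
            cat "$disk"
            return 0
        fi
    done
    return 1    # every member is gone: the data is lost
}

dir=$(mktemp -d)
mirror_write "hello raid1" "$dir/disk1" "$dir/disk2"
rm "$dir/disk1"                                  # simulate losing one member
mirror_read "$dir/disk1" "$dir/disk2"; echo      # prints: hello raid1
rm -rf "$dir"
```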

RAID 5

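RAID 5 stores parity information for the data on the other disks of the array. The parity is not kept on one dedicated disk: for each stripe it is written to one member, and this role rotates so that parity is spread across all the disks, always on a disk other than the ones holding the data it protects. As a result, the failure of any one disk does not destroy the data in the array. RAID 5 is normally built from at least three disks, and one disk's worth of capacity goes to parity.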

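RAID 5 does not keep a backup copy of the actual data. Instead, when a disk fails, the lost data is reconstructed from the remaining data and the parity information. Only one failed disk can be tolerated at a time.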

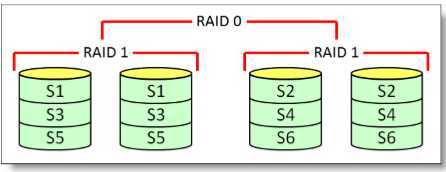
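The parity idea can be checked with plain integer XOR in the shell: the parity block is the XOR of the data blocks in a stripe, and any single missing block equals the XOR of everything that survives. This sketch models each block as one small integer and uses a hypothetical `xor_all` helper; the md driver does the same kind of computation over whole chunks.

```bash
#!/usr/bin/env bash
# Sketch of RAID 5 parity: parity = XOR of the data blocks in a stripe, and a
# missing block is rebuilt as the XOR of all surviving blocks (data + parity).
# Blocks are modelled as small integers purely for illustration.

xor_all() {
    # XOR together every argument
    local acc=0 v
    for v in "$@"; do
        acc=$(( acc ^ v ))
    done
    echo "$acc"
}

d0=165                          # 0xA5, data block on disk 0
d1=60                           # 0x3C, data block on disk 1
p=$(xor_all "$d0" "$d1")        # parity block for this stripe, stored on disk 2
echo "parity=$p"                # parity=153

# Disk 1 fails: rebuild its block from the surviving data block and the parity
rebuilt=$(xor_all "$d0" "$p")
echo "rebuilt d1=$rebuilt (original $d1)"
```

If two blocks of the same stripe are missing, the XOR of the survivors no longer determines either of them, which is why RAID 5 tolerates only one failed disk.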

RAID 10

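RAID 10 combines RAID 1 and RAID 0. It needs at least four disks: pairs of disks are first mirrored as RAID 1 for data safety, and the RAID 1 pairs are then striped together as RAID 0 for higher read and write speed. Half of the raw capacity is usable, and the array survives disk failures as long as no mirror pair loses both of its disks. (Linux md implements RAID 10 as a single level with its own layouts, such as the default near=2 seen later, but with four disks the effect is the same.) RAID 10 is widely used where both performance and reliability matter.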
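As a rough check on the capacity trade-offs of the levels above, the helper below computes usable space from the member count and member size. It is a sketch assuming equal-size members, metadata ignored, and the default two copies for RAID 10; `raid_usable_kb` is a hypothetical name. Fed the `Used Dev Size` of 20954112 KiB that mdadm reports later in this article (slightly under 20 GiB, presumably because mdadm reserves room for its metadata), it reproduces the reported `Array Size`.

```bash
#!/usr/bin/env bash
# Rough usable-capacity calculator for RAID 0/1/5/10 with equal-size members.
# Sizes are in KiB, the unit mdadm -D uses for "Array Size" and "Used Dev Size".

raid_usable_kb() {
    # $1 = RAID level (0, 1, 5, 10), $2 = active members, $3 = size per member in KiB
    local level=$1 n=$2 size=$3
    case $level in
        0)  echo $(( n * size )) ;;          # striping only, no redundancy
        1)  echo "$size" ;;                  # every member holds a full copy
        5)  echo $(( (n - 1) * size )) ;;    # one member's worth of space holds parity
        10) echo $(( n * size / 2 )) ;;      # two copies of every chunk (near=2 default)
        *)  echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

raid_usable_kb 10 4 20954112   # 41908224, the RAID 10 "Array Size" shown later
raid_usable_kb 5 3 20954112    # 41908224, the RAID 5 array (the spare is not counted)
```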

Deploying a Disk Array

1. Prepare four disks for a RAID 10 array

    [root@promote ~]# lsblk
    NAME          MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda             8:0    0   20G  0 disk
    ├─sda1          8:1    0    1G  0 part /boot
    └─sda2          8:2    0   19G  0 part
      ├─rhel-root 253:0    0   17G  0 lvm  /
      └─rhel-swap 253:1    0    2G  0 lvm  [SWAP]
    sdb             8:16   0   20G  0 disk
    sdc             8:32   0   20G  0 disk
    sdd             8:48   0   20G  0 disk
    sde             8:64   0   20G  0 disk
    sr0            11:0    1  4.2G  0 rom  /run/media/root/RHEL-7.6 Server.x86_64

2. Create a RAID 10 array named /dev/md0 with mdadm

    [root@promote ~]# mdadm -Cv /dev/md0 -a yes -n 4 -l 10 /dev/sdb /dev/sdc /dev/sdd /dev/sde
    mdadm: layout defaults to n2
    mdadm: layout defaults to n2
    mdadm: chunk size defaults to 512K
    mdadm: size set to 20954112K
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md0 started.

The mdadm command manages software RAID arrays on Linux. Its general form is: mdadm [mode] <raid-device> [options] <component-devices>

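-C: create a new RAID array (--create)

-v: verbose, show the creation process (--verbose)

-a yes: automatically create the array's device file, such as /dev/md0, if needed (--auto=yes in create mode; in manage mode, as used later in this article, -a means --add)

-n: number of active member devices in the array (--raid-devices)

-l: RAID level (--level)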
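To make the short options above easier to read, the hypothetical helper below prints the equivalent long-option command without running it. It uses only options documented for mdadm (`--create`, `--verbose`, `--auto`, `--level`, `--raid-devices`, `--spare-devices`); the device-count check is a simplification of what mdadm itself validates.

```bash
#!/usr/bin/env bash
# Print (not run) the long-option form of an mdadm create command.
# mdadm_create_cmd is a hypothetical helper; review its output before running it.

mdadm_create_cmd() {
    # $1 = md device, $2 = RAID level, $3 = active members, $4 = spares (0 for none),
    # remaining arguments = member devices
    local md=$1 level=$2 n=$3 spares=$4
    shift 4
    local cmd="mdadm --create --verbose $md --auto=yes --level=$level --raid-devices=$n"
    if [ "$spares" -gt 0 ]; then
        cmd="$cmd --spare-devices=$spares"
    fi
    # simplified sanity check: active members + spares must match the devices listed
    if [ $(( n + spares )) -ne $# ]; then
        echo "expected $(( n + spares )) devices, got $#" >&2
        return 1
    fi
    echo "$cmd $*"
}

# Long form of the RAID 10 command used in this step
mdadm_create_cmd /dev/md0 10 4 0 /dev/sdb /dev/sdc /dev/sdd /dev/sde
```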

3. Format the new RAID array with the ext4 file system

    [root@promote ~]# mkfs.ext4 /dev/md0
    mke2fs 1.42.9 (28-Dec-2013)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=128 blocks, Stripe width=256 blocks
    2621440 inodes, 10477056 blocks
    523852 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=2157969408
    320 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208, 4096000, 7962624
    
    Allocating group tables: done
    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done
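The `Stride=128 blocks, Stripe width=256 blocks` line is consistent with the array geometry: stride is the md chunk size divided by the ext4 block size (512K / 4K = 128), and stripe width is stride times the number of disks carrying distinct data in each stripe (2 here). mkfs.ext4 appears to have detected this automatically; the sketch below, with a hypothetical `ext4_raid_geometry` helper, just reproduces the arithmetic and formats it for mke2fs's `-E stride=...,stripe_width=...` extended options.

```bash
#!/usr/bin/env bash
# Compute ext4 stride / stripe_width for a RAID array.
# stride       = chunk size / filesystem block size
# stripe_width = stride * number of data-bearing disks per stripe

ext4_raid_geometry() {
    # $1 = md chunk size in KiB, $2 = ext4 block size in KiB, $3 = data-bearing disks
    local chunk_kb=$1 block_kb=$2 data_disks=$3
    local stride=$(( chunk_kb / block_kb ))
    local stripe_width=$(( stride * data_disks ))
    echo "stride=$stride,stripe_width=$stripe_width"
}

opts=$(ext4_raid_geometry 512 4 2)   # 512K chunk, 4K blocks, 2 data-bearing disks
echo "$opts"                          # stride=128,stripe_width=256
# If the values were not detected automatically, they could be passed explicitly:
#   mkfs.ext4 -E "$opts" /dev/md0
```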

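4. Create a mount point and mount the array (to make the mount permanent, an entry must also be added to /etc/fstab; the session below only mounts it for the current boot)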

    [root@promote ~]# mkdir /RAID
    [root@promote ~]# mount /dev/md0 /RAID
    [root@promote ~]# df -h
    Filesystem             Size  Used Avail Use% Mounted on
    /dev/mapper/rhel-root   17G  8.6G  8.4G  51% /
    devtmpfs               894M     0  894M   0% /dev
    tmpfs                  910M     0  910M   0% /dev/shm
    tmpfs                  910M   11M  900M   2% /run
    tmpfs                  910M     0  910M   0% /sys/fs/cgroup
    /dev/sda1             1014M  178M  837M  18% /boot
    tmpfs                  182M  8.0K  182M   1% /run/user/42
    tmpfs                  182M   20K  182M   1% /run/user/0
    /dev/sr0               4.2G  4.2G     0 100% /run/media/root/RHEL-7.6 Server.x86_64
    /dev/md0                40G   49M   38G   1% /RAID
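Step 4 calls for making the mount permanent through /etc/fstab. The sketch below appends the same entry this article uses in the RAID 5 lab ("/dev/md0 /RAID ext4 defaults 0 0") only if it is not already present, so re-running it does not create duplicate lines. `add_fstab_entry` is a hypothetical helper; the fstab path is a parameter so it can be tried on a scratch file first.

```bash
#!/usr/bin/env bash
# Append an fstab entry only if the exact line is not already present.

add_fstab_entry() {
    # $1 = entry line, $2 = fstab file (defaults to /etc/fstab)
    local entry=$1 fstab=${2:-/etc/fstab}
    if grep -qxF "$entry" "$fstab" 2>/dev/null; then   # -x whole line, -F literal text
        echo "already present: $entry"
    else
        printf '%s\n' "$entry" >> "$fstab"
        echo "added: $entry"
    fi
}

# Try it on a scratch file; use /etc/fstab (as root) only after checking the result
scratch=$(mktemp)
add_fstab_entry "/dev/md0 /RAID ext4 defaults 0 0" "$scratch"
add_fstab_entry "/dev/md0 /RAID ext4 defaults 0 0" "$scratch"   # second call is a no-op
cat "$scratch"
rm -f "$scratch"
# After editing the real /etc/fstab, "mount -a" mounts everything listed there.
```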

5. Use the -D (--detail) option to view detailed information about the /dev/md0 array

    [root@promote ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Fri Apr 17 18:07:46 2020
            Raid Level : raid10
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Apr 17 18:10:29 2020
                 State : clean
        Active Devices : 4
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 0
                Layout : near=2
            Chunk Size : 512K
    Consistency Policy : resync
                  Name : promote.cache-dns.local:0  (local to host promote.cache-dns.local)
                  UUID : 7e91561d:5eb5f8da:8aed3287:06dd276c
                Events : 17
    
        Number   Major   Minor   RaidDevice State
           0       8       16        0      active sync set-A   /dev/sdb
           1       8       32        1      active sync set-B   /dev/sdc
           2       8       48        2      active sync set-A   /dev/sdd
           3       8       64        3      active sync set-B   /dev/sde
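In the output above, RaidDevices 0 and 2 form set-A and 1 and 3 form set-B, each set holding one full copy of the data. With the near=2 layout and an even member count, each chunk is stored on two adjacent RaidDevices, so the mirror pairs are (0,1) and (2,3), that is sdb/sdc and sdd/sde here. The sketch below, a hypothetical `raid10_near2_survives` helper valid only under that assumption, checks whether a given set of failed RaidDevice numbers leaves at least one copy of every chunk.

```bash
#!/usr/bin/env bash
# RAID 10 near=2 with an even number of members: the copies of each chunk live on
# RaidDevices 2k and 2k+1, so the array is lost only if both members of a pair fail.

raid10_near2_survives() {
    # arguments: failed RaidDevice numbers
    local f g
    for f in "$@"; do
        for g in "$@"; do
            # the mirror partner of device f is f XOR 1 (0<->1, 2<->3, ...)
            if [ "$g" -eq $(( f ^ 1 )) ]; then
                return 1    # both copies of some chunks are gone
            fi
        done
    done
    return 0
}

for failed in "0" "0 2" "0 1" "1 3" "2 3"; do
    # $failed is deliberately unquoted so "0 2" becomes two arguments
    if raid10_near2_survives $failed; then
        echo "failed {$failed}: array survives"
    else
        echo "failed {$failed}: data lost"
    fi
done
```

So in this array, losing sdb and sdd together would be survivable, while losing sdb and sdc together would not.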

Simulating a Disk Failure and Repairing the Array

1. Use the -f (--fail) option to mark /dev/sdb as faulty, simulating a disk failure

    [root@promote ~]# mdadm /dev/md0 -f /dev/sdb
    mdadm: set /dev/sdb faulty in /dev/md0
    [root@promote ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Fri Apr 17 18:07:46 2020
            Raid Level : raid10
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Apr 17 18:14:27 2020
                 State : clean, degraded
        Active Devices : 3
       Working Devices : 3
        Failed Devices : 1
         Spare Devices : 0
                Layout : near=2
            Chunk Size : 512K
    Consistency Policy : resync
                  Name : promote.cache-dns.local:0  (local to host promote.cache-dns.local)
                  UUID : 7e91561d:5eb5f8da:8aed3287:06dd276c
                Events : 19
    
        Number   Major   Minor   RaidDevice State
           -       0        0        0      removed
           1       8       32        1      active sync set-B   /dev/sdc
           2       8       48        2      active sync set-A   /dev/sdd
           3       8       64        3      active sync set-B   /dev/sde
           0       8       16        -      faulty   /dev/sdb
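Instead of reading the whole `mdadm -D` report by eye, a small filter can flag a degraded array such as the one above. This is a sketch that assumes the `Key : value` layout shown in this article; `md_health` is a hypothetical helper, and other mdadm versions may format the report differently.

```bash
#!/usr/bin/env bash
# Read "mdadm -D" output on stdin; exit 1 and print DEGRADED if the State field
# mentions "degraded" or "Failed Devices" is non-zero, otherwise print OK.

md_health() {
    awk -F' : ' '
        # trim leading spaces from the key, e.g. "             State" becomes "State"
        { key = $1; sub(/^[ \t]+/, "", key) }
        key == "State"          { state = $2 }
        key == "Failed Devices" { failed = $2 + 0 }
        END {
            if (state ~ /degraded/ || failed > 0) {
                printf "DEGRADED state=%s failed=%d\n", state, failed
                exit 1
            }
            printf "OK state=%s\n", state
        }'
}

# Usage on a live system (as root):
#   mdadm -D /dev/md0 | md_health || echo "check /dev/md0"
```

Run against the report above, it would print `DEGRADED state=clean, degraded failed=1` and return a non-zero status, which makes it usable from a cron job or monitoring script.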

2. Repair the array

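In a RAID 10 array, one failed disk inside a mirrored (RAID 1) pair does not stop the array from working, so the failed member only needs to be replaced using mdadm, and files under /RAID can still be created and deleted normally in the meantime. Here the same /dev/sdb is re-added to stand in for a new disk; in manage mode, -r means --remove and -a means --add (unlike -a yes at creation time). The session below unmounts /RAID before re-adding the disk and runs mount -a afterwards; neither step is required for the rebuild, and mount -a only remounts /RAID if it has an entry in /etc/fstab.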

    [root@promote ~]# mdadm /dev/md0 -r /dev/sdb    # -r: remove the device
    mdadm: hot removed /dev/sdb from /dev/md0
    [root@promote ~]# umount /RAID
    [root@promote ~]# mdadm /dev/md0 -a /dev/sdb
    mdadm: added /dev/sdb
    [root@promote ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Fri Apr 17 18:07:46 2020
            Raid Level : raid10
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Apr 17 18:18:56 2020
                 State : clean, degraded, recovering
        Active Devices : 3
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 1
                Layout : near=2
            Chunk Size : 512K
    Consistency Policy : resync
        Rebuild Status : 24% complete
                  Name : promote.cache-dns.local:0  (local to host promote.cache-dns.local)
                  UUID : 7e91561d:5eb5f8da:8aed3287:06dd276c
                Events : 27
    
        Number   Major   Minor   RaidDevice State
           4       8       16        0      spare rebuilding   /dev/sdb
           1       8       32        1      active sync set-B   /dev/sdc
           2       8       48        2      active sync set-A   /dev/sdd
           3       8       64        3      active sync set-B   /dev/sde

    [root@promote ~]# mount -a
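Until the rebuild shown above (24% complete) finishes, the repaired mirror pair still has only one good copy. mdadm's own `--wait` option blocks until recovery ends; the loop below is an alternative sketch that also prints progress. `wait_for_rebuild` is a hypothetical helper that assumes the `Rebuild Status` line disappears from `mdadm -D` once recovery is done, as in the outputs in this article.

```bash
#!/usr/bin/env bash
# Poll "mdadm -D" until its report no longer contains a "Rebuild Status" line,
# printing the progress line on each pass.

wait_for_rebuild() {
    # $1 = md device, $2 = poll interval in seconds (default 10)
    local md=$1 interval=${2:-10} status
    while :; do
        status=$(mdadm -D "$md" | grep 'Rebuild Status')
        if [ -z "$status" ]; then
            break                      # no rebuild line: recovery finished (or never started)
        fi
        echo "$status"                 # e.g. "Rebuild Status : 24% complete"
        sleep "$interval"
    done
    echo "$md: no rebuild in progress"
}

# Usage (as root), after "mdadm /dev/md0 -a /dev/sdb":
#   wait_for_rebuild /dev/md0 30
```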

Disk Array + Hot Spare

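Add a spare disk that is at least as large as each array member. The spare sits idle during normal operation; as soon as a disk in the array fails, the spare automatically takes its place and the array rebuilds onto it.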

Lab: Deploying a RAID 5 Array with a Hot Spare

1. Prepare the disks

    [root@promote ~]# lsblk
    NAME          MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda             8:0    0   20G  0 disk
    ├─sda1          8:1    0    1G  0 part /boot
    └─sda2          8:2    0   19G  0 part
      ├─rhel-root 253:0    0   17G  0 lvm  /
      └─rhel-swap 253:1    0    2G  0 lvm  [SWAP]
    sdb             8:16   0   20G  0 disk
    sdc             8:32   0   20G  0 disk
    sdd             8:48   0   20G  0 disk
    sde             8:64   0   20G  0 disk
    sr0            11:0    1  4.2G  0 rom  /run/media/root/RHEL-7.6 Server.x86_64

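2. Create the RAID 5 array with a hot spare: -n 3 uses three active disks, and -x 1 (--spare-devices) adds one spare. Right after creation the array reports "degraded, recovering" and shows /dev/sdd as "spare rebuilding". This is the initial parity build: by default mdadm creates a RAID 5 array degraded and rebuilds onto its last active member, because that is generally faster than a full resync. The actual hot spare is /dev/sde.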

    [root@promote ~]# mdadm -Cv /dev/md0 -n 3 -l 5 -x 1 /dev/sdb /dev/sdc /dev/sdd /dev/sde
    mdadm: layout defaults to left-symmetric
    mdadm: layout defaults to left-symmetric
    mdadm: chunk size defaults to 512K
    mdadm: size set to 20954112K
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md0 started.
    [root@promote ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Fri Apr 17 18:27:04 2020
            Raid Level : raid5
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 3
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Apr 17 18:27:12 2020
                 State : clean, degraded, recovering
        Active Devices : 2
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 2
                Layout : left-symmetric
            Chunk Size : 512K
    Consistency Policy : resync
        Rebuild Status : 17% complete
                  Name : promote.cache-dns.local:0  (local to host promote.cache-dns.local)
                  UUID : a94fe735:305eb880:09eaccd2:ab1ff508
                Events : 3
    
        Number   Major   Minor   RaidDevice State
           0       8       16        0      active sync   /dev/sdb
           1       8       32        1      active sync   /dev/sdc
           4       8       48        2      spare rebuilding   /dev/sdd
           3       8       64        -      spare   /dev/sde

3. Format the array as ext4

    [root@promote ~]# mkfs.ext4 /dev/md0
    mke2fs 1.42.9 (28-Dec-2013)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=128 blocks, Stripe width=256 blocks
    2621440 inodes, 10477056 blocks
    523852 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=2157969408
    320 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208, 4096000, 7962624
    
    Allocating group tables: done
    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

4. Mount the array, adding an /etc/fstab entry so the mount persists across reboots

    [root@promote ~]# mkdir /RAID
    [root@promote ~]# echo "/dev/md0 /RAID ext4 defaults 0 0" >> /etc/fstab
    [root@promote ~]# mount -a
    [root@promote ~]# df -h
    Filesystem             Size  Used Avail Use% Mounted on
    /dev/mapper/rhel-root   17G  8.6G  8.4G  51% /
    devtmpfs               894M     0  894M   0% /dev
    tmpfs                  910M     0  910M   0% /dev/shm
    tmpfs                  910M   11M  900M   2% /run
    tmpfs                  910M     0  910M   0% /sys/fs/cgroup
    /dev/sda1             1014M  178M  837M  18% /boot
    tmpfs                  182M   20K  182M   1% /run/user/0
    /dev/sr0               4.2G  4.2G     0 100% /run/media/root/RHEL-7.6 Server.x86_64
    /dev/md0                40G   49M   38G   1% /RAID

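5. Simulate a failure of /dev/sdb to test the hot spare. In the output, the former spare /dev/sde has automatically taken over RaidDevice slot 0 and is rebuilding, while /dev/sdb is listed as faulty.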

    [root@promote ~]# mdadm /dev/md0 -f /dev/sdb
    mdadm: set /dev/sdb faulty in /dev/md0
    [root@promote ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Fri Apr 17 18:27:04 2020
            Raid Level : raid5
            Array Size : 41908224 (39.97 GiB 42.91 GB)
         Used Dev Size : 20954112 (19.98 GiB 21.46 GB)
          Raid Devices : 3
         Total Devices : 4
           Persistence : Superblock is persistent
           Update Time : Fri Apr 17 18:30:17 2020
                 State : clean, degraded, recovering
        Active Devices : 2
       Working Devices : 3
        Failed Devices : 1
         Spare Devices : 1
                Layout : left-symmetric
            Chunk Size : 512K
    Consistency Policy : resync
        Rebuild Status : 19% complete
                  Name : promote.cache-dns.local:0  (local to host promote.cache-dns.local)
                  UUID : a94fe735:305eb880:09eaccd2:ab1ff508
                Events : 30
    
        Number   Major   Minor   RaidDevice State
           3       8       64        0      spare rebuilding   /dev/sde
           1       8       32        1      active sync   /dev/sdc
           4       8       48        2      active sync   /dev/sdd
           0       8       16        -      faulty   /dev/sdb
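To confirm from a script that the hot spare took over, the device table at the end of `mdadm -D` can be filtered for the member being rebuilt and the member marked faulty. This is a sketch assuming the table layout shown in this article (device number first, device path last); `md_roles` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Read "mdadm -D" output on stdin and report which member is being rebuilt into
# the array and which members are marked faulty.

md_roles() {
    # only device-table rows count: their first field is a device number or "-"
    awk '$1 ~ /^([0-9]+|-)$/ && /spare rebuilding/ { print "rebuilding: " $NF }
         $1 ~ /^([0-9]+|-)$/ && / faulty /         { print "faulty: " $NF }'
}

# Usage (as root):
#   mdadm -D /dev/md0 | md_roles
```

For the report above, this would list /dev/sde as rebuilding and /dev/sdb as faulty.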

Original article: https://www.cnblogs.com/wanao/p/12721718.html