As a Python beginner, I ran into trouble with the following points:

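1. Douban loads this page's data asynchronously, so the address shown in the browser is not where the data comes from. You need to use the browser (for example, the Network panel of its developer tools) to find the real request URL.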

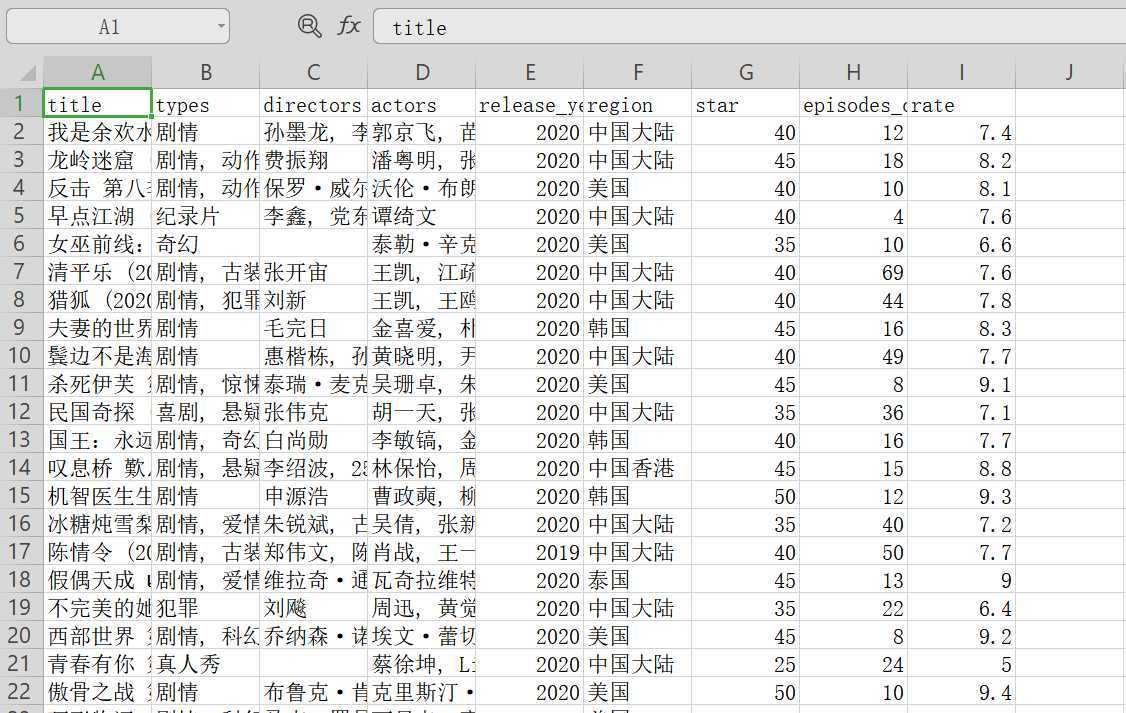
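Once the real request is found, the list endpoint is just a URL with a `page_start` offset, and it returns JSON rather than HTML. Below is a minimal sketch of building that URL and pulling the subject ids out of a response. It assumes that each item in `subjects` has a `url` field whose first run of digits is the subject id, which is the same assumption the code in this post makes. `build_list_url` and `extract_subject_ids` are hypothetical helper names, and the sample body is made up so the example runs offline.

```python
import json
import re
from urllib.parse import urlencode

# List endpoint used in this post's code
LIST_ENDPOINT = 'https://movie.douban.com/j/search_subjects'


def build_list_url(start, page_limit=20):
    """Hypothetical helper: URL for one page of the TV list, starting at offset `start`."""
    params = {
        'type': 'tv',
        'tag': '热门',          # urlencode percent-encodes this as %E7%83%AD%E9%97%A8
        'sort': 'recommend',
        'page_limit': page_limit,
        'page_start': start,
    }
    return LIST_ENDPOINT + '?' + urlencode(params)


def extract_subject_ids(body):
    """Hypothetical helper: parse a list-page JSON body, return the first number in each item's url."""
    ids = []
    for item in json.loads(body)['subjects']:
        match = re.search(r'[0-9]+', item['url'])
        if match:
            ids.append(match.group())
    return ids


# Made-up response body for an offline run
sample = ('{"subjects": [{"url": "https://movie.douban.com/subject/123/"},'
          ' {"url": "https://movie.douban.com/subject/456/"}]}')
print(build_list_url(20))
print(extract_subject_ids(sample))  # → ['123', '456']
```

`urlencode` handles the percent-encoding of the Chinese tag, so the query string does not have to be assembled by hand.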

2. Converting a dict into a DataFrame and then writing it to a .csv file. A DataFrame is a table-like data structure.
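Here is a minimal sketch of the write pattern. First, an empty DataFrame that has only column names creates the file with a header row. After that, each record is appended with `mode='a'` and `header=False`. The file name `demo.csv`, the trimmed column list and the two rows are made up for illustration. Passing the record as a one-element list together with `columns=` keeps every row in the same column order as the header.

```python
import pandas as pd

COLUMNS = ['title', 'rate']   # trimmed column list for the example
CSV_FILE = 'demo.csv'         # hypothetical output file

# 1) Create the file and write only the header row
pd.DataFrame(columns=COLUMNS).to_csv(CSV_FILE, mode='w', index=False)

# 2) Append one record at a time. A dict of scalars alone would need an
#    explicit index (pd.DataFrame(row) raises ValueError), so wrap it in a list.
rows = [{'title': 'Show A', 'rate': '8.5'}, {'title': 'Show B', 'rate': '7.9'}]
for row in rows:
    pd.DataFrame([row], columns=COLUMNS).to_csv(
        CSV_FILE, mode='a', index=False, header=False)

# 3) Read the file back to check it
df = pd.read_csv(CSV_FILE)
print(df)
```

Another option is to collect every row in a list and call `to_csv` once at the end. Appending row by row has the advantage of keeping what was already written if the crawl stops partway.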

3. Processing the JSON data fetched from the page.
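Because the detail endpoint returns JSON, `json.loads` (or `resp.json()` in requests) already produces Python dicts and lists. JSON `true`/`false` become `True`/`False`, so there is no need for regex rewriting of quotes or booleans. The list fields can be joined into one string per CSV cell. The sketch below uses a made-up `subject` body whose field names mirror the ones this post's code reads. The `is_tv` field is invented, added only to show the boolean conversion.

```python
import json

# Made-up detail response; 'is_tv' is an invented boolean field
body = '''{"subject": {"title": "Show A", "types": ["剧情", "爱情"],
           "directors": ["Director X"], "actors": ["Actor Y", "Actor Z"],
           "rate": "8.5", "is_tv": true}}'''

subject = json.loads(body)['subject']   # a plain dict; JSON true -> Python True


def flatten(value):
    """Join a list field into one comma-separated string; leave scalars unchanged."""
    if isinstance(value, list):
        return ', '.join(str(v) for v in value)
    return value


row = {key: flatten(subject[key])
       for key in ['title', 'types', 'directors', 'actors', 'rate']}
print(row)
# → {'title': 'Show A', 'types': '剧情, 爱情', 'directors': 'Director X', 'actors': 'Actor Y, Actor Z', 'rate': '8.5'}
```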

Code:

```python
import logging
import random
import re
import time

import pandas as pd
import requests

# Desktop browser User-Agent strings; one is picked at random for each request
USER_AGENTS = [
    'Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10_6_8; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
    'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50',
    'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.6; rv:2.0.1) Gecko/20100101 Firefox/4.0.1',
    'Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; en) Presto/2.8.131 Version/11.11',
    'Opera/9.80 (Windows NT 6.1; U; en) Presto/2.8.131 Version/11.11',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_0) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11',
]

CSV_FILE = 'res_url.csv'
COLUMNS = ['title', 'types', 'directors', 'actors', 'release_year',
           'region', 'star', 'episodes_count', 'rate']


class DoubanSpider(object):
    """Douban TV spider."""

    def __init__(self):
        # Base URL: JSON list of "热门" (popular) TV shows; {start} is the paging offset
        self.base_url = ('https://movie.douban.com/j/search_subjects?type=tv'
                         '&tag=%E7%83%AD%E9%97%A8&sort=recommend&page_limit=20'
                         '&page_start={start}')
        # Detail endpoint; the subject id is appended to it
        self.tv_detailurl = 'https://movie.douban.com/j/subject_abstract?subject_id='

    def _headers(self):
        return {'User-Agent': random.choice(USER_AGENTS)}

    def download_tvs(self, offset):
        """Download one page of the list starting at `offset`; return the response or None."""
        full_url = self.base_url.format(start=offset)
        try:
            return requests.get(full_url, headers=self._headers(), timeout=10)
        except requests.RequestException as e:
            logging.error(e)
            return None

    def get_tvs(self, resp):
        """Build a detail URL for every show in a list-page response."""
        tv_urls = []
        if resp is not None and resp.status_code == 200:
            for item in resp.json()['subjects']:
                # The numeric subject id is embedded in each item's 'url'
                data = re.findall(r'[0-9]+', str(item['url']))
                tv_urls.append(self.tv_detailurl + data[0])
        return tv_urls

    def download_detailtvs(self, tv_urls):
        """Fetch the detail JSON for each URL and return the 'subject' dicts."""
        tvs = []
        for url in tv_urls:
            resp = requests.get(url, headers=self._headers(), timeout=10)
            tvs.append(resp.json()['subject'])
        return tvs


def main():
    spider = DoubanSpider()
    offset = 0
    # Write the header row once; rows are appended below without a header
    pd.DataFrame(columns=COLUMNS).to_csv(CSV_FILE, mode='w', index=False)
    while True:
        resp = spider.download_tvs(offset)
        tv_urls = spider.get_tvs(resp)
        if not tv_urls:
            # Empty page or failed request: stop crawling
            break
        for tv in spider.download_detailtvs(tv_urls):
            # resp.json() already returns Python lists, so join each list into one string
            row = {
                'title': tv['title'],
                'types': ', '.join(map(str, tv['types'])),
                'directors': ', '.join(map(str, tv['directors'])),
                'actors': ', '.join(map(str, tv['actors'])),
                'release_year': tv['release_year'],
                'region': tv['region'],
                'star': tv['star'],
                'episodes_count': tv['episodes_count'],
                'rate': tv['rate'],
            }
            print(row)
            pd.DataFrame([row], columns=COLUMNS).to_csv(
                CSV_FILE, mode='a', index=False, header=False)
        offset += 20
        # Throttle the request rate
        time.sleep(10)


if __name__ == '__main__':
    main()
```
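A crawl over an offset-paged endpoint needs an exit condition, and the natural one is to stop when a page comes back empty. The sketch below shows that loop with the network call replaced by a hypothetical `fetch_page(offset)` function, so it runs offline. `crawl` and `fake_pages` are invented for illustration; they are not part of requests or of Douban's API.

```python
import time


def crawl(fetch_page, page_size=20, delay=0.0):
    """Request pages at offsets 0, page_size, 2*page_size, ... until one is empty."""
    offset = 0
    results = []
    while True:
        items = fetch_page(offset)
        if not items:          # empty page (or None from a failed request): stop
            break
        results.extend(items)
        offset += page_size
        time.sleep(delay)      # throttle between requests
    return results


# Hypothetical stand-in for the list endpoint: 45 items served 20 at a time
fake_pages = list(range(45))


def fetch_page(offset):
    return fake_pages[offset:offset + 20]


print(len(crawl(fetch_page)))  # → 45
```

Passing the fetch function in as a parameter keeps the paging logic testable without hitting the site.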

Original post: https://www.cnblogs.com/yaggy/p/12740739.html