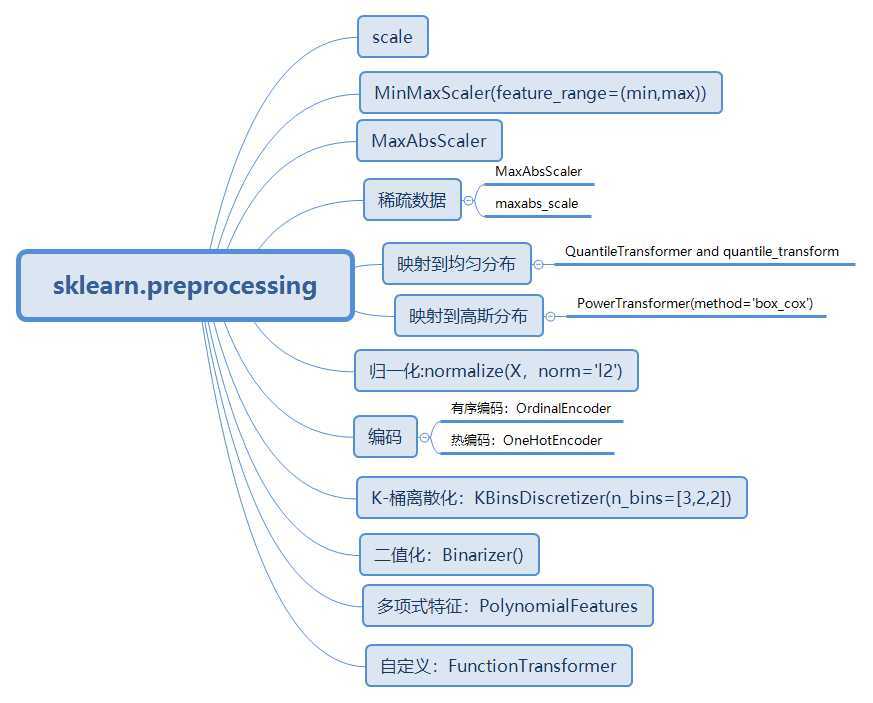

Notes: Preprocessing data, scikit-learn 0.22.2 documentation

from sklearn import preprocessing

import numpy as np

X_train=np.array([[1.,-1.,2.],

[1.,0.,0.],

[0.,1.0,-1.]])

help(preprocessing.scale)

scale(X, axis=0, with_mean=True, with_std=True, copy=True)

axis=0: the default; standardize each feature (i.e. each column) independently;

axis=1: standardize each row instead, i.e. each sample independently.
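A small sketch of the `axis=1` case, reusing the `X_train` defined above: each row is centred and scaled with its own mean and (population) standard deviation. The hand-written version is only there to make the formula explicit.

```python
import numpy as np
from sklearn import preprocessing

X_train = np.array([[1., -1., 2.],
                    [1., 0., 0.],
                    [0., 1., -1.]])

# axis=1: every row (sample) gets its own mean and std
X_rows = preprocessing.scale(X_train, axis=1)

# The same computation by hand, row by row (np.std uses ddof=0, like scale)
mean = X_train.mean(axis=1, keepdims=True)
std = X_train.std(axis=1, keepdims=True)
X_manual = (X_train - mean) / std

print(np.allclose(X_rows, X_manual))  # True
print(X_rows.mean(axis=1))            # each entry is ~0 up to rounding
```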

X_scaled=preprocessing.scale(X_train)

X_scaled

array([[ 0.70710678, -1.22474487, 1.33630621],

[ 0.70710678, 0. , -0.26726124],

[-1.41421356, 1.22474487, -1.06904497]])

X_scaled.mean(axis=0)

array([7.40148683e-17, 0.00000000e+00, 0.00000000e+00])

X_scaled.std(axis=0)

array([1., 1., 1.])

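#A similar approach is StandardScaler(), which stores the training mean and standard deviation so the same scaling can be reapplied later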

scaler=preprocessing.StandardScaler().fit(X_train)

scaler.mean_

array([0.66666667, 0. , 0.33333333])

#Standard deviation

scaler.scale_

#Equivalent to X_train.std(axis=0)

array([0.47140452, 0.81649658, 1.24721913])

scaler.transform(X_train)

array([[ 0.70710678, -1.22474487, 1.33630621],

[ 0.70710678, 0. , -0.26726124],

[-1.41421356, 1.22474487, -1.06904497]])

#Applying the fitted scaler to new data (it reuses the training mean and std)

X_test=[[-1.,1.,0.]]

scaler.transform(X_test)

array([[-3.53553391, 1.22474487, -0.26726124]])
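To make explicit what `transform` does with new data, here is a minimal check that it is just `(x - mean_) / scale_` using the statistics learned from `X_train`; nothing is re-estimated from `X_test`.

```python
import numpy as np
from sklearn import preprocessing

X_train = np.array([[1., -1., 2.],
                    [1., 0., 0.],
                    [0., 1., -1.]])
X_test = np.array([[-1., 1., 0.]])

scaler = preprocessing.StandardScaler().fit(X_train)

# transform() applies z = (x - mean_) / scale_ with the *training* statistics
manual = (X_test - scaler.mean_) / scaler.scale_
print(np.allclose(manual, scaler.transform(X_test)))     # True

# scale_ is the population std (ddof=0) of the training columns
print(np.allclose(scaler.scale_, X_train.std(axis=0)))   # True
```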

help(preprocessing.MinMaxScaler):

X_std = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

X_scaled = X_std * (max - min) + min

preprocessing.MinMaxScaler(feature_range=(min,max),copy=True)  #feature_range defaults to (0, 1)
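The two formulas above can be written out directly in NumPy. This sketch picks `feature_range=(-1, 1)` purely for illustration (the names `lo`/`hi` stand in for `min`/`max` to avoid shadowing Python built-ins) and checks the result against `MinMaxScaler`.

```python
import numpy as np
from sklearn import preprocessing

X_train = np.array([[1., -1., 2.],
                    [1., 0., 0.],
                    [0., 1., -1.]])
lo, hi = -1.0, 1.0  # target range, chosen for illustration

# X_std = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))
X_std = (X_train - X_train.min(axis=0)) / (X_train.max(axis=0) - X_train.min(axis=0))
# X_scaled = X_std * (max - min) + min
X_manual = X_std * (hi - lo) + lo

scaler = preprocessing.MinMaxScaler(feature_range=(lo, hi))
print(np.allclose(X_manual, scaler.fit_transform(X_train)))  # True
```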

min_max_scaler=preprocessing.MinMaxScaler()

X_train_minmax=min_max_scaler.fit_transform(X_train)

X_train_minmax

array([[1. , 0. , 1. ],

[1. , 0.5 , 0.33333333],

[0. , 1. , 0. ]])

#Handling new data: values outside the training range are mapped outside [0, 1]

X_test=np.array([[-3.,-1.,4.]])

X_test_minmax=min_max_scaler.transform(X_test)

X_test_minmax

array([[-3. , 0. , 1.66666667]])

min_max_scaler.scale_

array([1. , 0.5 , 0.33333333])

min_max_scaler.min_

array([0. , 0.5 , 0.33333333])
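The two fitted attributes fully describe the transform: per feature it is the affine map `X * scale_ + min_`, where `scale_ = 1 / (max - min)` and `min_ = -min * scale_` for the default range. A short check, including the round trip through `inverse_transform`:

```python
import numpy as np
from sklearn import preprocessing

X_train = np.array([[1., -1., 2.],
                    [1., 0., 0.],
                    [0., 1., -1.]])
X_test = np.array([[-3., -1., 4.]])

min_max_scaler = preprocessing.MinMaxScaler().fit(X_train)

# transform() multiplies by scale_ and then adds min_, feature by feature
manual = X_test * min_max_scaler.scale_ + min_max_scaler.min_
print(np.allclose(manual, min_max_scaler.transform(X_test)))  # True

# inverse_transform undoes the affine map
back = min_max_scaler.inverse_transform(manual)
print(np.allclose(back, X_test))  # True
```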

help(preprocessing.MaxAbsScaler):

class MaxAbsScaler(sklearn.base.TransformerMixin, sklearn.base.BaseEstimator)

Scale each feature by its maximum absolute value (the largest absolute value observed in that feature).

max_abs_scaler=preprocessing.MaxAbsScaler()

X_train=np.array([[1.,-1.,-2.],

[2.,0.,0.],

[0.,1.,-1.]])

X_train_maxabs=max_abs_scaler.fit_transform(X_train)

X_train_maxabs

array([[ 0.5, -1. , -1. ],

[ 1. , 0. , 0. ],

[ 0. , 1. , -0.5]])
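MaxAbsScaler only divides each column by its largest absolute value; it does not shift or centre the data, so zeros stay zero. A minimal hand-written equivalent on the same `X_train`:

```python
import numpy as np
from sklearn import preprocessing

X_train = np.array([[1., -1., -2.],
                    [2., 0., 0.],
                    [0., 1., -1.]])

# Largest |value| per column, then a plain division (no centring)
max_abs = np.abs(X_train).max(axis=0)   # [2. 1. 2.]
X_manual = X_train / max_abs

scaler = preprocessing.MaxAbsScaler().fit(X_train)
print(np.allclose(X_manual, scaler.transform(X_train)))  # True
print(scaler.max_abs_)                                   # [2. 1. 2.]
```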

help(preprocessing.QuantileTransformer)

class QuantileTransformer(sklearn.base.TransformerMixin, sklearn.base.BaseEstimator)

Transform features using quantiles information.

preprocessing.QuantileTransformer(n_quantiles=1000, output_distribution='uniform', ignore_implicit_zeros=False, subsample=100000, random_state=None, copy=True)

output_distribution: marginal distribution for the transformed data. The choices are 'uniform' (default) or 'normal'.

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

X,y=load_iris(return_X_y=True)

X_train,X_test,y_train,y_test=train_test_split(X,y,random_state=0)

quantile_transformer=preprocessing.QuantileTransformer(random_state=0)

X_train_trans=quantile_transformer.fit_transform(X_train)

d:\software\python\lib\site-packages\sklearn\preprocessing\_data.py:2357: UserWarning: n_quantiles (1000) is greater than the total number of samples (112). n_quantiles is set to n_samples.

% (self.n_quantiles, n_samples))

X_test_trans=quantile_transformer.transform(X_test)

np.percentile(X_train[:,0],[0,25,50,75,100])

array([4.3, 5.1, 5.8, 6.5, 7.9])

np.percentile(X_train_trans[:,0],[0,25,50,75,100])

array([0. , 0.23873874, 0.50900901, 0.74324324, 1. ])
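With `output_distribution='normal'` the same quantile mapping is followed by the inverse normal CDF, so the output looks roughly standard normal. The skewed exponential sample below is synthetic data made up for this sketch; 1000 samples match the default `n_quantiles`, so no warning like the one above is raised.

```python
import numpy as np
from sklearn import preprocessing

rng = np.random.RandomState(0)
X = rng.exponential(size=(1000, 1))  # skewed synthetic data (illustration only)

qt = preprocessing.QuantileTransformer(output_distribution='normal', random_state=0)
X_gauss = qt.fit_transform(X)

print(np.median(X), np.median(X_gauss))  # skewed median vs. a median close to 0
print(X_gauss.std())                     # roughly 1
```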

#### Mapping to a Gaussian distribution

help(preprocessing.PowerTransformer)

class PowerTransformer(sklearn.base.TransformerMixin, sklearn.base.BaseEstimator)

| Apply a power transform featurewise to make data more Gaussian-like.

Parameters

| method : str, (default='yeo-johnson')

| The power transform method. Available methods are:

|

| - 'yeo-johnson' [1]_, works with positive and negative values

| - 'box-cox' [2]_, only works with strictly positive values

pt=preprocessing.PowerTransformer(method='box-cox',standardize=False)

X_lognormal=np.random.RandomState(616).lognormal(size=(3,3))

X_lognormal

array([[1.28331718, 1.18092228, 0.84160269],

[0.94293279, 1.60960836, 0.3879099 ],

[1.35235668, 0.21715673, 1.09977091]])

pt.fit_transform(X_lognormal)

array([[ 0.49024349, 0.17881995, -0.1563781 ],

[-0.05102892, 0.58863195, -0.57612414],

[ 0.69420009, -0.84857822, 0.10051454]])
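The help text says Box-Cox only works with strictly positive values while Yeo-Johnson also handles zero and negative ones. The toy matrix below (made up for illustration) contains `-1` and `0`, so Yeo-Johnson fits while Box-Cox raises a `ValueError`.

```python
import numpy as np
from sklearn import preprocessing

# Toy data with a negative value and a zero (made up for illustration)
X = np.array([[-1.0, 0.5],
              [0.0, 1.5],
              [2.0, 3.0],
              [4.0, 0.2]])

# Yeo-Johnson accepts any real values; standardize=True (default) then rescales
yj = preprocessing.PowerTransformer(method='yeo-johnson')
X_yj = yj.fit_transform(X)
print(yj.lambdas_)  # one fitted lambda per feature

# Box-Cox needs strictly positive input, so fitting on X raises ValueError
boxcox_error = None
try:
    preprocessing.PowerTransformer(method='box-cox').fit(X)
except ValueError as err:
    boxcox_error = err
print(boxcox_error)
```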

help(preprocessing.normalize)

normalize(X, norm='l2', axis=1, copy=True, return_norm=False)

Scale input vectors individually to unit norm (vector length).

norm : 'l1', 'l2', or 'max', optional ('l2' by default)

The norm to use to normalize each non zero sample (or each non-zero feature if axis is 0).

axis : 0 or 1, optional (1 by default)

axis used to normalize the data along. If 1, independently normalize each sample, otherwise (if 0) normalize each feature.

X_train=np.array([[1.,-1.,-2.],

[2.,0.,0.],

[0.,1.,-1.]])

X_train_normalized=preprocessing.normalize(X_train,norm='l2')

X_train_normalized

array([[ 0.40824829, -0.40824829, -0.81649658],

[ 1. , 0. , 0. ],

[ 0. , 0.70710678, -0.70710678]])
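Two other settings from the help text, sketched on the same `X_train`: `norm='l1'` makes the absolute values of each row sum to 1, and `axis=0` normalizes columns (features) instead of rows.

```python
import numpy as np
from sklearn import preprocessing

X_train = np.array([[1., -1., -2.],
                    [2., 0., 0.],
                    [0., 1., -1.]])

# L1 norm per row: sum of |values| in each row becomes 1
X_l1 = preprocessing.normalize(X_train, norm='l1')
print(np.abs(X_l1).sum(axis=1))        # [1. 1. 1.]

# axis=0: L2-normalize each column (feature) instead of each sample
X_cols = preprocessing.normalize(X_train, axis=0)
print(np.linalg.norm(X_cols, axis=0))  # each entry ~1
```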

#The same operation as a transformer class, Normalizer, which can be used inside a pipeline (sklearn.pipeline.Pipeline)

normalizer=preprocessing.Normalizer().fit(X_train)

normalizer

Normalizer(copy=True, norm='l2')

normalizer.transform(X_train)

array([[ 0.40824829, -0.40824829, -0.81649658],

[ 1. , 0. , 0. ],

[ 0. , 0.70710678, -0.70710678]])
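A minimal sketch of the pipeline use mentioned above, assuming the iris data from earlier and a k-nearest-neighbours classifier picked only for illustration. Normalizer is stateless, so fitting the pipeline mainly trains the classifier, and the same row-wise L2 scaling is applied automatically before prediction.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import Normalizer

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

pipe = Pipeline([
    ('normalize', Normalizer(norm='l2')),          # row-wise unit L2 norm
    ('knn', KNeighborsClassifier(n_neighbors=5)),  # classifier chosen for illustration
])
pipe.fit(X_train, y_train)
print(pipe.score(X_test, y_test))  # accuracy on the held-out split
```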

#### Encoding categorical features

help(preprocessing.OrdinalEncoder):

class OrdinalEncoder(_BaseEncoder)

| Encode categorical features as an integer array.

| The input to this transformer should be an array-like of integers or strings, denoting the values taken on by categorical (discrete) features.

| The features are converted to ordinal integers. This results in a single column of integers (0 to n_categories - 1) per feature.

enc = preprocessing.OrdinalEncoder()

X = [['male', 'from US', 'uses Safari'], ['female', 'from Europe', 'uses Firefox']]

enc.fit(X)

OrdinalEncoder(categories='auto', dtype=<class 'numpy.float64'>)

enc.transform([['female', 'from US', 'uses Safari']])

array([[0., 1., 1.]])
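Why `['female', 'from US', 'uses Safari']` becomes `[0., 1., 1.]`: with `categories='auto'` the categories of each feature are sorted, and the code is the position in that sorted list. A short sketch that inspects `categories_` and maps the codes back:

```python
from sklearn import preprocessing

X = [['male', 'from US', 'uses Safari'],
     ['female', 'from Europe', 'uses Firefox']]
enc = preprocessing.OrdinalEncoder().fit(X)

# One sorted array of categories per feature; the code is the index in it
for cats in enc.categories_:
    print(list(cats))

codes = enc.transform([['female', 'from US', 'uses Safari']])
print(codes)
print(enc.inverse_transform(codes))  # maps the integers back to the strings
```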

#OneHotEncoder, with the possible categories of each feature listed explicitly (so values absent from X are still known)

genders = ['female', 'male']

locations = ['from Africa', 'from Asia', 'from Europe', 'from US']

browsers = ['uses Chrome', 'uses Firefox', 'uses IE', 'uses Safari']

enc = preprocessing.OneHotEncoder(categories=[genders, locations, browsers])

X = [['male', 'from US', 'uses Safari'], ['female', 'from Europe', 'uses Firefox']]

enc.fit(X)

OneHotEncoder(categories=[['female', 'male'],

['from Africa', 'from Asia', 'from Europe',

'from US'],

['uses Chrome', 'uses Firefox', 'uses IE',

'uses Safari']],

drop=None, dtype=<class 'numpy.float64'>, handle_unknown='error',

sparse=True)

enc.transform([['female', 'from Asia', 'uses Chrome']]).toarray()

array([[1., 0., 0., 1., 0., 0., 1., 0., 0., 0.]])

#Inverse operation

enc.inverse_transform(np.array([[1., 0., 0., 1., 0., 0., 1., 0., 0., 0.]]))

array([['female', 'from Asia', 'uses Chrome']], dtype=object)

enc.categories_

[array(['female', 'male'], dtype=object),

array(['from Africa', 'from Asia', 'from Europe', 'from US'], dtype=object),

array(['uses Chrome', 'uses Firefox', 'uses IE', 'uses Safari'],

dtype=object)]

enc.get_feature_names()  #renamed get_feature_names_out() in scikit-learn 1.0; the old name was removed in 1.2

array(['x0_female', 'x0_male', 'x1_from Africa', 'x1_from Asia',

'x1_from Europe', 'x1_from US', 'x2_uses Chrome',

'x2_uses Firefox', 'x2_uses IE', 'x2_uses Safari'], dtype=object)
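When categories are learned from the data instead of being listed, unseen values raise an error by default (`handle_unknown='error'` in the repr above). A sketch with `handle_unknown='ignore'`, where the unseen values 'from Asia' and 'uses Chrome' simply produce all-zero blocks:

```python
import numpy as np
from sklearn import preprocessing

X = [['male', 'from US', 'uses Safari'],
     ['female', 'from Europe', 'uses Firefox']]

# Categories learned from X only: 2 values per feature -> 6 output columns
enc = preprocessing.OneHotEncoder(handle_unknown='ignore').fit(X)

# 'female' is known; the unseen location and browser encode as all zeros
row = enc.transform([['female', 'from Asia', 'uses Chrome']]).toarray()
print(row)  # [[1. 0. 0. 0. 0. 0.]]
```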

#### K-bins discretization

help(preprocessing.KBinsDiscretizer):

class KBinsDiscretizer(sklearn.base.TransformerMixin,sklearn.base.BaseEstimator) Bin continuous data into intervals.

Parameters

n_bins : int or array-like, shape (n_features,) (default=5)

The number of bins to produce. Raises ValueError if n_bins < 2.

encode : {'onehot', 'onehot-dense', 'ordinal'}, (default='onehot')

Method used to encode the transformed result.

onehot: Encode the transformed result with one-hot encoding and return a sparse matrix. Ignored features are always stacked to the right.

onehot-dense: Encode the transformed result with one-hot encoding and return a dense array. Ignored features are always stacked to the right.

ordinal: Return the bin identifier encoded as an integer value.

strategy : {'uniform', 'quantile', 'kmeans'}, (default='quantile')

Strategy used to define the widths of the bins.

X=np.array([[-3,5.,15],

[0.,6.,14],

[6.,3.,11]])

est=preprocessing.KBinsDiscretizer(n_bins=[3,2,2],encode='ordinal').fit(X)

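#The bins are half-open: closed on the left, open on the right (the last bin also includes the maximum)

"""

Feature 1: [-3,-1) --> 0;

[-1,2) --> 1;

[2,6] --> 2

"""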

est.bin_edges_

array([array([-3., -1., 2., 6.]), array([3., 5., 6.]),

array([11., 14., 15.])], dtype=object)

est.transform(X)

array([[0., 1., 1.],

[1., 1., 1.],

[2., 0., 0.]])
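The example above uses the default `strategy='quantile'`. For comparison, a sketch with `strategy='uniform'` on the first feature only: the edges are equally spaced between the column minimum and maximum, and the bin index can be reproduced with `np.searchsorted` on the interior edges (left-closed bins).

```python
import numpy as np
from sklearn import preprocessing

X = np.array([[-3., 5., 15.],
              [0., 6., 14.],
              [6., 3., 11.]])
col = X[:, [0]]  # first feature only, kept 2-D

# strategy='uniform': equal-width bins between the column min and max
est = preprocessing.KBinsDiscretizer(n_bins=3, encode='ordinal', strategy='uniform').fit(col)
print(est.bin_edges_[0])  # [-3.  0.  3.  6.]

# Same binning by hand: interior edges + searchsorted (left-closed bins)
edges = np.linspace(col.min(), col.max(), 3 + 1)
manual = np.searchsorted(edges[1:-1], col.ravel(), side='right')
print(manual)                      # [0 1 2]
print(est.transform(col).ravel())  # [0. 1. 2.]
```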

help(preprocessing.Binarizer): binarization (the default threshold is 0)

class Binarizer(sklearn.base.TransformerMixin, sklearn.base.BaseEstimator)

| Binarize data (set feature values to 0 or 1) according to a threshold

| Values greater than the threshold map to 1, while values less than or equal to the threshold map to 0. With the default threshold of 0, only positive values map to 1.

| Binarization is a common operation on text count data where the analyst can decide to only consider the presence or absence of a feature rather than a quantified number of occurrences for instance.

| It can also be used as a pre-processing step for estimators that consider boolean random variables (e.g. modelled using the Bernoulli distribution in a Bayesian setting).

Parameters

| threshold : float, optional (0.0 by default)

| Feature values below or equal to this are replaced by 0, above it by 1.

| Threshold may not be less than 0 for operations on sparse matrices.

binarizer = preprocessing.Binarizer(threshold=5.5).fit(X) # fit does nothing

binarizer.transform(X)

array([[0., 0., 1.],

[0., 1., 1.],

[1., 0., 1.]])
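Since `fit` learns nothing, Binarizer boils down to a strict comparison with the threshold. A minimal equivalent on the same `X` and `threshold=5.5`:

```python
import numpy as np
from sklearn import preprocessing

X = np.array([[-3., 5., 15.],
              [0., 6., 14.],
              [6., 3., 11.]])
threshold = 5.5

# Strictly greater than the threshold -> 1, otherwise -> 0
manual = (X > threshold).astype(float)
binarized = preprocessing.Binarizer(threshold=threshold).fit_transform(X)
print(np.array_equal(manual, binarized))  # True
```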

#### Generating polynomial features

help(preprocessing.PolynomialFeatures)

class PolynomialFeatures(sklearn.base.TransformerMixin,sklearn.base.BaseEstimator)

| Generate polynomial and interaction features.

|

| Generate a new feature matrix consisting of all polynomial combinations of the features with degree less than or equal to the specified degree.

| For example, if an input sample is two dimensional and of the form [a, b], the degree-2 polynomial features are [1, a, b, a^2, ab, b^2].

Parameters

degree : integer, default = 2

The degree of the polynomial features.

interaction_only : boolean, default = False

If true, only interaction features are produced: features that are products of at most degree distinct input features (so not x[1] ** 2, x[0] * x[2] ** 3, etc.).

include_bias : boolean, default = True

If True, then include a bias column, the feature in which all polynomial powers are zero (i.e. a column of ones, which acts as an intercept term in a linear model).

X=np.arange(6).reshape(3,2)

X

array([[0, 1],

[2, 3],

[4, 5]])

poly=preprocessing.PolynomialFeatures(2)

poly.fit_transform(X)

array([[ 1., 0., 1., 0., 0., 1.],

[ 1., 2., 3., 4., 6., 9.],

[ 1., 4., 5., 16., 20., 25.]])

poly1=preprocessing.PolynomialFeatures(1)

poly1.fit_transform(X)

array([[1., 0., 1.],

[1., 2., 3.],

[1., 4., 5.]])
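The degree-2 layout `[1, a, b, a^2, ab, b^2]` from the help text can be built by hand and compared with the output above; the sketch also shows that `include_bias=False` drops the leading column of ones.

```python
import numpy as np
from sklearn import preprocessing

X = np.arange(6).reshape(3, 2)
a, b = X[:, 0], X[:, 1]

# Degree-2 expansion of [a, b]: [1, a, b, a^2, ab, b^2]
manual = np.column_stack([np.ones(len(X)), a, b, a**2, a * b, b**2])

poly = preprocessing.PolynomialFeatures(2)
print(np.allclose(manual, poly.fit_transform(X)))  # True

# include_bias=False removes the bias (all-ones) column
no_bias = preprocessing.PolynomialFeatures(2, include_bias=False).fit_transform(X)
print(no_bias.shape)  # (3, 5)
```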

#Interaction terms only

X=np.arange(9).reshape(3,3)

X

array([[0, 1, 2],

[3, 4, 5],

[6, 7, 8]])

poly=preprocessing.PolynomialFeatures(degree=3,interaction_only=True)

poly.fit_transform(X)

#The features go from (x1,x2,x3) to (1,x1,x2,x3,x1x2,x1x3,x2x3,x1x2x3)

array([[ 1., 0., 1., 2., 0., 0., 2., 0.],

[ 1., 3., 4., 5., 12., 15., 20., 60.],

[ 1., 6., 7., 8., 42., 48., 56., 336.]])
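With `interaction_only=True`, each output column is the product of a set of 0 to `degree` distinct input columns (the empty set gives the bias column). A sketch that enumerates those sets with `itertools.combinations` and reproduces the matrix above in the same column order:

```python
import itertools

import numpy as np
from sklearn import preprocessing

X = np.arange(9).reshape(3, 3)
degree = 3

# Products over every subset of 0..degree distinct columns (no squares)
columns = []
for k in range(degree + 1):
    for combo in itertools.combinations(range(X.shape[1]), k):
        # the empty subset yields a product of 1, i.e. the bias column
        columns.append(np.prod(X[:, list(combo)], axis=1))
manual = np.column_stack(columns).astype(float)

poly = preprocessing.PolynomialFeatures(degree=3, interaction_only=True)
print(np.allclose(manual, poly.fit_transform(X)))  # True
```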

#### Custom transformations

help(preprocessing.FunctionTransformer)

transformer=preprocessing.FunctionTransformer(np.log1p,validate=True)

#np.log1p is applied element-wise to the whole array; it calculates log(1 + x).
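FunctionTransformer also accepts an `inverse_func`, which `inverse_transform` uses to undo the mapping. A minimal sketch pairing `np.log1p` with its exact inverse `np.expm1` (the pairing is an illustrative choice):

```python
import numpy as np
from sklearn import preprocessing

# np.expm1(x) = exp(x) - 1 undoes np.log1p(x) = log(1 + x)
transformer = preprocessing.FunctionTransformer(np.log1p, inverse_func=np.expm1, validate=True)

X = np.array([[0., 1.], [2., 3.]])
X_log = transformer.fit_transform(X)
X_back = transformer.inverse_transform(X_log)
print(np.allclose(X_back, X))  # True
```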

X=np.array([[0,1],[2,3]])

transformer.transform(X)

array([[0. , 0.69314718],

[1.09861229, 1.38629436]])

Original post: https://www.cnblogs.com/B-Hanan/p/12774056.html