X = tf.random_normal(shape=[100,1])

y_true = tf.matmul(X,[[0.8]])+0.7

weight = tf.Variable(initial_value=tf.random_normal(shape=[1,1]))

bias = tf.Variable(initial_value=tf.random_normal(shape=[1,1]))

y_predict = tf.matmul(X,weight)+bias

error = tf.reduce_mean(tf.square(y_predict - y_true))

tf.train.GradientDescentOptimizer(learning_rate=0.1).minimize(error)

Where learning_rate is the learning rate, which is generally a relatively small value between 0-1. Because the loss is to be minimized, the minimize() method of the gradient descent optimizer is called.

import os

os.environ[‘TF_CPP_MIN_LOG_LEVEL‘] = ‘2‘

import tensorflow as tf

def linear_regression():

# 1.Prepare data

X = tf.random_normal(shape=[100,1])

y_true = tf.matmul(X,[[0.8]]) + 0.7

# Construct weights and bias, use variables to create

weight = tf.Variable(initial_value=tf.random_normal(shape=[1,1]))

bias = tf.Variable(initial_value=tf.random_normal(shape=[1,1]))

y_predict = tf.matmul(X,weight) + bias

# 2.Construct loss function

error = tf.reduce_mean(tf.square(y_predict-y_true))

# 3.Optimization loss

optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1).minimize(error)

# Initialize variables

init = tf.global_variables_initializer()

# Start conversation

with tf.Session() as sess:

# Run initialization variables

sess.run(init)

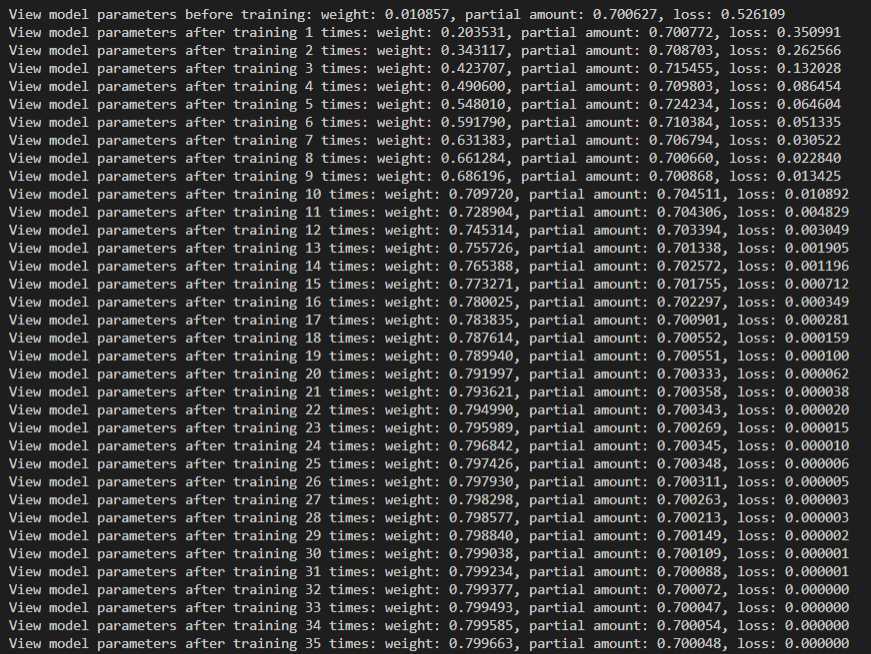

print(‘View model parameters before training: weight: %f, partial amount: %f, loss: %f‘%(weight.eval(),bias.eval(),error.eval()))

# Start training

for i in range(100):

sess.run(optimizer)

print(‘View model parameters after training %d times: weight: %f, partial amount: %f, loss: %f‘%((i+1), weight.eval(), bias.eval(), error.eval()))

if __name__ == ‘__main__‘:

linear_regression()

原文:https://www.cnblogs.com/monsterhy123/p/13089987.html