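Following the previous article, we can now obtain the speed-adjusted audio data, so the next step is to play it. At first I used NAudio, since the game's primary release platform is Steam and it mainly targets Windows. However, I ran into two problems with it.

Because of these two problems, I decided to write a new audio playback plugin directly against the native OpenAL API. There was another key reason as well: OpenAL is a lot like OpenGL, fairly low-level, and I personally enjoy low-level technology.

This article is split into four parts. I have added a short first part, an introduction to OpenAL; the remaining three parts follow the same layout as the previous articles.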

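Getting started with OpenAL

Type "openal" into a search engine and the first result is "OpenAL: Cross Platform 3D Audio". This is the official OpenAL website, and it is a reliable place to learn OpenAL. The home page points to the following resources:

Document 1 is the OpenAL 1.1 specification and reference, which describes in detail how to use OpenAL and some of its design rationale. It can be read selectively, which is what I did.

Document 2 covers the OpenAL API. It matters a lot for beginners, and especially for the plugin we are about to build, and it is easy to follow.

Last come the OpenAL downloads. Since this article is about using OpenAL, we go straight to setting up the environment.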

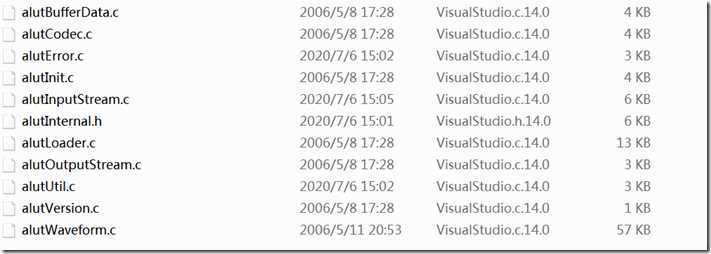

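OpenAL plugin development environment setup

Interface design and implementation

Consider what we need to implement. First, several sounds must be able to play at the same time: the video plugin we built earlier supports 10 simultaneous videos, so here we need to support 10 simultaneous audio streams as well. As with the video plugin, we need a class that holds the per-sound data, which we name AudioData, and a ring queue that stores the buffers, which we name BufferData. The interfaces to implement are listed next:

First, BufferData.h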

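    #pragma once
    #include <alc.h>
    #include <al.h>

    // Number of OpenAL buffers owned by each sound.
    const ALint maxValue = 20;

    // Ring queue of OpenAL buffer IDs that are free to be refilled.
    class BufferData
    {
    public:
        BufferData();
        ~BufferData();

        // Full when advancing circleEnd would make it collide with circleStart.
        inline bool isFull()
        {
            return (circleEnd == circleStart - 1 || (circleEnd == maxValue - 1 && circleStart == 0));
        }
        inline bool isEmpty() { return (circleStart == circleEnd); }

        // Return the first `length` IDs held in tempBuffer to the queue.
        inline void PushAll(int length)
        {
            for (int index = 0; index < length; index++)
            {
                Push(tempBuffer[index]);
            }
        }
        bool Push(ALuint value);
        ALuint Pop();

        ALuint tempBuffer[maxValue];   // scratch space filled by alSourceUnqueueBuffers
        ALuint acheBuffers[maxValue];  // ring storage for buffer IDs
        ALint circleStart = 0;         // next slot to pop
        ALint circleEnd = 0;           // last slot written by Push
    };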

BufferData.cpp

    #include "BufferData.h"

    BufferData::BufferData() {}
    BufferData::~BufferData() {}

    // Store a buffer ID in the slot after circleEnd; returns false when the ring is full.
    bool BufferData::Push(ALuint value)
    {
        if (isFull())
        {
            return false;
        }
        if (circleEnd < maxValue - 1)
        {
            acheBuffers[++circleEnd] = value;
        }
        else if (circleStart > 0)
        {
            // Wrap around to the first slot.
            circleEnd = 0;
            acheBuffers[circleEnd] = value;
        }
        return true;
    }

    // Take the buffer ID at circleStart; returns (ALuint)-1 when the ring is empty.
    ALuint BufferData::Pop()
    {
        if (circleStart == circleEnd)
        {
            return (ALuint)-1;
        }
        if (circleStart == maxValue - 1)
        {
            // Wrap around after reading the last slot.
            circleStart = 0;
            return acheBuffers[maxValue - 1];
        }
        return acheBuffers[circleStart++];
    }
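BufferData tells "full" from "empty" only by comparing circleStart and circleEnd, so with 20 slots it can report at most 19 stored IDs. A common alternative is to keep an explicit element count. The sketch below illustrates that idea without depending on OpenAL; FreeBufferRing and all of its members are hypothetical names and are not part of the plugin.

```cpp
#include <array>
#include <cstddef>

// Hypothetical alternative to BufferData: a fixed-capacity FIFO of free
// buffer IDs that tracks its size explicitly, so every slot is usable.
template <std::size_t Capacity>
class FreeBufferRing
{
public:
    // Append an ID; returns false when the ring is already full.
    bool push(unsigned int id)
    {
        if (count_ == Capacity) { return false; }
        slots_[(head_ + count_) % Capacity] = id;
        ++count_;
        return true;
    }

    // Remove the oldest ID into `out`; returns false when the ring is empty.
    bool pop(unsigned int& out)
    {
        if (count_ == 0) { return false; }
        out = slots_[head_];
        head_ = (head_ + 1) % Capacity;
        --count_;
        return true;
    }

    bool empty() const { return count_ == 0; }
    bool full() const { return count_ == Capacity; }
    std::size_t size() const { return count_; }

private:
    std::array<unsigned int, Capacity> slots_{};
    std::size_t head_ = 0;   // index of the oldest element
    std::size_t count_ = 0;  // number of stored elements
};
```

With this layout a ring created as `FreeBufferRing<20>` could hold all 20 buffer IDs at once, and an empty pop is reported through the return value instead of a sentinel ID.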

AudioData.h

    #pragma once
    #include "BufferData.h"
    #include <queue>

    class AudioData
    {
    public:
        AudioData();
        ~AudioData();

        /* playback source */
        ALuint source;
        /* audio data */
        ALuint* buffer;
        /* current playback state */
        ALint state;
        /* audio format */
        ALenum audioFormat = AL_FORMAT_STEREO16;
        /* number of channels */
        ALshort channel;
        /* sample rate in Hz */
        ALsizei sample = 44100;
        std::queue<ALuint> bufferQueue;
        /* ring queue of free buffer IDs */
        BufferData bufferData;
        /* true while this slot is assigned to a sound */
        bool isUsed = false;
    };

OpenALSound.h

    #pragma once
    #include <iostream>
    #include "AL/alut.h"
    #include <string>
    #include "AudioData.h"
    #include "BufferData.h"
    using namespace std;

    extern "C" __declspec(dllexport) int InitOpenAL();
    extern "C" __declspec(dllexport) int SetSoundPitch(int key, float value);
    extern "C" __declspec(dllexport) int SetSoundPlay(int key);
    extern "C" __declspec(dllexport) int SetSoundPause(int key);
    extern "C" __declspec(dllexport) int SetSoundStop(int key);
    extern "C" __declspec(dllexport) int SetSoundRewind(int key);
    extern "C" __declspec(dllexport) bool HasProcessedBuffer(int key);
    extern "C" __declspec(dllexport) int SendBuffer(int key, ALvoid* data, ALsizei length);
    extern "C" __declspec(dllexport) int SetSampleRate(int key, short channels, short bit, int samples);
    extern "C" __declspec(dllexport) int SetVolumn(int key, float value);
    extern "C" __declspec(dllexport) int Reset(int key);
    extern "C" __declspec(dllexport) int Clear(int key);

The implementation:

    #include "OpenALSound.h"

    /* playback device */
    ALCdevice* device;
    /* playback context */
    ALCcontext* context;
    static const size_t maxData = 10;
    AudioData audioArray[maxData];
    /* number of sounds currently sharing the device and context */
    int initCount = 0;

    /* Print `message` and return true if the last AL call failed. */
    static bool HasError(const char* message)
    {
        if (alGetError() == AL_NO_ERROR) { return false; }
        std::cout << message << std::endl;
        return true;
    }

    /* Create and configure the source for slot `key`; 0 on success, -1 on failure. */
    int SetSource(int key)
    {
        alGenSources((ALuint)1, &audioArray[key].source);
        if (HasError("create source failed")) { return -1; }
        alSourcef(audioArray[key].source, AL_GAIN, 1);
        if (HasError("set source gain error")) { return -1; }
        alSource3f(audioArray[key].source, AL_POSITION, 0, 0, 0);
        if (HasError("set source position error")) { return -1; }
        alSource3f(audioArray[key].source, AL_VELOCITY, 0, 0, 0);
        if (HasError("set source velocity error")) { return -1; }
        alSourcei(audioArray[key].source, AL_LOOPING, AL_FALSE);
        if (HasError("set source looping error")) { return -1; }
        return 0;
    }

    /* Reserve a free slot and return its key, or -1 on failure. */
    int InitOpenAL()
    {
        int ret = -1;
        for (size_t index = 0; index < maxData; index++)
        {
            if (!audioArray[index].isUsed) { ret = (int)index; break; }
        }
        if (ret == -1) { return ret; }

        if (initCount == 0)
        {
            // No context is current yet, so check ALC errors with alcGetError rather than alGetError.
            const ALchar* deviceName = alcGetString(NULL, ALC_DEVICE_SPECIFIER);
            if (alcGetError(NULL) != ALC_NO_ERROR)
            {
                std::cout << "get device error" << std::endl;
                return -1;
            }
            // Open the device.
            device = alcOpenDevice(deviceName);
            if (!device)
            {
                std::cout << "open device failed" << std::endl;
                return -1;
            }
            // Create the context and make it current.
            context = alcCreateContext(device, NULL);
            if (!context || !alcMakeContextCurrent(context))
            {
                std::cout << "make context failed" << std::endl;
                return -1;
            }
        }

        if (SetSource(ret) == -1) { return -1; }
        alGenBuffers(maxValue, audioArray[ret].bufferData.acheBuffers);
        if (alGetError() != AL_NO_ERROR) { return -1; }
        // Every generated buffer starts out free.
        audioArray[ret].bufferData.circleStart = 0;
        audioArray[ret].bufferData.circleEnd = maxValue - 1;
        audioArray[ret].isUsed = true;
        initCount++;
        return ret;
    }

    /* Map channel count and bits per sample to an OpenAL format; -1 if unsupported. */
    ALenum to_al_format(int key, short channels, short bits)
    {
        bool stereo = (channels > 1);
        switch (bits)
        {
        case 16: return stereo ? AL_FORMAT_STEREO16 : AL_FORMAT_MONO16;
        case 8:  return stereo ? AL_FORMAT_STEREO8 : AL_FORMAT_MONO8;
        default: return (ALenum)-1;
        }
    }

    /* Set the volume (gain). */
    int SetVolumn(int key, float value)
    {
        alSourcef(audioArray[key].source, AL_GAIN, value);
        return (alGetError() != AL_NO_ERROR) ? -1 : 0;
    }

    /* Set the playback speed (pitch). */
    int SetSoundPitch(int key, float value)
    {
        if (audioArray[key].source == 0) { return -1; }
        // Pass the requested value; the original always passed 1.
        alSourcef(audioArray[key].source, AL_PITCH, value);
        return (alGetError() != AL_NO_ERROR) ? -1 : 0;
    }

    /* Start playback. */
    int SetSoundPlay(int key)
    {
        if (audioArray[key].source == 0) { return -1; }
        alGetSourcei(audioArray[key].source, AL_SOURCE_STATE, &audioArray[key].state);
        if (audioArray[key].state == AL_PLAYING) { return 0; }
        alSourcePlay(audioArray[key].source);
        return (alGetError() != AL_NO_ERROR) ? -1 : 0;
    }

    /* Pause playback. */
    int SetSoundPause(int key)
    {
        if (audioArray[key].source == 0) { return -1; }
        alGetSourcei(audioArray[key].source, AL_SOURCE_STATE, &audioArray[key].state);
        if (audioArray[key].state == AL_PAUSED) { return 0; }
        alSourcePause(audioArray[key].source);
        return (alGetError() != AL_NO_ERROR) ? -1 : 0;
    }

    /* Stop playback. */
    int SetSoundStop(int key)
    {
        if (audioArray[key].source == 0) { return -1; }
        alGetSourcei(audioArray[key].source, AL_SOURCE_STATE, &audioArray[key].state);
        if (audioArray[key].state == AL_STOPPED) { return 0; }
        alSourceStop(audioArray[key].source);
        return (alGetError() != AL_NO_ERROR) ? -1 : 0;
    }

    /* Rewind the source to its initial state. */
    int SetSoundRewind(int key)
    {
        if (audioArray[key].source == 0) { return -1; }
        alGetSourcei(audioArray[key].source, AL_SOURCE_STATE, &audioArray[key].state);
        if (audioArray[key].state == AL_INITIAL) { return 0; }
        alSourceRewind(audioArray[key].source);
        return (alGetError() != AL_NO_ERROR) ? -1 : 0;
    }

    /* Reclaim buffers that finished playing; true if a free buffer is available. */
    bool HasProcessedBuffer(int key)
    {
        ALint a = -1;
        alGetSourcei(audioArray[key].source, AL_BUFFERS_PROCESSED, &a);
        if (alGetError() != AL_NO_ERROR) { return false; }
        if (a > 0)
        {
            alSourceUnqueueBuffers(audioArray[key].source, a, audioArray[key].bufferData.tempBuffer);
            audioArray[key].bufferData.PushAll(a);
        }
        return !audioArray[key].bufferData.isEmpty();
    }

    /* Fill a free buffer with audio data and queue it on the source. */
    int SendBuffer(int key, ALvoid* data, ALsizei length)
    {
        // Clear any stale error before taking a buffer, so a buffer is not lost on an unrelated error.
        alGetError();
        ALuint temp = audioArray[key].bufferData.Pop();
        if (temp == (ALuint)-1) { return -1; }
        alBufferData(temp, audioArray[key].audioFormat, data, length, audioArray[key].sample);
        if (alGetError() != AL_NO_ERROR) { return -1; }
        alSourceQueueBuffers(audioArray[key].source, 1, &temp);
        return 0;
    }

    /* Set the channel count, bits per sample and sample rate. */
    int SetSampleRate(int key, short channels, short bit, int samples)
    {
        // Store the computed format; the original discarded the result.
        ALenum format = to_al_format(key, channels, bit);
        if (format == (ALenum)-1) { return -1; }
        audioArray[key].channel = channels;
        audioArray[key].sample = samples;
        audioArray[key].audioFormat = format;
        return 0;
    }

    /* Stop the sound and reclaim its processed buffers. */
    int Reset(int key)
    {
        SetSoundStop(key);
        ALint a = -1;
        alGetSourcei(audioArray[key].source, AL_BUFFERS_PROCESSED, &a);
        if (alGetError() != AL_NO_ERROR) { return -1; }
        if (a > 0)
        {
            alSourceUnqueueBuffers(audioArray[key].source, a, audioArray[key].bufferData.tempBuffer);
            audioArray[key].bufferData.PushAll(a);
        }
        return 0;
    }

    /* Release the sound; the last release also closes the context and device. */
    int Clear(int key)
    {
        alDeleteSources(1, &audioArray[key].source);
        alDeleteBuffers(maxValue, audioArray[key].bufferData.acheBuffers);
        initCount--;
        if (initCount == 0)
        {
            device = alcGetContextsDevice(context);
            alcMakeContextCurrent(NULL);
            alcDestroyContext(context);
            alcCloseDevice(device);
        }
        audioArray[key].isUsed = false;
        return 0;
    }
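HasProcessedBuffer and SendBuffer together form a streaming loop: reclaim the buffers the source has finished playing, then refill and queue them again. The sketch below shows that flow in isolation so it can run without an audio device. FakeSource, pumpStream and their members are hypothetical stand-ins invented for this illustration, not OpenAL calls; the comments mark where the real calls would go.

```cpp
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical stand-in for an OpenAL source.
struct FakeSource
{
    std::deque<unsigned int> queued;  // buffer IDs queued on the source, oldest first
    std::size_t processed = 0;        // how many IDs at the front have finished playing

    // Plays the role of alSourceQueueBuffers for a single buffer.
    void queue(unsigned int id) { queued.push_back(id); }

    // Plays the role of alSourceUnqueueBuffers: hands back every finished buffer.
    std::vector<unsigned int> unqueueProcessed()
    {
        std::vector<unsigned int> done;
        while (processed > 0)
        {
            done.push_back(queued.front());
            queued.pop_front();
            --processed;
        }
        return done;
    }

    // Simulation helper: pretend playback finished `n` more buffers.
    void finish(std::size_t n)
    {
        processed += n;
        if (processed > queued.size()) { processed = queued.size(); }
    }
};

// One streaming step: recycle finished buffers, then submit as many pending
// chunks as there are free buffers. Returns the number of chunks submitted.
std::size_t pumpStream(FakeSource& source,
                       std::deque<unsigned int>& freeIds,
                       std::deque<int>& pendingChunks)
{
    for (unsigned int id : source.unqueueProcessed())
    {
        freeIds.push_back(id);
    }
    std::size_t sent = 0;
    while (!freeIds.empty() && !pendingChunks.empty())
    {
        unsigned int id = freeIds.front();
        freeIds.pop_front();
        pendingChunks.pop_front();  // real code would call alBufferData(id, ...) here
        source.queue(id);
        ++sent;
    }
    return sent;
}
```

A caller would invoke a step like this repeatedly, for example once per frame, which mirrors how the plugin's HasProcessedBuffer and SendBuffer are meant to be driven by the host.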

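Things to note

1. initCount is declared globally to track whether the device and context have already been initialized. initCount == 0 means they have not been initialized. The value is incremented each time a sound is added and decremented each time a sound is released; when it drops back to 0 on release, the device and context are released as well.

2. Because sound is played from the chunks of audio data passed in, the plugin uses a buffer queue both to load audio data and to reclaim buffers that have finished playing. See the official API documentation for the details of these operations.

3. The code below is my test that plays 10 sounds at the same time. It worked in my testing.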

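    /* Load the test WAV file into memory; returns NULL on failure. */
    ALvoid* LoadFile(int key, ALsizei& size)
    {
        ALsizei freq;
        ALvoid* data = NULL;
        ALboolean loop = AL_FALSE;
        ALbyte name[] = "2954.wav";
        alutLoadWAVFile(name, &audioArray[key].audioFormat, &data, &size, &freq, &loop);
        if (alGetError() != AL_NO_ERROR)
        {
            return NULL;
        }
        return data;
    }

    int main()
    {
        float volume;
        ALsizei size;
        for (int index = 0; index < 10; index++)
        {
            // Use the key returned by InitOpenAL rather than assuming it equals index.
            int key = InitOpenAL();
            if (key == -1 || SetSampleRate(key, 1, 16, 11025) == -1)
            {
                std::cin >> volume;  // wait for input before exiting
                return -1;
            }
            ALvoid* data = LoadFile(key, size);
            if (data == NULL) { return -1; }
            // Fill every free buffer, then start playback.
            while (HasProcessedBuffer(key))
            {
                SendBuffer(key, data, size);
            }
            SetSoundPlay(key);
        }
        // Read volume values for sound 0 until 0 is entered.
        while (std::cin >> volume)
        {
            SetVolumn(0, volume);
            if (volume == 0) { break; }
        }
        // Release every sound so the device and context are closed too.
        for (int key = 0; key < 10; key++)
        {
            Clear(key);
        }
    }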
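Note 1 describes a reference count: the first sound opens the shared device and context, and the last release closes them. The sketch below isolates that pattern so it can run without OpenAL. SharedAudioDevice and its members are hypothetical; the comments mark where the real ALC calls would sit.

```cpp
// Hypothetical stand-in for the shared device/context pair.
struct SharedAudioDevice
{
    int users = 0;       // plays the role of initCount
    bool open = false;   // true while the device and context exist
    int openCalls = 0;   // how many times the device was opened
    int closeCalls = 0;  // how many times the device was closed

    // Called when a sound is created.
    void acquire()
    {
        if (users == 0)
        {
            // Real code: alcOpenDevice, alcCreateContext, alcMakeContextCurrent.
            open = true;
            ++openCalls;
        }
        ++users;
    }

    // Called when a sound is released.
    void release()
    {
        if (users == 0) { return; }  // ignore an unbalanced release
        --users;
        if (users == 0)
        {
            // Real code: alcMakeContextCurrent(NULL), alcDestroyContext, alcCloseDevice.
            open = false;
            ++closeCalls;
        }
    }
};
```

The guard against an unbalanced release is an addition in this sketch; the plugin's Clear decrements initCount unconditionally, so callers there must make sure each key is cleared only once.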

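That covers essentially all of the plugin pieces used by the video player. Starting with the next article, we will look at implementing the video player plugin in Unity3D.

Original: https://www.cnblogs.com/sauronKing/p/13346450.html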