Deployment plan

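Memory is limited, so to keep the lab simple all three nodes act as both master and worker nodes. In production, deploy master nodes and worker nodes separately.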

192.168.30.90 k8s-master-1

192.168.30.91 k8s-master-2

192.168.30.92 k8s-master-3

Configure the yum repository for Docker CE (community edition)

[root@k8s-master-1 ~]# vim /etc/yum.repos.d/docker-ce.repo

[docker-ce]

name=docker-ce

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable

gpgcheck=0

enabled=1

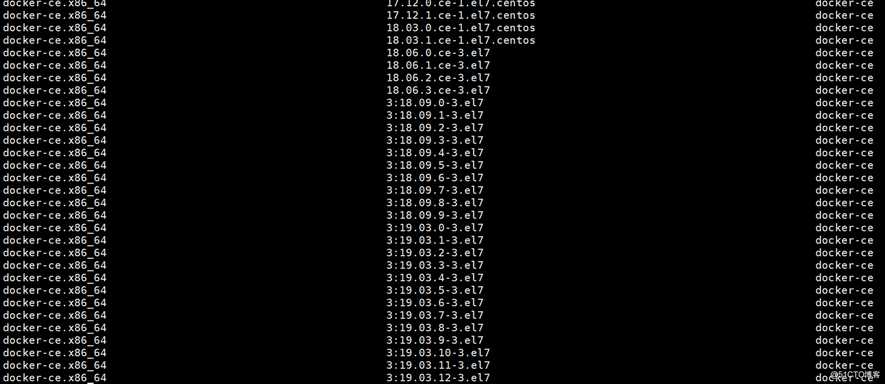

List every available docker-ce version, then install the one you need

[root@k8s-master-1 yum.repos.d]# yum list docker-ce --showduplicates

This guide installs version 18.09

[root@k8s-master-1 yum.repos.d]# yum install docker-ce-18.09.9-3.el7 -y

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun #alternative: a single command that adds the yum repository and installs the latest docker-ce

[root@k8s-master-1 ~]# mkdir /etc/docker/ -pv

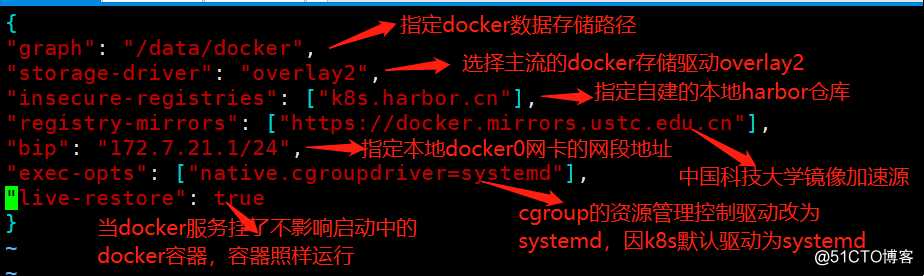

[root@k8s-master-1 ~]# vim /etc/docker/daemon.json
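The contents of daemon.json appear only in a screenshot. The following is a minimal sketch of a typical file for this setup, not the author's exact configuration. It makes three assumptions:

- Docker data lives under /data/docker, the directory inspected below.
- Each node gets its own docker0 subnet from the 172.7.0.0/16 Pod network. 172.7.21.1/24 for master-1 is a guess, chosen to fit the Pod address 172.7.22.2 seen on master-2 later.
- Docker uses the systemd cgroup driver, so kubelet must match it with `--cgroup-driver systemd`.

The script writes to ./etc/docker unless DOCKER_CONF_DIR is set, so you can try it without root.

```shell
set -eu
# Defaults to a local demo path; on the real host use DOCKER_CONF_DIR=/etc/docker.
DOCKER_CONF_DIR="${DOCKER_CONF_DIR:-./etc/docker}"
# Hypothetical docker0 subnet per node, carved from the 172.7.0.0/16 Pod network:
# 172.7.21.1/24 on master-1, 172.7.22.1/24 on master-2, 172.7.23.1/24 on master-3.
DOCKER_BIP="${DOCKER_BIP:-172.7.21.1/24}"

mkdir -p "$DOCKER_CONF_DIR"
# Unquoted heredoc so ${DOCKER_BIP} is expanded into the file.
cat > "$DOCKER_CONF_DIR/daemon.json" <<EOF
{
  "data-root": "/data/docker",
  "storage-driver": "overlay2",
  "bip": "${DOCKER_BIP}",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "live-restore": true
}
EOF
echo "wrote $DOCKER_CONF_DIR/daemon.json"
```

On master-2 and master-3, pass their own DOCKER_BIP. Whatever bip you choose must later agree with FLANNEL_SUBNET in flannel's subnet.env on the same node.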

[root@k8s-master-1 ~]# systemctl daemon-reload

[root@k8s-master-1 ~]# systemctl start docker

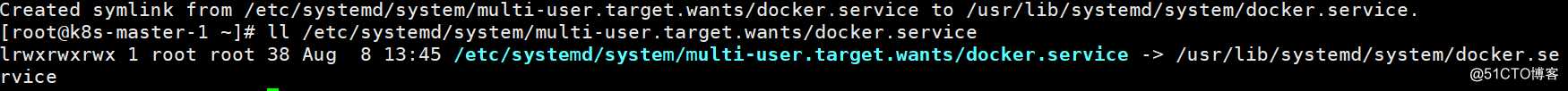

[root@k8s-master-1 ~]# systemctl enable docker

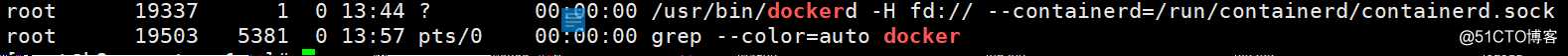

[root@k8s-master-1 ~]# ps -ef | grep docker #check the running docker processes

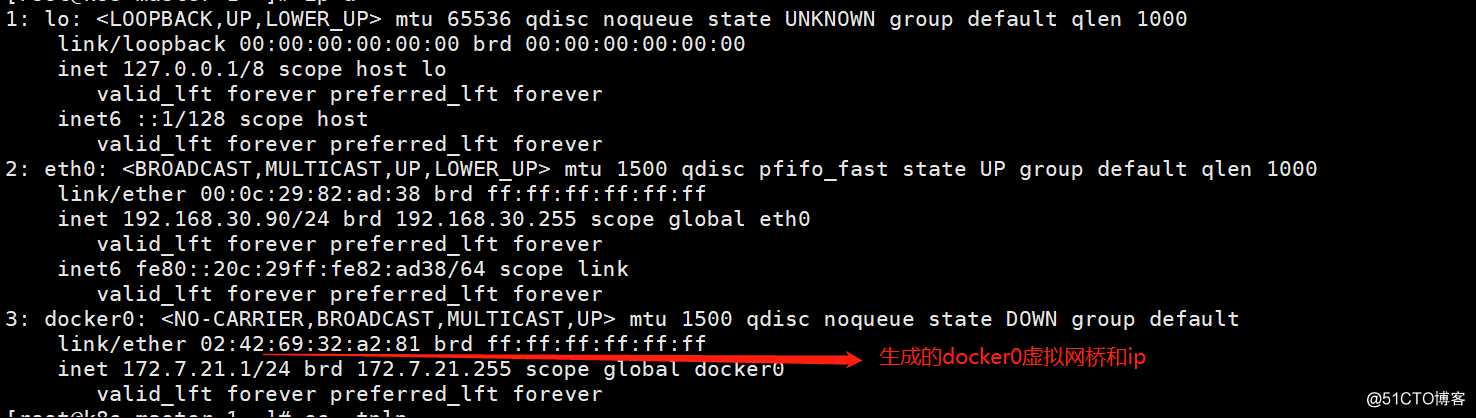

[root@k8s-master-1 ~]# ip a #check the docker0 bridge

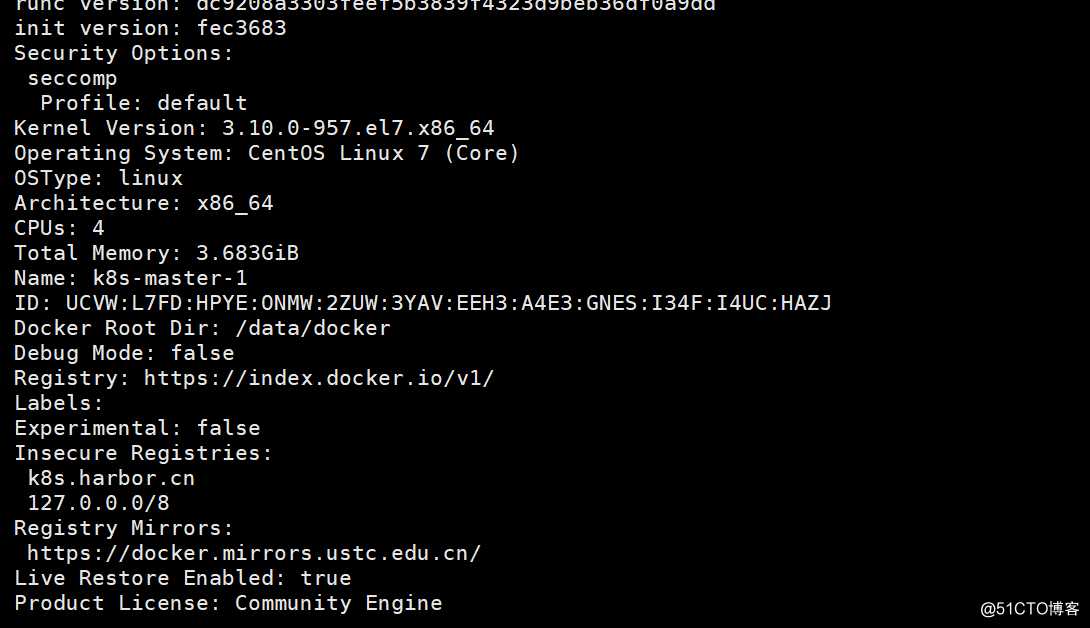

[root@k8s-master-1 ~]# docker info #show docker's runtime information after startup

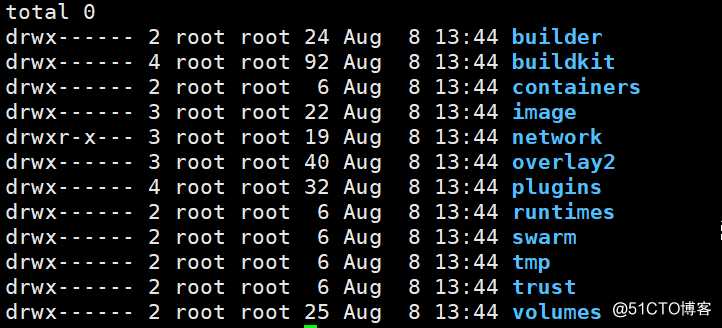

[root@k8s-master-1 ~]# ll /data/docker/ #data directory created by docker on startup

[root@k8s-master-1 ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

[root@k8s-master-1 ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

[root@k8s-master-1 ~]# chmod +x /usr/bin/cfssl* #make the tools executable

[root@k8s-master-1 ~]# mkdir /certs #directory dedicated to issuing and storing certificates

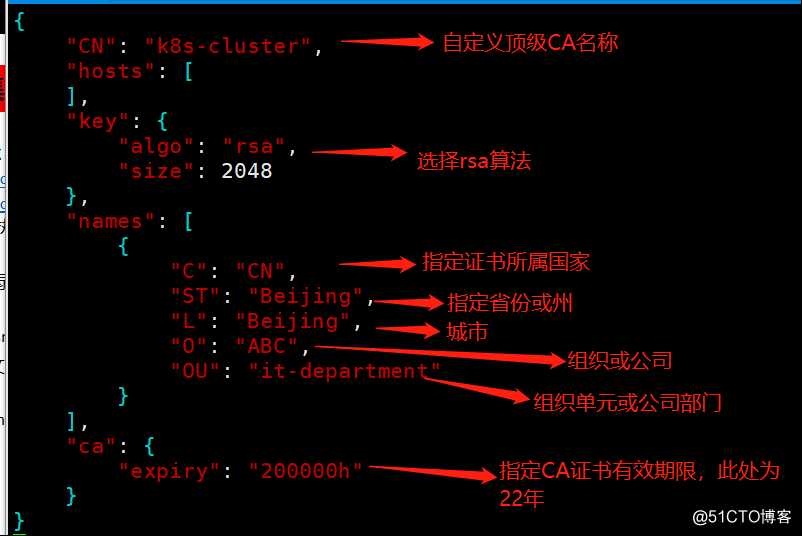

[root@k8s-master-1 certs]# cfssl print-defaults csr > ca-csr.json #generate a template CA signing request, ca-csr.json

[root@k8s-master-1 certs]# vim ca-csr.json #edit the template into a custom CA request, as follows


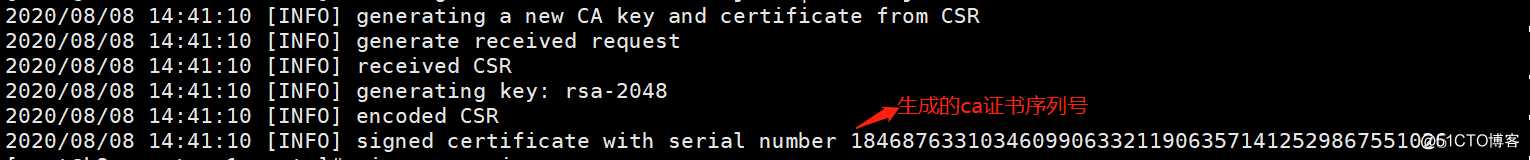
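The edited request is only shown as a screenshot. Below is a minimal sketch of a cfssl CA request. The subject values (CN `k8s-ca` and the `names` block) are placeholders. The 20-year lifetime in `ca.expiry` is also an assumption; that field is what `cfssl gencert -initca` uses for the CA's validity. The script writes to ./certs unless CERTS_DIR is set (the article works in /certs).

```shell
set -eu
CERTS_DIR="${CERTS_DIR:-./certs}"   # the article works in /certs
mkdir -p "$CERTS_DIR"
# Subject values are placeholders; "ca.expiry" sets the CA lifetime (175200h = 20 years).
cat > "$CERTS_DIR/ca-csr.json" <<'EOF'
{
  "CN": "k8s-ca",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "k8s", "OU": "ops" }
  ],
  "ca": { "expiry": "175200h" }
}
EOF
```

Running `cfssl gencert -initca ca-csr.json | cfssl-json -bare ca` on this file then produces ca.pem, ca-key.pem and ca.csr.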

[root@k8s-master-1 certs]# cfssl gencert -initca ca-csr.json | cfssl-json -bare ca #initialize a self-signed CA from the request; -bare writes plain files (ca.pem, ca-key.pem, ca.csr) instead of JSON

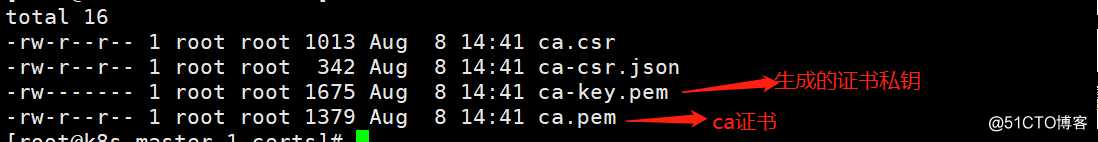

[root@k8s-master-1 certs]# ll #list the generated certificate and private key; the other generated file is not needed and can be deleted

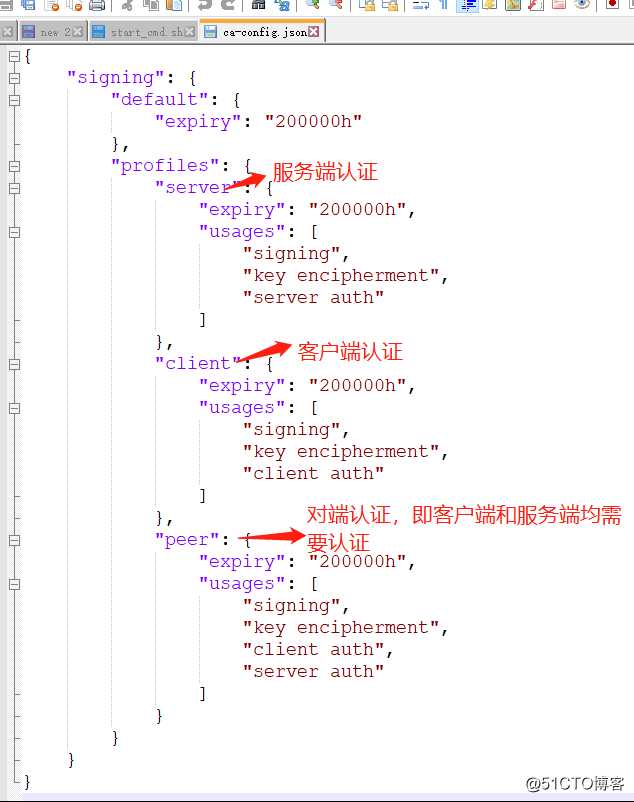

Generate the CA signing configuration file

[root@k8s-master-1 certs]# cfssl print-defaults config > ca-config.json

Edit the CA configuration to define the certificate types (profiles) it signs, as follows

[root@k8s-master-1 certs]# vim ca-config.json

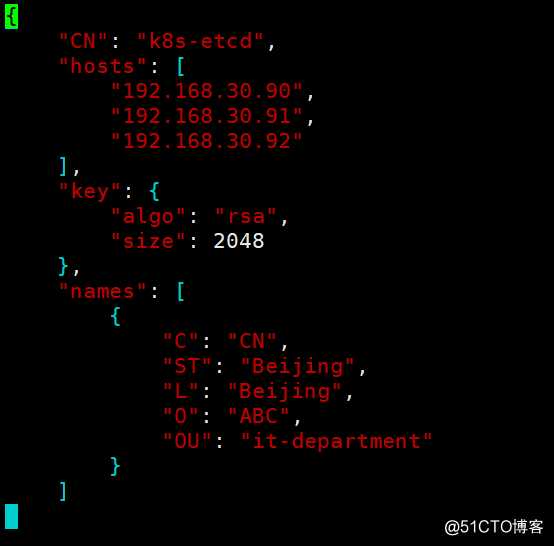
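The edited ca-config.json is only shown as a screenshot. This minimal sketch defines the three profile names the article later passes with `-profile`: `server`, `client` and `peer`. The key usages follow the usual meaning of each profile. The expiry values are assumptions.

```shell
set -eu
CERTS_DIR="${CERTS_DIR:-./certs}"   # the article works in /certs
mkdir -p "$CERTS_DIR"
# server: TLS server certs (apiserver, kubelet serving cert)
# client: TLS client certs (etcd clients, kube-proxy)
# peer:   both, for etcd members that act as client and server to each other
cat > "$CERTS_DIR/ca-config.json" <<'EOF'
{
  "signing": {
    "default": { "expiry": "175200h" },
    "profiles": {
      "server": {
        "expiry": "175200h",
        "usages": ["signing", "key encipherment", "server auth"]
      },
      "client": {
        "expiry": "175200h",
        "usages": ["signing", "key encipherment", "client auth"]
      },
      "peer": {
        "expiry": "175200h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF
```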

Create the etcd certificate signing request

[root@k8s-master-1 certs]# vim etcd-peer-csr.json

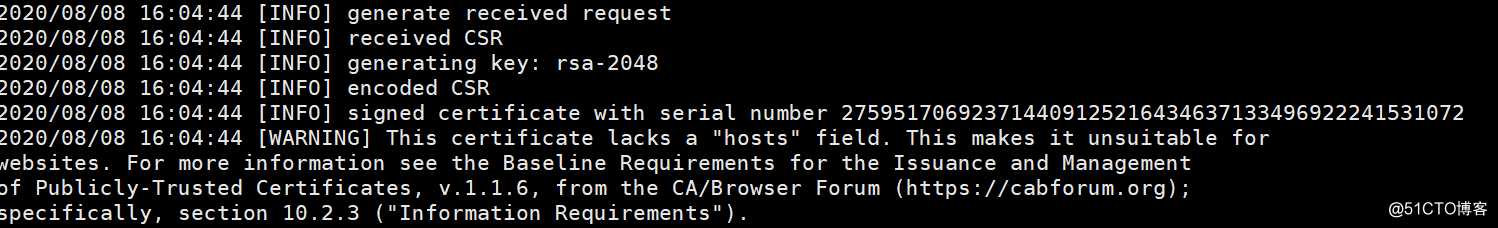
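A minimal sketch of the etcd request. Because it is signed with the `peer` profile, the same certificate serves both client and member-to-member TLS. Its `hosts` list should contain every address etcd listens on: the three node IPs from the deployment plan, plus 127.0.0.1 for local access. The CN and subject fields are placeholders.

```shell
set -eu
CERTS_DIR="${CERTS_DIR:-./certs}"   # the article works in /certs
mkdir -p "$CERTS_DIR"
# hosts = SANs: every address etcd is reached on (node IPs from the plan + loopback).
cat > "$CERTS_DIR/etcd-peer-csr.json" <<'EOF'
{
  "CN": "k8s-etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.30.90",
    "192.168.30.91",
    "192.168.30.92"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "k8s", "OU": "ops" }
  ]
}
EOF
```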

Issue the etcd certificate from this request. -ca: the root certificate; -ca-key: the CA private key; -config: the CA configuration; -profile: the type of certificate to issue

[root@k8s-master-1 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json | cfssl-json -bare etcd-peer

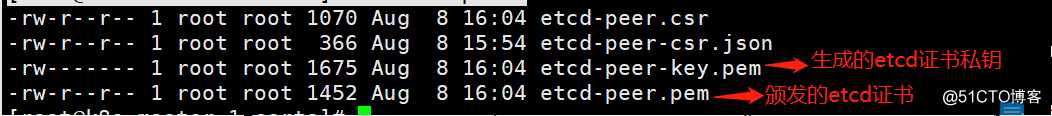

List the issued certificate files

[root@k8s-master-1 certs]# ll etcd-peer*

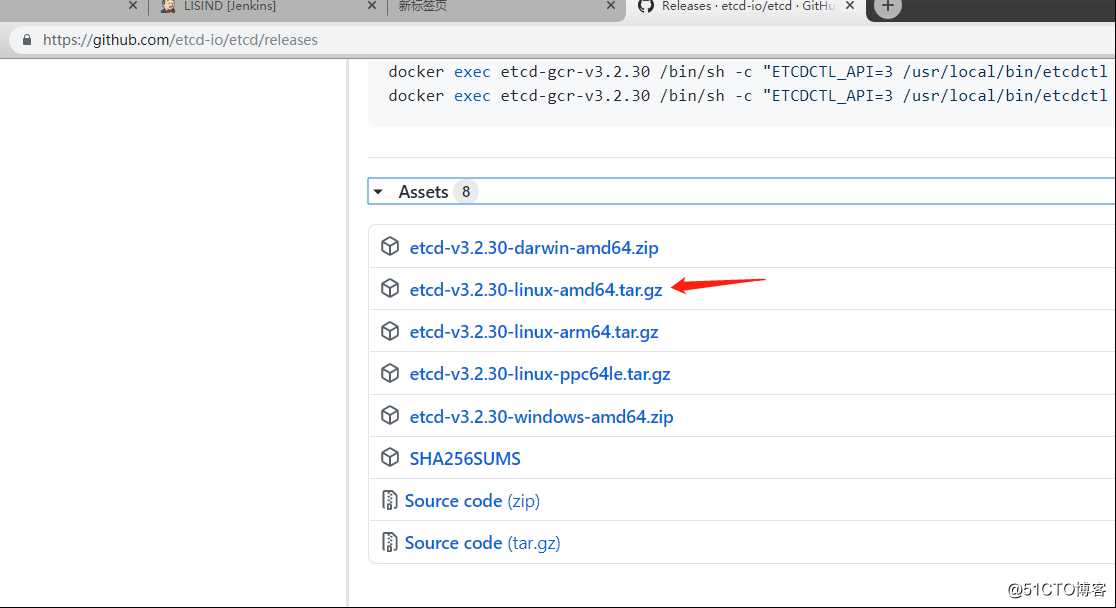

Visit https://github.com/etcd-io/etcd/releases. Version 3.3 may be unstable, so this guide uses 3.2: locate the etcd tarball in the v3.2.30 release assets and download it with curl or wget

[root@k8s-master-1 ~]# curl -L https://github.com/etcd-io/etcd/releases/download/v3.2.30/etcd-v3.2.30-linux-amd64.tar.gz -o /tmp/etcd-v3.2.30-linux-amd64.tar.gz

[root@k8s-master-1 ~]# mkdir /kubernetes -pv #create the cluster deployment directory

[root@k8s-master-1 kubernetes]# tar xvf /tmp/etcd-v3.2.30-linux-amd64.tar.gz -C /kubernetes/ #extract into that directory

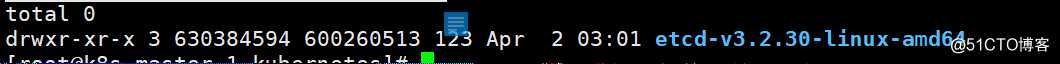

[root@k8s-master-1 kubernetes]# ll #list the extracted directory

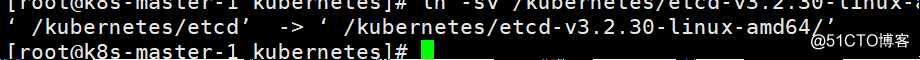

[root@k8s-master-1 kubernetes]# ln -sv /kubernetes/etcd-v3.2.30-linux-amd64/ /kubernetes/etcd #create a symlink to make later upgrades easier

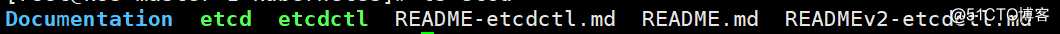

[root@k8s-master-1 kubernetes]# ls etcd #list the etcd binaries

Create the etcd startup script

[root@k8s-master-1 ~]# vim /kubernetes/etcd/etcd-start.sh
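The startup script itself is not included in the original text. Below is a minimal sketch that generates one. It assumes these things:

- Member names follow a made-up `etcd-server-<last octet>` scheme.
- Certificates live in ./certs, relative to /kubernetes/etcd, as created below.
- Besides TLS, etcd also serves plain HTTP on 127.0.0.1:2379. That is what lets the later `etcdctl cluster-health` and `etcdctl set` commands run without certificate flags.

All flags are standard etcd 3.2 options.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/etcd}"   # the article uses /kubernetes/etcd
NODE_IP="${NODE_IP:-192.168.30.90}"       # this node's address; change per node
mkdir -p "$OUT_DIR"
{
  echo '#!/bin/bash'
  echo "NODE_IP=${NODE_IP}"
  # Quoted heredoc: the rest is written verbatim and evaluated when the script runs.
  cat <<'EOF'
# Hypothetical member naming: etcd-server-90, etcd-server-91, etcd-server-92.
NAME="etcd-server-${NODE_IP##*.}"
CLUSTER="etcd-server-90=https://192.168.30.90:2380,etcd-server-91=https://192.168.30.91:2380,etcd-server-92=https://192.168.30.92:2380"
exec ./etcd --name "$NAME" \
  --data-dir /data/etcd/etcd-server \
  --listen-peer-urls "https://${NODE_IP}:2380" \
  --listen-client-urls "https://${NODE_IP}:2379,http://127.0.0.1:2379" \
  --initial-advertise-peer-urls "https://${NODE_IP}:2380" \
  --advertise-client-urls "https://${NODE_IP}:2379,http://127.0.0.1:2379" \
  --initial-cluster "$CLUSTER" \
  --initial-cluster-state new \
  --cert-file ./certs/etcd-peer.pem \
  --key-file ./certs/etcd-peer-key.pem \
  --client-cert-auth \
  --trusted-ca-file ./certs/ca.pem \
  --peer-cert-file ./certs/etcd-peer.pem \
  --peer-key-file ./certs/etcd-peer-key.pem \
  --peer-client-cert-auth \
  --peer-trusted-ca-file ./certs/ca.pem
EOF
} > "$OUT_DIR/etcd-start.sh"
chmod +x "$OUT_DIR/etcd-start.sh"
```

The whole /kubernetes directory is later copied with scp, so master-2 and master-3 receive master-1's version. With this sketch, change the `NODE_IP=` line on each of them to 192.168.30.91 or 192.168.30.92 before starting etcd.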

[root@k8s-master-1 ~]# chmod +x /kubernetes/etcd/etcd-start.sh #make it executable

Create the directories and files the startup script needs

[root@k8s-master-1 ~]# mkdir /kubernetes/etcd/certs -p #directory for the etcd certificates

[root@k8s-master-1 ~]# cp /certs/{etcd-peer-key.pem,etcd-peer.pem,ca.pem} /kubernetes/etcd/certs/ #copy the relevant certificate files into it

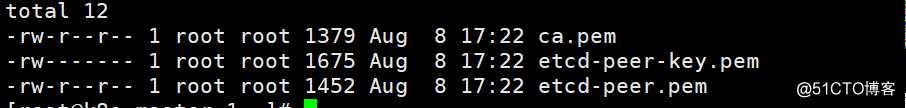

[root@k8s-master-1 ~]# ll /kubernetes/etcd/certs/

[root@k8s-master-1 ~]# mkdir /data/etcd/etcd-server -p #etcd data directory

Copy the deployment directory to the two other etcd nodes

[root@k8s-master-1 ~]# scp -r /kubernetes/ k8s-master-2:/

[root@k8s-master-1 ~]# scp -r /kubernetes/ k8s-master-3:/

Create the etcd data directory on the other two nodes

[root@k8s-master-2 ~]# mkdir /data/etcd/etcd-server -p

[root@k8s-master-3 ~]# mkdir /data/etcd/etcd-server -p

Start etcd by running this command on every node

[root@k8s-master-1 ~]# cd /kubernetes/etcd && nohup ./etcd-start.sh &> /kubernetes/etcd/etcd.out &

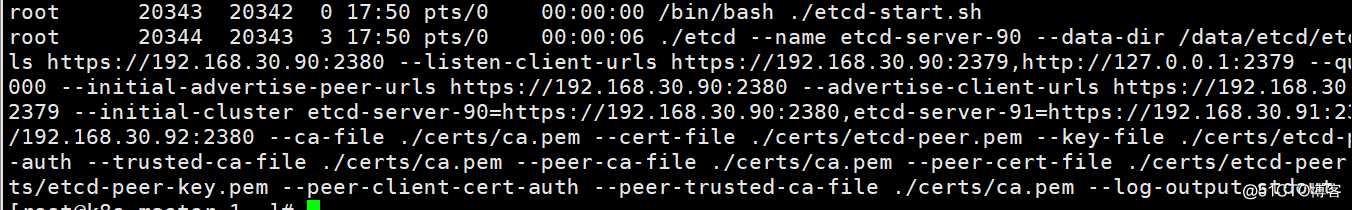

[root@k8s-master-1 ~]# ps -ef | grep etcd | grep -v grep #check whether the process is running

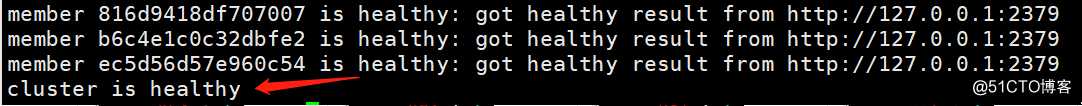

[root@k8s-master-1 ~]# /kubernetes/etcd/etcdctl cluster-health #check cluster health

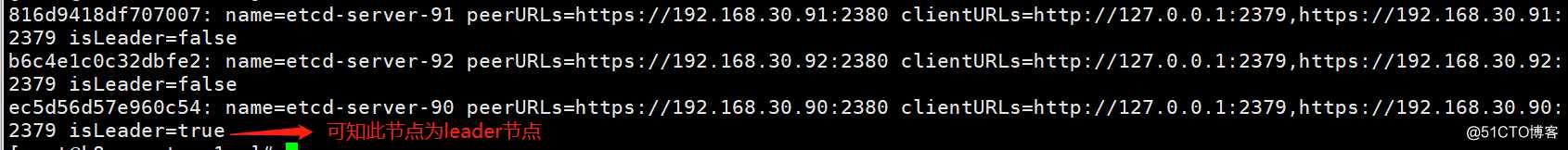

[root@k8s-master-1 ~]# /kubernetes/etcd/etcdctl member list #list the etcd members; the etcd cluster is now deployed

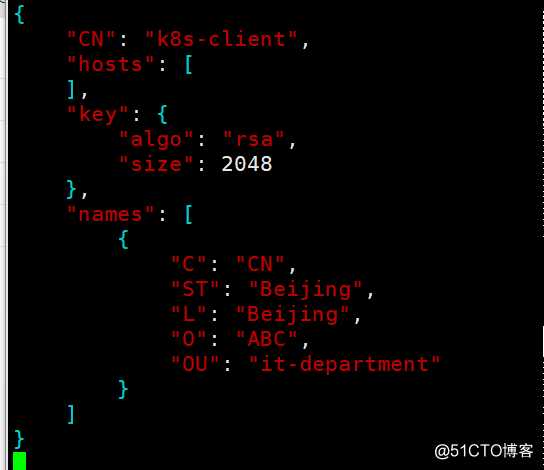

[root@k8s-master-1 certs]# vim client-csr.json #create the client certificate signing request
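A minimal sketch of the client request. A client certificate needs no `hosts`. When the certificate is presented to the apiserver, its CN becomes the Kubernetes user name and its `O` field becomes the group. The CN `k8s-node` is an assumption; whatever you choose must match the user referenced later in the kubelet ClusterRoleBinding.

```shell
set -eu
CERTS_DIR="${CERTS_DIR:-./certs}"   # the article works in /certs
mkdir -p "$CERTS_DIR"
# CN = user name seen by the apiserver (hypothetical "k8s-node"); no SANs needed for a client cert.
cat > "$CERTS_DIR/client-csr.json" <<'EOF'
{
  "CN": "k8s-node",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "k8s", "OU": "ops" }
  ]
}
EOF
```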

[root@k8s-master-1 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json | cfssl-json -bare client #issue the client certificate

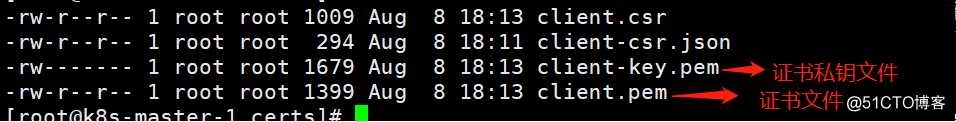

[root@k8s-master-1 certs]# ll client* #list the generated client certificate files

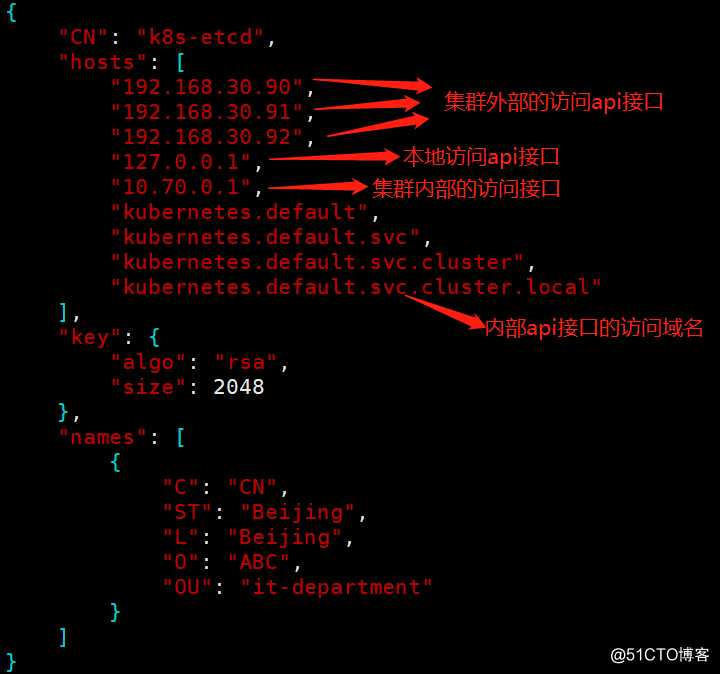

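[root@k8s-master-1 certs]# vim apiserver-csr.json #create the apiserver server certificate signing request; it must list every address the API server is reached on, otherwise cluster components cannot connect to it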
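A minimal sketch of the apiserver request. The SANs cover four things:

- loopback;
- the first address of the Service CIDR, which is the ClusterIP of the built-in `kubernetes` Service;
- the in-cluster DNS names of that Service;
- the three master IPs.

The Service CIDR 10.254.0.0/16, and therefore 10.254.0.1, is an assumption and must match `--service-cluster-ip-range`. If a load-balancer VIP fronts the apiservers, it belongs in this list too.

```shell
set -eu
CERTS_DIR="${CERTS_DIR:-./certs}"   # the article works in /certs
mkdir -p "$CERTS_DIR"
# 10.254.0.1 assumes --service-cluster-ip-range 10.254.0.0/16 (hypothetical).
cat > "$CERTS_DIR/apiserver-csr.json" <<'EOF'
{
  "CN": "k8s-apiserver",
  "hosts": [
    "127.0.0.1",
    "10.254.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "192.168.30.90",
    "192.168.30.91",
    "192.168.30.92"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "k8s", "OU": "ops" }
  ]
}
EOF
```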

[root@k8s-master-1 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json | cfssl-json -bare apiserver #issue the apiserver certificate

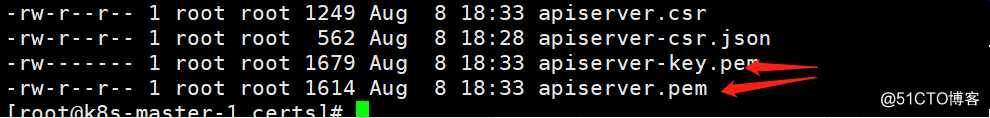

[root@k8s-master-1 certs]# ll apiserver* #list the generated apiserver certificate files

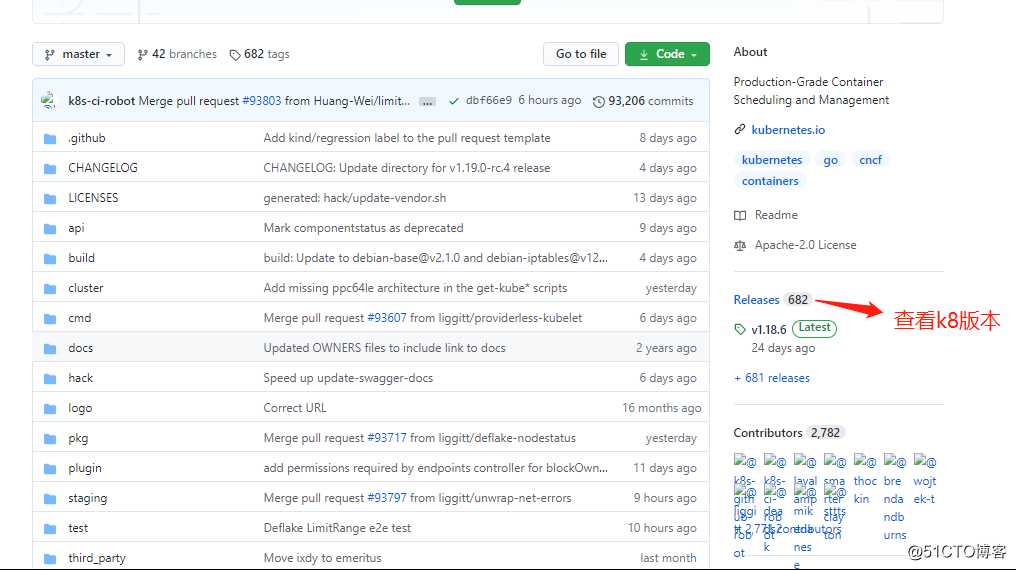

Check the available Kubernetes binary releases. The newest release is not recommended; choose a more stable one

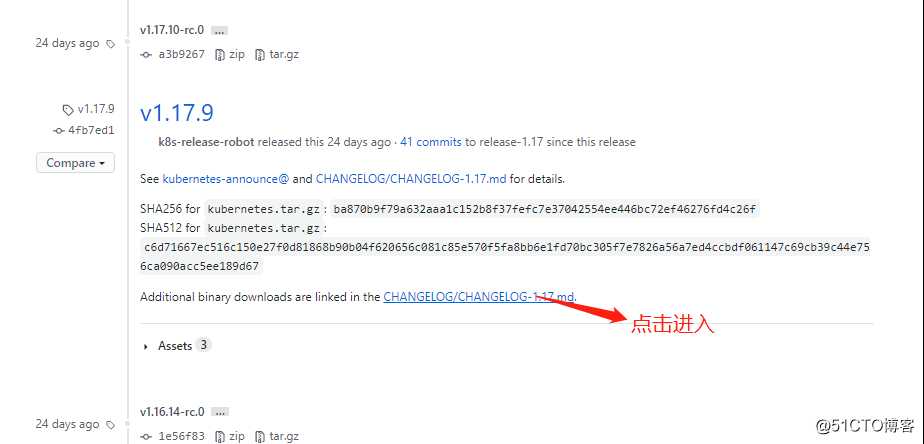

Open the release page and download the server binary package

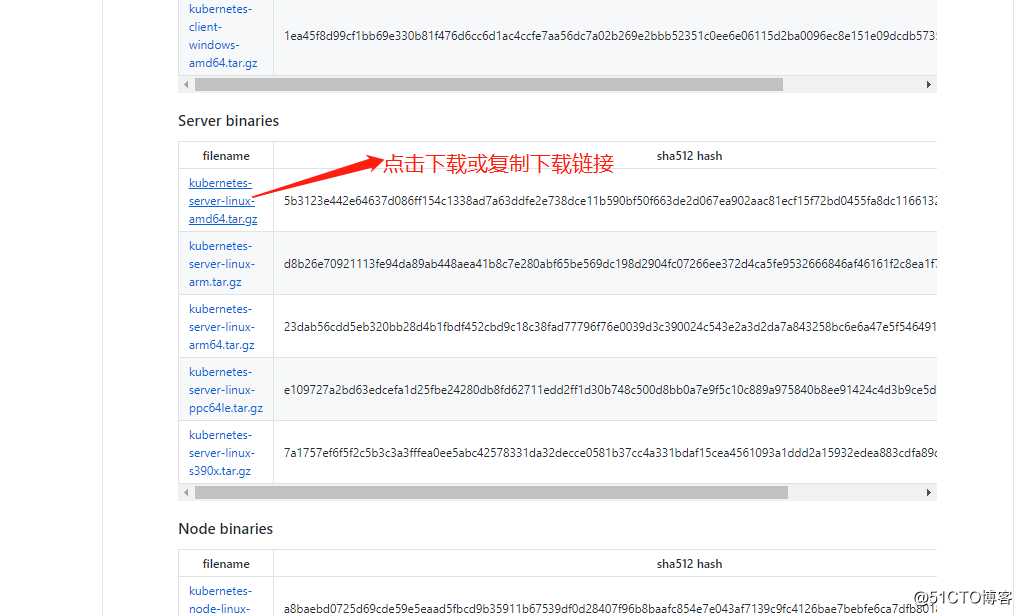

Choose the server package for linux-amd64

[root@k8s-master-1 ~]# wget https://dl.k8s.io/v1.17.9/kubernetes-server-linux-amd64.tar.gz -O /tmp/kubernetes-1.17.9-server-linux-amd64.tar.gz #download the 1.17.9 binary package

Extract the archive

[root@k8s-master-1 tmp]# tar xvf kubernetes-1.17.9-server-linux-amd64.tar.gz

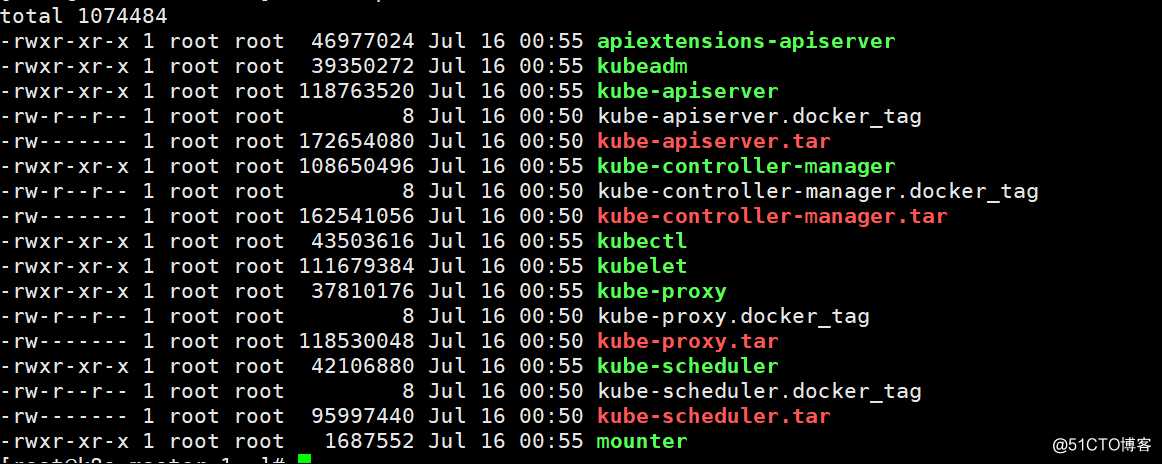

List the binaries in the extracted directory

[root@k8s-master-1 ~]# ll /tmp/kubernetes/server/bin/

Create a directory for the Kubernetes binaries

[root@k8s-master-1 ~]# mkdir /kubernetes/bin -pv

Copy the binaries that will be used into it

[root@k8s-master-1 ~]# cp /tmp/kubernetes/server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kubelet,kube-proxy} /kubernetes/bin/

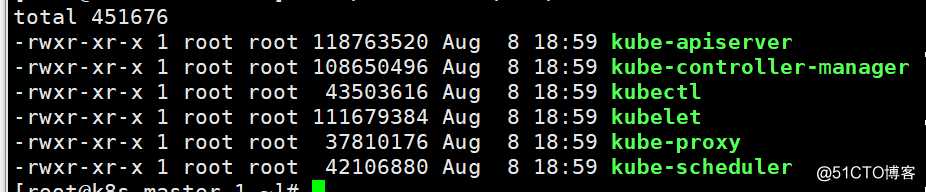

List the binaries

[root@k8s-master-1 ~]# ll /kubernetes/bin/

[root@k8s-master-1 ~]# vim /kubernetes/bin/apiserver.sh
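The apiserver script is not reproduced in the original text. Below is a minimal sketch that generates one. It uses relative ./cert and ./conf paths, since the script is started from /kubernetes/bin. It also assumes the following:

- The client certificate is reused both toward etcd and toward kubelet.
- The CA key doubles as the service-account key, a common shortcut in guides of this style.
- The Service CIDR is 10.254.0.0/16.

kube-apiserver 1.17 still serves an unauthenticated port on 127.0.0.1:8080 by default. The later `kubectl get cs`, controller-manager and scheduler steps rely on that port.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/bin}"   # the article uses /kubernetes/bin
mkdir -p "$OUT_DIR"
# Quoted heredoc: the script body is written verbatim.
cat > "$OUT_DIR/apiserver.sh" <<'EOF'
#!/bin/bash
# Relative paths: run from /kubernetes/bin. 10.254.0.0/16 is an assumed Service CIDR
# and must agree with the 10.254.0.1 SAN in the apiserver certificate.
exec ./kube-apiserver \
  --apiserver-count 3 \
  --authorization-mode RBAC \
  --client-ca-file ./cert/ca.pem \
  --tls-cert-file ./cert/apiserver.pem \
  --tls-private-key-file ./cert/apiserver-key.pem \
  --etcd-servers https://192.168.30.90:2379,https://192.168.30.91:2379,https://192.168.30.92:2379 \
  --etcd-cafile ./cert/ca.pem \
  --etcd-certfile ./cert/client.pem \
  --etcd-keyfile ./cert/client-key.pem \
  --kubelet-client-certificate ./cert/client.pem \
  --kubelet-client-key ./cert/client-key.pem \
  --service-account-key-file ./cert/ca-key.pem \
  --service-cluster-ip-range 10.254.0.0/16 \
  --audit-policy-file ./conf/audit.yaml \
  --audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \
  --v 2
EOF
chmod +x "$OUT_DIR/apiserver.sh"
```

With `--authorization-mode RBAC`, certificate-authenticated users such as the kubelet's user are denied until they are bound to a role. That is why a ClusterRoleBinding is created later, before kubelet starts.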

Make the script executable

[root@k8s-master-1 ~]# chmod +x /kubernetes/bin/apiserver.sh

Create the log directory referenced in the script

[root@k8s-master-1 ~]# mkdir -pv /data/logs/kubernetes/kube-apiserver/

Create the certificate and configuration directories referenced in the script

[root@k8s-master-1 ~]# mkdir /kubernetes/bin/{conf,cert}

Copy all the required certificate files

[root@k8s-master-1 ~]# cp /certs/{client.pem,client-key.pem,ca.pem,ca-key.pem,apiserver.pem,apiserver-key.pem} /kubernetes/bin/cert/

Create the audit policy file, which defines which requests are logged and at what level

[root@k8s-master-1 ~]# vim /kubernetes/bin/conf/audit.yaml

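```yaml
apiVersion: audit.k8s.io/v1beta1
kind: Policy
# Do not generate events for the RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Full request and response bodies for pod changes
  - level: RequestResponse
    resources:
    - group: ""
      resources: ["pods"]
  # Metadata only for pod logs and status
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Skip the leader-election configmap
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Skip kube-proxy's watches on endpoints and services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: ""
      resources: ["endpoints", "services"]
  # Skip authenticated requests to these non-resource URLs
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*"
    - "/version"
  # Request bodies for configmap changes in kube-system
  - level: Request
    resources:
    - group: ""
      resources: ["configmaps"]
    namespaces: ["kube-system"]
  # Metadata only for secrets and configmaps everywhere else
  - level: Metadata
    resources:
    - group: ""
      resources: ["secrets", "configmaps"]
  # Request bodies for all other core and extensions resources
  - level: Request
    resources:
    - group: ""
    - group: "extensions"
  # Catch-all: metadata for every other request
  - level: Metadata
    omitStages:
      - "RequestReceived"
```

Copy the related files to the remote nodes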

[root@k8s-master-1 ~]# scp -r /kubernetes/bin/ 192.168.30.91:/kubernetes/

[root@k8s-master-1 ~]# scp -r /kubernetes/bin/ 192.168.30.92:/kubernetes/

Start the apiserver by running the same command on every node

[root@k8s-master-1 ~]# cd /kubernetes/bin && nohup ./apiserver.sh &> /data/logs/kubernetes/kube-apiserver/apiserver.out &

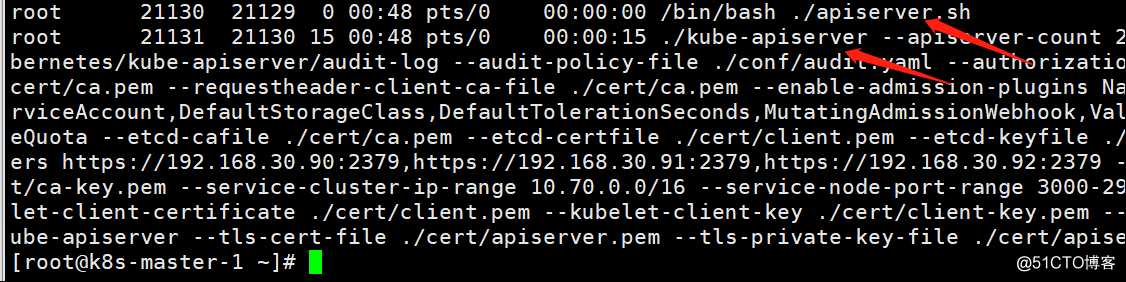

Check the apiserver process; check each node the same way

[root@k8s-master-1 ~]# ps -ef | grep apiserver | grep -v grep

Create a symlink for kubectl

[root@k8s-master-1 ~]# ln -sv /kubernetes/bin/kubectl /usr/bin/

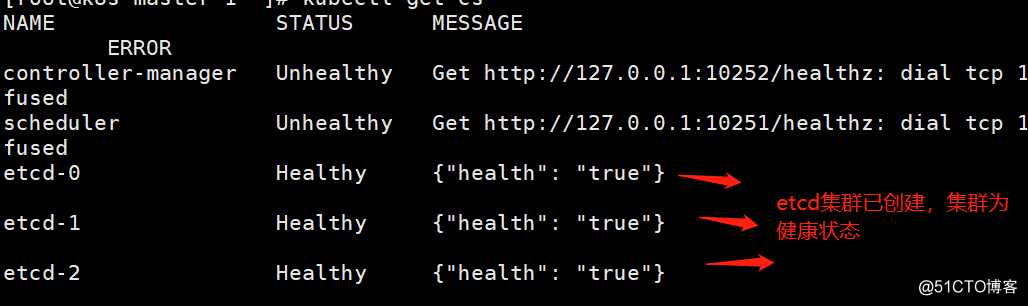

Check component health with kubectl. controller-manager and scheduler show as unhealthy because they are not deployed yet. Check each node in turn

[root@k8s-master-1 ~]# kubectl get cs

The controller manager reaches the apiserver on the local port 8080, so it needs no certificate; just create the startup script, which is identical on every node

[root@k8s-master-1 ~]# vim /kubernetes/bin/kube-controll-manager.sh
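The controller manager script is not reproduced in the original text. Below is a minimal sketch, keeping the article's file name kube-controll-manager.sh. It assumes the following:

- The controller manager talks to the local insecure port 127.0.0.1:8080, as described above.
- The Pod network is 172.7.0.0/16, taken from the flannel configuration later in the article.
- The Service CIDR is the hypothetical 10.254.0.0/16.
- It signs service-account tokens with the same CA key the apiserver was given for verification.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/bin}"   # the article uses /kubernetes/bin
mkdir -p "$OUT_DIR"
cat > "$OUT_DIR/kube-controll-manager.sh" <<'EOF'
#!/bin/bash
# Local insecure apiserver port: no kubeconfig or client certificate needed.
# --leader-elect lets the three instances pick one active controller manager.
exec ./kube-controller-manager \
  --master http://127.0.0.1:8080 \
  --leader-elect=true \
  --cluster-cidr 172.7.0.0/16 \
  --service-cluster-ip-range 10.254.0.0/16 \
  --service-account-private-key-file ./cert/ca-key.pem \
  --root-ca-file ./cert/ca.pem \
  --v 2
EOF
chmod +x "$OUT_DIR/kube-controll-manager.sh"
```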

Make it executable

[root@k8s-master-1 ~]# chmod +x /kubernetes/bin/kube-controll-manager.sh

Create the controller manager log directory

[root@k8s-master-1 ~]# mkdir -pv /data/logs/kubernetes/kube-controller-manager

Run the script on each node in turn to start controller-manager

[root@k8s-master-1 ~]# cd /kubernetes/bin && nohup ./kube-controll-manager.sh &> /data/logs/kubernetes/kube-controller-manager/controller-manager.out &

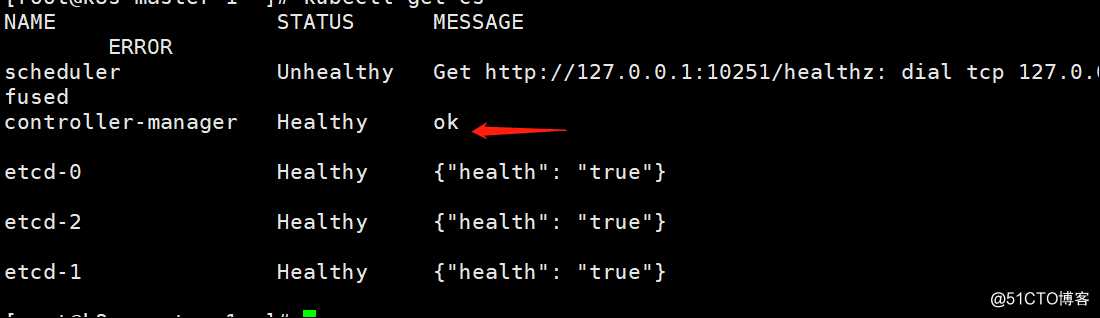

Check component health again; the controller manager is now running

[root@k8s-master-1 ~]# kubectl get cs

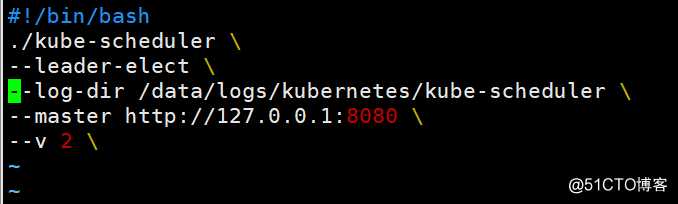

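Like the controller manager, the scheduler needs no certificate, because it reaches the apiserver on the local port 8080. Its startup script, /kubernetes/bin/kube-scheduler.sh, is even simpler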
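The scheduler script itself is missing from the original text. Below is a minimal sketch under the same assumption as the controller manager: the scheduler talks to the local insecure port 127.0.0.1:8080. Leader election ensures only one of the three schedulers is active at a time.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/bin}"   # the article uses /kubernetes/bin
mkdir -p "$OUT_DIR"
cat > "$OUT_DIR/kube-scheduler.sh" <<'EOF'
#!/bin/bash
# Local insecure apiserver port; one active scheduler via leader election.
exec ./kube-scheduler \
  --master http://127.0.0.1:8080 \
  --leader-elect=true \
  --v 2
EOF
chmod +x "$OUT_DIR/kube-scheduler.sh"
```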

Make it executable

[root@k8s-master-1 ~]# chmod +x /kubernetes/bin/kube-scheduler.sh

Create the log directory

[root@k8s-master-1 ~]# mkdir -pv /data/logs/kubernetes/kube-scheduler

Run the following start command on each node

[root@k8s-master-1 ~]# cd /kubernetes/bin && nohup ./kube-scheduler.sh &> /data/logs/kubernetes/kube-scheduler/kube-scheduler.out &

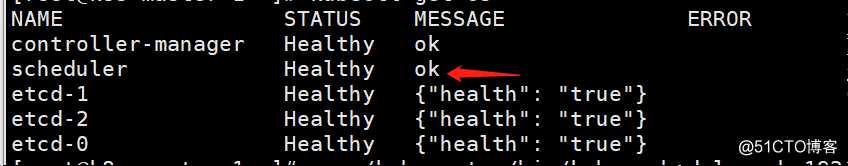

Check the scheduler's health; it has started successfully

[root@k8s-master-1 ~]# kubectl get cs

Create the kubelet certificate signing request, listing every node

[root@k8s-master-1 certs]# vim kubelet-csr.json
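A minimal sketch of the kubelet request. Because it is signed with the `server` profile, this is the kubelet's serving certificate. The apiserver connects to it on each node, so `hosts` lists every node IP plus loopback. The CN and subject fields are placeholders.

```shell
set -eu
CERTS_DIR="${CERTS_DIR:-./certs}"   # the article works in /certs
mkdir -p "$CERTS_DIR"
# Serving cert shared by all kubelets: SANs must cover every node address.
cat > "$CERTS_DIR/kubelet-csr.json" <<'EOF'
{
  "CN": "k8s-kubelet",
  "hosts": [
    "127.0.0.1",
    "192.168.30.90",
    "192.168.30.91",
    "192.168.30.92"
  ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "k8s", "OU": "ops" }
  ]
}
EOF
```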

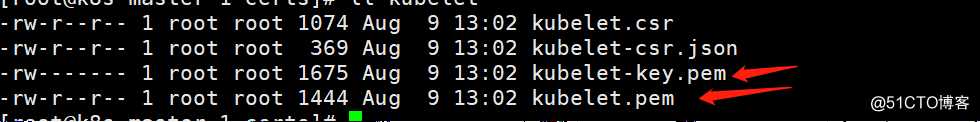

Issue the certificate

[root@k8s-master-1 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

List the issued certificate files

[root@k8s-master-1 certs]# ll kubelet*

Copy the generated certificate into the certificate directory

[root@k8s-master-1 ~]# cp /certs/{kubelet.pem,kubelet-key.pem} /kubernetes/bin/cert/

Define a cluster entry in the kubelet kubeconfig, trusting the CA certificate

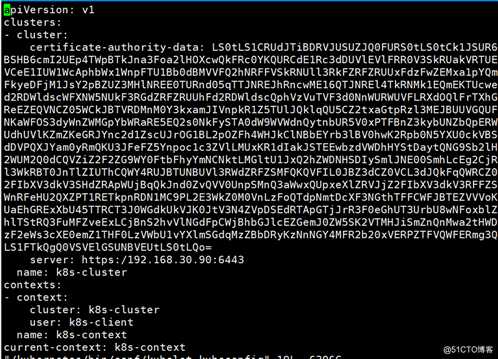

[root@k8s-master-1 ~]# kubectl config set-cluster k8s-cluster --certificate-authority=/kubernetes/bin/cert/ca.pem --server=https://192.168.30.90:6443 --embed-certs=true --kubeconfig=/kubernetes/bin/conf/kubelet.kubeconfig

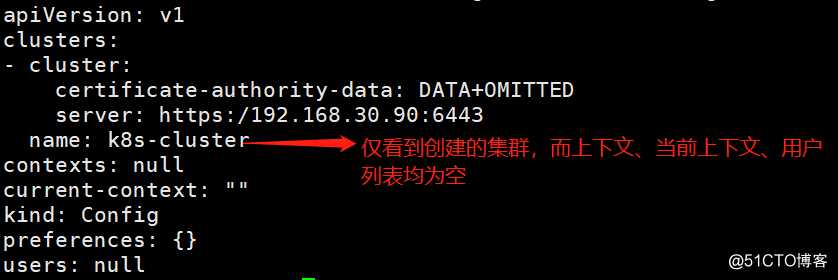

View the cluster entry

[root@k8s-master-1 ~]# kubectl config view --kubeconfig=/kubernetes/bin/conf/kubelet.kubeconfig

Add the kubelet user to the users list. kubelet shares the apiserver's client certificate, so no separate kubelet client certificate needs to be issued

[root@k8s-master-1 ~]# kubectl config set-credentials k8s-client --client-certificate=/kubernetes/bin/cert/client.pem --client-key=/kubernetes/bin/cert/client-key.pem --embed-certs=true --kubeconfig=/kubernetes/bin/conf/kubelet.kubeconfig

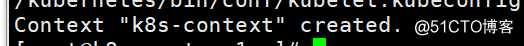

Create a context that lets the kubelet user access this cluster

[root@k8s-master-1 ~]# kubectl config set-context k8s-context --cluster=k8s-cluster --user=k8s-client --kubeconfig=/kubernetes/bin/conf/kubelet.kubeconfig

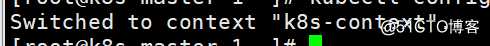

Make k8s-context the current context

[root@k8s-master-1 ~]# kubectl config use-context k8s-context --kubeconfig=/kubernetes/bin/conf/kubelet.kubeconfig

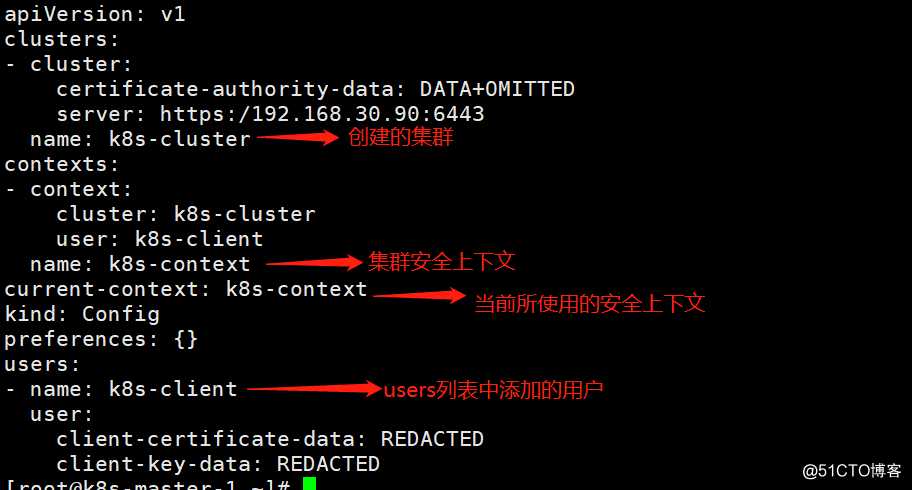

View the configuration again

[root@k8s-master-1 ~]# kubectl config view --kubeconfig=/kubernetes/bin/conf/kubelet.kubeconfig

The resulting kubeconfig defines the trusted CA root certificate, the client certificate and private key, the cluster user, and the context

[root@k8s-master-1 ~]# vim /kubernetes/bin/conf/kubelet.kubeconfig

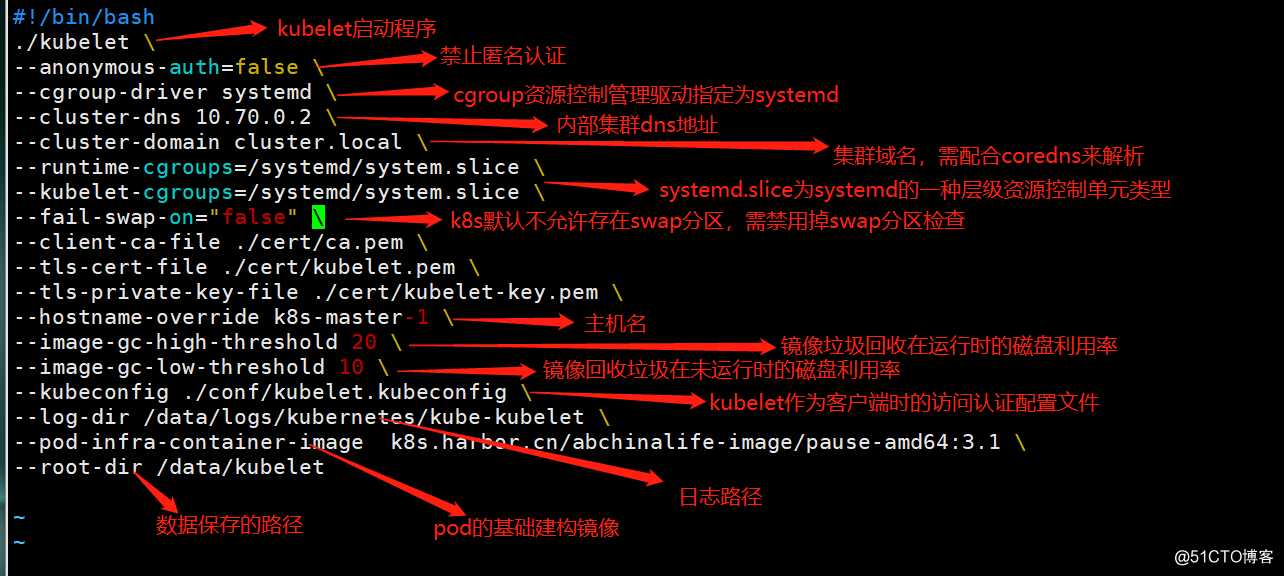

[root@k8s-master-1 ~]# vim /kubernetes/bin/kubelet.sh
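The kubelet script is not reproduced in the original text. Below is a minimal sketch that generates one. `--hostname-override` is the value the article later changes per node. The sketch assumes these things:

- `--cgroup-driver systemd` matches Docker's configured driver.
- The cluster DNS address is 10.254.0.2, from the hypothetical 10.254.0.0/16 Service CIDR.
- The pause image tag is k8s.gcr.io/pause:3.1; a mirror may be needed in practice.

Many of these flags are deprecated in 1.17 in favor of a `--config` file but are still accepted.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/bin}"   # the article uses /kubernetes/bin
NODE_NAME="${NODE_NAME:-k8s-master-1}"   # k8s-master-2 / k8s-master-3 on the other nodes
mkdir -p "$OUT_DIR"
{
  echo '#!/bin/bash'
  echo "NODE_NAME=${NODE_NAME}"
  cat <<'EOF'
# --cgroup-driver must match Docker's native.cgroupdriver; the DNS IP and pause image are assumptions.
exec ./kubelet \
  --hostname-override "$NODE_NAME" \
  --kubeconfig ./conf/kubelet.kubeconfig \
  --anonymous-auth=false \
  --client-ca-file ./cert/ca.pem \
  --tls-cert-file ./cert/kubelet.pem \
  --tls-private-key-file ./cert/kubelet-key.pem \
  --cgroup-driver systemd \
  --cluster-dns 10.254.0.2 \
  --cluster-domain cluster.local \
  --fail-swap-on=false \
  --root-dir /data/kubelet \
  --pod-infra-container-image k8s.gcr.io/pause:3.1 \
  --v 2
EOF
} > "$OUT_DIR/kubelet.sh"
chmod +x "$OUT_DIR/kubelet.sh"
```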

Make the script executable

[root@k8s-master-1 ~]# chmod +x /kubernetes/bin/kubelet.sh

Create the kubelet data directory

[root@k8s-master-1 ~]# mkdir /data/kubelet

Create the kubelet log directory

[root@k8s-master-1 ~]# mkdir -p /data/logs/kubernetes/kube-kubelet

Copy the kubelet certificates to the other nodes

[root@k8s-master-1 ~]# scp /kubernetes/bin/cert/{kubelet.pem,kubelet-key.pem} k8s-master-2:/kubernetes/bin/cert/

[root@k8s-master-1 ~]# scp /kubernetes/bin/cert/{kubelet.pem,kubelet-key.pem} k8s-master-3:/kubernetes/bin/cert/

Copy the kubeconfig that kubelet uses to access the cluster

[root@k8s-master-1 ~]# scp /kubernetes/bin/conf/kubelet.kubeconfig k8s-master-2:/kubernetes/bin/conf/

[root@k8s-master-1 ~]# scp /kubernetes/bin/conf/kubelet.kubeconfig k8s-master-3:/kubernetes/bin/conf/

Copy the kubelet startup script

[root@k8s-master-1 ~]# scp /kubernetes/bin/kubelet.sh k8s-master-2:/kubernetes/bin/ #then change the hostname in the script to k8s-master-2

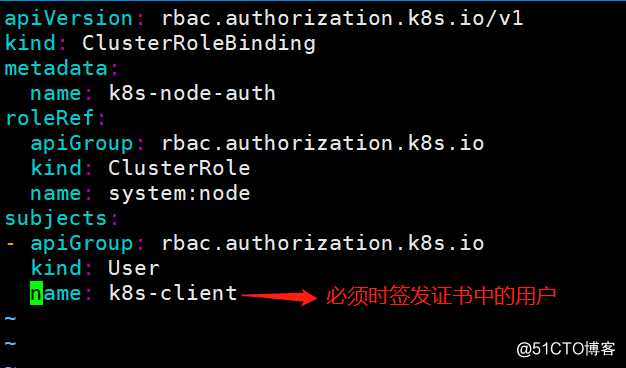

[root@k8s-master-1 ~]# scp /kubernetes/bin/kubelet.sh k8s-master-3:/kubernetes/bin/ #then change the hostname in the script to k8s-master-3

Create a ClusterRoleBinding

[root@k8s-master-1 ~]# vim /tmp/k8s-node-clusterrole.yaml
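The manifest is only shown as a screenshot. Below is a minimal sketch that binds the built-in `system:node` ClusterRole to a certificate user. The user name must equal the CN of the client certificate embedded in kubelet.kubeconfig; `k8s-node` is an assumption. The binding name is hypothetical too.

```shell
set -eu
OUT_FILE="${OUT_FILE:-./k8s-node-clusterrole.yaml}"   # the article uses /tmp/k8s-node-clusterrole.yaml
# Grants the kubelet's certificate user (assumed CN "k8s-node") the system:node ClusterRole.
cat > "$OUT_FILE" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node
EOF
```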

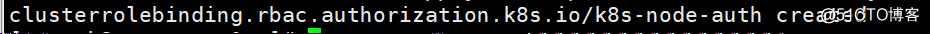

[root@k8s-master-1 ~]# kubectl apply -f /tmp/k8s-node-clusterrole.yaml

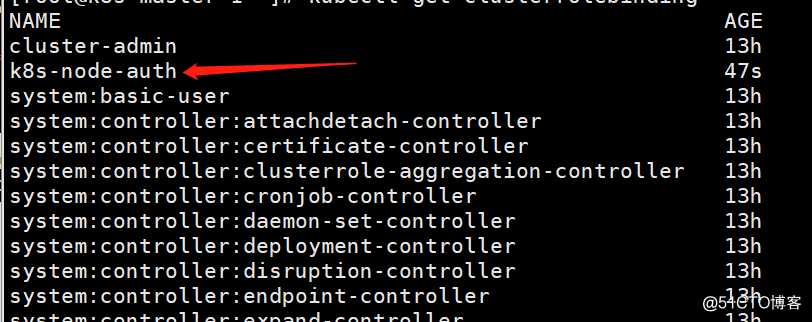

List the ClusterRoleBindings; the new binding has been created

[root@k8s-master-1 ~]# kubectl get clusterrolebinding

First, try starting kubelet on master-1

[root@k8s-master-1 ~]# cd /kubernetes/bin && nohup ./kubelet.sh &> /data/logs/kubernetes/kube-kubelet/kubelet.out &

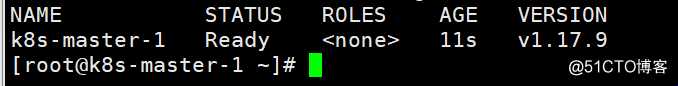

Only master-1 is Ready, because kubelet is not yet running on master-2 and master-3

[root@k8s-master-1 ~]# kubectl get node

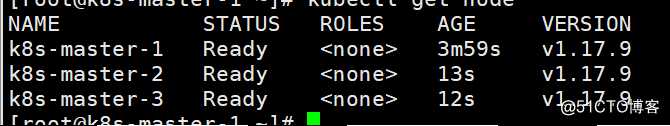

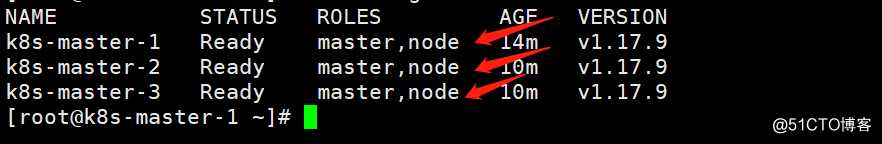

After kubelet is started on master-2 and master-3, check again: all nodes have joined the cluster and are Ready

[root@k8s-master-1 ~]# kubectl get node

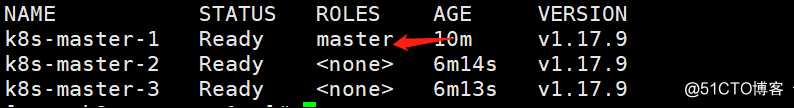

Add the master role label to master-1

[root@k8s-master-1 ~]# kubectl label node k8s-master-1 node-role.kubernetes.io/master=

Check the label

[root@k8s-master-1 ~]# kubectl get node

This lab does not separate master and worker nodes, so every node gets both the master and the node labels

[root@k8s-master-1 ~]# kubectl label node k8s-master-1 node-role.kubernetes.io/node= #add the node label

[root@k8s-master-1 ~]# kubectl label node k8s-master-2 node-role.kubernetes.io/node= #add the node label

[root@k8s-master-1 ~]# kubectl label node k8s-master-3 node-role.kubernetes.io/node= #add the node label

[root@k8s-master-1 ~]# kubectl label node k8s-master-3 node-role.kubernetes.io/master= #add the master label

[root@k8s-master-1 ~]# kubectl label node k8s-master-2 node-role.kubernetes.io/master= #add the master label

Check the node role labels

[root@k8s-master-1 ~]# kubectl get node

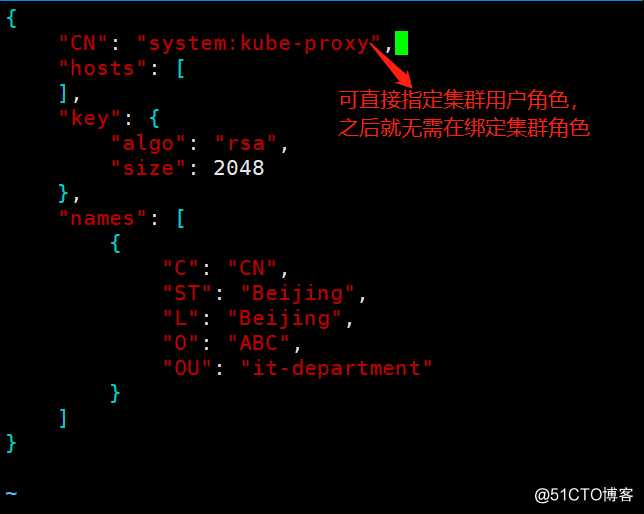

Create the kube-proxy certificate signing request

[root@k8s-master-1 certs]# vim kube-proxy-csr.json
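A minimal sketch of the kube-proxy request. With RBAC enabled, Kubernetes ships a default ClusterRoleBinding, `system:node-proxier`, that grants kube-proxy's permissions to the user `system:kube-proxy`. Using that CN therefore avoids an extra binding. The remaining subject fields are placeholders.

```shell
set -eu
CERTS_DIR="${CERTS_DIR:-./certs}"   # the article works in /certs
mkdir -p "$CERTS_DIR"
# CN system:kube-proxy matches the subject of the default system:node-proxier binding.
cat > "$CERTS_DIR/kube-proxy-csr.json" <<'EOF'
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "beijing", "L": "beijing", "O": "k8s", "OU": "ops" }
  ]
}
EOF
```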

Issue the kube-proxy certificate

[root@k8s-master-1 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssl-json -bare kube-proxy-client

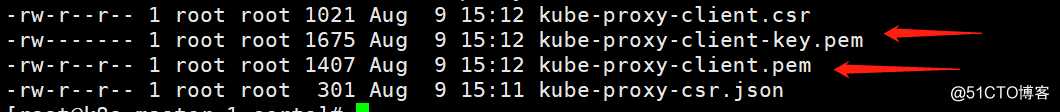

List the issued certificate

[root@k8s-master-1 certs]# ll kube-proxy*

Copy the issued certificate to the designated directory

[root@k8s-master-1 ~]# cp /certs/{kube-proxy-client.pem,kube-proxy-client-key.pem} /kubernetes/bin/cert

Define a cluster entry in the kube-proxy kubeconfig

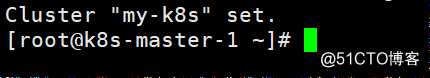

[root@k8s-master-1 ~]# kubectl config set-cluster my-k8s --certificate-authority=/kubernetes/bin/cert/ca.pem --server=https://192.168.30.91:6443 --embed-certs=true --kubeconfig=/kubernetes/bin/conf/kube-proxy.kubeconfig

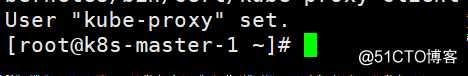

Add the kube-proxy user to the users list

[root@k8s-master-1 ~]# kubectl config set-credentials kube-proxy --client-certificate=/kubernetes/bin/cert/kube-proxy-client.pem --client-key=/kubernetes/bin/cert/kube-proxy-client-key.pem --embed-certs=true --kubeconfig=/kubernetes/bin/conf/kube-proxy.kubeconfig

Create the context

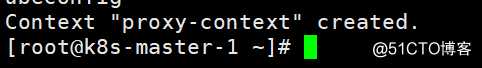

[root@k8s-master-1 ~]# kubectl config set-context proxy-context --cluster=my-k8s --user=kube-proxy --kubeconfig=/kubernetes/bin/conf/kube-proxy.kubeconfig

Switch to this context

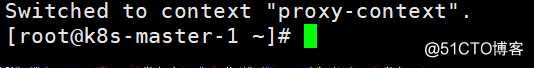

[root@k8s-master-1 ~]# kubectl config use-context proxy-context --kubeconfig=/kubernetes/bin/conf/kube-proxy.kubeconfig

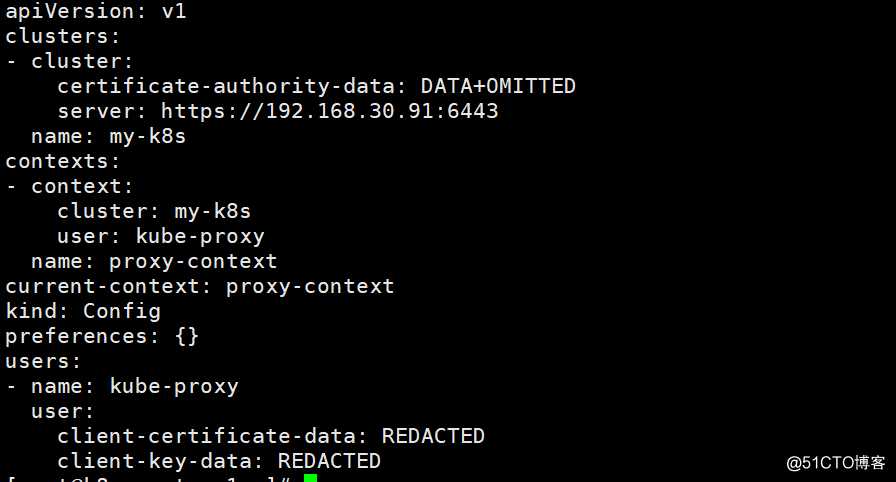

View the resulting kube-proxy configuration

[root@k8s-master-1 ~]# kubectl config view --kubeconfig=/kubernetes/bin/conf/kube-proxy.kubeconfig

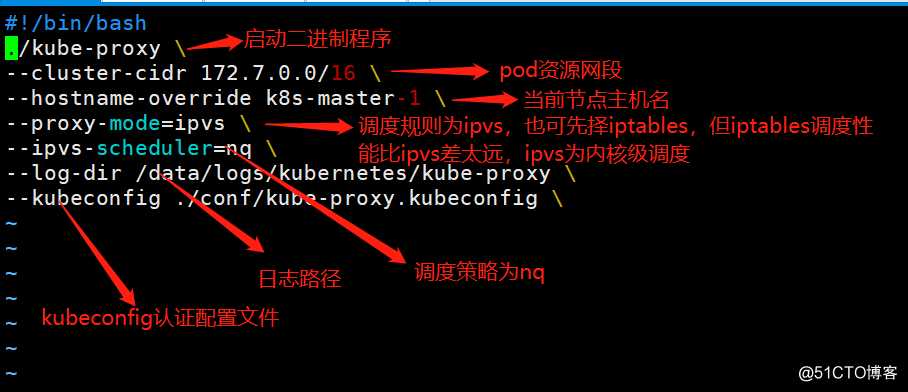

Create the kube-proxy startup script

[root@k8s-master-1 ~]# vim /kubernetes/bin/kube-proxy.sh
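The kube-proxy script is not reproduced in the original text. Below is a minimal sketch that generates one. It uses ipvs mode, which the next step prepares for by loading the ip_vs modules. The hostname override is the value the article changes per node. The `nq` (never queue) IPVS scheduler is just an example choice.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/bin}"   # the article uses /kubernetes/bin
NODE_NAME="${NODE_NAME:-k8s-master-1}"   # k8s-master-2 / k8s-master-3 on the other nodes
mkdir -p "$OUT_DIR"
{
  echo '#!/bin/bash'
  echo "NODE_NAME=${NODE_NAME}"
  cat <<'EOF'
# ipvs mode requires the ip_vs kernel modules; nq is an example scheduler choice.
exec ./kube-proxy \
  --hostname-override "$NODE_NAME" \
  --cluster-cidr 172.7.0.0/16 \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig
EOF
} > "$OUT_DIR/kube-proxy.sh"
chmod +x "$OUT_DIR/kube-proxy.sh"
```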

Make it executable

[root@k8s-master-1 ~]# chmod +x /kubernetes/bin/kube-proxy.sh

Create the kube-proxy log directory; create it on master-2 and master-3 as well

[root@k8s-master-1 ~]# mkdir -p /data/logs/kubernetes/kube-proxy

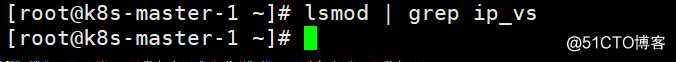

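Check whether the IPVS kernel modules are loaded. They are not loaded by default, and they must be; otherwise the ipvs proxy mode selected for kube-proxy cannot take effect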

[root@k8s-master-1 ~]# lsmod | grep ip_vs #no output means the ipvs modules are not loaded

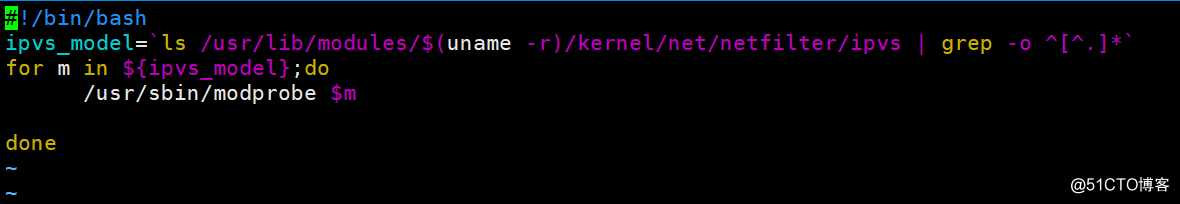

Write a script that loads the ipvs modules

[root@k8s-master-1 ~]# vim /kubernetes/bin/ipvs_model.sh
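The module-loading script is not reproduced in the original text. Below is a minimal sketch that generates one. The generated script loads every module found under the running kernel's `net/netfilter/ipvs` directory, which covers ip_vs and all its schedulers. On the CentOS 7 3.10 kernel it also loads `nf_conntrack_ipv4`, which kube-proxy's ipvs mode expects. The path layout assumes a standard CentOS 7 module tree.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/bin}"   # the article uses /kubernetes/bin
mkdir -p "$OUT_DIR"
cat > "$OUT_DIR/ipvs_model.sh" <<'EOF'
#!/bin/bash
# Load every IPVS module shipped with the running kernel (ip_vs, ip_vs_rr, ip_vs_nq, ...).
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for mod_file in "$ipvs_mods_dir"/*.ko*; do
  [ -e "$mod_file" ] || continue        # glob matched nothing
  mod=$(basename "$mod_file")
  mod=${mod%%.*}                        # ip_vs_rr.ko.xz -> ip_vs_rr
  modprobe "$mod"
done
# Connection tracking module name on the CentOS 7 (3.10) kernel.
modprobe nf_conntrack_ipv4
EOF
chmod +x "$OUT_DIR/ipvs_model.sh"
```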

Make it executable

[root@k8s-master-1 ~]# chmod +x /kubernetes/bin/ipvs_model.sh

Run the script

[root@k8s-master-1 ~]# bash /kubernetes/bin/ipvs_model.sh

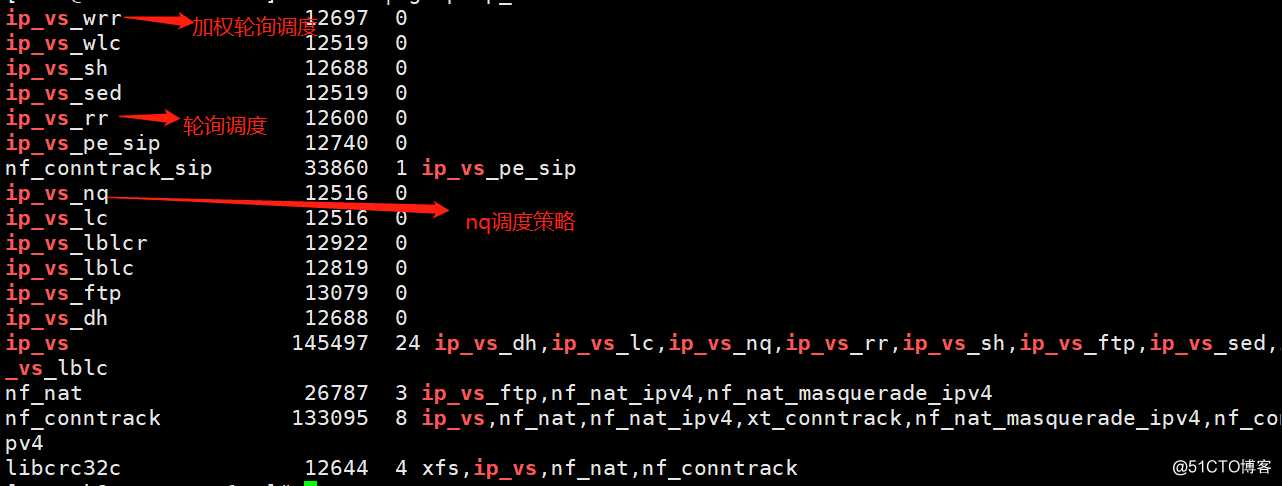

Check again: the ipvs modules are now loaded. Load them on the other nodes as well

[root@k8s-master-1 ~]# lsmod | grep ip_vs

Copy the certificate files

[root@k8s-master-1 ~]# scp /kubernetes/bin/cert/{kube-proxy-client.pem,kube-proxy-client-key.pem} k8s-master-2:/kubernetes/bin/cert/

[root@k8s-master-1 ~]# scp /kubernetes/bin/cert/{kube-proxy-client.pem,kube-proxy-client-key.pem} k8s-master-3:/kubernetes/bin/cert/

Copy the kubeconfig client authentication file

[root@k8s-master-1 ~]# scp /kubernetes/bin/conf/kube-proxy.kubeconfig k8s-master-2:/kubernetes/bin/conf/

[root@k8s-master-1 ~]# scp /kubernetes/bin/conf/kube-proxy.kubeconfig k8s-master-3:/kubernetes/bin/conf/

Copy the kube-proxy startup script and the ipvs module script

[root@k8s-master-1 ~]# scp /kubernetes/bin/{kube-proxy.sh,ipvs_model.sh} k8s-master-2:/kubernetes/bin/ #then change the hostname in the startup script to k8s-master-2

[root@k8s-master-1 ~]# scp /kubernetes/bin/{kube-proxy.sh,ipvs_model.sh} k8s-master-3:/kubernetes/bin/ #then change the hostname in the startup script to k8s-master-3

Write the YAML file for the nginx application

[root@k8s-master-1 ~]# vim /tmp/nginx.yaml
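The manifest is only shown as a screenshot. Below is a minimal sketch consistent with what the article shows later: a Deployment named `nginx-deployment` (its Pods are named `nginx-deployment-...`), two replicas, and a Service in front of it. The image tag and the Service name `nginx-service` are assumptions.

```shell
set -eu
OUT_FILE="${OUT_FILE:-./nginx.yaml}"   # the article uses /tmp/nginx.yaml
# Two nginx replicas behind a ClusterIP Service (image tag and Service name are assumed).
cat > "$OUT_FILE" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.17
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - port: 80
    targetPort: 80
EOF
```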

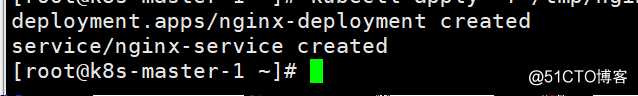

Use kubectl apply to declaratively create an nginx application managed by a Deployment

[root@k8s-master-1 ~]# kubectl apply -f /tmp/nginx.yaml

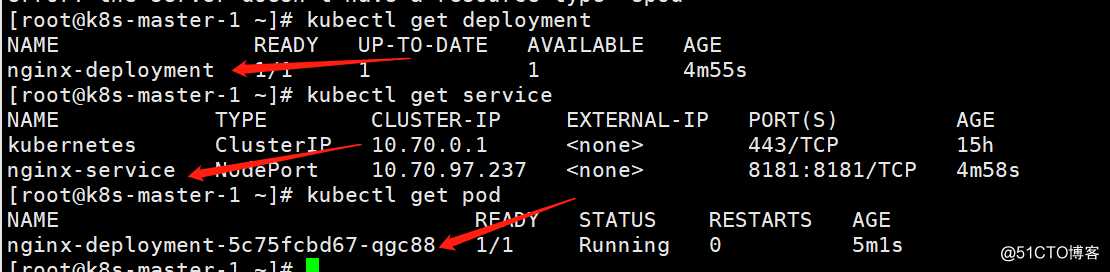

The nginx Deployment, Service, and Pods have all been created

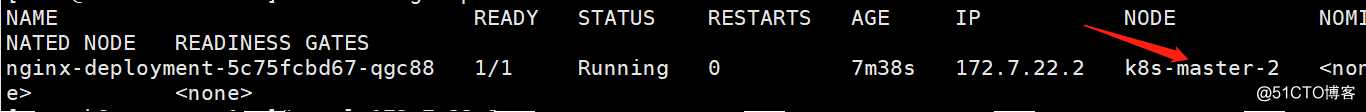

Check which node each Pod is running on

[root@k8s-master-1 ~]# kubectl get pod -o wide

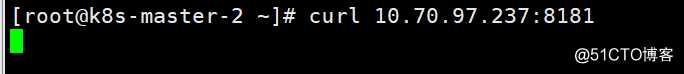

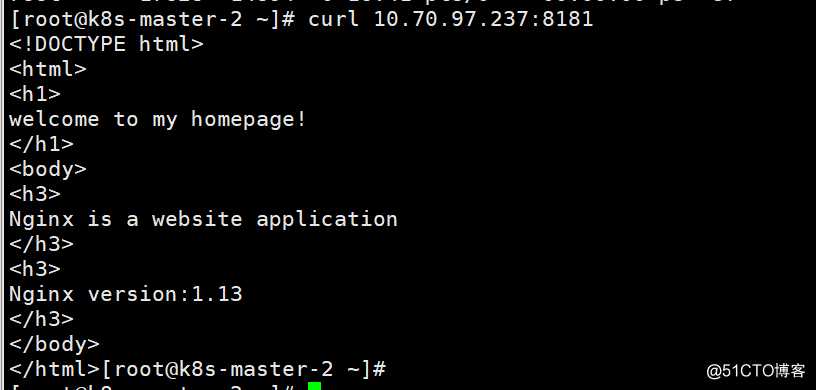

Switch to master-2 and access the Pod directly. master-2 is used because no network plugin such as flannel or calico is installed yet, so Pods cannot be reached from other nodes

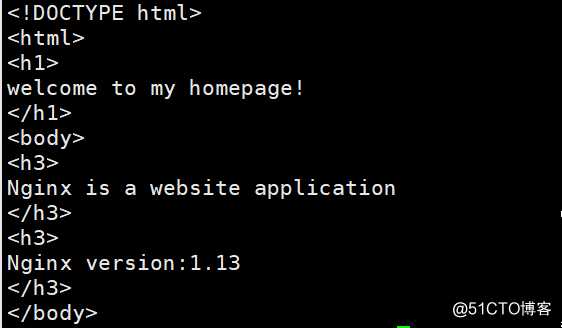

[root@k8s-master-2 ~]# curl 172.7.22.2 #the Pod is reachable directly

Accessing the Service IP instead, which should forward the request to the Pod, hangs: kube-proxy is not running yet, so the Service cannot forward traffic

Start kube-proxy on master-2 with the following command

[root@k8s-master-2 ~]# cd /kubernetes/bin && nohup ./kube-proxy.sh &> /data/logs/kubernetes/kube-proxy/kube-proxy.out &

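Access the Service IP again: the response now comes back immediately, so Service forwarding works. Then start kube-proxy on master-1 and master-3 as well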

[root@k8s-master-1 ~]# cd /kubernetes/bin && nohup ./kube-proxy.sh &> /data/logs/kubernetes/kube-proxy/kube-proxy.out &

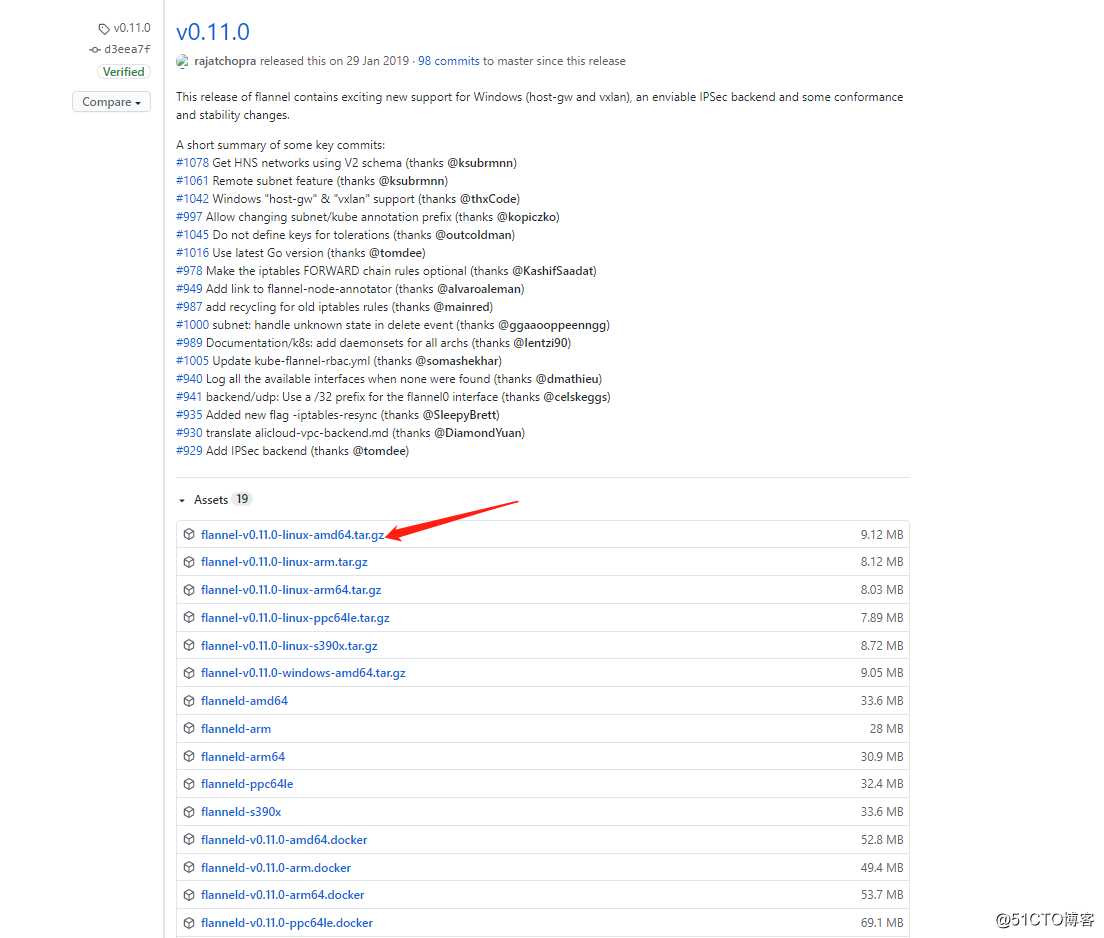

[root@k8s-master-3 ~]# cd /kubernetes/bin && nohup ./kube-proxy.sh &> /data/logs/kubernetes/kube-proxy/kube-proxy.out &

Download the flannel network plugin

[root@k8s-master-1 ~]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz -O /tmp/flannel-v0.11.0-linux-amd64.tar.gz

Extract the archive into the target directory (create the directory first)

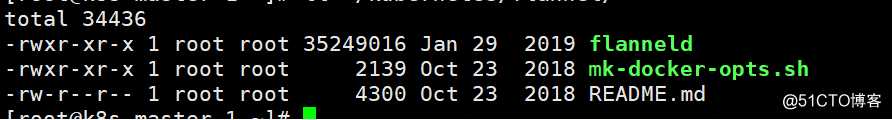

[root@k8s-master-1 ~]# mkdir -p /kubernetes/flannel && tar xvf /tmp/flannel-v0.11.0-linux-amd64.tar.gz -C /kubernetes/flannel/

List the extracted binaries

[root@k8s-master-1 ~]# ll /kubernetes/flannel/

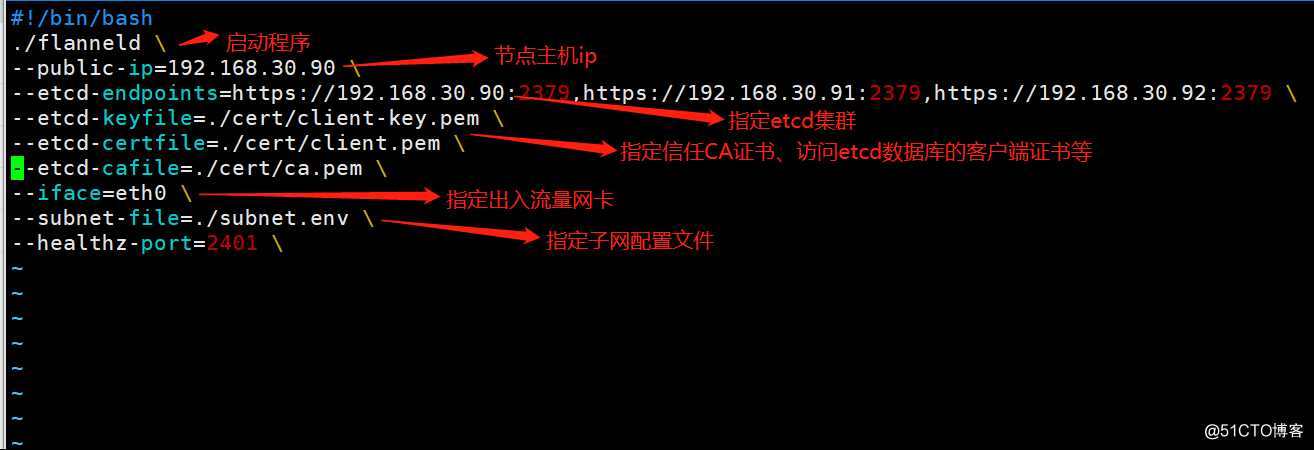

Write the flannel startup script

[root@k8s-master-1 ~]# vim /kubernetes/flannel/flanneld.sh
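The flannel script is not reproduced in the original text. Below is a minimal sketch that generates one. flanneld reads the network configuration written to `/coreos.com/network/config` in etcd and authenticates with the client certificate copied into ./cert below. It records its subnet lease in ./subnet.env; when that file already exists, flanneld tries to reuse the subnet recorded there. The node IP is the per-node value the article says to change. The interface name `eth0` is an assumption (CentOS 7 often uses names such as `ens33`).

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/flannel}"   # the article uses /kubernetes/flannel
NODE_IP="${NODE_IP:-192.168.30.90}"          # this node's address; change per node
mkdir -p "$OUT_DIR"
{
  echo '#!/bin/bash'
  echo "NODE_IP=${NODE_IP}"
  cat <<'EOF'
# eth0 is an assumed interface name; use the NIC that carries NODE_IP.
exec ./flanneld \
  --public-ip="$NODE_IP" \
  --etcd-endpoints=https://192.168.30.90:2379,https://192.168.30.91:2379,https://192.168.30.92:2379 \
  --etcd-cafile=./cert/ca.pem \
  --etcd-certfile=./cert/client.pem \
  --etcd-keyfile=./cert/client-key.pem \
  --iface=eth0 \
  --subnet-file=./subnet.env
EOF
} > "$OUT_DIR/flanneld.sh"
chmod +x "$OUT_DIR/flanneld.sh"
```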

Make it executable

[root@k8s-master-1 ~]# chmod +x /kubernetes/flannel/flanneld.sh

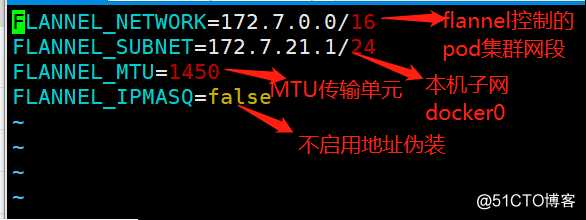

Create subnet.env, which holds the subnet environment variables

[root@k8s-master-1 ~]# vim /kubernetes/flannel/subnet.env
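The file contents are not shown in the original text. Below is a minimal sketch using flannel's standard subnet.env variables. FLANNEL_NETWORK matches the 172.7.0.0/16 network written to etcd below. FLANNEL_SUBNET should equal this node's docker0 address; 172.7.21.1/24 for master-1 is an assumption.

```shell
set -eu
OUT_DIR="${OUT_DIR:-./kubernetes/flannel}"              # the article uses /kubernetes/flannel
FLANNEL_SUBNET="${FLANNEL_SUBNET:-172.7.21.1/24}"       # must equal this node's docker0 "bip"
mkdir -p "$OUT_DIR"
# Unquoted heredoc so ${FLANNEL_SUBNET} is expanded.
cat > "$OUT_DIR/subnet.env" <<EOF
FLANNEL_NETWORK=172.7.0.0/16
FLANNEL_SUBNET=${FLANNEL_SUBNET}
FLANNEL_MTU=1500
FLANNEL_IPMASQ=false
EOF
```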

Copy the required certificate files

[root@k8s-master-1 ~]# mkdir -p /kubernetes/flannel/cert && cp /certs/{ca.pem,client-key.pem,client.pem} /kubernetes/flannel/cert/

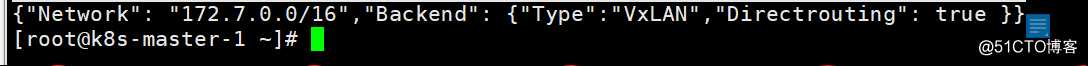

Write the flannel network configuration into etcd by hand: backend type vxlan, with direct routing enabled

[root@k8s-master-1 ~]# /kubernetes/etcd/etcdctl set /coreos.com/network/config '{"Network": "172.7.0.0/16", "Backend": {"Type": "vxlan", "DirectRouting": true}}'

Check the network configuration; the type is vxlan

[root@k8s-master-1 ~]# /kubernetes/etcd/etcdctl get /coreos.com/network/config

[root@k8s-master-1 ~]# scp -r /kubernetes/flannel/ 192.168.30.91:/kubernetes/ #then, on that node, set the node IP in the start script to its own address and the subnet in subnet.env to its docker0 subnet

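[root@k8s-master-1 ~]# scp -r /kubernetes/flannel/ 192.168.30.92:/kubernetes/ #then, on that node, set the node IP in the start script to its own address and the subnet in subnet.env to its docker0 subnet

Start the flannel network plugin on every node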

[root@k8s-master-1 ~]# cd /kubernetes/flannel/ && nohup ./flanneld.sh &> /kubernetes/flannel/flanneld.out &

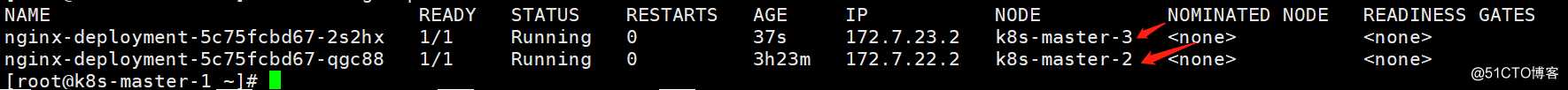

The two Pods run on different nodes

[root@k8s-master-1 ~]# kubectl get pod -o wide

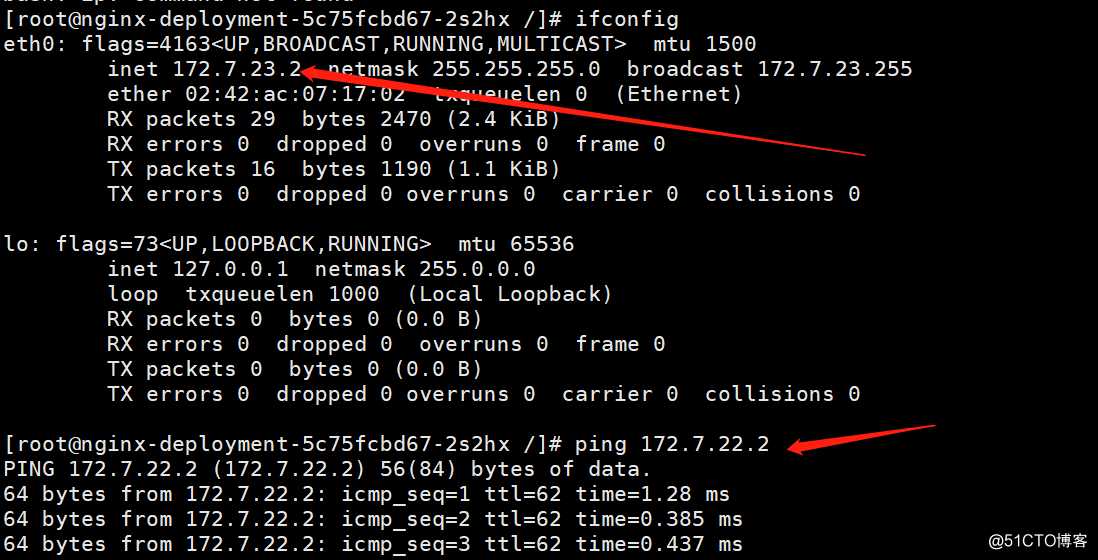

Enter one of the Pod containers and ping the Pod on the other node: Pods can now communicate across nodes

[root@k8s-master-1 ~]# kubectl exec -it nginx-deployment-5c75fcbd67-2s2hx -- bash

Manually deploying a Kubernetes cluster from binaries - k8s-1.17.9

Original article: https://blog.51cto.com/14234542/2518424