Reading NRT, reading PyTorch

def run(data_type, existing_model_name = None):

modules, consts, options = init_modules(data_type)

def init_modules(data_type):

options = {}

options["is_predicting"] = False # False when training, True when predicting

print ("is_predicting: ", options["is_predicting"], ", data = " + data_type)

options["is_unicode"] = True

print ("loading...") # load the prepared data file

all_data = pickle.load(open("./data/data_"+ data_type + ".pkl", "rb"))

'''

x_raw_train/dev/test: [[0, 0, 5, ['nice', 'app'], ['nice', 'app', ...]], [...]]

old_dic: {'nice': 1569, ...} len=24177

dic: {'nice': 1569, ...} len=6468 (after threshold filtering)

hfw: ['<unk>', '<eos>', 'the', ...]

i2w: {0: 'nice', 1: 'app', ...} w2i: {'nice': 0, 'app': 1, ...}

w2w: {'nice': 'nice', 'sy': '<unk>', ...}

w2idf: {'nice': 1.1639902059516225, ...}

i2user: {0: 16672, ...} user2i: {16672: 0, ...} (user names)

i2item / item2i (item names)

user2w: {0: {0, 1, 2, 3, 4, 6467}, 1: {...}, ...} all reviews of the same user, as word ids

item2w: {0: {0, 1, 2, ..., 1333, 1902, 6467}, 1: {...}, ...} all reviews of the same item, as word ids

'''

[x_raw_train, x_raw_dev, x_raw_test, old_dic, dic, hfw, i2w, w2i, w2w, w2idf, i2user, user2i, i2item, item2i, user2w, item2w] = all_data

consts = {}

consts["num_user"] = len(user2i)

consts["num_item"] = len(item2i)

consts["dict_size"] = len(dic)

consts["hfw_dict_size"] = len(hfw)

consts["lvt_dict_size"] = LVT_DICT_SIZE #6468

consts["len_y"] = LEN_Y # 35 + 1

consts["dim_x"] = DIM_X # 300, as stated in the paper

consts["dim_w"] = DIM_W # 300

consts["dim_h"] = DIM_H # 400

consts["dim_r"] = DIM_RATING # 6

consts["max_len_predict"] = consts["len_y"]

consts["min_len_predict"] = 1

consts["batch_size"] = 200

consts["test_batch_size"] = 1

consts["test_samples"] = 2000

consts["beam_size"] = 5

consts["lr"] = 1.

consts["print_size"] = 2 #400 2 for demo

print ("#train = ", len(x_raw_train), ", #dev = ", len(x_raw_dev), ", #test = ", len(x_raw_test))

print ("#user = ", consts["num_user"], ", #item = ", consts["num_item"])

print ("#dic = ", consts["dict_size"], ", #hfw = ", consts["hfw_dict_size"])

modules = {}

modules["all_data"] = all_data

modules["hfw"] = hfw

modules["w2i"] = w2i

modules["i2w"] = i2w

modules["w2w"] = w2w

modules["u2ws"] = user2w

modules["i2ws"] = item2w

modules["w2idf"] = w2idf

modules["eos_emb"] = modules["w2i"][W_EOS]

modules["unk_emb"] = modules["w2i"][W_UNK]

modules["optimizer"] = "adadelta"

modules["stopwords"] = datar.load_stopwords("./data/stopwords_en.txt")

return modules, consts, options

print ("compiling...")

model = NeuralRecsys(modules, consts, options)

if training_model:

print ("training......")

[x_raw_train, x_raw_dev, x_raw_test, old_dic, dic, hfw, i2w, w2i, w2w, w2idf, i2user, user2i, i2item, item2i, user2w, item2w] = modules["all_data"]

for epoch in range(0, 50):

if epoch > 2:

consts["lr"] = 0.1

start = time.time()

# [[randomly shuffled indices], [randomly shuffled indices], [] ...]

batch_list = datar.get_batch_index(len(x_raw_train), consts["batch_size"])

error = 0

error_i = 0

error_rmse = 0

e_nll = 0

e_nllu = 0

e_nlli = 0

e_cce = 0

e_mae = 0

e_l2n = 0

for batch_index in batch_list:

local_batch_size = len(batch_index)

# extract the training data needed for the current batch

batch_raw = [x_raw_train[bxi] for bxi in batch_index]

load_time = time.time()

XU, XI, Y_r, Y_r_vec, Dict_lvt, Y_rev_tf, Y_rev_mark, Y_sum_idx, Y_sum_mark, Y_sum_lvt, lvt_i2i = datar.get_batch_data(batch_raw, consts, options, modules)

def get_batch_data(raw_x, consts, options, modules):

batch_size = len(raw_x)

hfw = modules["hfw"]

w2i = modules["w2i"]

i2w = modules["i2w"]

w2w = modules["w2w"]

w2idf = modules["w2idf"]

u2ws = modules["u2ws"]

i2ws = modules["i2ws"]

len_y = consts["len_y"]

lvt_dict_size = consts["lvt_dict_size"]

xu = np.zeros(batch_size, dtype = "int64") # user ids of the batch

xi = np.zeros(batch_size, dtype = "int64") # item ids of the batch

yr = np.zeros(batch_size, dtype = theano.config.floatX) # ratings of the batch

# one_hot encode DIM_RATING: 0--5 = 6

yr_vec = np.zeros((batch_size, DIM_RATING) , dtype = theano.config.floatX)

y_review_mark = np.zeros((batch_size, lvt_dict_size) , dtype = theano.config.floatX)

y_review_tf = np.zeros((batch_size, lvt_dict_size) , dtype = theano.config.floatX)

y_summary_index = np.zeros((len_y, batch_size, 1) , dtype = "int64")

y_summary_mark = np.zeros((len_y, batch_size) , dtype = theano.config.floatX)

y_summary_lvt = np.zeros((len_y, batch_size, 1) , dtype = "int64")

dic_review_words = {}

w2i_review_words = {}

lst_review_words = []

lvt_user_item = {w2i[W_EOS]}

lvt_i2i = {}

for i in range(batch_size):

uid, iid, r, summary, review = raw_x[i]

xu[i] = uid

xi[i] = iid

yr[i] = r

yr_vec[i, int(r)] = 1.

lvt_user_item |= u2ws[uid] | i2ws[iid] # merge all review words of this user and this item

if len(summary) > len_y: # maximum length

summary = summary[0:len_y-1] + [W_EOS]

for wi in range(len(summary)):

w = summary[wi]

w = w2w[w]

if w in dic_review_words:

dic_review_words[w] += 1 # count the occurrences of each word

else:

w2i_review_words[w] = len(dic_review_words) # build the word-to-id map of the current batch

dic_review_words[w] = 1

lst_review_words.append(w2i[w])

y_summary_index[wi, i, 0] = w2i[w] #?

y_summary_lvt[wi, i, 0] = w2i_review_words[w] #?

y_summary_mark[wi, i] = 1 #?

for w in review:

w = w2w[w]

if w in dic_review_words:

dic_review_words[w] += 1

else:

w2i_review_words[w] = len(dic_review_words)

dic_review_words[w] = 1

lst_review_words.append(w2i[w])

y_review_mark[i, w2i_review_words[w]] = 1

y_review_tf[i, w2i_review_words[w]] += 1 #w2idf[w]

y_review_tf /= 10.

# pad with high-frequency words that did not appear in the current batch (count = 0)

if len(dic_review_words) < lvt_dict_size:

for rd_hfw in hfw:

if rd_hfw not in dic_review_words:

w2i_review_words[rd_hfw] = len(dic_review_words)

dic_review_words[rd_hfw] = 0

lst_review_words.append(w2i[rd_hfw])

if len(dic_review_words) == lvt_dict_size:

break

else:

print ("!!!!!!!!!!!!")

for i in range(len(lst_review_words)):

lvt_i2i[i] = lst_review_words[i]

assert len(dic_review_words) == lvt_dict_size

assert len(lst_review_words) == lvt_dict_size

if options["is_predicting"]:

lvt_i2i = {}

lst_review_words = list(lvt_user_item)

for i in range(len(lst_review_words)):

lvt_i2i[i] = lst_review_words[i]

lst_review_words = np.asarray(lst_review_words)

return xu, xi, yr, yr_vec, lst_review_words, y_review_tf, y_review_mark, y_summary_index, y_summary_mark, y_summary_lvt, lvt_i2i
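The batch-local vocabulary that get_batch_data builds (w2i_review_words, lst_review_words, lvt_i2i) is easier to follow on toy data. The following is only a simplified sketch of that idea in plain Python: the function name build_local_vocab, the variable names local_w2i and local_to_global, and the six-word vocabulary are made up for illustration and are not part of the original code.

```python
def build_local_vocab(batch_words, hfw, w2i, lvt_dict_size):
    """Give every word seen in the batch a local id, then pad with
    high-frequency words until the local vocabulary has a fixed size."""
    counts = {}            # word -> occurrences in this batch (0 for padding words)
    local_w2i = {}         # word -> local id in [0, lvt_dict_size)
    local_to_global = []   # local id -> global id from w2i
    for w in batch_words:
        if w in counts:
            counts[w] += 1
        else:
            local_w2i[w] = len(counts)
            counts[w] = 1
            local_to_global.append(w2i[w])
    for w in hfw:          # pad with frequent words not seen in the batch
        if len(counts) == lvt_dict_size:
            break
        if w not in counts:
            local_w2i[w] = len(counts)
            counts[w] = 0
            local_to_global.append(w2i[w])
    return local_w2i, local_to_global


# Toy vocabulary (hypothetical, not taken from the real dataset).
w2i = {"<unk>": 0, "<eos>": 1, "the": 2, "nice": 3, "app": 4, "bad": 5}
hfw = ["<unk>", "<eos>", "the", "nice", "app", "bad"]
local_w2i, local_to_global = build_local_vocab(
    ["nice", "app", "nice", "<eos>"], hfw, w2i, lvt_dict_size=5)
print(local_w2i)        # local ids, in order of first appearance
print(local_to_global)  # position = local id, value = global id
```

The model only scores the words of the local vocabulary (the columns selected by lvt_dict), and local_to_global plays the role of lvt_i2i when a predicted local id has to be turned back into a word.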

cost, mse, nll, nllu, nlli, cce, mae, l2n, rat_pred, sum_pred = model.train(XU, XI, Y_r, Y_r_vec, Dict_lvt, Y_rev_tf, Y_rev_mark, Y_sum_idx, Y_sum_mark, Y_sum_lvt, local_batch_size, consts["lr"])

#print cost, mse, nll, cce, l2n

error += cost

error_rmse += math.sqrt(mse)

e_nll += nll

e_nllu += nllu

e_nlli += nlli

e_cce += cce

e_mae += mae

e_l2n += l2n

error_i += 1

if error_i % consts["print_size"] == 0:

print ("epoch=", epoch, ", Error now = ", error / error_i, ", ",)

print ("RMSE now = ", error_rmse / error_i, ", ",)

print ("MAE now = ", e_mae / error_i, ", ",)

print ("NLL now = ", e_nll / error_i, ", ",)

print ("NLLu now = ", e_nllu / error_i, ", ",)

print ("NLLi now = ", e_nlli / error_i, ", ",)

print ("CCE now = ", e_cce / error_i, ", ",)

print ("L2 now = ", e_l2n / error_i, ", ",)

print (error_i, "/", len(batch_list), "time=", time.time()-start)

save_model("./model/m_" + data_type + "." + str(epoch) + "." + str(int(error_i / consts["print_size"])), model)

print_sent_dec(sum_pred, Y_sum_idx, Y_sum_mark, modules, consts, options, lvt_i2i)

save_model("./model/m_" + data_type + "." + str(epoch), model)

#print_sent_dec(sum_pred, Y_sum_idx, Y_sum_mark, modules, consts, options, lvt_i2i)

print ("epoch=", epoch, ", Error = ", error / len(batch_list),)

print (", RMSE = ", error_rmse / len(batch_list), ", time=", time.time()-start)

Why does every output come out as unk?

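Theano is a Python library that lets you define, optimize, and evaluate mathematical expressions, especially ones involving multi-dimensional arrays (numpy.ndarray).

The Python package closest to Theano is sympy. Theano focuses more on tensor expressions than sympy does, and has more machinery for compilation.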

class NeuralRecsys(object):

def __init__(self, modules, consts, options):

self.is_predicting = options["is_predicting"]

self.optimizer = modules["optimizer"]

# import theano.tensor as T

# set up the symbolic input variables

self.xu = T.vector("xu", dtype = "int64")

self.xi = T.vector("xi", dtype = "int64")

self.y_rating = T.vector("y_rating")

self.lvt_dict = T.lvector("lvt_dict")

self.y_rev_mark = T.matrix("y_rev_mark")

self.y_rev_tf = T.matrix("y_rev_tf")

self.y_rat_vec = T.matrix("y_rat_vec")

self.y_sum_idx = T.tensor3("y_sum_idx", dtype = "int64")

self.y_sum_mark = T.matrix("y_sum_mark")

if not self.is_predicting:

self.y_sum_lvt = T.tensor3("y_sum_lvt", dtype = "int64")

self.lr = T.scalar("lr")

self.batch_size = T.iscalar("batch_size")

self.num_user = consts["num_user"]

self.num_item = consts["num_item"]

self.dict_size = consts["hfw_dict_size"]

self.lvt_dict_size = consts["lvt_dict_size"]

self.dim_x = consts["dim_x"]

self.dim_w = consts["dim_w"]

self.dim_h = consts["dim_h"]

self.dim_r = consts["dim_r"]

self.len_y = consts["len_y"]

self.params = []

self.sub_params = []

self.define_layers(modules, consts, options)

if not self.is_predicting:

self.define_train_funcs(modules, consts, options)

'''

The number of layers for the rating regression model is 4, and for the tips generation model is 1.

'''

def define_layers(self, modules, consts, options):

# init_weights comes from utils_pg.py

self.emb_u = init_weights((self.num_user, self.dim_x), "emb_u", sample = "normal")

self.emb_i = init_weights((self.num_item, self.dim_x), "emb_i", sample = "normal")

self.params += [self.emb_u, self.emb_i]

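xu_flat = self.xu.flatten() # a vector? yes: self.xu is already a 1-D T.vector, so flatten() changes nothing here

xi_flat = self.xi.flatten()

xu_emb = self.emb_u[xu_flat, :] # row lookup: picks the embedding row of each user id in the batch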

xu_emb = T.reshape(xu_emb, (self.batch_size, self.dim_x))

xi_emb = self.emb_i[xi_flat, :]

xi_emb = T.reshape(xi_emb, (self.batch_size, self.dim_x))
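The indexing self.emb_u[xu_flat, :] is an embedding lookup: indexing a matrix with a vector of integer ids returns one row per id, giving a (batch_size, dim_x) matrix. Below is a plain-Python sketch of the same operation on nested lists. The helper lookup and the numbers in the table are invented for illustration.

```python
# A tiny embedding table: 4 users, 3 dimensions each (made-up numbers).
emb_u = [
    [0.1, 0.2, 0.3],  # user 0
    [1.1, 1.2, 1.3],  # user 1
    [2.1, 2.2, 2.3],  # user 2
    [3.1, 3.2, 3.3],  # user 3
]


def lookup(table, ids):
    """Return one table row per id, i.e. a (len(ids), dim) matrix."""
    return [table[i] for i in ids]


xu = [2, 0, 2]               # user ids of a batch of size 3
xu_emb = lookup(emb_u, xu)   # shape (3, 3): the same user may appear twice
print(xu_emb)
```

Because the rows are selected from a trainable table, the gradient of the loss only touches the rows of the users and items that occur in the batch.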

## user-doc

self.W_ud_uh = init_weights((self.dim_x, self.dim_h), "W_ud_uh")

self.b_ud_uh = init_bias(self.dim_h, "b_ud_uh")

self.W_ud_hd = init_weights((self.dim_h, self.dict_size), "W_ud_hd")

self.b_ud_hd = init_bias(self.dict_size, "b_ud_hd")

self.sub_W_ud_hd = self.W_ud_hd[:, self.lvt_dict]

self.sub_b_ud_hd = self.b_ud_hd[self.lvt_dict]

self.params += [self.W_ud_uh, self.b_ud_uh]

self.sub_params = [(self.W_ud_hd, self.sub_W_ud_hd, (self.dim_h, self.lvt_dict_size)),

(self.b_ud_hd, self.sub_b_ud_hd, (self.lvt_dict_size,))]

h_ru = T.nnet.sigmoid(T.dot(xu_emb, self.W_ud_uh) + self.b_ud_uh)

self.W_ud_uh2 = init_weights((self.dim_h, self.dim_h), "W_ud_uh2")

self.b_ud_uh2 = init_bias(self.dim_h, "b_ud_uh2")

self.params += [self.W_ud_uh2, self.b_ud_uh2]

h_ru2 = T.nnet.sigmoid(T.dot(h_ru, self.W_ud_uh2) + self.b_ud_uh2) ###

self.W_ud_uh3 = init_weights((self.dim_h, self.dim_h), "W_ud_uh3")

self.b_ud_uh3 = init_bias(self.dim_h, "b_ud_uh3")

self.params += [self.W_ud_uh3, self.b_ud_uh3]

h_ru3 = T.nnet.sigmoid(T.dot(h_ru2, self.W_ud_uh3) + self.b_ud_uh3) ##############

## item-doc

self.W_id_ih = init_weights((self.dim_x, self.dim_h), "W_id_ih")

self.b_id_ih = init_bias(self.dim_h, "b_id_ih")

self.W_id_hd = init_weights((self.dim_h, self.dict_size), "W_id_hd")

self.b_id_hd = init_bias(self.dict_size, "b_id_hd")

self.sub_W_id_hd = self.W_id_hd[:, self.lvt_dict]

self.sub_b_id_hd = self.b_id_hd[self.lvt_dict]

self.params += [self.W_id_ih, self.b_id_ih]

self.sub_params += [(self.W_id_hd, self.sub_W_id_hd, (self.dim_h, self.lvt_dict_size)),

(self.b_id_hd, self.sub_b_id_hd, (self.lvt_dict_size,))]

h_ri = T.nnet.sigmoid(T.dot(xi_emb, self.W_id_ih) + self.b_id_ih)

self.W_id_ih2 = init_weights((self.dim_h, self.dim_h), "W_id_ih2")

self.b_id_ih2 = init_bias(self.dim_h, "b_id_ih2")

self.params += [self.W_id_ih2, self.b_id_ih2]

h_ri2 = T.nnet.sigmoid(T.dot(h_ri, self.W_id_ih2) + self.b_id_ih2) ###

self.W_id_ih3 = init_weights((self.dim_h, self.dim_h), "W_id_ih3")

self.b_id_ih3 = init_bias(self.dim_h, "b_id_ih3")

self.params += [self.W_id_ih3, self.b_id_ih3]

h_ri3 = T.nnet.sigmoid(T.dot(h_ri2, self.W_id_ih3) + self.b_id_ih3) #####

## user-item-doc

self.W_uid_uh = init_weights((self.dim_x, self.dim_h), "W_uid_uh")

self.W_uid_ih = init_weights((self.dim_x, self.dim_h), "W_uid_ih")

self.b_uid_uih = init_bias(self.dim_h, "b_uid_uih")

self.W_uid_hd = init_weights((self.dim_h, self.dict_size), "W_uid_hd")

self.b_uid_hd = init_bias(self.dict_size, "b_uid_hd")

self.sub_W_uid_hd = self.W_uid_hd[:, self.lvt_dict]

self.sub_b_uid_hd = self.b_uid_hd[self.lvt_dict]

self.params += [self.W_uid_uh, self.W_uid_ih, self.b_uid_uih]

self.sub_params += [(self.W_uid_hd, self.sub_W_uid_hd, (self.dim_h, self.lvt_dict_size)),

(self.b_uid_hd, self.sub_b_uid_hd, (self.lvt_dict_size,))]

h_rui = T.nnet.sigmoid(T.dot(xu_emb, self.W_uid_uh) + T.dot(xi_emb, self.W_uid_ih) + self.b_uid_uih)

self.W_uid_uih2 = init_weights((self.dim_h, self.dim_h), "W_uid_uih2")

self.b_uid_uih2 = init_bias(self.dim_h, "b_uid_uih2")

self.params += [self.W_uid_uih2, self.b_uid_uih2]

h_rui2 = T.nnet.sigmoid(T.dot(h_rui, self.W_uid_uih2) + self.b_uid_uih2) ###

self.W_uid_uih3 = init_weights((self.dim_h, self.dim_h), "W_uid_uih3")

self.b_uid_uih3 = init_bias(self.dim_h, "b_uid_uih3")

self.params += [self.W_uid_uih3, self.b_uid_uih3]

h_rui3 = T.nnet.sigmoid(T.dot(h_rui2, self.W_uid_uih3) + self.b_uid_uih3) #####

# -------------------- what is all of the stuff above? -------------------------------

# predict 1: rating

self.W_r_uh = init_weights((self.dim_x, self.dim_h), "W_r_uh")

self.W_r_ih = init_weights((self.dim_x, self.dim_h), "W_r_ih")

self.W_r_uih = init_weights((self.dim_x, self.dim_h), "W_r_uih")

self.b_r_uih = init_bias(self.dim_h, "b_r_uih")

self.W_r_hr = init_weights((self.dim_h, 1), "W_r_hr")

self.W_r_ur = init_weights((self.dim_x, 1), "W_r_ur")

self.W_r_ir = init_weights((self.dim_x, 1), "W_r_ir")

self.b_r_hr = init_bias(1, "b_r_hr")

self.params += [self.W_r_uh, self.W_r_ih, self.W_r_uih, self.b_r_uih, self.W_r_hr, self.b_r_hr, self.W_r_ur, self.W_r_ir]

# Eq. (3)

h_r = T.nnet.sigmoid(T.dot(xu_emb, self.W_r_uh) + T.dot(xi_emb, self.W_r_ih) + T.dot(xu_emb * xi_emb, self.W_r_uih) + self.b_r_uih)

self.W_r_hh2 = init_weights((self.dim_h, self.dim_h), "W_r_hh2")

self.b_r_hh2 = init_bias(self.dim_h, "b_r_hh2")

self.params += [self.W_r_hh2, self.b_r_hh2]

# Eq. (5), multiple layers

h_r2 = T.nnet.sigmoid(T.dot(h_r, self.W_r_hh2) + self.b_r_hh2) ###

self.W_r_hh3 = init_weights((self.dim_h, self.dim_h), "W_r_hh3")

self.b_r_hh3 = init_bias(self.dim_h, "b_r_hh3")

self.params += [self.W_r_hh3, self.b_r_hh3]

# Eq. (5)

h_r3 = T.nnet.sigmoid(T.dot(h_r2, self.W_r_hh3) + self.b_r_hh3) #####

## merge them

self.review_i = T.nnet.softmax(T.dot(h_ri3, self.sub_W_id_hd) + self.sub_b_id_hd)

self.review_u = T.nnet.softmax(T.dot(h_ru3, self.sub_W_ud_hd) + self.sub_b_ud_hd)

self.review = T.nnet.softmax(T.dot(h_rui3, self.sub_W_uid_hd) + self.sub_b_uid_hd)

self.W_r_uhh = init_weights((self.dim_h, self.dim_h), "W_r_uhh")

self.W_r_ihh = init_weights((self.dim_h, self.dim_h), "W_r_ihh")

self.W_r_uihh = init_weights((self.dim_h, self.dim_h), "W_r_uihh")

self.W_r_rhh = init_weights((self.dim_h, self.dim_h), "W_r_rhh")

self.b_r_uirh = init_bias(self.dim_h, "b_r_uirh")

self.params += [self.W_r_uhh, self.W_r_ihh, self.W_r_uihh, self.W_r_rhh, self.b_r_uirh]

# Eq. (6), apparently with some extra terms added

h_r4 = T.nnet.sigmoid(T.dot(h_ru3, self.W_r_uhh) + T.dot(h_ri3, self.W_r_ihh) + T.dot(h_r3, self.W_r_rhh) + T.dot(h_rui3, self.W_r_uihh) + self.b_r_uirh)

self.r_pred = T.dot(h_r4, self.W_r_hr) + T.dot(xu_emb, self.W_r_ur) + T.dot(xi_emb, self.W_r_ir) + self.b_r_hr

# predict 3: summary----------------------------------------------------------

# I don't understand anything from here on

self.emb_w = init_weights((self.dict_size, self.dim_w), "emb_w", sample = "normal")

if self.is_predicting:

emb_r = T.zeros((self.batch_size, self.dim_r))

r_idx = T.round(self.r_pred)

r_idx = T.cast(r_idx, "int64")

r_idx = T.clip(r_idx, 0, 5)

emb_r = T.inc_subtensor(emb_r[:, r_idx], T.cast(1.0, dtype = theano.config.floatX))

else:

emb_r = self.y_rat_vec

self.W_s_uh = init_weights((self.dim_x, self.dim_h), "W_s_uh")

self.W_s_ih = init_weights((self.dim_x, self.dim_h), "W_s_ih")

self.b_s_uih = init_bias(self.dim_h, "b_s_uih")

self.W_s_rh = init_weights((self.dim_r, self.dim_h), "W_s_rh")

self.W_s_uch = init_weights((self.dim_h, self.dim_h), "W_s_uch")

self.W_s_ich = init_weights((self.dim_h, self.dim_h), "W_s_ich")

self.W_s_uich = init_weights((self.dim_h, self.dim_h), "W_s_uich")

self.params += [self.emb_w, self.W_s_uh, self.W_s_ih, self.b_s_uih, self.W_s_rh, self.W_s_uch, self.W_s_ich, self.W_s_uich]

## initialize hidden state

# Eq. (17)

h_s = T.tanh(T.dot(xu_emb, self.W_s_uh) + T.dot(xi_emb, self.W_s_ih) + T.dot(emb_r, self.W_s_rh) + T.dot(h_ru3, self.W_s_uch) + T.dot(h_ri3, self.W_s_ich) + T.dot(h_rui3, self.W_s_uich) + self.b_s_uih)

if self.is_predicting:

inputs = [self.xu, self.xi, self.batch_size]

self.encode = theano.function(inputs = inputs,

outputs = [self.r_pred, h_s, emb_r, xu_emb, xi_emb],

on_unused_input = 'ignore')

y_flat = self.y_sum_idx.flatten()

if self.is_predicting:

y_emb = ifelse(T.lt(T.sum(y_flat), 0),

T.zeros((self.batch_size, self.dim_w)), self.emb_w[y_flat, :])

y_emb = T.reshape(y_emb, (self.batch_size, self.dim_w))

else:

y_emb = self.emb_w[y_flat, :]

y_emb = T.reshape(y_emb, (self.len_y, self.batch_size, self.dim_w))

y_shifted = T.zeros_like(y_emb)

y_shifted = T.set_subtensor(y_shifted[1:, :, :], y_emb[:-1, :, :])

y_emb = y_shifted
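The y_shifted lines delay the target sequence by one time step, so that at step t the decoder is fed the embedding of the word from step t-1 (and zeros at step 0). This is the usual teacher-forcing setup. A minimal sketch on a list of tokens follows. The function shift_right and the placeholder token "<zero>" are hypothetical names used only here.

```python
def shift_right(steps, pad):
    """Delay a sequence by one step: position t receives the item from t-1,
    and position 0 receives `pad`."""
    return [pad] + steps[:-1]


target = ["great", "app", "<eos>"]           # what the decoder should output
decoder_in = shift_right(target, "<zero>")   # what it is fed at each step

for t, (inp, out) in enumerate(zip(decoder_in, target)):
    print(t, inp, "->", out)
```

In the Theano code the same shift is done on the first axis of the (len_y, batch_size, dim_w) tensor with T.set_subtensor.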

# gru decoder

# where does this part come from? The paper doesn't seem to describe it

self.W_yh = init_weights((self.dim_w, self.dim_h), "W_yh", num_concatenate = 2, axis_concatenate = 1)

self.b_yh = init_bias(self.dim_h, "b_yh", num_concatenate = 2)

self.W_yhx = init_weights((self.dim_w, self.dim_h), "W_yhx")

self.b_yhx = init_bias(self.dim_h, "b_yhx")

self.W_hru = init_weights((self.dim_h, self.dim_h), "W_hru", "ortho", num_concatenate = 2, axis_concatenate = 1)

self.W_hh = init_weights((self.dim_h, self.dim_h), "W_hh", "ortho")

self.params += [self.W_yh, self.b_yh, self.W_yhx, self.b_yhx, self.W_hru, self.W_hh]

def _slice(x, n):

if x.ndim == 3:

return x[:, :, n * self.dim_h : (n + 1) * self.dim_h]

return x[:, n * self.dim_h : (n + 1) * self.dim_h]

dec_in_x = T.dot(y_emb, self.W_yh) + self.b_yh

dec_in_xx = T.dot(y_emb, self.W_yhx) + self.b_yhx

def _active(x, xx, y_mask, pre_h, W_hru, W_hh):

tmp1 = T.nnet.sigmoid(T.dot(pre_h, W_hru) + x)

r1 = _slice(tmp1, 0)

u1 = _slice(tmp1, 1)

h1 = T.tanh(T.dot(pre_h * r1, W_hh) + xx)

h1 = u1 * pre_h + (1.0 - u1) * h1

h1 = y_mask[:, None] * h1 #+ (1.0 - y_mask) * pre_h

return h1

if self.is_predicting:

self.y_sum_mark = T.ones((self.batch_size, 1))

sequences = [dec_in_x, dec_in_xx, self.y_sum_mark]

non_sequences = [self.W_hru, self.W_hh]

if self.is_predicting:

self.dec_next_state = T.matrix("dec_next_state")

print ("use one-step decoder")

hs = _active(*(sequences + [self.dec_next_state] + non_sequences))

else:

hs, _ = theano.scan(_active,

sequences = sequences,

outputs_info = [h_s],

non_sequences = non_sequences,

allow_gc = False, strict = True)

# output layer

self.W_hy = init_weights((self.dim_h, self.dim_h), "W_hy")

self.W_yy = init_weights((self.dim_w, self.dim_h), "W_yy")

self.W_uy = init_weights((self.dim_x, self.dim_h), "W_uy")

self.W_iy = init_weights((self.dim_x, self.dim_h), "W_iy")

self.W_ry = init_weights((self.dim_r, self.dim_h), "W_ry")

self.b_hy = init_bias(self.dim_h, "b_hy")

self.W_logit = init_weights((self.dim_h, self.dict_size), "W_logit")

self.b_logit = init_bias(self.dict_size, "b_logit")

self.sub_W_logit = self.W_logit[:, self.lvt_dict]

self.sub_b_logit = self.b_logit[self.lvt_dict]

self.params += [self.W_hy, self.W_yy, self.W_uy, self.W_iy, self.W_ry, self.b_hy]

self.sub_params += [(self.W_logit, self.sub_W_logit, (self.dim_h, self.lvt_dict_size)),

(self.b_logit, self.sub_b_logit, (self.lvt_dict_size,))]

logit = T.tanh(T.dot(hs, self.W_hy) + T.dot(y_emb, self.W_yy) + T.dot(xu_emb, self.W_uy) + T.dot(xi_emb, self.W_iy) + T.dot(emb_r, self.W_ry) + self.b_hy)

logit = T.dot(logit, self.sub_W_logit) + self.sub_b_logit

old_shape = logit.shape

if self.is_predicting:

self.y_sum_pred = T.nnet.softmax(logit)

else:

self.y_sum_pred = T.nnet.softmax(logit.reshape((old_shape[0] * old_shape[1], old_shape[2]))).reshape(old_shape)

if self.is_predicting:

emb_r_enc = T.matrix("emb_r_enc")

xu_emb_enc = T.matrix("xu_emb_enc")

xi_emb_enc = T.matrix("xi_emb_enc")

inputs = [self.y_sum_idx, self.dec_next_state, emb_r, xu_emb, xi_emb, self.batch_size, self.lvt_dict]

self.decode_once = theano.function(inputs = inputs,

outputs = [self.y_sum_pred, hs],

on_unused_input = 'ignore')

def define_train_funcs(self, modules, consts, options):

mse = self.cost_mse(self.r_pred, self.y_rating.reshape((self.batch_size, 1)))

nll = self.cost_nll(self.review, self.y_rev_tf, self.y_rev_mark)

nllu = self.cost_nll(self.review_u, self.y_rev_tf, self.y_rev_mark)

nlli = self.cost_nll(self.review_i, self.y_rev_tf, self.y_rev_mark)

cce = self.categorical_crossentropy(modules)

mae = self.cost_mae(self.r_pred, self.y_rating.reshape((self.batch_size, 1)))

l2n = self.l2_norm()

cost = mse + nll + nllu + nlli + cce

# Negative Log-Likelihood (NLL), Eq. (16)

def cost_nll(self, pred, label, mark):

cost = -T.log(pred) * label * mark

cost = T.mean(T.sum(cost, axis = 1))

return cost
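To see what cost_nll computes, here is the same formula written out in plain Python for a single example with a three-word local vocabulary. The numbers are invented. Only the formula (mean over the batch of the sum of -log(pred) * label * mark) is taken from the code above.

```python
import math


def cost_nll(pred, label, mark):
    """Mean over the batch of sum_w -log(pred[w]) * label[w] * mark[w]."""
    per_example = []
    for p_row, l_row, m_row in zip(pred, label, mark):
        per_example.append(sum(-math.log(p) * l * m
                               for p, l, m in zip(p_row, l_row, m_row)))
    return sum(per_example) / len(per_example)


pred = [[0.5, 0.25, 0.25]]   # softmax output over the local vocabulary
label = [[0.2, 0.0, 0.1]]    # term frequency / 10, as in y_review_tf
mark = [[1.0, 0.0, 1.0]]     # 1 where the word occurs in the review
loss = cost_nll(pred, label, mark)
print(loss)                  # 0.2 * ln 2 + 0.1 * ln 4 = 0.4 * ln 2
```

Words that do not occur in the review contribute nothing, and words that occur more often are weighted more heavily through label.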

https://stackoverflow.com/questions/38931112/theano-function-how-does-it-work

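In function(), updates takes (old_w, new_w) pairs, e.g. updates=[(old_w, new_w)]. Each time the compiled function is called, old_w is replaced with the value of new_w.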

gparams = []

for param in self.params:

gparams.append(T.clip(T.grad(cost, param), -10, 10))

sub_gparams = []

for param in self.sub_params:

sub_gparams.append(T.clip(T.grad(cost, param[1]), -10, 10))

optimizer = eval(self.optimizer)

updates = optimizer(self.params, gparams, self.sub_params, sub_gparams, self.lr)
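The code clips every gradient to [-10, 10] and then hands the gradients to the optimizer named in modules["optimizer"], which is "adadelta". The repository's own adadelta function is not shown here, so the following is only a textbook AdaDelta step for a single scalar parameter, written as an assumption about the general technique. The names clip and adadelta_step and the values rho=0.95 and eps=1e-6 are illustrative, and how the real implementation uses the lr argument is not covered.

```python
import math


def clip(g, lo=-10.0, hi=10.0):
    """Clamp a scalar gradient to [lo, hi], like T.clip(grad, -10, 10)."""
    return max(lo, min(hi, g))


def adadelta_step(x, g, state, rho=0.95, eps=1e-6):
    """One textbook AdaDelta update for a scalar parameter x with gradient g."""
    # Running average of squared gradients.
    state["Eg2"] = rho * state["Eg2"] + (1 - rho) * g * g
    # Step size: ratio of RMS of past updates to RMS of gradients.
    dx = -math.sqrt(state["Edx2"] + eps) / math.sqrt(state["Eg2"] + eps) * g
    # Running average of squared updates.
    state["Edx2"] = rho * state["Edx2"] + (1 - rho) * dx * dx
    return x + dx


x = 1.0
state = {"Eg2": 0.0, "Edx2": 0.0}
for _ in range(10):
    g = clip(2.0 * x)              # gradient of f(x) = x**2
    x = adadelta_step(x, g, state)
print(x)                           # slightly below 1.0: x moves toward the minimum at 0
```

In Theano the same arithmetic is expressed symbolically, and the resulting (shared variable, new value) pairs are what gets passed as updates to theano.function.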

inputs = [self.xu, self.xi, self.y_rating, self.y_rat_vec, self.lvt_dict, self.y_rev_mark, self.y_rev_tf, self.y_sum_idx, self.y_sum_mark, self.y_sum_lvt, self.batch_size]

# define the inputs and outputs of the function, and then it is computed automatically?

self.train = theano.function(

inputs = inputs + [self.lr],

outputs = [cost, mse, nll, nllu, nlli, cce, mae, l2n, self.r_pred, self.y_sum_pred],

updates = updates,

on_unused_input = 'ignore')

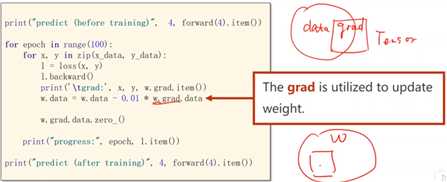

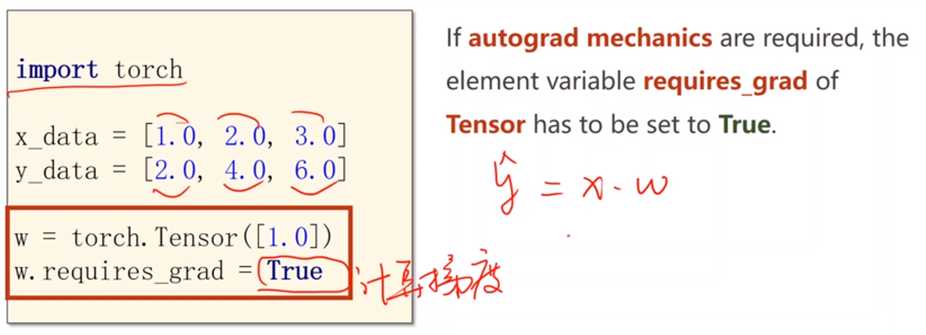

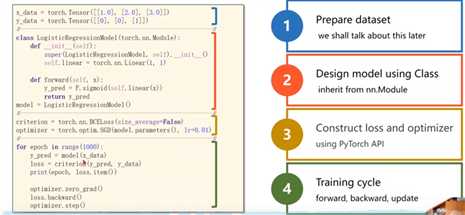

The weight w that we create is a Tensor. It holds w.data and w.grad.

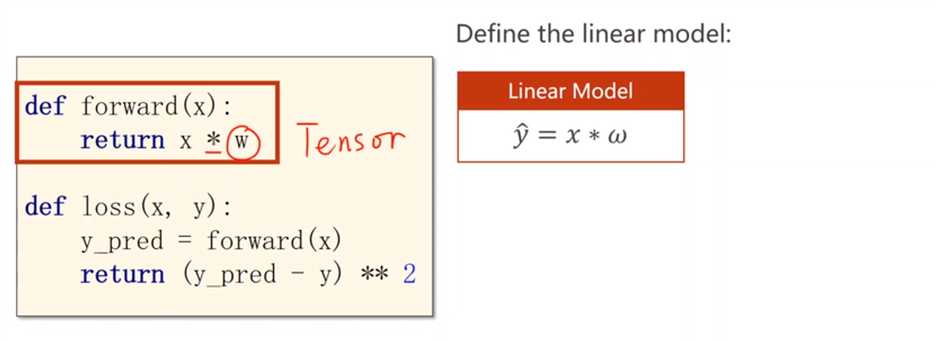

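The computation uses w, and any computation involving a tensor builds a dynamic computation graph, so x*w is a Tensor as well.

The forward pass returns the loss. Calling backward on it computes the gradients automatically and stores them in the tensors. The computation graph is then released, and the next loss computation builds a new one.

When updating the weights, do not operate on w.grad directly, because it is itself a tensor. Use w.grad.data instead. Plain-value operations on any Tensor have to go through its data, otherwise they build a computation graph.

Zero the gradients manually afterwards, otherwise the gradients of the next computation are added to the previous ones.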
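The point about zeroing can be checked without PyTorch. The sketch below uses a hypothetical Param class as a stand-in for a tensor with data and grad, and a hand-written backward for the loss (x*w - y)**2. It is not PyTorch code, only an imitation of the behavior that backward adds to the stored gradient instead of overwriting it.

```python
class Param:
    """Stand-in for a trainable tensor: a value plus an accumulated gradient."""

    def __init__(self, data):
        self.data = data
        self.grad = 0.0


def backward(w, x, y):
    """Add d/dw of (x*w - y)**2 to w.grad (accumulates instead of overwriting)."""
    w.grad += 2 * x * (x * w.data - y)


w = Param(1.0)
backward(w, x=1.0, y=2.0)    # grad = 2 * 1 * (1 - 2) = -2
backward(w, x=1.0, y=2.0)    # not zeroed in between, so the grad becomes -4
accumulated = w.grad

w.grad = 0.0                 # manual zeroing
backward(w, x=1.0, y=2.0)
fresh = w.grad               # -2 again, the gradient of this step only

w.data -= 0.01 * w.grad      # plain-value update, like w.data -= lr * w.grad.data
print(accumulated, fresh, w.data)
```

Without the zeroing step the second update would use twice the intended gradient.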

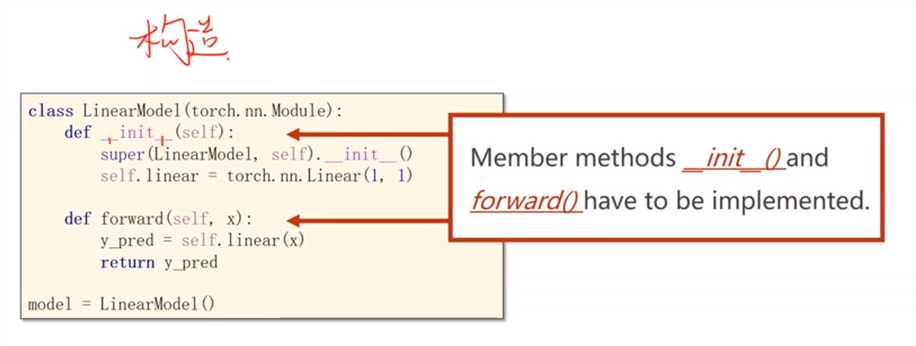

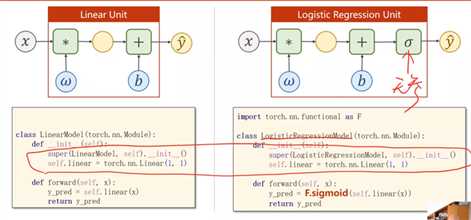

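nn.Module: construct your own neural network

loss: construct the loss function

sgd: the optimizer

These two functions (__init__ and forward) must be implemented, under exactly these names

There is no backward: a class derived from Module gets the backward pass automatically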

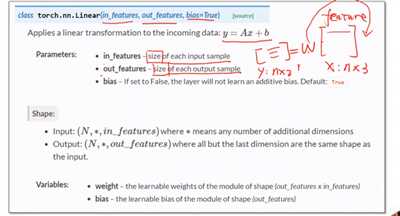

torch.nn.Linear(1,1) is an object that contains two tensors, w and b

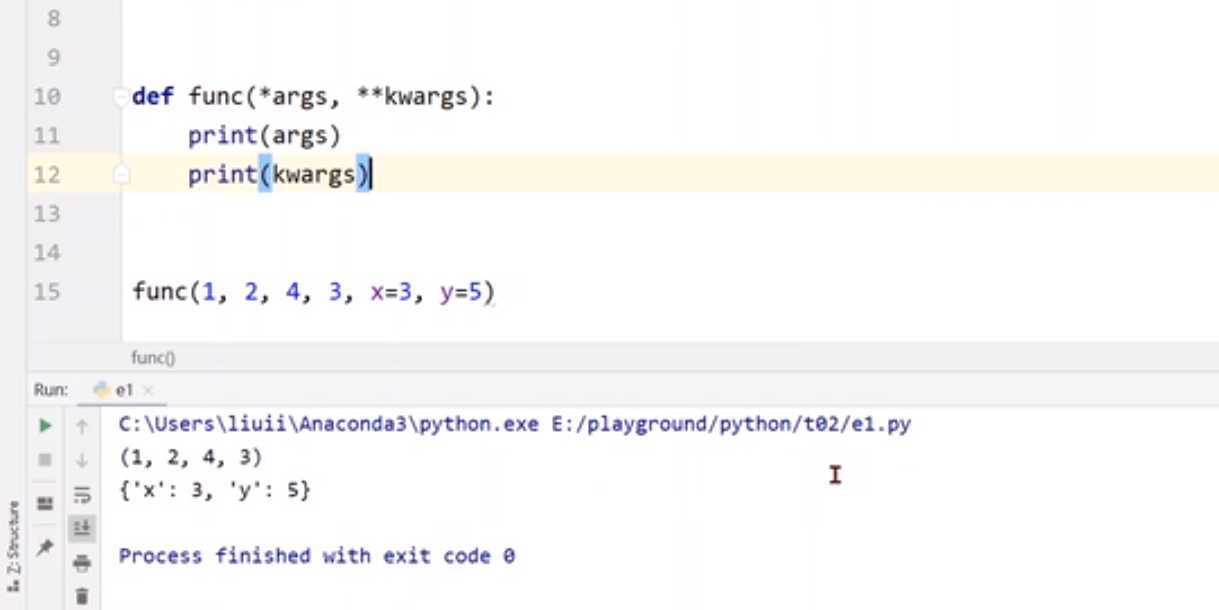

args and kwargs

__call__ calls forward internally, so once the model has been instantiated, calling model(x) is equivalent to passing x straight into forward
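The dispatch from model(x) to forward can be imitated in a few lines of plain Python. The Module class below is a made-up miniature, not torch.nn.Module. The real one does additional bookkeeping (hooks, for example) around the call to forward, and the *args and **kwargs simply pass whatever arguments the caller supplies through to forward.

```python
class Module:
    """Miniature stand-in: calling the object runs its forward method."""

    def __call__(self, *args, **kwargs):
        # *args collects positional arguments, **kwargs keyword arguments.
        return self.forward(*args, **kwargs)


class LinearModel(Module):
    def __init__(self, w, b):
        self.w = w
        self.b = b

    def forward(self, x):
        return self.w * x + self.b


model = LinearModel(w=2.0, b=1.0)
y = model(3.0)              # goes through __call__, which calls forward(3.0)
print(y)                    # 2.0 * 3.0 + 1.0 = 7.0
```

This is why the subclass only has to define forward under exactly that name: the base class looks it up when the object is called.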

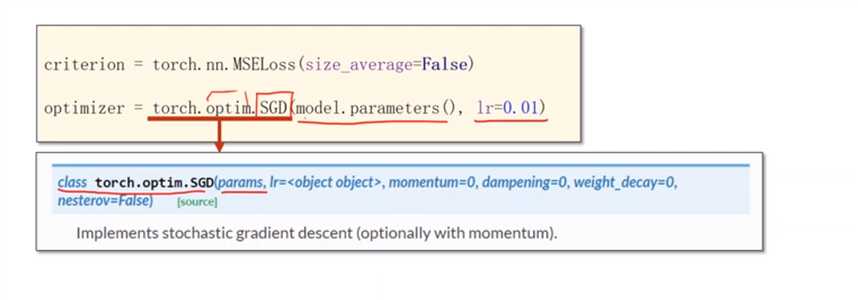

MSE loss function and SGD optimizer

model.parameters() returns the w and b that the model has already defined

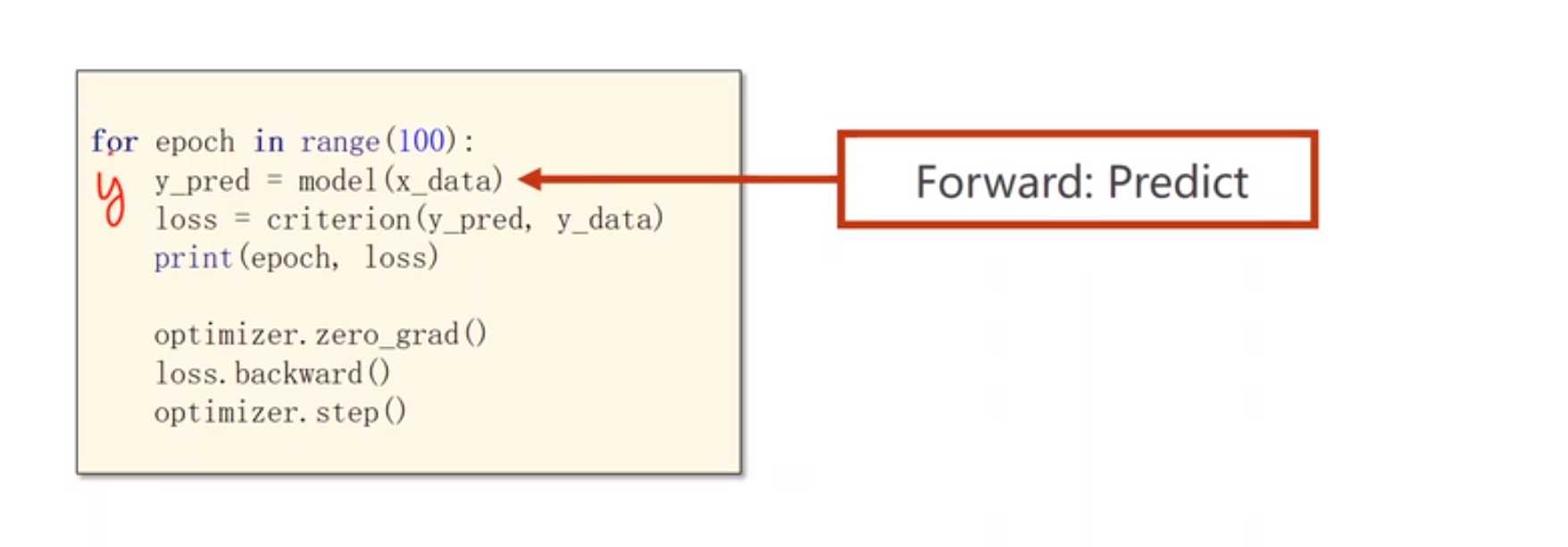

The training process

First compute the current prediction, then compute the loss, then call backward, and finally update the weights

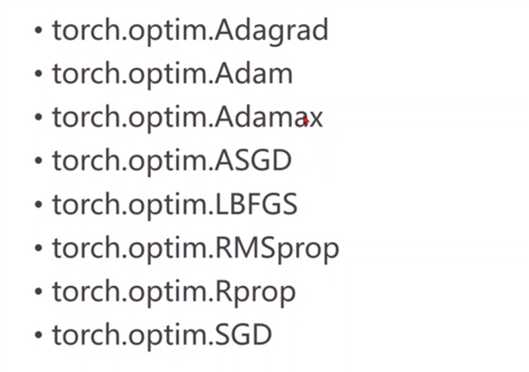

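Various optimizers

LogisticRegression solves classification problems

The sigmoid function is defined in the functional package

The basic building blocks are the same. The loss is computed with cross-entropy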
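For the logistic-regression notes, the two ingredients (sigmoid on top of a linear model, and cross-entropy as the loss) can be written out by hand. This is a plain-Python sketch with invented data and parameter values. In PyTorch the corresponding pieces would be a sigmoid applied to a linear layer and a binary cross-entropy loss, which are not used here.

```python
import math


def sigmoid(z):
    """Squash a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def bce(y_pred, y_true):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(y_pred, y_true):
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Hypothetical data: hours studied -> pass (1) or fail (0).
xs = [1.0, 2.0, 3.0]
ys = [0.0, 0.0, 1.0]
w, b = 1.0, -2.5                      # illustrative parameter values

y_pred = [sigmoid(w * x + b) for x in xs]
loss = bce(y_pred, ys)
print(y_pred, loss)
```

Compared with linear regression, only the output activation and the loss change. The forward, loss, backward, update cycle stays the same.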

Original: https://www.cnblogs.com/pppqq77799/p/13537923.html