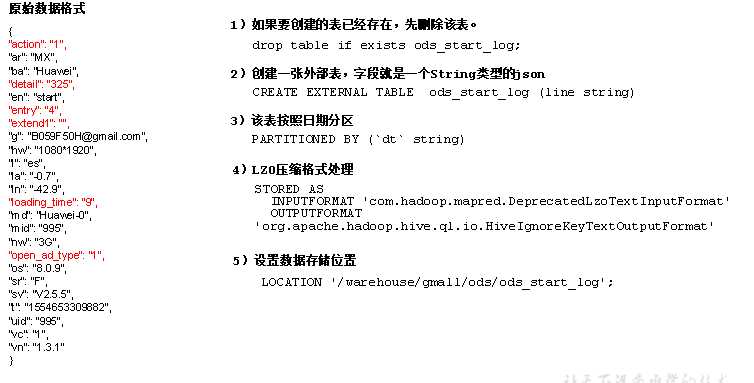

原始数据层,存放原始数据,直接加载原始日志、数据,数据保持原貌不做处理。

1.1 创建数据库

1)创建数据仓库目录,并修改所有者

sudo -u hdfs hadoop fs -mkdir /warehouse

sudo -u hdfs hadoop fs -chown hive:hive /warehouse

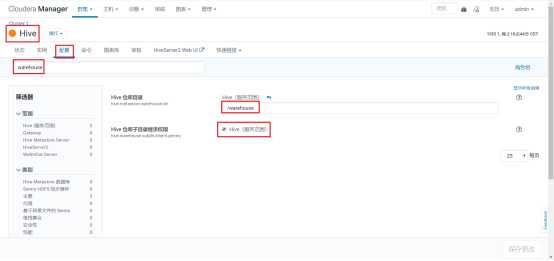

2)修改hive配置

2)启动Hive客户端(注意要以hive用户启动)

sudo -u hive hive

3)创建gmall数据库

hive (default)> create database gmall;

说明:如果数据库存在且有数据,需要强制删除时执行:drop database gmall cascade;

4)使用gmall数据库

hive (default)> use gmall;

1.2 创建启动日志表ods_start_log

以下为建表语句

hive (gmall)>

drop table if exists ods_start_log;

CREATE EXTERNAL TABLE ods_start_log (`line` string)

PARTITIONED BY (`dt` string)

STORED AS

INPUTFORMAT ‘com.hadoop.mapred.DeprecatedLzoTextInputFormat‘

OUTPUTFORMAT ‘org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat‘

LOCATION ‘/warehouse/gmall/ods/ods_start_log‘;

说明Hive的LZO压缩:https://cwiki.apache.org/confluence/display/Hive/LanguageManual+LZO

1)在hadoop102的/root/bin目录下创建脚本

[root@hadoop102 bin]$ vim ods_log.sh

在脚本中编写如下内容

#!/bin/bash # 定义变量方便修改 APP=gmall # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi echo "===日志日期为 $do_date===" sql=" load data inpath ‘/origin_data/gmall/log/topic_start/$do_date‘ into table "$APP".ods_start_log partition(dt=‘$do_date‘); " beeline -u "jdbc:hive2://hadoop102:10000/" -n hive -e "$sql"

说明1:

[ -n 变量值 ] 判断变量的值,是否为空

-- 变量的值,非空,返回true

-- 变量的值,为空,返回false

说明2:

查看date命令的使用,[root@hadoop102 ~]$ date --help

2)增加脚本执行权限

[root@hadoop102 bin]$ chmod 777 ods_log.sh

3)脚本使用

[root@hadoop102 module]$ ods_log.sh 2019-09-03

2.1 创建启动表

1)建表语句

hive (gmall)> drop table if exists dwd_start_log; CREATE EXTERNAL TABLE dwd_start_log( `mid_id` string, `user_id` string, `version_code` string, `version_name` string, `lang` string, `source` string, `os` string, `area` string, `model` string, `brand` string, `sdk_version` string, `gmail` string, `height_width` string, `app_time` string, `network` string, `lng` string, `lat` string, `entry` string, `open_ad_type` string, `action` string, `loading_time` string, `detail` string, `extend1` string ) PARTITIONED BY (dt string) location ‘/warehouse/gmall/dwd/dwd_start_log/‘;

hive (gmall)> drop table if exists dwd_start_log; CREATE EXTERNAL TABLE dwd_start_log( `mid_id` string, `user_id` string, `version_code` string, `version_name` string, `lang` string, `source` string, `os` string, `area` string, `model` string, `brand` string, `sdk_version` string, `gmail` string, `height_width` string, `app_time` string, `network` string, `lng` string, `lat` string, `entry` string, `open_ad_type` string, `action` string, `loading_time` string, `detail` string, `extend1` string ) PARTITIONED BY (dt string) location ‘/warehouse/gmall/dwd/dwd_start_log/‘;

hive (gmall)> drop table if exists dwd_start_log; CREATE EXTERNAL TABLE dwd_start_log( `mid_id` string, `user_id` string, `version_code` string, `version_name` string, `lang` string, `source` string, `os` string, `area` string, `model` string, `brand` string, `sdk_version` string, `gmail` string, `height_width` string, `app_time` string, `network` string, `lng` string, `lat` string, `entry` string, `open_ad_type` string, `action` string, `loading_time` string, `detail` string, `extend1` string ) PARTITIONED BY (dt string) location ‘/warehouse/gmall/dwd/dwd_start_log/‘;

2.2 DWD层启动表加载数据脚本

1)在hadoop102的/root/bin目录下创建脚本

[root@hadoop102 bin]$ vim dwd_start_log.sh

在脚本中编写如下内容

#!/bin/bash # 定义变量方便修改 APP=gmall # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table "$APP".dwd_start_log PARTITION (dt=‘$do_date‘) select get_json_object(line,‘$.mid‘) mid_id, get_json_object(line,‘$.uid‘) user_id, get_json_object(line,‘$.vc‘) version_code, get_json_object(line,‘$.vn‘) version_name, get_json_object(line,‘$.l‘) lang, get_json_object(line,‘$.sr‘) source, get_json_object(line,‘$.os‘) os, get_json_object(line,‘$.ar‘) area, get_json_object(line,‘$.md‘) model, get_json_object(line,‘$.ba‘) brand, get_json_object(line,‘$.sv‘) sdk_version, get_json_object(line,‘$.g‘) gmail, get_json_object(line,‘$.hw‘) height_width, get_json_object(line,‘$.t‘) app_time, get_json_object(line,‘$.nw‘) network, get_json_object(line,‘$.ln‘) lng, get_json_object(line,‘$.la‘) lat, get_json_object(line,‘$.entry‘) entry, get_json_object(line,‘$.open_ad_type‘) open_ad_type, get_json_object(line,‘$.action‘) action, get_json_object(line,‘$.loading_time‘) loading_time, get_json_object(line,‘$.detail‘) detail, get_json_object(line,‘$.extend1‘) extend1 from "$APP".ods_start_log where dt=‘$do_date‘; " beeline -u "jdbc:hive2://hadoop102:10000/" -n hive -e "$sql"

2)增加脚本执行权限

[root@hadoop102 bin]$ chmod 777 dwd_start_log.sh

3)脚本使用

[root@hadoop102 module]$ dwd_start_log.sh 2019-09-03

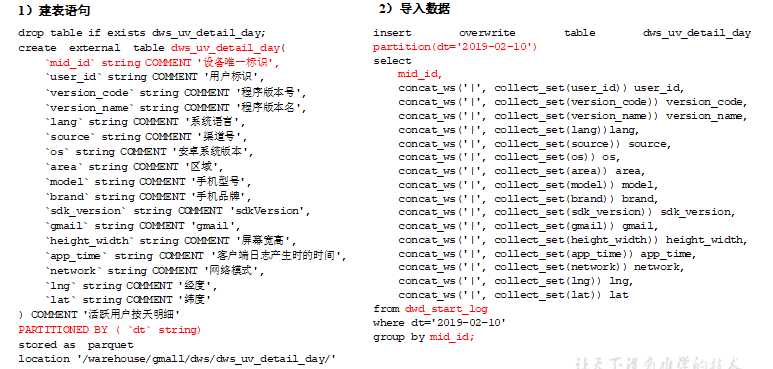

目标:统计当日、当周、当月活动的每个设备明细

3.1 每日活跃设备明细

1)建表语句

hive (gmall)> drop table if exists dws_uv_detail_day; create external table dws_uv_detail_day ( `mid_id` string COMMENT ‘设备唯一标识‘, `user_id` string COMMENT ‘用户标识‘, `version_code` string COMMENT ‘程序版本号‘, `version_name` string COMMENT ‘程序版本名‘, `lang` string COMMENT ‘系统语言‘, `source` string COMMENT ‘渠道号‘, `os` string COMMENT ‘安卓系统版本‘, `area` string COMMENT ‘区域‘, `model` string COMMENT ‘手机型号‘, `brand` string COMMENT ‘手机品牌‘, `sdk_version` string COMMENT ‘sdkVersion‘, `gmail` string COMMENT ‘gmail‘, `height_width` string COMMENT ‘屏幕宽高‘, `app_time` string COMMENT ‘客户端日志产生时的时间‘, `network` string COMMENT ‘网络模式‘, `lng` string COMMENT ‘经度‘, `lat` string COMMENT ‘纬度‘ ) partitioned by(dt string) stored as parquet location ‘/warehouse/gmall/dws/dws_uv_detail_day‘ ;

1)在hadoop102的/root/bin目录下创建脚本

[root@hadoop102 bin]$ vim dws_log.sh

在脚本中编写如下内容

#!/bin/bash # 定义变量方便修改 APP=gmall # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table "$APP".dws_uv_detail_day partition(dt=‘$do_date‘) select mid_id, concat_ws(‘|‘, collect_set(user_id)) user_id, concat_ws(‘|‘, collect_set(version_code)) version_code, concat_ws(‘|‘, collect_set(version_name)) version_name, concat_ws(‘|‘, collect_set(lang)) lang, concat_ws(‘|‘, collect_set(source)) source, concat_ws(‘|‘, collect_set(os)) os, concat_ws(‘|‘, collect_set(area)) area, concat_ws(‘|‘, collect_set(model)) model, concat_ws(‘|‘, collect_set(brand)) brand, concat_ws(‘|‘, collect_set(sdk_version)) sdk_version, concat_ws(‘|‘, collect_set(gmail)) gmail, concat_ws(‘|‘, collect_set(height_width)) height_width, concat_ws(‘|‘, collect_set(app_time)) app_time, concat_ws(‘|‘, collect_set(network)) network, concat_ws(‘|‘, collect_set(lng)) lng, concat_ws(‘|‘, collect_set(lat)) lat from "$APP".dwd_start_log where dt=‘$do_date‘ group by mid_id; " beeline -u "jdbc:hive2://hadoop102:10000/" -n hive -e "$sql"

#!/bin/bash # 定义变量方便修改 APP=gmall # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table "$APP".dws_uv_detail_day partition(dt=‘$do_date‘) select mid_id, concat_ws(‘|‘, collect_set(user_id)) user_id, concat_ws(‘|‘, collect_set(version_code)) version_code, concat_ws(‘|‘, collect_set(version_name)) version_name, concat_ws(‘|‘, collect_set(lang)) lang, concat_ws(‘|‘, collect_set(source)) source, concat_ws(‘|‘, collect_set(os)) os, concat_ws(‘|‘, collect_set(area)) area, concat_ws(‘|‘, collect_set(model)) model, concat_ws(‘|‘, collect_set(brand)) brand, concat_ws(‘|‘, collect_set(sdk_version)) sdk_version, concat_ws(‘|‘, collect_set(gmail)) gmail, concat_ws(‘|‘, collect_set(height_width)) height_width, concat_ws(‘|‘, collect_set(app_time)) app_time, concat_ws(‘|‘, collect_set(network)) network, concat_ws(‘|‘, collect_set(lng)) lng, concat_ws(‘|‘, collect_set(lat)) lat from "$APP".dwd_start_log where dt=‘$do_date‘ group by mid_id; " beeline -u "jdbc:hive2://hadoop102:10000/" -n hive -e "$sql"

2)增加脚本执行权限

[root@hadoop102 bin]$ chmod 777 dws_log.sh

3)脚本使用

[root@hadoop102 module]$ dws_log.sh 2019-09-03

目标:当日活跃设备数

4.1 活跃设备数

1)建表语句

hive (gmall)> drop table if exists ads_uv_count; create external table ads_uv_count( `dt` string COMMENT ‘统计日期‘, `day_count` bigint COMMENT ‘当日用户数量‘ ) COMMENT ‘活跃设备数‘ row format delimited fields terminated by ‘\t‘ location ‘/warehouse/gmall/ads/ads_uv_count/‘ ;

1)在hadoop102的/root/bin目录下创建脚本

[root@hadoop102 bin]$ vim ads_uv_log.sh

在脚本中编写如下内容

#!/bin/bash # 定义变量方便修改 APP=gmall # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert into table "$APP".ads_uv_count select ‘$do_date‘ dt, daycount.ct from ( select ‘$do_date‘ dt, count(*) ct from "$APP".dws_uv_detail_day where dt=‘$do_date‘ )daycount; " beeline -u "jdbc:hive2://hadoop102:10000/" -n hive -e "$sql"

2)增加脚本执行权限

[root@hadoop102 bin]$ chmod 777 ads_uv_log.sh

3)脚本使用

[root@hadoop102 module]$ ads_uv_log.sh 2019-09-03

大数据实战(七十三):电商数仓(五十七)数仓之用户行为数仓搭建(二)ODS层,DWD层,DWS层,ADS层

原文:https://www.cnblogs.com/qiu-hua/p/13574749.html