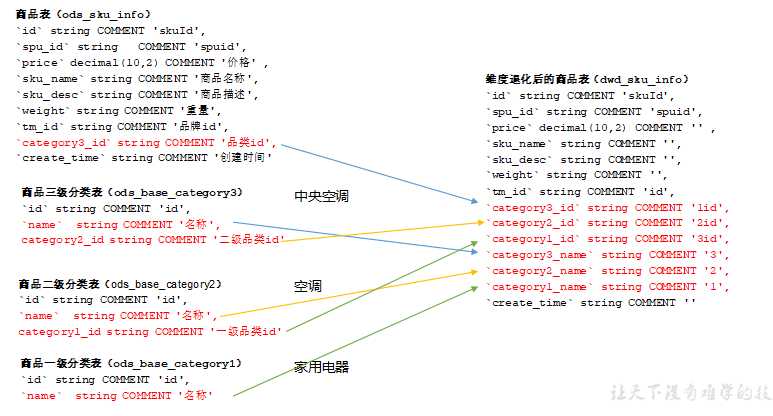

对ODS层数据进行判空过滤。对商品分类表进行维度退化(降维)。

hive (gmall)> drop table if exists dwd_order_info; create external table dwd_order_info ( `id` string COMMENT ‘‘, `total_amount` decimal(10,2) COMMENT ‘‘, `order_status` string COMMENT ‘ 1 2 3 4 5‘, `user_id` string COMMENT ‘id‘ , `payment_way` string COMMENT ‘‘, `out_trade_no` string COMMENT ‘‘, `create_time` string COMMENT ‘‘, `operate_time` string COMMENT ‘‘ ) PARTITIONED BY ( `dt` string) stored as parquet location ‘/warehouse/gmall/dwd/dwd_order_info/‘ ;

hive (gmall)> drop table if exists dwd_order_detail; create external table dwd_order_detail( `id` string COMMENT ‘‘, `order_id` decimal(10,2) COMMENT ‘‘, `user_id` string COMMENT ‘id‘ , `sku_id` string COMMENT ‘id‘, `sku_name` string COMMENT ‘‘, `order_price` string COMMENT ‘‘, `sku_num` string COMMENT ‘‘, `create_time` string COMMENT ‘‘ ) PARTITIONED BY (`dt` string) stored as parquet location ‘/warehouse/gmall/dwd/dwd_order_detail/‘ ;

hive (gmall)> drop table if exists dwd_user_info; create external table dwd_user_info( `id` string COMMENT ‘id‘, `name` string COMMENT ‘‘, `birthday` string COMMENT ‘‘ , `gender` string COMMENT ‘‘, `email` string COMMENT ‘‘, `user_level` string COMMENT ‘‘, `create_time` string COMMENT ‘‘ ) PARTITIONED BY (`dt` string) stored as parquet location ‘/warehouse/gmall/dwd/dwd_user_info/‘ ;

4 创建支付流水表

hive (gmall)> drop table if exists `dwd_payment_info`; create external table `dwd_payment_info`( `id` bigint COMMENT ‘‘, `out_trade_no` string COMMENT ‘‘, `order_id` string COMMENT ‘‘, `user_id` string COMMENT ‘‘, `alipay_trade_no` string COMMENT ‘‘, `total_amount` decimal(16,2) COMMENT ‘‘, `subject` string COMMENT ‘‘, `payment_type` string COMMENT ‘‘, `payment_time` string COMMENT ‘‘ ) PARTITIONED BY ( `dt` string) stored as parquet location ‘/warehouse/gmall/dwd/dwd_payment_info/‘ ;

hive (gmall)> drop table if exists dwd_sku_info; create external table dwd_sku_info( `id` string COMMENT ‘skuId‘, `spu_id` string COMMENT ‘spuid‘, `price` decimal(10,2) COMMENT ‘‘ , `sku_name` string COMMENT ‘‘, `sku_desc` string COMMENT ‘‘, `weight` string COMMENT ‘‘, `tm_id` string COMMENT ‘id‘, `category3_id` string COMMENT ‘1id‘, `category2_id` string COMMENT ‘2id‘, `category1_id` string COMMENT ‘3id‘, `category3_name` string COMMENT ‘3‘, `category2_name` string COMMENT ‘2‘, `category1_name` string COMMENT ‘1‘, `create_time` string COMMENT ‘‘ ) PARTITIONED BY ( `dt` string) stored as parquet location ‘/warehouse/gmall/dwd/dwd_sku_info/‘ ;

1)在/root/bin目录下创建脚本dwd_db.sh

[root@hadoop102 bin]$ vim dwd_db.sh

在脚本中填写如下内容

#!/bin/bash # 定义变量方便修改 APP=gmall # 如果是输入的日期按照取输入日期;如果没输入日期取当前时间的前一天 if [ -n "$1" ] ;then do_date=$1 else do_date=`date -d "-1 day" +%F` fi sql=" set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table "$APP".dwd_order_info partition(dt) select * from "$APP".ods_order_info where dt=‘$do_date‘ and id is not null; insert overwrite table "$APP".dwd_order_detail partition(dt) select * from "$APP".ods_order_detail where dt=‘$do_date‘ and id is not null; insert overwrite table "$APP".dwd_user_info partition(dt) select * from "$APP".ods_user_info where dt=‘$do_date‘ and id is not null; insert overwrite table "$APP".dwd_payment_info partition(dt) select * from "$APP".ods_payment_info where dt=‘$do_date‘ and id is not null; insert overwrite table "$APP".dwd_sku_info partition(dt) select sku.id, sku.spu_id, sku.price, sku.sku_name, sku.sku_desc, sku.weight, sku.tm_id, sku.category3_id, c2.id category2_id , c1.id category1_id, c3.name category3_name, c2.name category2_name, c1.name category1_name, sku.create_time, sku.dt from "$APP".ods_sku_info sku join "$APP".ods_base_category3 c3 on sku.category3_id=c3.id join "$APP".ods_base_category2 c2 on c3.category2_id=c2.id join "$APP".ods_base_category1 c1 on c2.category1_id=c1.id where sku.dt=‘$do_date‘ and c2.dt=‘$do_date‘ and c3.dt=‘$do_date‘ and c1.dt=‘$do_date‘ and sku.id is not null; " beeline -u "jdbc:hive2://hadoop102:10000/" -n hive -e "$sql"

2)增加脚本执行权限

[root@hadoop102 bin]$ chmod 777 dwd_db.sh

3)采用脚本导入数据

[root@hadoop102 bin]$ dwd_db.sh 2019-02-10

大数据实战(七十六):电商数仓(六十)数仓之业务数仓搭建(三)DWD层

原文:https://www.cnblogs.com/qiu-hua/p/13576152.html