The Apache Hive ? data warehouse software facilitates reading, writing, and managing large datasets residing in distributed storage using SQL. Structure can be projected onto data already in storage. A command line tool and JDBC driver are provided to connect users to Hive.

翻译过来大意是:Apache Hive数据仓库软件促进了使用SQL读取、写入和管理分布存储中的大型数据集。结构可以投射到存储中已经存在的数据上。并提供了命令行工具和JDBC驱动程序来连接用户和Hive。

(1)迭代式算法无法表达

(2)数据挖掘方面不擅长,由于MapReduce数据处理流程的限制,效率更高的算法却无法实现。

(1)Hive自动生成的MapReduce作业,通常情况下不够智能化

(2)Hive调优比较困难,粒度较粗

Note: 在安装Hive之前,默认hadoop集群已经正常运行。Hive默认安装在NameNode节点上,不要在DataNode节点上安装。本文档安装的Hive版本基于2.3.7。

[root@k8s-master ~]# cd /opt

[root@k8s-master opt]# wget https://mirrors.tuna.tsinghua.edu.cn/apache/hive/hive-2.3.7/apache-hive-2.3.7-bin.tar.gz

[root@k8s-master opt]# tar -zxf apache-hive-2.3.7-bin.tar.gz

[root@k8s-master opt]# mv apache-hive-2.3.7-bin hive-2.3.7

[root@k8s-master opt]# ln -s hive-2.3.7 hive

[root@k8s-master ~]# vim /root/.bash_profile

#添加以下语句

export HIVE_HOME=/opt/hive

export HIVE_CONF_DIR=$HIVE_HOME/conf

PATH=$HIVE_HOME/bin:$PATH

[root@k8s-master ~]# source /root/.bash_profile

mysql> create database metastore;

mysql> set global read_only=0;

mysql> grant all on metastore.* to hive@‘%‘ identified by ‘123456‘;

mysql> grant all on metastore.* to hive@‘localhost‘ identified by ‘123456‘;

mysql> flush privileges;

PS: 如果忘记了mysql的登录密码,可以使用以下方法进行修改

[root@k8s-master ~]# vim /etc/my.cnf

在[mysqld]后面最后一行添加“skip-grant-tables”用来跳过密码验证的过程,如下所示

[mysqld]

......

log_bin=mysql-bin

log_bin_index=mysql-bin.index

binlog_format=row

skip-grant-tables

[root@k8s-master ~]# service mysqld restart

接下来的操作:

[root@k8s-master ~]# mysql -u root -p

直接按enter键,便可以不用密码登录到数据库当中

mysql> update user set password=password("你的新密码") where user="root";

mysql> flush privileges;

mysql> quit

如果遇到以下问题:

ERROR 1290 (HY000): The MySQL server is running with the --skip-grant-tables option so it cannot execute this statement

解决方法:

mysql> flush privileges;

继续执行修改密码操作

最后,使用vim /etc/my.cnf,去掉刚才添加的skip-grant-tables内容,然后重启MySQL即可。

# 操作之前,将配置文件重命名一下

[root@k8s-master ~]# cd /opt/hive/conf

[root@k8s-master conf]# cp hive-default.xml.template hive-site.xml

[root@k8s-master conf]# cp hive-env.sh.template hive-env.sh

[root@k8s-master conf]# cp hive-log4j2.properties.template hive-log4j2.properties

[root@k8s-master conf]# cp hive-exec-log4j2.properties.template hive-exec-log4j2.properties

# 修改hive-env.sh文件

[root@k8s-master conf]# vim hive-env.sh

# 放开以下内容

# Set HADOOP_HOME to point to a specific hadoop install directory

export HADOOP_HOME=/opt/hadoop

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/opt/hive/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=/opt/hive/lib

export JAVA_HOME=/opt/jdk1.8.0_161

export HIVE_HOME=/opt/hive

[root@k8s-master ~]# hadoop dfs -mkdir -p /user/hive/warehouse

[root@k8s-master ~]# hadoop dfs -mkdir -p /user/hive/tmp

[root@k8s-master ~]# hadoop dfs -mkdir -p /user/hive/log

[root@k8s-master ~]# hadoop dfs -chmod -R 777 /user/hive/warehouse

[root@k8s-master ~]# hadoop dfs -chmod -R 777 /user/hive/tmp

[root@k8s-master ~]# hadoop dfs -chmod -R 777 /user/hive/log

将 hive-site.xml 文件中的 ${system:java.io.tmpdir} 替换为hive的本地临时目录,如果该目录不存在,需要先进行创建,并且赋予读写权限,我这边创建的临时目录是/opt/hive/tmp

[root@k8s-master ~]# mkdir -p /opt/hive/tmp

[root@k8s-master ~]# chmod 755 /opt/hive/tmp

[root@k8s-master ~]# cd /opt/hive/conf

# 在vim命令模式下执行如下命令完成替换

:%s#${system:java.io.tmpdir}#/opt/hive/tmp#g

[root@k8s-master ~]# cd /opt/hive/conf

[root@k8s-master conf]# vim hive-site.xml

:%s#${system:user.name}#root#g

hive-site.xml中与mysql相关的配置,如下所示:

| 属性名称 | 描述 |

|---|---|

| javax.jdo.option.ConnectionDriverName | 数据库的驱动类名称 |

| javax.jdo.option.ConnectionURL | 数据库的JDBC连接地址 |

| javax.jdo.option.ConnectionUserName | 连接数据库所使用的用户名 |

| javax.jdo.option.ConnectionPassword | 连接数据库所使用的密码 |

在hive-site.xm配置文件中修改以上4项配置:

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://k8s-master:3306/hive?createDatabaseIfNotExist=true</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

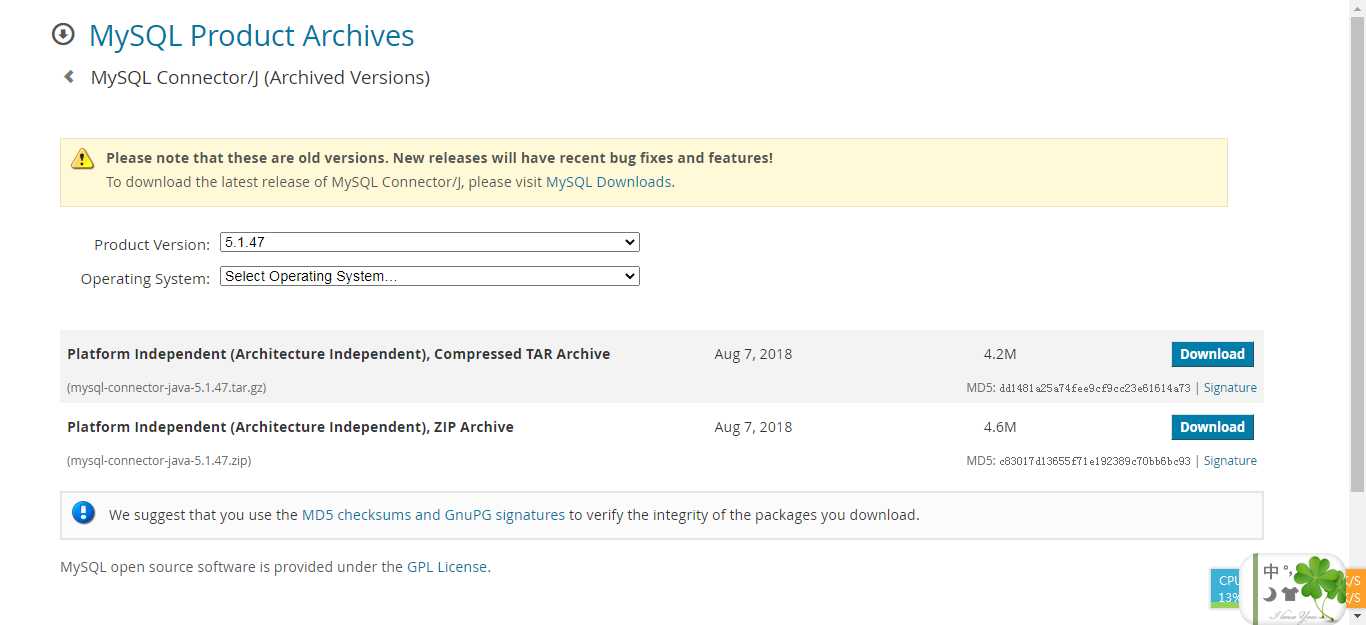

随后,将下载的mysql的jdbc驱动jar包,放到hive的lib目录下

[root@k8s-master opt]# tar -zxf mysql-connector-java-5.1.47.tar.gz

[root@k8s-master opt]# cd mysql-connector-java-5.1.47

[root@k8s-master mysql-connector-java-5.1.47]# cp mysql-connector-java-5.1.47.jar /opt/hive/lib

[root@k8s-master ~]# cd /opt/hive/bin

[root@k8s-master ~]# schematool -initSchema -dbType mysql

随后会生成一些连接mysql的初始化信息

[root@k8s-master ~]# cd /opt/hive/bin

# 使用 hive 命令启动Hive

[root@k8s-master bin]# ./hive

which: no hbase in (/opt/hive/bin:/opt/jdk1.8.0_161/bin:/opt/jdk1.8.0_161/bin:/usr/lib64/qt-3.7/bin:/opt/hive/bin:/opt/bin:/usr/bin:/opt/sbin:/usr/sbin:/opt/zookeeper-3.4.12/bin:/home/hadoop/.local/bin:/home/hadoop/bin:/opt/hadoop-2.7.5/bin:/opt/hadoop-2.7.5/sbin:/home/hadoop/.local/bin:/home/hadoop/bin:/home/hadoop/.local/bin:/home/hadoop/bin:/opt/hadoop-2.7.5/bin:/opt/hadoop-2.7.5/sbin:/opt/zookeeper-3.4.12/bin:/home/hadoop/.local/bin:/home/hadoop/bin:/opt/hadoop-2.7.5/bin:/opt/hadoop-2.7.5/sbin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop-2.7.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/opt/hive-2.3.7/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

# 测试下hive能否正常使用

hive> show databases;

OK

default

Time taken: 3.451 seconds, Fetched: 2 row(s)

问题定位: hive的启动脚本默认寻找了hbase的Path,我这边没装该模块,可以注释掉。

解决方法:

[root@k8s-master ~]# cd /opt/hive/bin

[root@k8s-master ~]# vim hive

#注释掉以下的语句

#if [ "$SKIP_HBASECP" = false ]; then

# # HBase detection. Need bin/hbase and a conf dir for building classpath entries.

# # Start with BigTop defaults for HBASE_HOME and HBASE_CONF_DIR.

# HBASE_HOME=${HBASE_HOME:-"/usr/lib/hbase"}

# HBASE_CONF_DIR=${HBASE_CONF_DIR:-"/etc/hbase/conf"}

# if [[ ! -d $HBASE_CONF_DIR ]] ; then

# # not explicitly set, nor in BigTop location. Try looking in HBASE_HOME.

# HBASE_CONF_DIR="$HBASE_HOME/conf"

# fi

# # look for the hbase script. First check HBASE_HOME and then ask PATH.

# if [[ -e $HBASE_HOME/bin/hbase ]] ; then

# HBASE_BIN="$HBASE_HOME/bin/hbase"

# fi

# HBASE_BIN=${HBASE_BIN:-"$(which hbase)"}

# perhaps we‘ve located HBase. If so, include its details on the classpath

# if [[ -n $HBASE_BIN ]] ; then

# # exclude ZK, PB, and Guava (See HIVE-2055)

# # depends on HBASE-8438 (hbase-0.94.14+, hbase-0.96.1+) for `hbase mapredcp` command

# for x in $($HBASE_BIN mapredcp 2>> ${STDERR} | tr ‘:‘ ‘\n‘) ; do

# if [[ $x == *zookeeper* || $x == *protobuf-java* || $x == *guava* ]] ; then

# continue

# fi

# # TODO: should these should be added to AUX_PARAM as well?

# export HADOOP_CLASSPATH="${HADOOP_CLASSPATH}:${x}"

# done

# fi

#fi

# --skiphbasecp)

# SKIP_HBASECP=true

# shift

# ;;

问题定位: 控制台提示"不建议不使用服务器身份验证建立SSL连接。产生的原因是使用JDBC连接MySQL服务器时没有设置useSSL的参数。" 该警告信息不处理的话,会输出太多无用的信息。

解决方案:

可以设置useSSL=false,如下所示

[root@k8s-master ~]# cd /opt/hive/conf

[root@k8s-master conf]# vim hive-site.xml

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://k8s-master:3306/hive?createDatabaseIfNotExist=true&characterEncoding=utf8&useSSL=false</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

参考文章链接:https://blog.csdn.net/pengjunlee/article/details/81607890

华为云服务器基于hadoop2.7.5安装hive2.3.7

原文:https://www.cnblogs.com/hyethebest/p/13585968.html