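| IP address | Hostname | OS | Disks |
| --- | --- | --- | --- |
| 172.21.16.8 | ceph1 | CentOS 7.6 | 3 × 10G |
| 172.21.16.10 | ceph2 | CentOS 7.6 | 3 × 10G |
| 172.21.16.11 | ceph3 | CentOS 7.6 | 3 × 10G |

Make sure the firewall and SELinux are turned off on all three machines.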
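The post does not show the commands for that step. Here is a minimal sketch for CentOS 7. It assumes firewalld is the active firewall and the stock `/etc/selinux/config` layout. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: turn off firewalld and SELinux on one node (run as root on ceph1, ceph2 and ceph3).

# Rewrite the SELINUX=... line in the given config file so SELinux stays disabled after a reboot.
disable_selinux_config() {
    local conf="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Stop firewalld now and keep it from starting at boot, then switch SELinux to
# permissive for the running system (it is only fully disabled after a reboot).
disable_firewall_and_selinux() {
    systemctl stop firewalld
    systemctl disable firewalld
    setenforce 0 || true   # exits non-zero if SELinux is already disabled
    disable_selinux_config /etc/selinux/config
}
```

Run `disable_firewall_and_selinux` as root on each node. The `SELINUXTYPE=` line is left untouched because the pattern requires `=` directly after `SELINUX`.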

Synchronize the clocks with NTP. Run on all three machines:

```
[root@ceph1 ~]# yum -y install ntpdate ntp
[root@ceph1 ~]# ntpdate cn.ntp.org.cn
[root@ceph1 ~]# systemctl restart ntpd && systemctl enable ntpd
```

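Ceph is best deployed with a dedicated user created for the purpose, not `ceph` or `root`. The `ceph` user is reserved for the Ceph daemons themselves. Run on all three machines: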

```
[root@ceph1 ~]# useradd cephadmin
[root@ceph1 ~]# echo "cephadmin" | passwd --stdin cephadmin
[root@ceph1 ~]# echo "cephadmin ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephadmin
[root@ceph1 ~]# chmod 0440 /etc/sudoers.d/cephadmin
```

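If `/etc/sudoers` contains `Defaults requiretty`, comment it out so that ceph-deploy can run `sudo` over SSH without a TTY:

```
[root@ceph1 ~]# sed -i 's/^Defaults\s\+requiretty/#&/' /etc/sudoers
```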

Set the hostname on each of the three machines:

```
# first machine
[root@ceph1 ~]# hostnamectl set-hostname ceph1
# second machine
[root@ceph2 ~]# hostnamectl set-hostname ceph2
# third machine
[root@ceph3 ~]# hostnamectl set-hostname ceph3
```

Add the three nodes to `/etc/hosts` on all three machines:

```
[cephadmin@ceph1 ~]$ cat /etc/hosts
172.21.16.8  ceph1
172.21.16.10 ceph2
172.21.16.11 ceph3
```
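You can also write these entries with a small script instead of editing the file by hand. The helper below is idempotent: it skips any hostname that already has an entry. The function names are hypothetical, and the addresses are the ones from the host table at the top.

```shell
#!/usr/bin/env bash
# Sketch: append the cluster's address/name pairs to a hosts file, skipping any
# hostname that already has an entry, so the step can be re-run safely.

add_host_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    # Match the hostname as a whole whitespace-separated field on a non-comment line.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    add_host_entry "$hosts_file" 172.21.16.8  ceph1
    add_host_entry "$hosts_file" 172.21.16.10 ceph2
    add_host_entry "$hosts_file" 172.21.16.11 ceph3
}
```

Run `add_cluster_hosts` as root on every node. Because whole fields are matched, `ceph1` does not match a `ceph10` entry.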

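Set up passwordless SSH from the deploy node to every node as `cephadmin`. Run only on the deploy node (ceph1):

```
[cephadmin@ceph1 ~]$ ssh-keygen
[cephadmin@ceph1 ~]$ ssh-copy-id cephadmin@ceph1
[cephadmin@ceph1 ~]$ ssh-copy-id cephadmin@ceph2
[cephadmin@ceph1 ~]$ ssh-copy-id cephadmin@ceph3
```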
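ceph-deploy logs in as `cephadmin` and runs `sudo` on every node without a terminal, so it is worth checking both before going further. Here is a sketch; the `check_nodes` name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the deploy node can reach every node over SSH without a password
# and run sudo there without a password or a TTY, which is what ceph-deploy relies on.

check_nodes() {
    local user="$1"; shift
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password;
        # sudo -n fails instead of prompting if passwordless sudo is not configured.
        if ssh -o BatchMode=yes "${user}@${host}" sudo -n true; then
            echo "${host}: ok"
        else
            echo "${host}: FAILED" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

Usage: `check_nodes cephadmin ceph1 ceph2 ceph3`. An error of the form `sorry, you must have a tty to run sudo` means `requiretty` is still active on that node.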

Install the Ceph packages. Run on all three machines:

```
[cephadmin@ceph1 ~]$ sudo yum install -y ceph ceph-radosgw
```

Run only on the deploy node (ceph1):

```
[cephadmin@ceph1 ~]$ sudo yum install -y ceph-deploy python-pip
```

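Create the cluster configuration on the deploy node (ceph1):

```
[cephadmin@ceph1 ~]$ mkdir my-cluster
[cephadmin@ceph1 ~]$ cd my-cluster
[cephadmin@ceph1 my-cluster]$ ceph-deploy new ceph1 ceph2 ceph3
[cephadmin@ceph1 my-cluster]$ vim ceph.conf
```

Add the public and cluster networks to the `[global]` section of `ceph.conf`:

```
[global]
.....
public network = 172.21.16.0/20
cluster network = 172.21.16.0/20
```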
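`172.21.16.0/20` (netmask 255.255.240.0) covers 172.21.16.0 through 172.21.31.255, so all three node addresses from the host table fall inside it. Below is a pure-bash sketch for checking this before the monitors are created. The function names are hypothetical, and only IPv4 is handled.

```shell
#!/usr/bin/env bash
# Sketch: check that an IPv4 address lies inside a CIDR such as the "public network".

# Convert a dotted-quad address to a 32-bit integer (10# avoids octal parsing of "08").
ip_to_int() {
    local IFS=.
    local -a o
    read -r -a o <<< "$1"
    echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Returns 0 if address $2 is inside CIDR $1 (e.g. 172.21.16.0/20), 1 otherwise.
cidr_contains() {
    local net="${1%/*}" bits="${1#*/}"
    local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$2") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

Usage: `for ip in 172.21.16.8 172.21.16.10 172.21.16.11; do cidr_contains 172.21.16.0/20 "$ip" && echo "$ip ok"; done`. An address such as 172.21.0.8 would fail the check, because its third octet (0) falls outside the 16 to 31 range that the /20 allows.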

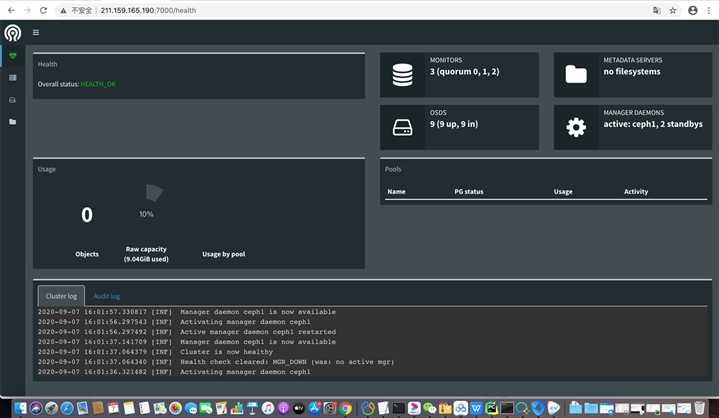

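Then, still in `my-cluster` on the deploy node:

```
[cephadmin@ceph1 my-cluster]$ ceph-deploy mon create-initial       # deploy the initial monitors and gather all keys
[cephadmin@ceph1 my-cluster]$ ceph-deploy admin ceph1 ceph2 ceph3  # copy the config and admin keyring to every node
[cephadmin@ceph1 my-cluster]$ sudo chown -R cephadmin:cephadmin /etc/ceph  # run this on all three machines
```

Once the OSDs and mgr daemons from the following steps are also in place, `ceph -s` reports a healthy cluster:

```
[cephadmin@ceph1 my-cluster]$ ceph -s
  cluster:
    id:     d64f9fbf-b948-4e50-84f0-b30073161ef6
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph1,ceph2,ceph3
    mgr: ceph1(active), standbys: ceph2, ceph3
    osd: 9 osds: 9 up, 9 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0B
    usage:   9.04GiB used, 80.9GiB / 90.0GiB avail
    pgs:
```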

Turn the three data disks on each node into OSDs (run on the deploy node, in `my-cluster`; zapping erases the disk):

```
for dev in /dev/vdb /dev/vdc /dev/vdd; do
    for host in ceph1 ceph2 ceph3; do
        ceph-deploy disk zap "$host" "$dev"            # wipe the disk
        ceph-deploy osd create "$host" --data "$dev"   # create an OSD on it
    done
done
```
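With 3 hosts and 3 data disks each, the loop should produce 3 × 3 = 9 OSDs. The sketch below compares that number with what the cluster reports through `ceph osd ls`, which prints one OSD id per line. The function name is hypothetical. It assumes the admin keyring in `/etc/ceph` is readable by the current user.

```shell
#!/usr/bin/env bash
# Sketch: compare the number of OSDs known to the cluster with hosts x data disks.

check_osd_count() {
    local expected=$(( $1 * $2 ))   # $1 = number of hosts, $2 = data disks per host
    local actual
    actual=$(ceph osd ls | wc -l)   # one OSD id per line
    if [ "$actual" -eq "$expected" ]; then
        echo "OSDs: ${actual}/${expected}"
    else
        echo "OSDs: ${actual}/${expected}, some disks were not turned into OSDs" >&2
        return 1
    fi
}
```

Usage: `check_osd_count 3 3`.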

Only needed for Luminous (L) and later releases:

```
[cephadmin@ceph1 my-cluster]$ ceph-deploy mgr create ceph1 ceph2 ceph3
```
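Monitors, OSDs and mgr daemons can take a little while to settle before the cluster reports `HEALTH_OK`. Below is a polling sketch around `ceph health`. The function name, the default of 30 attempts and the 10-second interval are arbitrary assumptions, not ceph-deploy settings.

```shell
#!/usr/bin/env bash
# Sketch: poll "ceph health" until it reports HEALTH_OK or the attempts run out.

wait_for_health_ok() {
    local attempts="${1:-30}" interval="${2:-10}" status i
    for (( i = 1; i <= attempts; i++ )); do
        status=$(ceph health)
        # The first word of the output is the overall state (HEALTH_OK, HEALTH_WARN, ...).
        if [ "${status%% *}" = "HEALTH_OK" ]; then
            echo "HEALTH_OK after ${i} check(s)"
            return 0
        fi
        echo "check ${i}/${attempts}: ${status}" >&2
        sleep "$interval"
    done
    return 1
}
```

Usage: `wait_for_health_ok` with the defaults, or `wait_for_health_ok 60 5` for more frequent checks.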

Enable the dashboard module:

```
[cephadmin@ceph1 ~]$ ceph mgr module enable dashboard
```
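To find where the dashboard is served, run `ceph mgr services`. It prints a small JSON object with the URLs advertised by the active mgr. On Luminous the dashboard module listens on plain HTTP port 7000 by default. Newer releases use different defaults and need extra setup (a certificate and a login), so treat the port as an assumption. Here is a sketch that extracts the URL; the function name is hypothetical, and `sed` is used instead of `jq` only to avoid an extra package.

```shell
#!/usr/bin/env bash
# Sketch: print the dashboard URL from the JSON that "ceph mgr services" outputs,
# e.g. {"dashboard": "http://ceph1:7000/"}.

dashboard_url() {
    ceph mgr services | sed -n 's/.*"dashboard": *"\([^"]*\)".*/\1/p'
}
```

Usage: `dashboard_url`, then open the printed address in a browser.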

Original post: https://www.cnblogs.com/fengzi7314/p/13627706.html