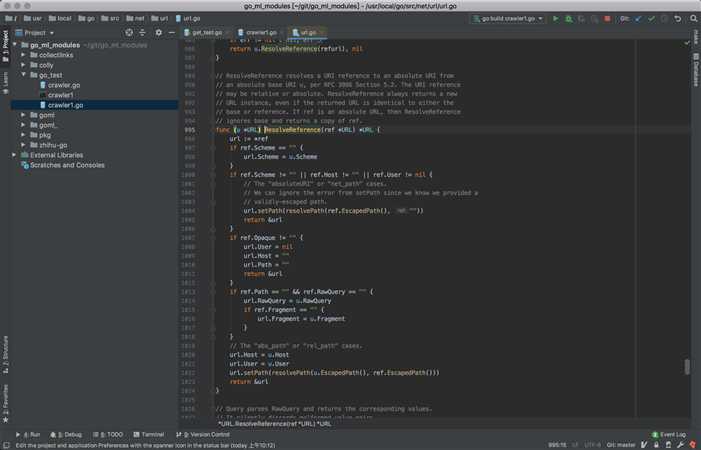

    package main

    import (
    	"crypto/tls"
    	"flag"
    	"fmt"
    	"net/http"
    	"net/url"
    	"os"

    	"github.com/jackdanger/collectlinks"
    )

    func usage() {
    	fmt.Fprintf(os.Stderr, "usage: crawl http://example.com/path/file.html\n")
    	flag.PrintDefaults()
    	os.Exit(2)
    }

    func main() {
    	flag.Usage = usage
    	flag.Parse()

    	args := flag.Args()
    	fmt.Println(args)
    	if len(args) < 1 {
    		// Print the hint first: usage() exits and never returns.
    		fmt.Fprintln(os.Stderr, "Please specify start page")
    		usage()
    	}

    	queue := make(chan string)
    	filteredQueue := make(chan string)

    	go func() { queue <- args[0] }()
    	go filterQueue(queue, filteredQueue)

    	// done synchronizes main with the concurrently running crawlers.
    	done := make(chan bool)

    	// Five workers pull from the filtered queue and feed newly found
    	// links back into the unfiltered queue.
    	for i := 0; i < 5; i++ {
    		go func() {
    			for uri := range filteredQueue {
    				enqueue(uri, queue)
    			}
    			done <- true
    		}()
    	}

    	// filteredQueue is never closed, so no worker ever sends on done;
    	// this receive simply keeps main alive while the crawl runs.
    	<-done
    }

    // filterQueue forwards each URL from in to out only the first time it is seen.
    func filterQueue(in chan string, out chan string) {
    	var seen = make(map[string]bool)
    	for val := range in {
    		if !seen[val] {
    			seen[val] = true
    			out <- val
    		}
    	}
    }

    // enqueue fetches uri, extracts its links, and queues each one as an absolute URL.
    func enqueue(uri string, queue chan string) {
    	fmt.Println("fetching", uri)
    	// InsecureSkipVerify turns off TLS certificate verification.
    	// That is tolerable for a toy crawler, but not for production code.
    	transport := &http.Transport{
    		TLSClientConfig: &tls.Config{
    			InsecureSkipVerify: true,
    		},
    	}
    	client := http.Client{Transport: transport}
    	resp, err := client.Get(uri)
    	if err != nil {
    		return
    	}
    	defer resp.Body.Close()

    	links := collectlinks.All(resp.Body)
    	fmt.Println(links)
    	for _, link := range links {
    		absolute := fixUrl(link, uri)
    		// Skip links that failed to parse (the original tested uri here by mistake).
    		if absolute != "" {
    			go func() { queue <- absolute }()
    		}
    	}
    }

    // fixUrl resolves href against base and returns the absolute URL,
    // or "" if either argument cannot be parsed.
    func fixUrl(href, base string) string {
    	uri, err := url.Parse(href)
    	if err != nil {
    		return ""
    	}
    	baseUrl, err := url.Parse(base)
    	if err != nil {
    		return ""
    	}
    	uri = baseUrl.ResolveReference(uri)
    	return uri.String()
    }
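The `fixUrl` helper in the crawler turns each link on a page into an absolute URL with `url.ResolveReference`. The sketch below isolates that step so its behavior is easier to see. The helper name `resolve` and the sample links are made up for illustration; only `url.Parse` and `ResolveReference` come from the standard library.

```go
package main

import (
	"fmt"
	"net/url"
)

// resolve is a hypothetical stand-in for fixUrl: it resolves href
// against base and returns "" if either string fails to parse.
func resolve(href, base string) string {
	ref, err := url.Parse(href)
	if err != nil {
		return ""
	}
	baseURL, err := url.Parse(base)
	if err != nil {
		return ""
	}
	return baseURL.ResolveReference(ref).String()
}

func main() {
	// Assumed sample page and links, not taken from a real crawl.
	base := "http://example.com/path/file.html"
	hrefs := []string{
		"other.html",           // relative to the page's directory
		"/root.html",           // relative to the host root
		"../up.html",           // one directory up
		"http://example.org/x", // already absolute, returned unchanged
	}
	for _, href := range hrefs {
		fmt.Printf("%q -> %s\n", href, resolve(href, base))
	}
}
```

Resolving against the page that contained the link, rather than against the start page, is what lets the crawler follow relative links correctly several levels deep.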

    package main

    import (
    	"fmt"
    	"net/http"

    	"github.com/jackdanger/collectlinks"
    )

    func main() {
    	fmt.Println("Hello, world")
    	url := "http://www.baidu.com/"
    	download(url)
    }

    // download fetches url with a custom User-Agent and prints every link found on the page.
    func download(url string) {
    	client := &http.Client{}
    	req, err := http.NewRequest("GET", url, nil)
    	if err != nil {
    		fmt.Println("http request error", err)
    		return
    	}
    	// Custom header
    	req.Header.Set("User-Agent", "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)")
    	resp, err := client.Do(req)
    	if err != nil {
    		fmt.Println("http get error", err)
    		return
    	}
    	// Close the response body when the function returns
    	defer resp.Body.Close()

    	links := collectlinks.All(resp.Body)
    	for _, link := range links {
    		fmt.Println("parse url", link)
    	}
    }
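One way to confirm that the custom `User-Agent` header actually reaches the server is to point the same request logic at a local test server. This is only a sketch using the standard library's `net/http/httptest`. The names `fetchWithUA` and `echoUAServer` are hypothetical and do not appear in the original program.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// fetchWithUA is a hypothetical helper: it sends a GET request with the
// given User-Agent and returns the response body as a string.
func fetchWithUA(client *http.Client, url, userAgent string) (string, error) {
	req, err := http.NewRequest("GET", url, nil)
	if err != nil {
		return "", err
	}
	req.Header.Set("User-Agent", userAgent)
	resp, err := client.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

// echoUAServer starts a local test server that replies with the
// User-Agent header it received.
func echoUAServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, r.UserAgent())
	}))
}

func main() {
	srv := echoUAServer()
	defer srv.Close()

	ua := "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)"
	body, err := fetchWithUA(srv.Client(), srv.URL, ua)
	if err != nil {
		fmt.Println("http get error", err)
		return
	}
	fmt.Println("server saw User-Agent:", body)
}
```

Testing against a local server keeps the check repeatable and avoids sending traffic to a real site while experimenting with headers.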

Original: https://www.cnblogs.com/cx2016/p/13851838.html