Baseline: SAGAN [1]

BigGAN applies a broad set of techniques to stabilize the training of a cGAN with a very large number of parameters.
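The SAGAN baseline conditions on the class label in two places: class-conditional BatchNorm in the generator (in the spirit of [2]) and a projection discriminator [3]. Below is a minimal NumPy sketch of those two mechanisms, assuming flat (N, C) feature matrices. The function and parameter names (`conditional_batch_norm`, `projection_logit`, `gamma_embed`, `beta_embed`, `class_embed`, `w_psi`, `b_psi`) are hypothetical. Note also that BigGAN itself computes the BN gains and biases from a shared class embedding concatenated with a chunk of z, not from a plain per-class table as shown here.

```python
import numpy as np

def conditional_batch_norm(h, y, gamma_embed, beta_embed, eps=1e-5):
    """Class-conditional BatchNorm (sketch).

    h: (N, C) activations; y: (N,) integer class labels.
    gamma_embed, beta_embed: (num_classes, C) learned tables (hypothetical names).
    """
    # Normalize each channel over the batch, as in ordinary BatchNorm.
    mean = h.mean(axis=0, keepdims=True)
    var = h.var(axis=0, keepdims=True)
    h_hat = (h - mean) / np.sqrt(var + eps)
    # Scale and shift with per-class parameters looked up from the labels.
    return gamma_embed[y] * h_hat + beta_embed[y]

def projection_logit(phi_x, y, w_psi, b_psi, class_embed):
    """Projection discriminator head (sketch of [3]).

    D(x, y) = psi(phi(x)) + <embed(y), phi(x)>, with psi a linear layer.
    phi_x: (N, C) features from the discriminator body.
    w_psi: (C,) weights, b_psi: scalar bias, class_embed: (num_classes, C).
    """
    unconditional = phi_x @ w_psi + b_psi                 # (N,) class-agnostic score
    projection = np.sum(class_embed[y] * phi_x, axis=1)   # (N,) inner product with class embedding
    return unconditional + projection
```

With identity gains (all ones) and zero biases, `conditional_batch_norm` reduces to plain BatchNorm; the label only starts to matter once the per-class tables differ.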

[1] Zhang, Han, Ian Goodfellow, Dimitris Metaxas, and Augustus Odena. "Self-Attention Generative Adversarial Networks." ArXiv:1805.08318 [Cs, Stat], June 14, 2019. http://arxiv.org/abs/1805.08318.

[2] Vries, Harm de, Florian Strub, Jérémie Mary, Hugo Larochelle, Olivier Pietquin, and Aaron Courville. "Modulating Early Visual Processing by Language." ArXiv:1707.00683 [Cs], December 18, 2017. http://arxiv.org/abs/1707.00683.

[3] Miyato, Takeru, and Masanori Koyama. "CGANs with Projection Discriminator." ArXiv:1802.05637 [Cs, Stat], August 14, 2018. http://arxiv.org/abs/1802.05637.

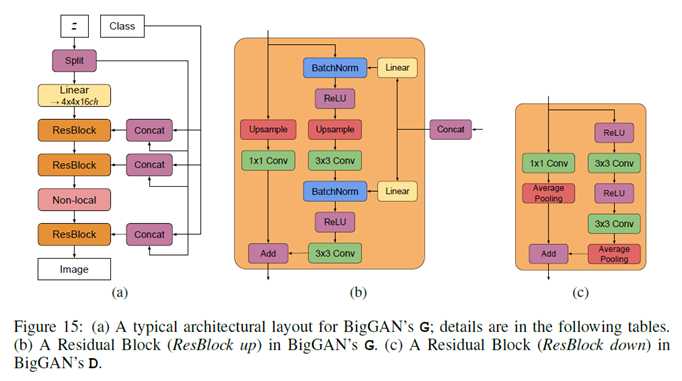

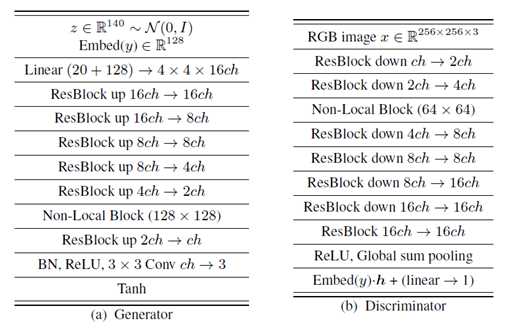

BigGAN: LARGE SCALE GAN TRAINING FOR HIGH FIDELITY NATURAL IMAGE SYNTHESIS

Original post: https://www.cnblogs.com/JunzhaoLiang/p/13886458.html