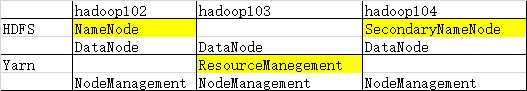

HDFS: NameNode, DataNode, SecondaryNameNode

YARN: ResourceManager, NodeManager

<configuration>

<!-- Address of the NameNode in HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop102:9000</value>

</property>

<!-- Directory for files Hadoop generates at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-2.7.6/data/tmp</value>

</property>

</configuration>

<configuration>

<!-- Number of HDFS replicas -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- SecondaryNameNode configuration -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop104:50090</value>

</property>

</configuration>

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

<configuration>

<!-- How reducers fetch their data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Address of the ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop103</value>

</property>

</configuration>
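
Going by the property names, the four `<configuration>` blocks above belong in core-site.xml, hdfs-site.xml, mapred-site.xml and yarn-site.xml respectively. Before distributing them, it can help to read a value back out of a file and confirm it is what you meant to write. The following is a minimal sketch, not part of Hadoop: `get_prop` is a hypothetical helper, and it assumes each `<name>` and `<value>` element sits on a single line, as in the snippets above.

```shell
# get_prop FILE NAME
# Print the <value> of the property whose <name> equals NAME.
# Hypothetical helper; assumes one <name> or <value> element per line.
get_prop() {
  awk -v key="$2" '
    /<name>/ {
      n = $0
      sub(/.*<name>/, "", n)
      sub(/<\/name>.*/, "", n)
      hit = (n == key)          # remember whether this property is the one we want
    }
    /<value>/ && hit {
      v = $0
      sub(/.*<value>/, "", v)
      sub(/<\/value>.*/, "", v)
      print v
      exit                      # first match wins
    }
  ' "$1"
}
```

For example, `get_prop etc/hadoop/core-site.xml fs.defaultFS` run from the Hadoop install directory should print the NameNode URI. On a node where Hadoop is installed, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop itself resolves, which is the more authoritative check.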

hadoop-env.sh

mapred-env.sh

yarn-env.sh

[atguigu@hadoop101 hadoop-2.7.6]$ xsync etc
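
`xsync` is not a Hadoop command; it is a user-written distribution script, and its source is not shown here. As a rough sketch of what such a helper typically does, the function below prints one `rsync` command per host, copying a path to the same absolute location on each machine. Everything in it is an assumption for illustration: the name `xsync_plan`, the `HOSTS` list (taken from the host names used in this post), and passwordless SSH between the nodes.

```shell
# Hosts to copy to; an assumption based on the host names in this post.
HOSTS="${HOSTS:-hadoop102 hadoop103 hadoop104}"

# xsync_plan PATH
# Print (do not run) one rsync command per host for PATH.
# The body runs in a subshell so the cd does not affect the caller.
xsync_plan() (
  src="$1"
  dir="$(cd -P "$(dirname "$src")" && pwd)" || exit 1   # absolute parent directory
  name="$(basename "$src")"
  for host in $HOSTS; do
    # No trailing slash on the source, so the directory itself lands inside $dir.
    echo "rsync -a $dir/$name $host:$dir"
  done
)
```

Printing the commands first makes it easy to review them; `xsync_plan etc | sh` would then execute the plan.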

hdfs namenode -format

hadoop102:

hadoop-daemon.sh start namenode

hadoop-daemon.sh start datanode

hadoop103:

hadoop-daemon.sh start datanode

hadoop104:

hadoop-daemon.sh start datanode

hadoop-daemon.sh start secondarynamenode
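
The per-host commands above can be captured as a small role map, so the layout lives in one place. This is only a sketch: `daemons_for` and `start_plan` are hypothetical names, and the plan assumes passwordless SSH and that `hadoop-daemon.sh` is on the `PATH` of a non-interactive SSH session on every node.

```shell
# daemons_for HOST
# Print the HDFS daemons this post runs on HOST; fail for unknown hosts.
daemons_for() {
  case "$1" in
    hadoop102) echo "namenode datanode" ;;
    hadoop103) echo "datanode" ;;
    hadoop104) echo "datanode secondarynamenode" ;;
    *) return 1 ;;
  esac
}

# start_plan
# Print (do not run) one ssh command per daemon, in host order.
start_plan() {
  for host in hadoop102 hadoop103 hadoop104; do
    for daemon in $(daemons_for "$host"); do
      echo "ssh $host hadoop-daemon.sh start $daemon"
    done
  done
}
```

Running `start_plan` lists the five start commands; piping the output to `sh` would execute them.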

hadoop102:

25584 NameNode

25744 Jps

25669 DataNode

hadoop103:

2818 DataNode

2935 Jps

hadoop104:

2773 DataNode

2825 SecondaryNameNode

2922 Jps
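
Comparing `jps` listings like the ones above against the intended layout by eye gets tedious as a cluster grows. A minimal sketch of an automated check follows; `missing_daemons` is a hypothetical helper that reads `jps` output on standard input and prints every expected process name that does not appear.

```shell
# missing_daemons NAME...
# Read `jps` output ("<pid> <name>" per line) on stdin and print each
# expected NAME that is not running. Prints nothing when all are present.
missing_daemons() (
  running="$(awk '{ print $2 }')"       # keep only the process names
  for want in "$@"; do
    # -x matches whole lines, so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$running" | grep -qx "$want" || echo "$want"
  done
)
```

On hadoop104, for instance, `jps | missing_daemons DataNode SecondaryNameNode` should print nothing if both daemons started.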

Original: https://www.cnblogs.com/hapyygril/p/13945015.html