1. Task:

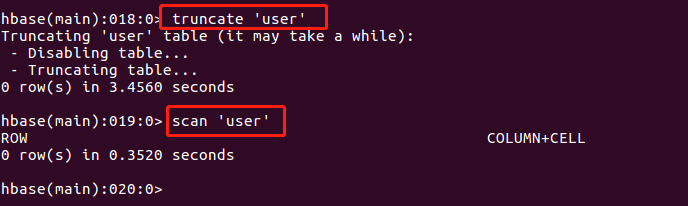

2. Take the relational database tables and data (textbook, top of p. 92), convert them into tables suited to HBase storage, and insert the data.

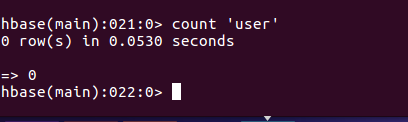
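As a rough illustration of the conversion, the sketch below turns one relational row into HBase shell `put` commands, one cell per relational column. Calls later in this post, such as `scanColumn("Student","S_Age")`, suggest that each relational column became its own column family, and that is the layout assumed here. `RelationalToHBase` and `toPutCommands` are hypothetical names, and the age value is a placeholder rather than textbook data.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, not part of the original post: turns one relational row
// into HBase shell `put` commands, one cell per relational column.
final class RelationalToHBase {

    // rowKey identifies the HBase row; each entry of `columns` becomes one cell
    // whose column name is the relational column name.
    static List<String> toPutCommands(String table, String rowKey, Map<String, String> columns) {
        List<String> commands = new ArrayList<>();
        for (Map.Entry<String, String> column : columns.entrySet()) {
            commands.add(String.format("put '%s','%s','%s','%s'",
                    table, rowKey, column.getKey(), column.getValue()));
        }
        return commands;
    }

    public static void main(String[] args) {
        // Placeholder values for illustration only; the real data is in the textbook table.
        Map<String, String> student = new LinkedHashMap<>();
        student.put("S_Name", "Tom");
        student.put("S_Age", "20");
        for (String command : toPutCommands("Student", "1", student)) {
            System.out.println(command);
        }
    }
}
```

The sketch does no quoting or escaping, so it is only suitable for values without single quotes.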

Create the tables

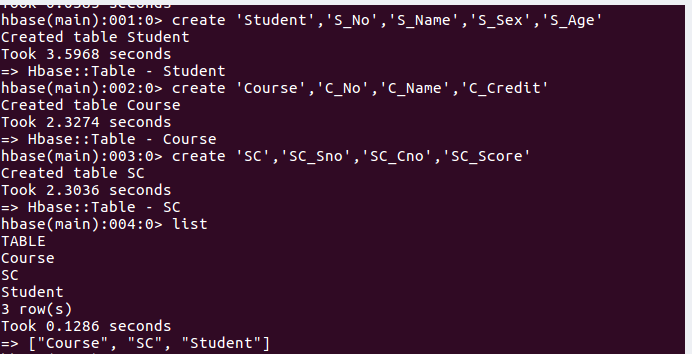

Insert data into the 'Student' table

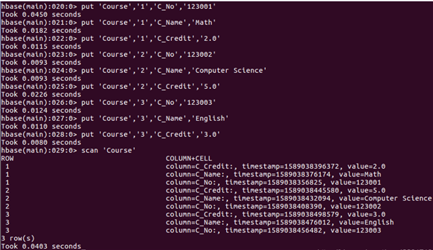

Insert data into the 'Course' table

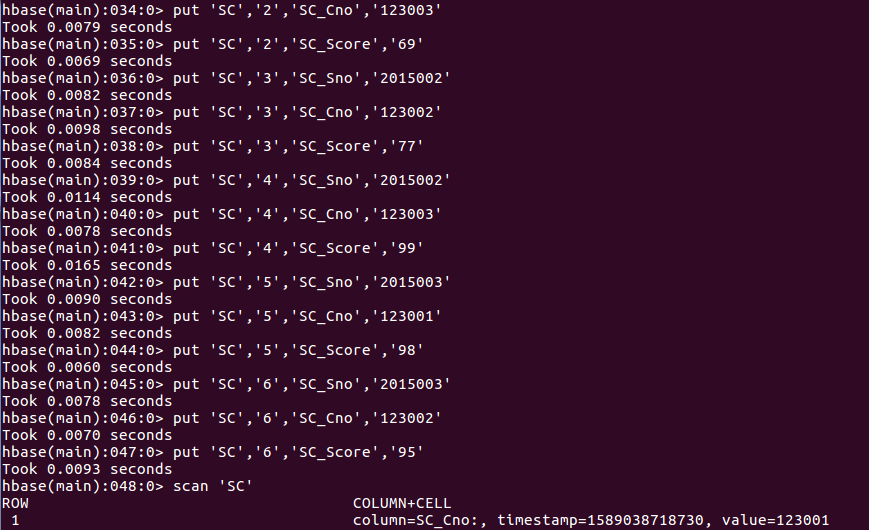

Insert data into the 'SC' table

3. Implement the following functions in code (textbook, bottom of p. 92):

(1) createTable(String tableName, String[] fields): create a table.

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.ColumnFamilyDescriptor;
    import org.apache.hadoop.hbase.client.ColumnFamilyDescriptorBuilder;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.TableDescriptorBuilder;
    import org.apache.hadoop.hbase.util.Bytes;

    public class CreateTable {
        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        // Open the connection to HBase and obtain an Admin handle.
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the Admin handle and the connection.
        public static void close() {
            try {
                if (admin != null) {
                    admin.close();
                }
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Create tableName with one column family per entry in fields.
        // If the table already exists, it is dropped and recreated.
        public static void createTable(String tableName, String[] fields) throws IOException {
            init();
            try {
                TableName name = TableName.valueOf(tableName);
                if (admin.tableExists(name)) {
                    System.out.println("Table already exists; dropping it first.");
                    admin.disableTable(name); // a table must be disabled before deletion
                    admin.deleteTable(name);
                }
                TableDescriptorBuilder tableDescriptor = TableDescriptorBuilder.newBuilder(name);
                for (String field : fields) {
                    ColumnFamilyDescriptor family =
                            ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes(field)).build();
                    tableDescriptor.setColumnFamily(family);
                }
                admin.createTable(tableDescriptor.build());
            } finally {
                close(); // release the connection even if an operation fails
            }
        }

        public static void main(String[] args) {
            String[] fields = {"id", "score"};
            try {
                createTable("test", fields);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

(2) addRecord(String tableName, String row, String[] fields, String[] values)

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class AddRecord {
        public static Configuration configuration;
        public static Connection connection;

        // Open the connection to HBase.
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection.
        public static void close() {
            try {
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Write values[i] into the cell named by fields[i] in the given row.
        // Each field is either "family" or "family:qualifier".
        public static void addRecord(String tableName, String row, String[] fields, String[] values)
                throws IOException {
            init();
            try (Table table = connection.getTable(TableName.valueOf(tableName))) {
                Put put = new Put(Bytes.toBytes(row));
                for (int i = 0; i < fields.length; i++) {
                    String[] cols = fields[i].split(":");
                    if (cols.length == 1) {
                        // No qualifier given: use the empty qualifier.
                        put.addColumn(Bytes.toBytes(cols[0]), Bytes.toBytes(""), Bytes.toBytes(values[i]));
                    } else {
                        put.addColumn(Bytes.toBytes(cols[0]), Bytes.toBytes(cols[1]), Bytes.toBytes(values[i]));
                    }
                }
                table.put(put); // send all cells of the row once, after the loop
            } finally {
                close();
            }
        }

        public static void main(String[] args) {
            String[] fields = {"Score:Math", "Score:Computer Science", "Score:English"};
            String[] values = {"90", "90", "90"};
            try {
                addRecord("grade", "S_Name", fields, values);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
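`addRecord` names each cell as either `family` or `family:qualifier`. The parsing step can be isolated as in the sketch below. `ColumnSpec` is a hypothetical class that is not part of the original code, and it splits on the first colon only (an assumption that differs from the `split(":")` call used in this post), so a qualifier that itself contains a colon is kept whole.

```java
// Hypothetical helper, not part of the original post: parses a column name
// written as "family" or "family:qualifier".
final class ColumnSpec {
    final String family;
    final String qualifier;

    private ColumnSpec(String family, String qualifier) {
        this.family = family;
        this.qualifier = qualifier;
    }

    // Splits on the first ':' only; a missing qualifier becomes the empty string.
    static ColumnSpec parse(String column) {
        int colon = column.indexOf(':');
        if (colon < 0) {
            return new ColumnSpec(column, "");
        }
        return new ColumnSpec(column.substring(0, colon), column.substring(colon + 1));
    }

    public static void main(String[] args) {
        String[] columns = {"Score:Math", "S_Name"};
        for (String column : columns) {
            ColumnSpec spec = parse(column);
            System.out.println("family=" + spec.family + " qualifier=" + spec.qualifier);
        }
    }
}
```

With such a helper, both branches of the `if`/`else` in `addRecord` could collapse into a single `addColumn` call, since the empty qualifier is handled during parsing.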

(3) scanColumn(String tableName, String column)

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class ScanColumn {
        public static Configuration configuration;
        public static Connection connection;

        // Open the connection to HBase.
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection.
        public static void close() {
            try {
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Print the row key, qualifier, value, and column family of every cell in a result.
        public static void showResult(Result result) {
            for (Cell cell : result.rawCells()) {
                System.out.println("RowName:" + Bytes.toString(CellUtil.cloneRow(cell)));
                System.out.println("ColumnName:" + Bytes.toString(CellUtil.cloneQualifier(cell)));
                System.out.println("Value:" + Bytes.toString(CellUtil.cloneValue(cell)));
                System.out.println("Column Family:" + Bytes.toString(CellUtil.cloneFamily(cell)));
                System.out.println();
            }
        }

        // Scan one column of a table. column is either "family" (all qualifiers
        // under that family) or "family:qualifier" (that single column).
        public static void scanColumn(String tableName, String column) {
            init();
            try (Table table = connection.getTable(TableName.valueOf(tableName))) {
                Scan scan = new Scan();
                String[] cols = column.split(":");
                if (cols.length == 1) {
                    scan.addFamily(Bytes.toBytes(cols[0]));
                } else {
                    scan.addColumn(Bytes.toBytes(cols[0]), Bytes.toBytes(cols[1]));
                }
                try (ResultScanner scanner = table.getScanner(scan)) {
                    for (Result result : scanner) {
                        showResult(result);
                    }
                }
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                close();
            }
        }

        public static void main(String[] args) {
            scanColumn("Student", "S_Age");
        }
    }

(4) modifyData(String tableName, String row, String column)

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class ModifyData {
        public static Configuration configuration;
        public static Connection connection;

        // Open the connection to HBase.
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection.
        public static void close() {
            try {
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Overwrite the cell at (row, column) with value.
        // column is either "family" or "family:qualifier".
        public static void modifyData(String tableName, String row, String column, String value)
                throws IOException {
            init();
            try (Table table = connection.getTable(TableName.valueOf(tableName))) {
                Put put = new Put(Bytes.toBytes(row));
                String[] cols = column.split(":");
                if (cols.length == 1) {
                    // No qualifier given: use the empty qualifier.
                    put.addColumn(Bytes.toBytes(cols[0]), Bytes.toBytes(""), Bytes.toBytes(value));
                } else {
                    put.addColumn(Bytes.toBytes(cols[0]), Bytes.toBytes(cols[1]), Bytes.toBytes(value));
                }
                table.put(put); // a put to an existing cell writes a newer version
            } finally {
                close();
            }
        }

        public static void main(String[] args) {
            try {
                modifyData("Student", "1", "S_Name", "Tom");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

(5) deleteRow(String tableName, String row)

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class DeleteRow {
        public static Configuration configuration;
        public static Connection connection;

        // Open the connection to HBase.
        public static void init() {
            configuration = HBaseConfiguration.create();
            configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
            try {
                connection = ConnectionFactory.createConnection(configuration);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection.
        public static void close() {
            try {
                if (connection != null) {
                    connection.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Delete every cell of the given row.
        public static void deleteRow(String tableName, String row) throws IOException {
            init();
            try (Table table = connection.getTable(TableName.valueOf(tableName))) {
                Delete delete = new Delete(Bytes.toBytes(row));
                table.delete(delete);
            } finally {
                close();
            }
        }

        public static void main(String[] args) {
            try {
                deleteRow("Student", "3");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

Original: https://www.cnblogs.com/Lonely-lie/p/14009145.html