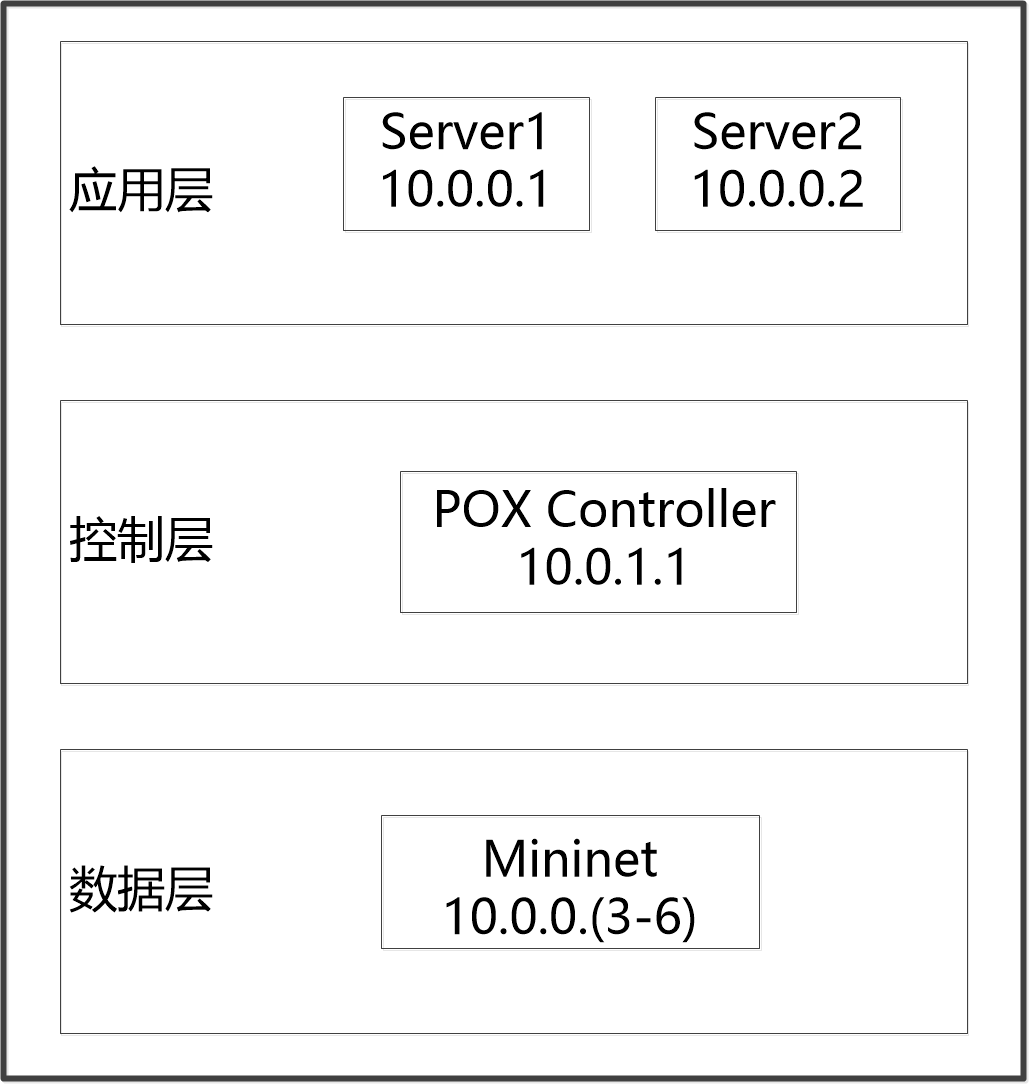

Environment: SDNHub_tutorial_VM_64 image; POX controller; Mininet; a simple HTTP server written in Python.

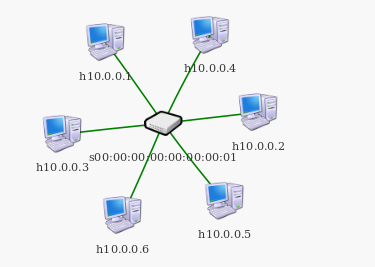

1. Create the topology:

sudo mn --switch ovsk,protocols=OpenFlow13 --topo single,6 --controller=remote,ip=127.0.0.1,port=6633
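For orientation, `single,6` means one switch `s1` with six hosts attached. The sketch below models that layout in plain Python 3, assuming Mininet's defaults: hosts are numbered from 10.0.0.1 in order and are linked to `s1` in order, so hN sits on switch port N. The helpers `single_topo` and `role` are hypothetical; the service IP 10.0.1.1 belongs to no host and is answered by the ARP responder that `ip_loadbalancer`'s `launch()` starts.

```python
# Plain-Python model of "--topo single,6" (Python 3). Assumes Mininet's
# defaults: hosts numbered from 10.0.0.1 and linked to s1 in order.
from ipaddress import IPv4Address

SERVICE_IP = "10.0.1.1"              # virtual IP given to ip_loadbalancer
SERVERS = {"10.0.0.1", "10.0.0.2"}   # h1 and h2 run the HTTP servers


def single_topo(n):
    """Return {host: (ip, s1_port)} for a single-switch topology of n hosts."""
    base = IPv4Address("10.0.0.0")
    return {"h%d" % i: (str(base + i), i) for i in range(1, n + 1)}


def role(ip):
    """Label a host address by its part in this experiment."""
    return "server" if ip in SERVERS else "client"


if __name__ == "__main__":
    for host, (ip, port) in single_topo(6).items():
        print("%s  %s  s1 port %d  %s" % (host, ip, port, role(ip)))
```

h1 and h2 end up as the two servers and h3 to h6 as clients.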

2. POX does not support OpenFlow 1.3 yet, so it is best to pin the OVS bridge to OpenFlow 1.0 explicitly:

sudo ovs-vsctl set bridge s1 protocols=OpenFlow10
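Why the version has to match: an OpenFlow connection opens with each side sending an `OFPT_HELLO` that carries the highest version it supports, and under the OpenFlow 1.0 rule both sides use the smaller of the two, which the receiver must also support. The sketch below is a simplified model of that rule (it ignores the version bitmap that later specifications add); the wire values 0x01 (OpenFlow 1.0) and 0x04 (OpenFlow 1.3) and the 8-byte header layout come from the OpenFlow specification, while the function names are hypothetical.

```python
# Simplified model of OpenFlow HELLO version negotiation, following the
# OpenFlow 1.0 rule: use the smaller of the two advertised versions, and
# fail if the receiver does not support it.
import struct

OFP_VERSION_1_0 = 0x01   # wire version byte for OpenFlow 1.0
OFP_VERSION_1_3 = 0x04   # wire version byte for OpenFlow 1.3
OFPT_HELLO = 0           # message type of HELLO


def hello(version, xid=1):
    """Pack a bare ofp_header: version, type, length (8 bytes), xid."""
    return struct.pack("!BBHI", version, OFPT_HELLO, 8, xid)


def negotiate(switch_versions, controller_versions):
    """Return the agreed wire version, or None if the handshake fails."""
    # Each side advertises its highest supported version in its HELLO.
    sw_version = struct.unpack("!BBHI", hello(max(switch_versions)))[0]
    ctl_version = struct.unpack("!BBHI", hello(max(controller_versions)))[0]
    agreed = min(sw_version, ctl_version)
    # Both ends must actually speak the agreed version.
    if agreed in switch_versions and agreed in controller_versions:
        return agreed
    return None


POX_VERSIONS = {OFP_VERSION_1_0}  # POX speaks OpenFlow 1.0 only

print(negotiate({OFP_VERSION_1_3}, POX_VERSIONS))  # None: handshake fails
print(negotiate({OFP_VERSION_1_0}, POX_VERSIONS))  # 1: OpenFlow 1.0
```

Under this model, a bridge that allows both versions (`protocols=OpenFlow10,OpenFlow13`) would also connect, because the agreed version 1.0 is in both sets.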

3. Start the servers:

1) Open xterm windows (xterm [host]) for hosts h1 and h2, which act as the servers.

mininet> xterm h1 h2

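2) The experiment does not demand much from the servers, so Python's built-in SimpleHTTPServer module serves as the HTTP server that answers requests, listening on port 80. Run it on both h1 and h2: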

root@sdnhubvm:~$ python -m SimpleHTTPServer 80

The server starts successfully:

Serving HTTP on 0.0.0.0 port 80 ...
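SimpleHTTPServer exists only in Python 2 (the VM's default); on Python 3 the equivalent is `python3 -m http.server 80`. To make the balancer's choice visible from the client side, a small Python 3 variant could reply with the address that served the request. The handler `WhoAmIHandler` and helper `make_server` below are hypothetical, not part of the original setup.

```python
# Sketch (Python 3): an HTTP server that reports which address served the
# request, so the load balancer's choice is visible from the client side.
from http.server import BaseHTTPRequestHandler, HTTPServer


class WhoAmIHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: replies with the server's own IP and the client IP."""

    def do_GET(self):
        server_ip = self.connection.getsockname()[0]  # e.g. 10.0.0.1 on h1
        client_ip = self.client_address[0]            # e.g. 10.0.0.3 on h3
        body = ("served by %s for %s\n" % (server_ip, client_ip)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port, host="0.0.0.0"):
    """Build (but do not start) a server bound to host:port."""
    return HTTPServer((host, port), WhoAmIHandler)
```

On h1 or h2 it could be started with `make_server(80).serve_forever()`; Mininet hosts run as root, so binding port 80 is allowed. Because the balancer rewrites the destination address, the server sees its own real IP (10.0.0.1 or 10.0.0.2), not 10.0.1.1.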

4. Controller and load-balancing policy

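Start the POX controller with POX's bundled load balancer component, misc.ip_loadbalancer, giving it the service IP (10.0.1.1) and the IPs of the servers (h1 and h2):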

cd /home/ubuntu/pox

sudo ./pox.py log.level --DEBUG forwarding.tutorial_l2_hub misc.ip_loadbalancer --ip=10.0.1.1 --servers=10.0.0.1,10.0.0.2
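Roughly how POX reads that command line: each bare word names a component to load, and the `--key=value` options after it become keyword arguments of that component's `launch()` function, with a flag that has no value (such as `--DEBUG`) passed as `True`. So `misc.ip_loadbalancer --ip=10.0.1.1 --servers=10.0.0.1,10.0.0.2` amounts to `launch(ip='10.0.1.1', servers='10.0.0.1,10.0.0.2')`. The parser below is a simplified, hypothetical model of that behaviour, not POX's own boot code; `split_servers` repeats the normalisation that `launch()` applies to `servers`.

```python
# Simplified model (hypothetical, not POX's boot code) of how a pox.py
# command line is split into components and launch() keyword arguments.
def parse_pox_args(argv):
    components = []  # list of (component_name, kwargs)
    for arg in argv:
        if arg.startswith("--"):
            if not components:
                # POX's own global options are not modelled in this sketch.
                raise ValueError("option before any component: " + arg)
            key, sep, value = arg[2:].partition("=")
            components[-1][1][key] = value if sep else True
        else:
            components.append((arg, {}))
    return components


def split_servers(servers):
    """Same normalisation ip_loadbalancer's launch() applies to --servers."""
    return servers.replace(",", " ").split()


cmd = ("log.level --DEBUG forwarding.tutorial_l2_hub misc.ip_loadbalancer "
       "--ip=10.0.1.1 --servers=10.0.0.1,10.0.0.2").split()
for name, kwargs in parse_pox_args(cmd):
    print(name, kwargs)
# log.level {'DEBUG': True}
# forwarding.tutorial_l2_hub {}
# misc.ip_loadbalancer {'ip': '10.0.1.1', 'servers': '10.0.0.1,10.0.0.2'}
```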

When POX is running correctly, it prints output like the following:


POX 0.5.0 (eel) / Copyright 2011-2014 James McCauley, et al.

DEBUG:core:POX 0.5.0 (eel) going up...

DEBUG:core:Running on CPython (2.7.6/Jun 22 2015 17:58:13)

DEBUG:core:Platform is Linux-3.13.0-24-generic-x86_64-with-Ubuntu-14.04-trusty

INFO:core:POX 0.5.0 (eel) is up.

DEBUG:openflow.of_01:Listening on 0.0.0.0:6633

INFO:openflow.of_01:[00-00-00-00-00-01 1] connected

INFO:iplb:IP Load Balancer Ready.

INFO:iplb:Load Balancing on [00-00-00-00-00-01 1]

INFO:iplb.00-00-00-00-00-01:Server 10.0.0.1 up

INFO:iplb.00-00-00-00-00-01:Server 10.0.0.2 up
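The `Server ... up` lines come from the component's liveness check. `iplb` floods an ARP request for one server at a time (rotating through the list), records a deadline `arp_timeout` seconds ahead, and marks the server live when the reply arrives; a server whose probe passes its deadline unanswered is removed from `live_servers`. Probes are spaced `probe_cycle_time / len(servers)` seconds apart but never closer than 0.25 s, so with two servers and the default 5 s cycle one probe goes out every 2.5 s. The sketch below replays that bookkeeping with a fake clock instead of OpenFlow messages; the class `ProbeTracker` and the MAC placeholders are hypothetical, while the field names and defaults mirror the `iplb` source quoted later in this post.

```python
# Sketch of iplb's ARP-based liveness bookkeeping, driven by a fake clock.
# ProbeTracker is hypothetical; field names and defaults follow iplb.
class ProbeTracker(object):
    def __init__(self, servers, probe_cycle_time=5, arp_timeout=3):
        self.servers = list(servers)
        self.probe_cycle_time = probe_cycle_time
        self.arp_timeout = arp_timeout
        self.outstanding_probes = {}  # IP -> expire_time
        self.live_servers = {}        # IP -> (MAC, port)

    @property
    def probe_wait_time(self):
        # Spread one full cycle over all servers, capped at four probes/s.
        return max(0.25, self.probe_cycle_time / float(len(self.servers)))

    def expire(self, now):
        for ip, expire_at in list(self.outstanding_probes.items()):
            if now > expire_at:
                del self.outstanding_probes[ip]
                self.live_servers.pop(ip, None)  # iplb logs "Server %s down"

    def probe(self, now):
        """Expire old probes, rotate the list and 'send' an ARP to the head."""
        self.expire(now)
        server = self.servers.pop(0)
        self.servers.append(server)
        self.outstanding_probes[server] = now + self.arp_timeout
        return server

    def arp_reply(self, ip, mac, port):
        if ip in self.outstanding_probes:
            del self.outstanding_probes[ip]
            self.live_servers[ip] = (mac, port)  # iplb logs "Server %s up"


t = ProbeTracker(["10.0.0.1", "10.0.0.2"])
print(t.probe_wait_time)                # 2.5
t.arp_reply(t.probe(0.0), "mac-h1", 1)  # h1 answers
t.arp_reply(t.probe(2.5), "mac-h2", 2)  # h2 answers
t.probe(5.0)                            # probe h1 again; suppose it is down
t.arp_reply(t.probe(7.5), "mac-h2", 2)  # h2 still answers
t.probe(10.0)                           # h1's probe expired at 8.0
print(sorted(t.live_servers))           # ['10.0.0.2']
```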

5. Check that the hosts in the Mininet topology can reach each other:

mininet> pingall

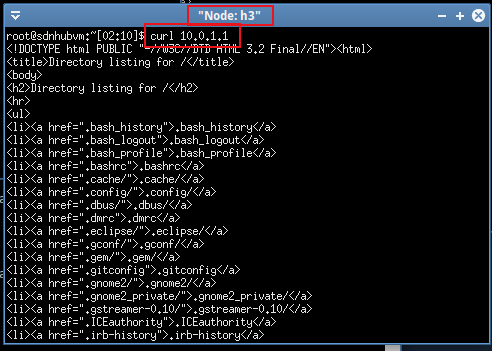

6. Next, access the servers from the consoles of hosts h3, h4, h5 and h6.

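Open xterm windows on the remaining hosts, which act as clients, and use curl to send an HTTP GET request to the service IP 10.0.1.1. The load balancer forwards each new connection to server1 (h1) or server2 (h2); if the request succeeds, the server returns a web page: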

curl 10.0.1.1
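To repeat the request many times and count which back end answered, a short Python 3 client can stand in for curl. This sketch assumes the servers return something that identifies them, such as the "served by ..." body of a modified server; SimpleHTTPServer's directory listing is usually identical on h1 and h2, since Mininet hosts share the file system. The helper `tally` is hypothetical.

```python
# Sketch (Python 3): send n GET requests, like running `curl 10.0.1.1`
# n times, and count the distinct response bodies.
from collections import Counter
from urllib.request import urlopen


def tally(url, n=10, timeout=5):
    counts = Counter()
    for _ in range(n):
        # Each call opens a new TCP connection from a new client port,
        # so the load balancer sees a new flow every time.
        with urlopen(url, timeout=timeout) as resp:
            counts[resp.read().decode().strip()] += 1
    return counts
```

On h3, for example: `print(tally("http://10.0.1.1/", n=20))`.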

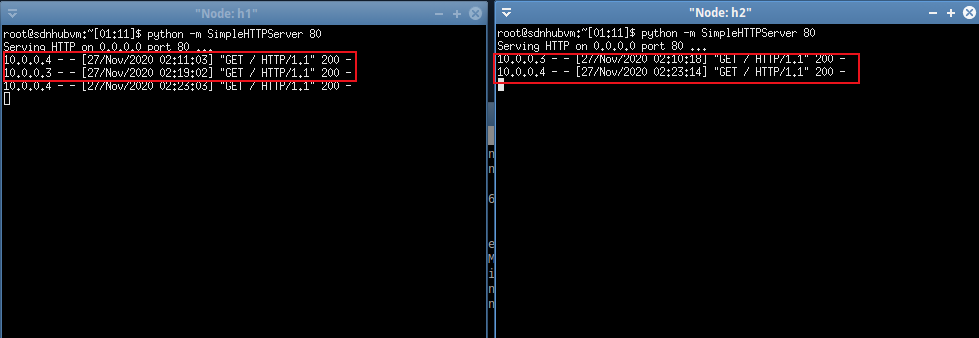

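Using several hosts at the same time, send requests to the service IP repeatedly and watch how POX steers the traffic. Analyzing it shows that requests from different clients do not all end up at the same server, so the traffic takes different paths.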

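On the server side, h1 and h2 can capture and dissect the packets they receive. During these GET requests h1 and h2 work in parallel, and repeated identical requests are not all handled by the same server.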
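Why one connection stays on one server while new connections may move: for the first packet of a new TCP flow to the service IP, `iplb` picks a live server and stores one `MemoryEntry` under two keys, `key1` for the client-to-service direction and `key2` for the server-to-client direction. It then installs a flow that rewrites the destination MAC and IP to the chosen server, and replies found under `key2` get their source rewritten back to the service MAC and IP. The sketch below reproduces only the key and IP-rewrite logic on plain tuples; `Flow`, `balance`, `reply` and the sample ports are hypothetical.

```python
# Sketch of iplb's two-key flow memory and IP rewriting, on plain tuples.
# Flow, balance() and reply() are hypothetical; the key layout follows
# MemoryEntry.key1 / MemoryEntry.key2.
import random
from collections import namedtuple

Flow = namedtuple("Flow", "srcip dstip srcport dstport")
SERVICE_IP = "10.0.1.1"
live_servers = ["10.0.0.1", "10.0.0.2"]
memory = {}  # 4-tuple key -> chosen server


def balance(pkt, pick=random.choice):
    """Client -> service: reuse a remembered server or pick a new one."""
    server = memory.get(tuple(pkt))
    if server is None or server not in live_servers:
        server = pick(live_servers)
        memory[tuple(pkt)] = server                                     # key1
        memory[(server, pkt.srcip, pkt.dstport, pkt.srcport)] = server  # key2
    return pkt._replace(dstip=server)  # like ofp_action_nw_addr.set_dst


def reply(pkt):
    """Server -> client: only remembered flows are rewritten back."""
    if tuple(pkt) not in memory:
        return None  # iplb logs "No client for ..." and drops the packet
    return pkt._replace(srcip=SERVICE_IP)  # like ofp_action_nw_addr.set_src


syn = Flow("10.0.0.3", SERVICE_IP, 40000, 80)
fwd = balance(syn, pick=lambda servers: servers[0])
print(fwd)   # Flow(srcip='10.0.0.3', dstip='10.0.0.1', srcport=40000, dstport=80)
back = reply(Flow(fwd.dstip, "10.0.0.3", 80, 40000))
print(back)  # Flow(srcip='10.0.1.1', dstip='10.0.0.3', srcport=80, dstport=40000)
```

A later packet of the same connection hits `key1` and goes to the same server; a new curl run uses a new client port, so it is a new flow and gets a fresh pick.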

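As the figure above shows, when h3 (or h4) sent two requests in a row, the first was handled by h1 and the second by h2. Note that _pick_server chooses among the live servers with random.choice, so this alternation is what happened in this run rather than a guarantee: the same server can also be chosen twice in a row.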
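If strict alternation between h1 and h2 is preferred over a random pick, one option is a round-robin picker. The class below is a hypothetical sketch, not part of POX: its `pick()` takes the `live_servers` dict plus the same `(key, inport)` arguments as `_pick_server`, so `_pick_server` could delegate to it (e.g. `return self._rr.pick(self.live_servers, key, inport)`, with a hypothetical `self._rr` created in `__init__`). It runs on both Python 2, which POX eel uses, and Python 3.

```python
# Hypothetical round-robin server picker (not part of POX). It accepts the
# same (key, inport) arguments as _pick_server but cycles through servers.
class RoundRobinPicker(object):
    def __init__(self):
        self._last = None

    def pick(self, live_servers, key=None, inport=None):
        servers = sorted(live_servers)  # stable order; the live set may change
        if not servers:
            return None
        if self._last in servers:
            i = (servers.index(self._last) + 1) % len(servers)
        else:
            i = 0  # first call, or the last server went down: restart
        self._last = servers[i]
        return self._last


rr = RoundRobinPicker()
live = {"10.0.0.1": ("mac-h1", 1), "10.0.0.2": ("mac-h2", 2)}
print([rr.pick(live) for _ in range(4)])
# ['10.0.0.1', '10.0.0.2', '10.0.0.1', '10.0.0.2']
```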

For reference, the source of the misc.ip_loadbalancer component used above (pox/misc/ip_loadbalancer.py):

```python
# Copyright 2013,2014 James McCauley
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at:
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

"""
A very sloppy IP load balancer.

Run it with --ip=<Service IP> --servers=IP1,IP2,...

By default, it will do load balancing on the first switch that connects.  If
you want, you can add --dpid=<dpid> to specify a particular switch.

Please submit improvements.
"""

from pox.core import core
import pox
log = core.getLogger("iplb")

from pox.lib.packet.ethernet import ethernet, ETHER_BROADCAST
from pox.lib.packet.ipv4 import ipv4
from pox.lib.packet.arp import arp
from pox.lib.addresses import IPAddr, EthAddr
from pox.lib.util import str_to_bool, dpid_to_str, str_to_dpid

import pox.openflow.libopenflow_01 as of

import time
import random

FLOW_IDLE_TIMEOUT = 10
FLOW_MEMORY_TIMEOUT = 60 * 5


class MemoryEntry (object):
  """
  Record for flows we are balancing

  Table entries in the switch "remember" flows for a period of time, but
  rather than set their expirations to some long value (potentially leading
  to lots of rules for dead connections), we let them expire from the
  switch relatively quickly and remember them here in the controller for
  longer.

  Another tactic would be to increase the timeouts on the switch and use
  the Nicira extension which can match packets with FIN set to remove them
  when the connection closes.
  """
  def __init__ (self, server, first_packet, client_port):
    self.server = server
    self.first_packet = first_packet
    self.client_port = client_port
    self.refresh()

  def refresh (self):
    self.timeout = time.time() + FLOW_MEMORY_TIMEOUT

  @property
  def is_expired (self):
    return time.time() > self.timeout

  @property
  def key1 (self):
    ethp = self.first_packet
    ipp = ethp.find('ipv4')
    tcpp = ethp.find('tcp')

    return ipp.srcip,ipp.dstip,tcpp.srcport,tcpp.dstport

  @property
  def key2 (self):
    ethp = self.first_packet
    ipp = ethp.find('ipv4')
    tcpp = ethp.find('tcp')

    return self.server,ipp.srcip,tcpp.dstport,tcpp.srcport


class iplb (object):
  """
  A simple IP load balancer

  Give it a service_ip and a list of server IP addresses.  New TCP flows
  to service_ip will be randomly redirected to one of the servers.

  We probe the servers to see if they're alive by sending them ARPs.
  """
  def __init__ (self, connection, service_ip, servers = []):
    self.service_ip = IPAddr(service_ip)
    self.servers = [IPAddr(a) for a in servers]
    self.con = connection
    self.mac = self.con.eth_addr
    self.live_servers = {} # IP -> MAC,port

    try:
      self.log = log.getChild(dpid_to_str(self.con.dpid))
    except:
      # Be nice to Python 2.6 (ugh)
      self.log = log

    self.outstanding_probes = {} # IP -> expire_time

    # How quickly do we probe?
    self.probe_cycle_time = 5

    # How long do we wait for an ARP reply before we consider a server dead?
    self.arp_timeout = 3

    # We remember where we directed flows so that if they start up again,
    # we can send them to the same server if it's still up.  Alternate
    # approach: hashing.
    self.memory = {} # (srcip,dstip,srcport,dstport) -> MemoryEntry

    self._do_probe() # Kick off the probing

    # As part of a gross hack, we now do this from elsewhere
    #self.con.addListeners(self)

  def _do_expire (self):
    """
    Expire probes and "memorized" flows

    Each of these should only have a limited lifetime.
    """
    t = time.time()

    # Expire probes
    for ip,expire_at in self.outstanding_probes.items():
      if t > expire_at:
        self.outstanding_probes.pop(ip, None)
        if ip in self.live_servers:
          self.log.warn("Server %s down", ip)
          del self.live_servers[ip]

    # Expire old flows
    c = len(self.memory)
    self.memory = {k:v for k,v in self.memory.items()
                   if not v.is_expired}
    if len(self.memory) != c:
      self.log.debug("Expired %i flows", c-len(self.memory))

  def _do_probe (self):
    """
    Send an ARP to a server to see if it's still up
    """
    self._do_expire()

    server = self.servers.pop(0)
    self.servers.append(server)

    r = arp()
    r.hwtype = r.HW_TYPE_ETHERNET
    r.prototype = r.PROTO_TYPE_IP
    r.opcode = r.REQUEST
    r.hwdst = ETHER_BROADCAST
    r.protodst = server
    r.hwsrc = self.mac
    r.protosrc = self.service_ip
    e = ethernet(type=ethernet.ARP_TYPE, src=self.mac,
                 dst=ETHER_BROADCAST)
    e.set_payload(r)
    #self.log.debug("ARPing for %s", server)
    msg = of.ofp_packet_out()
    msg.data = e.pack()
    msg.actions.append(of.ofp_action_output(port = of.OFPP_FLOOD))
    msg.in_port = of.OFPP_NONE
    self.con.send(msg)

    self.outstanding_probes[server] = time.time() + self.arp_timeout

    core.callDelayed(self._probe_wait_time, self._do_probe)

  @property
  def _probe_wait_time (self):
    """
    Time to wait between probes
    """
    r = self.probe_cycle_time / float(len(self.servers))
    r = max(.25, r) # Cap it at four per second
    return r

  def _pick_server (self, key, inport):
    """
    Pick a server for a (hopefully) new connection
    """
    return random.choice(self.live_servers.keys())

  def _handle_PacketIn (self, event):
    inport = event.port
    packet = event.parsed

    def drop ():
      if event.ofp.buffer_id is not None:
        # Kill the buffer
        msg = of.ofp_packet_out(data = event.ofp)
        self.con.send(msg)
      return None

    tcpp = packet.find('tcp')
    if not tcpp:
      arpp = packet.find('arp')
      if arpp:
        # Handle replies to our server-liveness probes
        if arpp.opcode == arpp.REPLY:
          if arpp.protosrc in self.outstanding_probes:
            # A server is (still?) up; cool.
            del self.outstanding_probes[arpp.protosrc]
            if (self.live_servers.get(arpp.protosrc, (None,None))
                == (arpp.hwsrc,inport)):
              # Ah, nothing new here.
              pass
            else:
              # Ooh, new server.
              self.live_servers[arpp.protosrc] = arpp.hwsrc,inport
              self.log.info("Server %s up", arpp.protosrc)
        return

      # Not TCP and not ARP.  Don't know what to do with this.  Drop it.
      return drop()

    # It's TCP.

    ipp = packet.find('ipv4')

    if ipp.srcip in self.servers:
      # It's FROM one of our balanced servers.
      # Rewrite it BACK to the client

      key = ipp.srcip,ipp.dstip,tcpp.srcport,tcpp.dstport
      entry = self.memory.get(key)

      if entry is None:
        # We either didn't install it, or we forgot about it.
        self.log.debug("No client for %s", key)
        return drop()

      # Refresh time timeout and reinstall.
      entry.refresh()

      #self.log.debug("Install reverse flow for %s", key)

      # Install reverse table entry
      mac,port = self.live_servers[entry.server]

      actions = []
      actions.append(of.ofp_action_dl_addr.set_src(self.mac))
      actions.append(of.ofp_action_nw_addr.set_src(self.service_ip))
      actions.append(of.ofp_action_output(port = entry.client_port))
      match = of.ofp_match.from_packet(packet, inport)

      msg = of.ofp_flow_mod(command=of.OFPFC_ADD,
                            idle_timeout=FLOW_IDLE_TIMEOUT,
                            hard_timeout=of.OFP_FLOW_PERMANENT,
                            data=event.ofp,
                            actions=actions,
                            match=match)
      self.con.send(msg)

    elif ipp.dstip == self.service_ip:
      # Ah, it's for our service IP and needs to be load balanced

      # Do we already know this flow?
      key = ipp.srcip,ipp.dstip,tcpp.srcport,tcpp.dstport
      entry = self.memory.get(key)
      if entry is None or entry.server not in self.live_servers:
        # Don't know it (hopefully it's new!)
        if len(self.live_servers) == 0:
          self.log.warn("No servers!")
          return drop()

        # Pick a server for this flow
        server = self._pick_server(key, inport)
        self.log.debug("Directing traffic to %s", server)
        entry = MemoryEntry(server, packet, inport)
        self.memory[entry.key1] = entry
        self.memory[entry.key2] = entry

      # Update timestamp
      entry.refresh()

      # Set up table entry towards selected server
      mac,port = self.live_servers[entry.server]

      actions = []
      actions.append(of.ofp_action_dl_addr.set_dst(mac))
      actions.append(of.ofp_action_nw_addr.set_dst(entry.server))
      actions.append(of.ofp_action_output(port = port))
      match = of.ofp_match.from_packet(packet, inport)

      msg = of.ofp_flow_mod(command=of.OFPFC_ADD,
                            idle_timeout=FLOW_IDLE_TIMEOUT,
                            hard_timeout=of.OFP_FLOW_PERMANENT,
                            data=event.ofp,
                            actions=actions,
                            match=match)
      self.con.send(msg)


_dpid = None

def launch (ip, servers, dpid = None):
  global _dpid
  if dpid is not None:
    _dpid = str_to_dpid(dpid)

  servers = servers.replace(","," ").split()
  servers = [IPAddr(x) for x in servers]
  ip = IPAddr(ip)

  from proto.arp_responder import ARPResponder
  old_pi = ARPResponder._handle_PacketIn
  def new_pi (self, event):
    if event.dpid == _dpid:
      # Yes, the packet-in is on the right switch
      return old_pi(self, event)
  ARPResponder._handle_PacketIn = new_pi

  from proto.arp_responder import launch as arp_launch
  arp_launch(eat_packets=False,**{str(ip):True})
  import logging
  logging.getLogger("proto.arp_responder").setLevel(logging.WARN)

  def _handle_ConnectionUp (event):
    global _dpid
    if _dpid is None:
      _dpid = event.dpid

    if _dpid != event.dpid:
      log.warn("Ignoring switch %s", event.connection)
    else:
      if not core.hasComponent('iplb'):
        # Need to initialize first...
        core.registerNew(iplb, event.connection, IPAddr(ip), servers)
        log.info("IP Load Balancer Ready.")
      log.info("Load Balancing on %s", event.connection)

      # Gross hack
      core.iplb.con = event.connection
      event.connection.addListeners(core.iplb)

  core.openflow.addListenerByName("ConnectionUp", _handle_ConnectionUp)
```


Original post: https://www.cnblogs.com/Horizon-asd/p/14049754.html