## What each package is for

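```python
import jieba                          # Chinese word segmentation
from wordcloud import WordCloud       # word-cloud generation
from PIL import Image                 # image loading
import numpy as np                    # convert the image into an array
import collections                    # Counter for word frequencies
from matplotlib import pyplot as plt  # plotting
import sqlite3                        # database access
```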

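```python
# Fetch the short-review text
def get_data(db_name, sql):
    # Connect to the database
    conn = sqlite3.connect(db_name)
    # Get a cursor
    cursor = conn.cursor()
    # Execute the SQL statement
    data = cursor.execute(sql)
    text = ""
    # Concatenate every review into one string, separated by spaces
    for item in data:
        text += item[0] + " "
    cursor.close()
    # Close the database
    conn.close()
    return text
```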
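The loop in `get_data` can also be written with `str.join` and `contextlib.closing`. This version closes the connection even if the query raises. sqlite3's own `with conn:` block only commits or rolls back the transaction and does not close the connection. The sketch below is a minimal one. The function name `get_data_joined`, the temporary database and the `movie1` table with its two reviews are all made up for illustration.

```python
import os
import sqlite3
import tempfile
from contextlib import closing


def get_data_joined(db_name, sql):
    """Hypothetical variant of get_data: same output, but the connection is
    closed even if the query raises, and the rows are joined in one step."""
    with closing(sqlite3.connect(db_name)) as conn:
        rows = conn.execute(sql).fetchall()
    # Same format as the original loop: each review followed by one space
    return "".join(row[0] + " " for row in rows)


# Demo with a throwaway database file and a made-up movie1 table
with tempfile.TemporaryDirectory() as tmp_dir:
    db_path = os.path.join(tmp_dir, "demo.db")
    with closing(sqlite3.connect(db_path)) as conn:
        conn.execute("create table movie1 (info text)")
        conn.executemany("insert into movie1 values (?)", [("好看",), ("剧情不错",)])
        conn.commit()
    text = get_data_joined(db_path, "select info from movie1")

print(repr(text))  # → '好看 剧情不错 '
```

Building a list and joining it once avoids creating a new string on every `+=`. With 250 tables of reviews, that saving adds up.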

```python
def cut_word(text):
    # Segment the text. cut_all=False: accurate mode;
    # HMM=True: use the Hidden Markov Model to recognise words not in the dictionary
    cut = jieba.cut(text, cut_all=False, HMM=True)
    object_list = []
    # Read the stop words (one per line)
    with open("stop_word.txt", 'r', encoding='UTF-8') as meaninglessFile:
        stopwords = set(meaninglessFile.read().split('\n'))
    stopwords.add(' ')
    # Keep a word only if it is not a stop word
    for word in cut:
        if word not in stopwords:
            object_list.append(word)
    # collections.Counter counts how often each word occurs
    word_counts = collections.Counter(object_list)
    print(word_counts)
    return word_counts
```

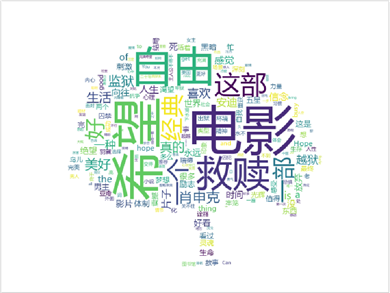
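You can try the filtering and counting steps of `cut_word` without jieba. The sketch below assumes the segmentation result is already a list of tokens; the tokens are made up. It also uses two hypothetical helpers, `load_stopwords` and `count_words`. It differs slightly from `cut_word` in two ways:

- It strips each stop-word line, so a stray trailing space in `stop_word.txt` does not let that stop word slip through.
- It drops every whitespace-only token, not just `' '`.

`Counter.most_common` then shows the top words.

```python
import collections
import io


def load_stopwords(f):
    """Read one stop word per line; strip whitespace and drop blank lines."""
    words = {line.strip() for line in f}
    words.discard("")
    return words


def count_words(tokens, stopwords):
    """Hypothetical helper: count tokens that are neither stop words
    nor whitespace-only."""
    return collections.Counter(t for t in tokens if t.strip() and t not in stopwords)


# Stand-in for stop_word.txt; note the trailing space after "了"
stopwords = load_stopwords(io.StringIO("的\n了 \n是\n"))

# Pretend output of jieba.cut(...) for a few reviews (made-up tokens)
tokens = ["电影", "的", "剧情", " ", "了", "电影", "好看", "是", "电影"]
word_counts = count_words(tokens, stopwords)
print(word_counts.most_common(2))  # → [('电影', 3), ('剧情', 1)]
```

When counts are equal, `most_common` keeps the order in which the words were first seen. That is why `剧情` comes before `好看`.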

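```python
def get_cloud(word_counts, i):
    # Mask image: must have a white background (pure-white pixels are left empty)
    img = Image.open(r'./img/tree.jpg')
    img_array = np.array(img)  # convert the image into an array
    wc = WordCloud(
        background_color='white',  # background colour
        mask=img_array,            # mask image
        font_path='msyh.ttc'       # font (Microsoft YaHei, needed to render Chinese)
    )
    wc.generate_from_frequencies(word_counts)  # build the word cloud from the counts
    fig = plt.figure(1)
    plt.imshow(wc)   # draw the word cloud
    plt.axis('off')  # hide the axes
    # plt.show()
    # Shrink the margins
    plt.subplots_adjust(top=0.99, bottom=0.01, right=0.99, left=0.01, hspace=0, wspace=0)
    # Save the image
    plt.savefig(r'./movie_img/movie{0}.jpg'.format(i), dpi=500)
    # Close the figure so images do not pile up in memory across calls
    plt.close(fig)
```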
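`plt.savefig` writes into `./movie_img`, but it does not create that folder. If the folder is missing, the call raises an error. Below is a minimal sketch that creates the folder on first use and builds the same `movie{i}.jpg` name. The helper `output_path` is hypothetical, and the demo runs in a temporary directory.

```python
import os
import tempfile


def output_path(i, out_dir="./movie_img"):
    """Hypothetical helper: make sure out_dir exists and return the file
    name get_cloud would save to for movie number i."""
    os.makedirs(out_dir, exist_ok=True)  # no error if the folder already exists
    return os.path.join(out_dir, "movie{0}.jpg".format(i))


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "movie_img")
    name = os.path.basename(output_path(7, target))
    created = os.path.isdir(target)

print(name)     # → movie7.jpg
print(created)  # → True
```

Inside `get_cloud`, you could then call `plt.savefig(output_path(i), dpi=500)`.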

```python
# -*- coding: utf-8 -*-
# @Time    : 2020/11/14 22:16
# @Author  : 杨晓
# @File    : testCloud.py
# @Software: PyCharm

# Entry point of testCloud.py; get_data, cut_word and get_cloud are the functions defined above
if __name__ == '__main__':
    for i in range(1, 251):
        # Build the query: the reviews of movie i are in table movie{i} (movie1 to movie250)
        sql = "select info from movie" + str(i)
        text = get_data('duanping', sql)  # 'duanping' is the SQLite database file
        word_counts = cut_word(text)
        get_cloud(word_counts, i)
```
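The query is built by string concatenation because SQLite does not accept a table name as a `?` parameter. When the names come from a loop counter this is harmless. However, a single missing table stops the whole 250-movie run. A defensive sketch checks each name against `sqlite_master` first and skips missing tables. The helper `existing_tables` and the in-memory demo database are assumptions for illustration.

```python
import sqlite3


def existing_tables(conn):
    """Return the set of table names in the database (hypothetical helper)."""
    rows = conn.execute("select name from sqlite_master where type = 'table'")
    return {name for (name,) in rows}


# Demo database: only movie1 and movie3 exist, movie2 is missing
conn = sqlite3.connect(":memory:")
for name in ("movie1", "movie3"):
    conn.execute("create table {0} (info text)".format(name))
    conn.execute("insert into {0} values (?)".format(name), ("不错",))

tables = existing_tables(conn)
review_counts = {}
for i in range(1, 4):
    table = "movie" + str(i)
    if table not in tables:
        # Querying it anyway would raise sqlite3.OperationalError: no such table
        print("skipping", table)
        continue
    rows = conn.execute("select info from " + table).fetchall()
    review_counts[table] = len(rows)
conn.close()

print(review_counts)  # → {'movie1': 1, 'movie3': 1}
```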

Original post: https://www.cnblogs.com/yangxiao-/p/14091094.html