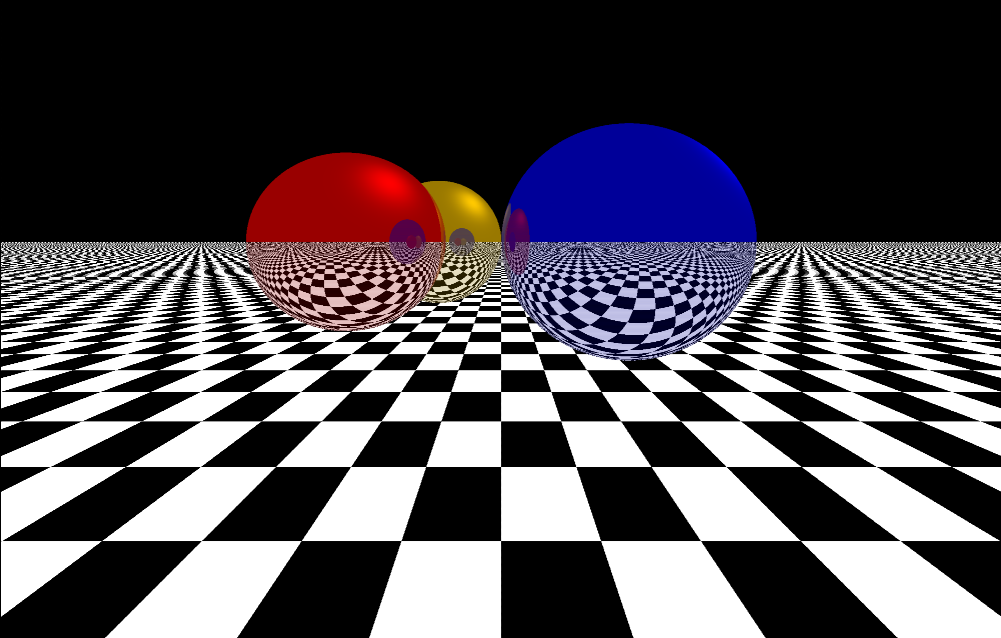

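Ray tracing is a method for displaying objects realistically; it was proposed by Appel in 1968. It traces light backward, opposite to the direction in which rays arrive at the viewpoint. For every pixel on the screen it finds the point P0 where the line of sight meets an object surface. It then keeps tracing from P0 to find all the light sources that affect its intensity, and from these computes an accurate light intensity at P0. In material editors it is often used to render mirror-like (specular) effects. Ray tracing (also called light-path tracing) is one of the core algorithms of computer graphics. Conceptually, rays are emitted from the light sources, and each time they strike an object surface they are transformed according to the laws of physical optics. Eventually the rays reach the film of a virtual camera and the image is formed. Tracing backward from the eye follows these same paths in reverse, so only the rays that actually reach the camera need to be computed.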
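
The first step described above, casting a ray from the viewpoint through a pixel and finding the nearest surface point P0, can be sketched as follows. This is a minimal illustration under stated assumptions, not the author's script: spheres are the only objects, and the names `intersectSphere` and `castRay` and the `{center, radius}` object format are hypothetical.

```javascript
// Hypothetical helpers for illustration only (not the author's code).
function sub(a, b) { return [a[0] - b[0], a[1] - b[1], a[2] - b[2]]; }
function dot(a, b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

// Smallest t >= 0 with |origin + t*dir - center| = radius, or null on a miss.
function intersectSphere(origin, dir, sphere) {
  const oc = sub(origin, sphere.center);
  const a = dot(dir, dir);
  const b = 2 * dot(dir, oc);
  const c = dot(oc, oc) - sphere.radius * sphere.radius;
  const disc = b * b - 4 * a * c;
  if (disc < 0) return null;                 // the ray misses the sphere
  const sq = Math.sqrt(disc);
  const t1 = (-b - sq) / (2 * a);            // nearer root (a > 0)
  const t2 = (-b + sq) / (2 * a);
  if (t1 >= 0) return t1;
  if (t2 >= 0) return t2;                    // origin is inside the sphere
  return null;                               // sphere is entirely behind the origin
}

// Trace the ray from eye through pixelPoint; return {sphere, point} for the closest hit P0.
function castRay(eye, pixelPoint, spheres) {
  const dir = sub(pixelPoint, eye);
  let best = null;
  for (const sphere of spheres) {
    const t = intersectSphere(eye, dir, sphere);
    if (t !== null && (best === null || t < best.t)) best = { t, sphere };
  }
  if (best === null) return null;            // background
  const point = [eye[0] + best.t * dir[0], eye[1] + best.t * dir[1], eye[2] + best.t * dir[2]];
  return { sphere: best.sphere, point };
}
```

For example, `castRay([0, 0, 0], [0, 0, 1], [{ center: [0, 0, 5], radius: 1 }])` returns an object whose `point` is `[0, 0, 4]`, the front of the sphere. Computing the light intensity at P0 would then start further rays from that point toward the light sources.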

With that, let's implement a ray-tracing effect (truth be told, I was too lazy to write comments, doge).

<script>

document.body.style.background="#000";

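// Draw into <canvas id="CANVAS">. If the page has none, create one; the 1000x700 size is an
// assumption chosen to match dem=[700,1000] below, which sets the area the render loop paints.
var canvas=document.getElementById("CANVAS");
if(!canvas){
canvas=document.createElement("canvas");
canvas.id="CANVAS";
canvas.width=1000;
canvas.height=700;
document.body.appendChild(canvas);
}
var C=canvas.getContext("2d");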

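// Arrow keys: up/down change cinemaZ, left/right change q. Both are declared here (initial
// values of 0 are assumed); left undeclared, cinemaZ+=10 would throw a ReferenceError.
// The render below runs once and never reads them, so the keys have no visible effect yet.
var cinemaZ=0, q=0;
document.onkeydown=function(event){
if(event.key=="ArrowUp") cinemaZ+=10;
else if(event.key=="ArrowDown") cinemaZ-=10;
else if(event.key=="ArrowLeft") q-=.005;
else if(event.key=="ArrowRight") q+=.005;
};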

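// Ray-sphere intersection. The ray is P(t) = e + t*(s - e), with origin e = (ex, ey, ez) and a
// second point s = (sx, sy, sz); the sphere has center (x, y, z) and radius r.
// Substituting P(t) into |P - center|^2 = r^2 gives a*t^2 + b*t + c = 0.
// Returns {t, nx, ny, nz} for the nearer root, where (nx, ny, nz) = hit point - center
// (an unnormalized outward normal), or null if the ray misses or that root lies behind e.
function sphere_hit(x,y,z,r,ex,ey,ez,sx,sy,sz){
var dx=sx-ex, dy=sy-ey, dz=sz-ez; // ray direction d = s - e
var ox=ex-x, oy=ey-y, oz=ez-z;    // ray origin relative to the center
var a=dx*dx+dy*dy+dz*dz;
var b=2*(dx*ox+dy*oy+dz*oz);
var c=ox*ox+oy*oy+oz*oz-r*r;
var disc=b*b-4*a*c;               // discriminant
if(disc<0) return null;           // the ray misses the sphere
var tt=(-b-Math.sqrt(disc))/(2*a); // nearer root, since a > 0
// A negative nearer root means the sphere is behind e or e is inside it. This also stops a
// reflected ray that leaves this sphere's surface from hitting the same sphere again.
if(tt<0) return null;
return {t:tt, nx:ox+dx*tt, ny:oy+dy*tt, nz:oz+dz*tt};
}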

// Scale a 3-vector to unit length.
function unitization(vector){
var len=Math.sqrt(vector[0]*vector[0]+vector[1]*vector[1]+vector[2]*vector[2]);
return [vector[0]/len,vector[1]/len,vector[2]/len];
}

// Dot (inner) product of two 3-vectors.
function transvection(v1,v2){
return v1[0]*v2[0]+v1[1]*v2[1]+v1[2]*v2[2];
}

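/*
************ Scene objects ************
1-3. Spheres, stored in obj as [center [x, y, z], radius, color [r, g, b]]
4.   Ground plane, not stored in obj; detection() tests it separately
************ Scene objects ************
*/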

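var plateN=unitization([0,-1,0]); // normal of the ground plane (reflect() ignores its sign)
var obj=[
[[-500,-300,250],300,[255,0,0]],
[[-300,-300,700],300,[255,200,0]],
[[300,-300,15],300,[0,0,255]]
];
var eye=[0,-300,-800];            // camera (eye) position
var screenZ=-500;                 // z of the image plane the primary rays pass through
var dem=[700,1000];               // image size in pixels: [height, width]
var directal_light=unitization([1/1.732,1/1.732,-1/1.732]); // unit direction toward the light
var envir_col=[0.6,0.6,0.6];      // ambient share of each RGB channel
var RAY_TRA=3;                    // ray segments per pixel: 1 primary ray + up to 2 reflections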

// Find the first thing the ray from e through s hits. Returns
//   {n, t}: index n into obj and ray parameter t of the nearest sphere hit, or
//   [x, y, z]: the hit point on the ground plane when no sphere is hit, or
//   null when the ray hits nothing (background).
function detection(e,s){
var nearest={t:Infinity,n:null};
for(var io=0;io<obj.length;io++){
var o=obj[io];
var hit=sphere_hit(o[0][0],o[0][1],o[0][2],o[1],e[0],e[1],e[2],s[0],s[1],s[2]);
if(hit!=null && hit.t<nearest.t){nearest.n=io;nearest.t=hit.t;}
}
if(nearest.n!=null) return nearest;
// No sphere hit, so test the ground plane. Assumption: the floor is the plane y = FLOOR_Y
// on which the spheres rest (center y -300, radius 300). The spheres lie on its +y side,
// so whenever a ray hits a sphere, that hit comes before the floor along the ray.
var FLOOR_Y=-600;
var dy=s[1]-e[1];
if(dy==0) return null;              // ray parallel to the floor
var pt=(FLOOR_Y-e[1])/dy;           // ray parameter where y = FLOOR_Y
if(pt<=1e-6) return null;           // floor is behind the origin, or the ray starts on it
return [e[0]+pt*(s[0]-e[0]),FLOOR_Y,e[2]+pt*(s[2]-e[2])];
}

// Mirror-reflect direction l about normal n: r = l - 2(l·n)n, with both normalized first
// (so the sign of n does not matter).
function reflect(l,n){
n=unitization(n);
l=unitization(l);
var co=transvection(l,n);
return [l[0]-2*co*n[0],l[1]-2*co*n[1],l[2]-2*co*n[2]];
}

// Render: one primary ray per canvas pixel, followed along up to RAY_TRA-1 mirror reflections.
// Each segment is painted over the pixel with opacity rt/RAY_TRA, so later reflections show more faintly.
for(var py=0;py<dem[0];py++){
for(var px=0;px<dem[1];px++){
var e=eye;                          // ray origin
var s=[px-dem[1]/2,-py,screenZ];    // point on the image plane for this pixel
for(var rt=RAY_TRA;rt>0;rt--){
var point=detection(e,s);
if(point==null){
// Nothing hit: paint the black background for the primary ray, then stop tracing.
if(rt==RAY_TRA){C.fillStyle="rgb(0,0,0)";C.fillRect(px,py,1,1);}
break;
}
var alpha=rt/RAY_TRA;
var l=[s[0]-e[0],s[1]-e[1],s[2]-e[2]]; // current ray direction
var hitPoint,normal;
if(point[2]!=null){ // ground plane: black/white checkerboard with a 200-unit period
var aaa=Math.floor(Math.sin(point[0]*2*Math.PI/200)+1);
var bbb=Math.floor(Math.sin(point[2]*2*Math.PI/200)+1);
C.fillStyle=(aaa==bbb)?"rgba(0,0,0,"+alpha+")":"rgba(255,255,255,"+alpha+")";
hitPoint=point;
normal=plateN;
}else{ // sphere number point.n, hit at ray parameter point.t
var rgb=obj[point.n][2];
var p0=obj[point.n][0];
hitPoint=[e[0]+point.t*l[0],e[1]+point.t*l[1],e[2]+point.t*l[2]];
normal=unitization([hitPoint[0]-p0[0],hitPoint[1]-p0[1],hitPoint[2]-p0[2]]);
// Shading: ambient share envir_col plus a sharp highlight (n·L)^31, clamped at 0 for surfaces
// facing away from the light (a simple highlight term, not the classic Phong specular (R·V)^n).
var co=Math.pow(Math.max(0,transvection(normal,directal_light)),31);
var sur_col=[0,1,2].map(function(k){
return Math.min(255,Math.round((1-envir_col[k])*co*rgb[k]+envir_col[k]*rgb[k]));
});
C.fillStyle="rgba("+sur_col[0]+","+sur_col[1]+","+sur_col[2]+","+alpha+")";
}
C.fillRect(px,py,1,1);
// Continue along the mirror reflection, starting from the hit point.
var rr=reflect(l,normal);
e=hitPoint;
s=[e[0]+rr[0],e[1]+rr[1],e[2]+rr[2]];
}
}
}

</script>
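
After each hit, the script keeps tracing along the mirror reflection of the ray, which its `reflect` function computes as r = l - 2(l·n)n with l and n normalized; this is where the mirror effect mentioned at the top comes from. The standalone sketch below reproduces only that formula; `reflectDir`, `normalize` and `dot` are hypothetical names chosen for this example.

```javascript
// Standalone sketch of the mirror-reflection step (hypothetical helper names):
// r = d - 2 (d·n) n, with d and n normalized first.
function normalize(v) {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
}
function dot(a, b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

function reflectDir(d, n) {
  const u = normalize(d);
  const m = normalize(n);
  const k = 2 * dot(u, m);
  return [u[0] - k * m[0], u[1] - k * m[1], u[2] - k * m[2]];
}

// A ray heading down and forward bounces off a floor whose normal is +y.
const r = reflectDir([0, -1, 1], [0, 1, 0]);
console.log(r); // ≈ [0, 0.7071, 0.7071]: y flips sign, x and z keep the normalized input's values
```

The reflected direction makes the same angle with the normal as the incoming one (dot(r, n) equals -dot(d, n) up to rounding), and because n only appears in the product (d·n)n, flipping its sign gives the same result.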

Original article: https://www.cnblogs.com/yumingle/p/14129213.html