Starting Kylin from Ambari failed with an error roughly like the following:

```text
stderr:
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/KYLIN/package/scripts/kylin_query.py", line 74, in <module>
    KylinQuery().execute()
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 352, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/KYLIN/package/scripts/kylin_query.py", line 53, in start
    Execute(cmd, user='hdfs')
  File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 166, in __init__
    self.env.run()
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 263, in action_run
    returns=self.resource.returns)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 72, in inner
    result = function(command, **kwargs)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 102, in checked_call
    tries=tries, try_sleep=try_sleep, timeout_kill_strategy=timeout_kill_strategy, returns=returns)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 150, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 314, in _call
    raise ExecutionFailed(err_msg, code, out, err)
resource_management.core.exceptions.ExecutionFailed: Execution of '. /var/lib/ambari-agent/tmp/kylin_env.rc;/usr/hdp/3.0.1.0-187/kylin/bin/kylin.sh start;cp -rf /usr/hdp/3.0.1.0-187/kylin/pid /var/run/kylin/kylin.pid' returned 1. Retrieving hadoop conf dir...
...................................................[PASS]
KYLIN_HOME is set to /usr/hdp/3.0.1.0-187/kylin
Checking HBase
...................................................[PASS]
Checking hive
...................................................[PASS]
Checking hadoop shell
...................................................[PASS]
Checking hdfs working dir
...................................................[PASS]
Retrieving Spark dependency...
...................................................[PASS]
Retrieving Flink dependency...
Optional dependency flink not found, if you need this; set FLINK_HOME, or run bin/download-flink.sh
...................................................[PASS]
Retrieving kafka dependency...
Couldn't find kafka home. If you want to enable streaming processing, Please set KAFKA_HOME to the path which contains kafka dependencies.
...................................................[PASS]
Checking environment finished successfully. To check again, run 'bin/check-env.sh' manually.
Retrieving hive dependency...
Something wrong with Hive CLI or Beeline, please execute Hive CLI or Beeline CLI in terminal to find the root cause.
cp: cannot stat ‘/usr/hdp/3.0.1.0-187/kylin/pid’: No such file or directory
```
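In output like this, the last line is not necessarily the cause: the `cp` of the pid file only fails because `kylin.sh start` stopped earlier. As a minimal sketch (not part of Kylin or Ambari), the Python function below returns the first line that matches a known failure message. The marker list is a hypothetical choice based only on the messages in this log; it strips ANSI colour codes first because kylin.sh prints coloured `PASS` markers.

```python
import re
from typing import Optional

# ANSI colour sequences such as "\x1b[32m" and "\x1b[0m" that kylin.sh prints.
ANSI_RE = re.compile(r"\x1b\[[0-9;]*m")

# Hypothetical failure markers, taken from the messages seen in the log above.
FAILURE_MARKERS = (
    "Something wrong with Hive CLI or Beeline",
    "cannot stat",
)


def first_failure(log: str) -> Optional[str]:
    """Return the first (colour-stripped) line containing a failure marker, or None."""
    for raw_line in log.splitlines():
        line = ANSI_RE.sub("", raw_line).strip()
        if any(marker in line for marker in FAILURE_MARKERS):
            return line
    return None
```

Applied to the log above, it reports the Hive/Beeline message rather than the later `cp` error, which is where troubleshooting should start.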

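This is probably a permissions problem that keeps Kylin from starting. Ambari runs the start command as the hdfs user (`user='hdfs'` in the traceback), and kylin.sh aborts at the "Retrieving hive dependency" step, so no pid file is written and the trailing `cp` fails. Try granting the hdfs user privileges on Hive, then run Kylin's sample script as hdfs: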

```shell
[root@worker kylin]# su hdfs
[hdfs@worker kylin]$ /usr/hdp/3.0.1.0-187/kylin/bin/sample.sh
```
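The kylin.sh message itself suggests running Hive CLI or Beeline directly to find the root cause. A minimal Python sketch of that check follows, assuming `sudo` is available and the `hive` command is on the hdfs user's PATH; `show databases;` is just an arbitrary trivial query, and both helper names are hypothetical.

```python
import subprocess
from typing import List


def hive_smoke_test_cmd(user: str = "hdfs", query: str = "show databases;") -> List[str]:
    """Build a command that runs a trivial query through the Hive CLI as `user`.

    Assumes sudo is installed and `hive` is on the target user's PATH.
    """
    return ["sudo", "-u", user, "hive", "-e", query]


def run_smoke_test(user: str = "hdfs") -> subprocess.CompletedProcess:
    """Run the smoke test and capture stdout/stderr as text for inspection."""
    return subprocess.run(
        hive_smoke_test_cmd(user),
        capture_output=True,
        text=True,
        check=False,  # don't raise on failure; inspect returncode and stderr instead
    )
```

Call `run_smoke_test()` on the Kylin host and look at `returncode` and `stderr`: a non-zero exit with an authorization or permission error likely points to the same problem kylin.sh hit.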

If running sample.sh fails again with the following error (see the screenshots):

`Cannot modify hive.security.authorization.sqlstd.confwhitelist.append at runtime. It is not in list of params that are allowed to be modified at runtime (state=08S01,code=0)`

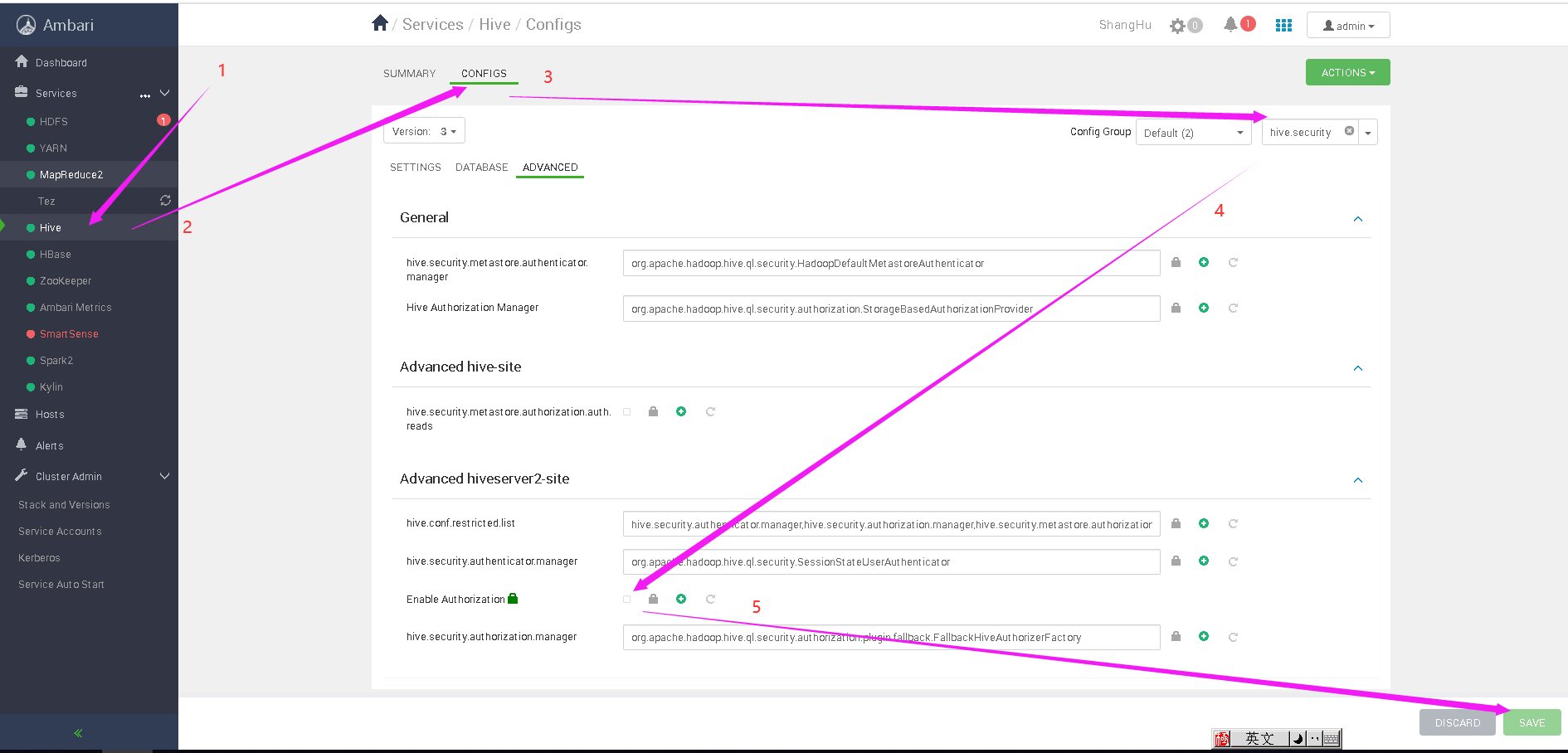

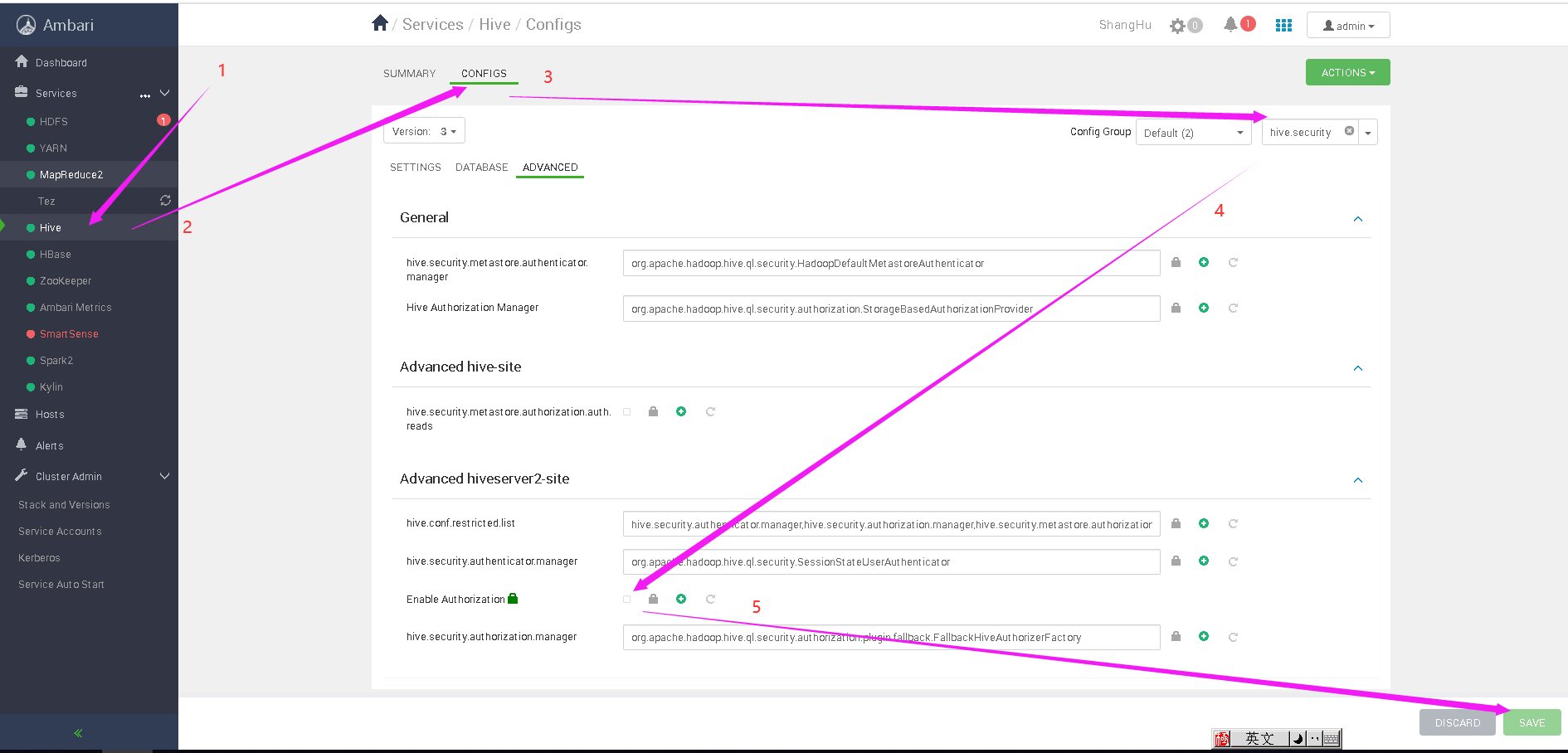

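In that case, open Hive from the Services menu in Ambari, go to CONFIGS on the right, type hive.security into the Filter box, and clear the checkbox next to Enable Authorization, as shown in the screenshot.

Click Save (and restart Hive if Ambari flags it as needing a restart), then re-run /usr/hdp/3.0.1.0-187/kylin/bin/sample.sh as the hdfs user. This should resolve the error.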
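If you capture the output of the sample.sh re-run (for example by redirecting it to a file), a minimal Python sketch can flag this specific failure and name the parameter Hive rejected. The regular expression is an assumption based only on the message text quoted above, and `blocked_hive_param` is a hypothetical helper, not part of Hive or Kylin.

```python
import re
from typing import Optional

# Matches Hive's "Cannot modify <param> at runtime" message quoted above.
RUNTIME_MODIFY_RE = re.compile(r"Cannot modify (?P<param>\S+) at runtime")


def blocked_hive_param(output: str) -> Optional[str]:
    """Return the name of the parameter Hive refused to set at runtime, or None."""
    match = RUNTIME_MODIFY_RE.search(output)
    return match.group("param") if match else None
```

A `None` result only means this particular error did not appear; it does not by itself show that sample.sh succeeded.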

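The "Cannot modify hive.security.authorization.sqlstd.confwhitelist.append at runtime" error is actually the one raised in the previous step, when the hdfs user runs /usr/hdp/3.0.1.0-187/kylin/bin/sample.sh; it is called out separately here to make it easier to find.

Solution: the same as above. In Ambari, open Hive from the Services menu, go to CONFIGS, type hive.security into the Filter box, clear the checkbox next to Enable Authorization, click Save, and re-run /usr/hdp/3.0.1.0-187/kylin/bin/sample.sh as the hdfs user.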

Original article: https://www.cnblogs.com/zh672903/p/14220509.html