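To analyze silent users, this week's returning users, churned users, users active for the last 3 consecutive weeks, and users active on 3 consecutive days within the last 7 days, first prepare data for 2019-02-12 and 2019-02-20.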

dt.sh 2019-02-12

cluster.sh start

lg.sh

ods_log.sh 2019-02-12

dwd_start_log.sh 2019-02-12

dwd_base_log.sh 2019-02-12

dwd_event_log.sh 2019-02-12

dws_uv_log.sh 2019-02-12

select * from dws_uv_detail_day where dt='2019-02-12' limit 2;

dt.sh 2019-02-20

cluster.sh start

lg.sh

ods_log.sh 2019-02-20

dwd_start_log.sh 2019-02-20

dwd_base_log.sh 2019-02-20

dwd_event_log.sh 2019-02-20

dws_uv_log.sh 2019-02-20

select * from dws_uv_detail_day where dt='2019-02-20' limit 2;
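The two preparation runs above differ only in the date, so they can be expressed as a loop. The following is a minimal sketch, not part of the original tutorial: the `step` and `prepare_day` helpers and the `RUN` switch are hypothetical names introduced here, and the sketch assumes every script is on the `PATH` and blocks until its work is finished, which may not hold for log generation and collection.

```shell
#!/bin/bash
# Sketch only: replays the preparation steps listed above for each date.
# RUN=1 executes the commands; by default they are only printed (dry run).
RUN="${RUN:-0}"

# Hypothetical helper: print a command, and run it when RUN=1.
step() {
    echo "+ $*"
    if [ "$RUN" = "1" ]; then
        "$@"
    fi
}

# Hypothetical helper: the per-date pipeline, in the order shown above.
prepare_day() {
    local day="$1"
    step dt.sh "$day"            # set the cluster date
    step cluster.sh start        # start the cluster services
    step lg.sh                   # generate logs
    step ods_log.sh "$day"
    step dwd_start_log.sh "$day"
    step dwd_base_log.sh "$day"
    step dwd_event_log.sh "$day"
    step dws_uv_log.sh "$day"
}

for day in 2019-02-12 2019-02-20; do
    prepare_day "$day"
done
```

Running it without `RUN=1` only lists the commands, which is a cheap way to check the order before touching the cluster.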

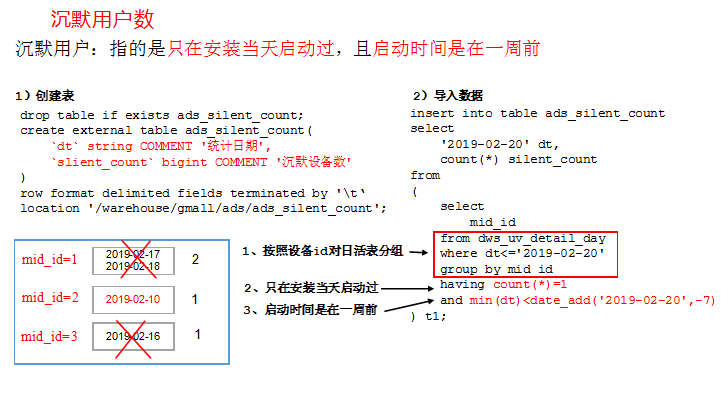

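Silent users: devices that were launched only on the day of installation, with that launch more than a week before the statistics date.

Use the daily active detail table dws_uv_detail_day as the DWS-layer data.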

drop table if exists ads_silent_count;

create external table ads_silent_count(

`dt` string COMMENT 'statistics date',

`silent_count` bigint COMMENT 'number of silent devices'

)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_silent_count';

insert into table ads_silent_count

select

'2019-02-20' dt,

count(*) silent_count

from

(

select mid_id

from dws_uv_detail_day

where dt<='2019-02-20'

group by mid_id

having count(*)=1 and max(dt)<date_add('2019-02-20',-7)

) t1;

select * from ads_silent_count;

[kgg@hadoop102 bin]$ vim ads_silent_log.sh

Write the script content in this file.
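The script body is not shown here. As a rough guide, the following is a minimal sketch of what such a script might look like, built around the silent-user query above. It is not the author's script: the `build_silent_sql` function and the `APP` variable are hypothetical, the database name `gmall` is an assumption inferred from the warehouse path, and the default date relies on GNU `date`.

```shell
#!/bin/bash
# Hypothetical sketch of ads_silent_log.sh; not the original script.

APP=gmall   # assumption: Hive database name, inferred from /warehouse/gmall

# Print the insert statement for the given statistics date.
build_silent_sql() {
    local do_date="$1"
    cat <<EOF
insert into table ${APP}.ads_silent_count
select
    '${do_date}' dt,
    count(*) silent_count
from
(
    select mid_id
    from ${APP}.dws_uv_detail_day
    where dt<='${do_date}'
    group by mid_id
    having count(*)=1 and max(dt)<date_add('${do_date}',-7)
) t1;
EOF
}

# Use the first argument as the date, or default to yesterday (GNU date).
if [ -n "${1:-}" ]; then
    do_date="$1"
else
    do_date=$(date -d "1 day ago" +%F)
fi

sql=$(build_silent_sql "$do_date")

# Run the statement when hive is on the PATH; otherwise just print it.
if command -v hive >/dev/null 2>&1; then
    hive -e "$sql"
else
    echo "$sql"
fi
```

Keeping the date as a parameter lets the same script serve both manual backfills and a scheduled daily run.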

chmod 777 ads_silent_log.sh

ads_silent_log.sh 2019-02-20

select * from ads_silent_count;

In production, this script is typically scheduled to run daily between 00:30 and 01:00.

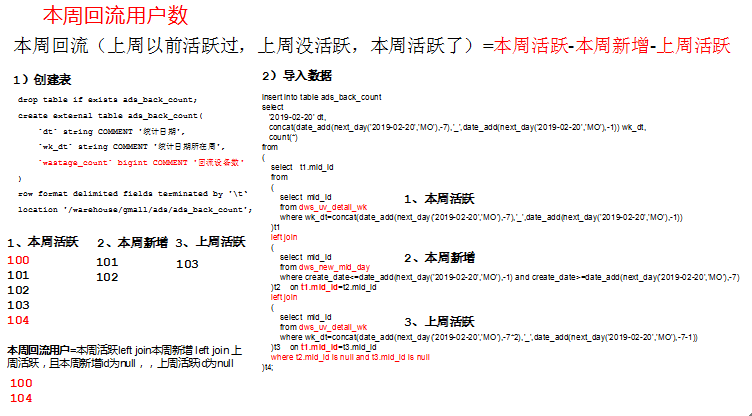

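Returning users this week = active this week - new this week - active last week

Use the weekly active detail table dws_uv_detail_wk and the daily new-device table dws_new_mid_day as the DWS-layer data.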
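The subtraction in this formula is a set difference over device ids: keep the devices active this week that are neither new this week nor active last week. A small sketch with made-up ids (the file names and ids below are purely illustrative, not data from the project):

```shell
#!/bin/bash
# Toy device-id lists, one id per line (invented for illustration).
work=$(mktemp -d)
printf '%s\n' m1 m2 m3 m4 > "$work/active_this_week"
printf '%s\n' m2          > "$work/new_this_week"
printf '%s\n' m3 m5       > "$work/active_last_week"

# Ids to exclude: new this week, or active last week.
cat "$work/new_this_week" "$work/active_last_week" > "$work/exclude"

# Keep ids active this week that exactly match no excluded id.
back=$(grep -vxF -f "$work/exclude" "$work/active_this_week")
echo "$back"    # prints m1 and m4, one per line

rm -rf "$work"
```

In Hive the same effect is usually obtained with a left join followed by an `is null` filter on the joined key.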

drop table if exists ads_back_count;

create external table ads_back_count(

`dt` string COMMENT 'statistics date',

`wk_dt` string COMMENT 'week containing the statistics date',

`wastage_count` bigint COMMENT 'number of returning devices'

)

row format delimited fields terminated by '\t'

location '/warehouse/gmall/ads/ads_back_count';

insert into table ads_back_count

select

'2019-02-20' dt,

concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1)) wk_dt,

count(*)

from

(

select t1.mid_id

from

(

select mid_id

from dws_uv_detail_wk

where wk_dt=concat(date_add(next_day('2019-02-20','MO'),-7),'_',date_add(next_day('2019-02-20','MO'),-1))

)t1

left join

(

select mid_id

from dws_new_mid_day

where create_date<=date_add(next_day('2019-02-20','MO'),-1) and create_date>=date_add(next_day('2019-02-20','MO'),-7)

)t2

on t1.mid_id=t2.mid_id

left join

(

select mid_id

from dws_uv_detail_wk

where wk_dt=concat(date_add(next_day('2019-02-20','MO'),-7*2),'_',date_add(next_day('2019-02-20','MO'),-7-1))

)t3

on t1.mid_id=t3.mid_id

where t2.mid_id is null and t3.mid_id is null

)t4;

select * from ads_back_count;
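The `wk_dt` key used in the query is the Monday-to-Sunday range containing the statistics date: `next_day(d,'MO')` gives the first Monday after `d`, so subtracting 7 and 1 days yields that week's Monday and Sunday. The sketch below reproduces the same range in the shell, which can help when checking partitions by hand. The `week_range` function is a hypothetical helper and assumes GNU `date`.

```shell
#!/bin/bash
# week_range DATE: print "<Monday>_<Sunday>" for the week containing DATE.
# Hypothetical helper; relies on GNU date's -d option.
week_range() {
    local day="$1"
    local dow monday sunday
    dow=$(date -d "$day" +%u)    # ISO weekday: 1 = Monday ... 7 = Sunday
    monday=$(date -d "$day $((dow - 1)) days ago" +%F)
    sunday=$(date -d "$day $((7 - dow)) days" +%F)
    echo "${monday}_${sunday}"
}

this_week=$(week_range 2019-02-20)
last_week=$(week_range "$(date -d "2019-02-20 7 days ago" +%F)")

echo "$this_week"    # 2019-02-18_2019-02-24
echo "$last_week"    # 2019-02-11_2019-02-17
```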

[kgg@hadoop102 bin]$ vim ads_back_log.sh

Write the script content in this file.

chmod 777 ads_back_log.sh

ads_back_log.sh 2019-02-20

select * from ads_back_count;

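In production, this script is typically scheduled to run every Monday between 00:30 and 01:00.

Hive Project Practice from 0 to 1 (43): Big Data Project, E-commerce Data Warehouse (User Behavior Data) (11)

Source: https://www.cnblogs.com/huanghanyu/p/14304352.html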