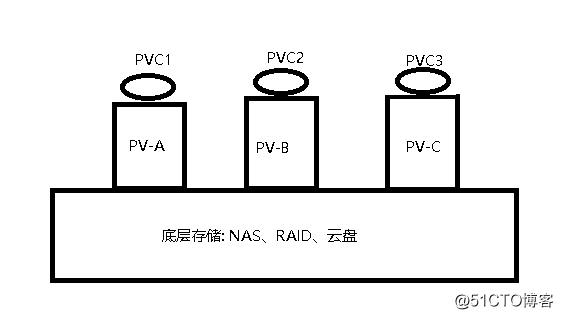

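The underlying storage can take many forms; NAS, cloud disks, and Ceph are among the options we use most often.

This is the lowest layer, the physical storage. PVs (PersistentVolume) are carved out on top of it.

PVC (PersistentVolumeClaim) resources are then requested against those PVs.

There are two ways to do this: define a PV first and then a PVC (static provisioning), or request a PVC directly and let the volume be provisioned dynamically.

2. Volume access modes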

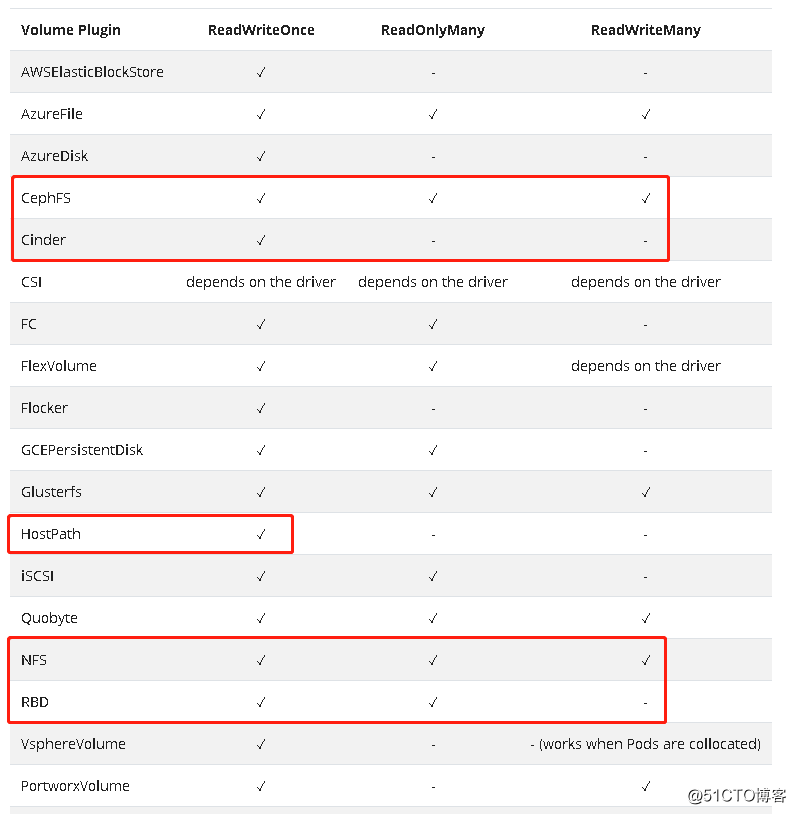

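1) RWO, ROX, RWX

ReadWriteOnce (RWO): the volume can be mounted read-write by a single node.

ReadOnlyMany (ROX): the volume can be mounted read-only by many nodes.

ReadWriteMany (RWX): the volume can be mounted read-write by many nodes.

2) The PV lifecycle

3) The four phases of the PV lifecycle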

Available 可用状态,未与PVC绑定

Bound 绑定状态

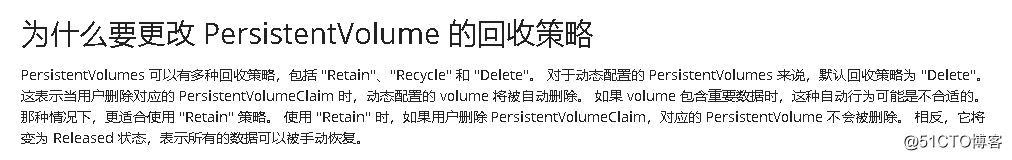

Released 绑定的PVC删除、资源释放、未被集群收回

Failed 资源回收失败3、kubernetes目录挂载方式

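0) emptyDir

An emptyDir shares the lifecycle of the Pod it belongs to: it lives and dies with the Pod.

An emptyDir volume is created when the Pod is assigned to a Node, and a directory is allocated for it automatically.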
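A minimal sketch of a Pod that uses an emptyDir volume. The Pod name, volume name, and mount path are hypothetical and chosen only for illustration; the image is the one used elsewhere in this post.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pd-emptydir        # hypothetical name
spec:
  containers:
  - image: nginx:1.14.2
    name: test-container
    volumeMounts:
    - mountPath: /cache         # hypothetical path inside the container
      name: cache-volume
  volumes:
  - name: cache-volume
    emptyDir: {}                # empty directory allocated on the Node for this Pod
```

All containers in the same Pod can mount `cache-volume`, which makes emptyDir a reasonable fit for scratch space or for sharing files between containers of one Pod.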

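When the Pod is removed from the Node or migrated, the data in the emptyDir is deleted permanently.

1) hostPath: the directory is created on the Node, and its lifecycle is independent of the Pod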

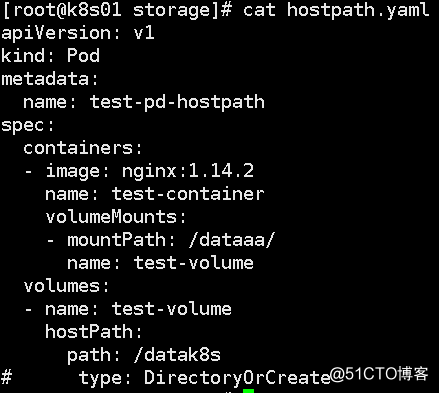

将nginx 镜像中/data 挂载到 宿中的/datak8s, yaml文件如下:

apiVersion: v1

kind: Pod

metadata:

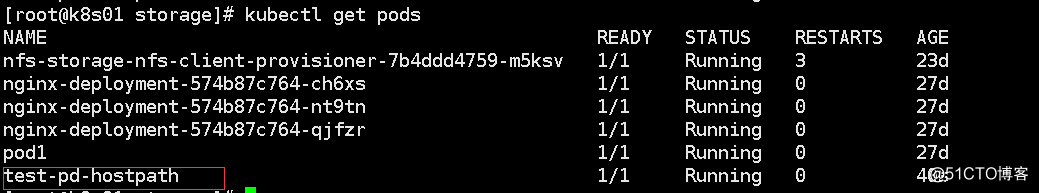

name: test-pd-hostpath

spec:

containers:

- image: nginx:1.14.2

name: test-container

volumeMounts:

- mountPath: /data/

name: test-volume

volumes:

- name: test-volume

hostPath:

path: /datak8s

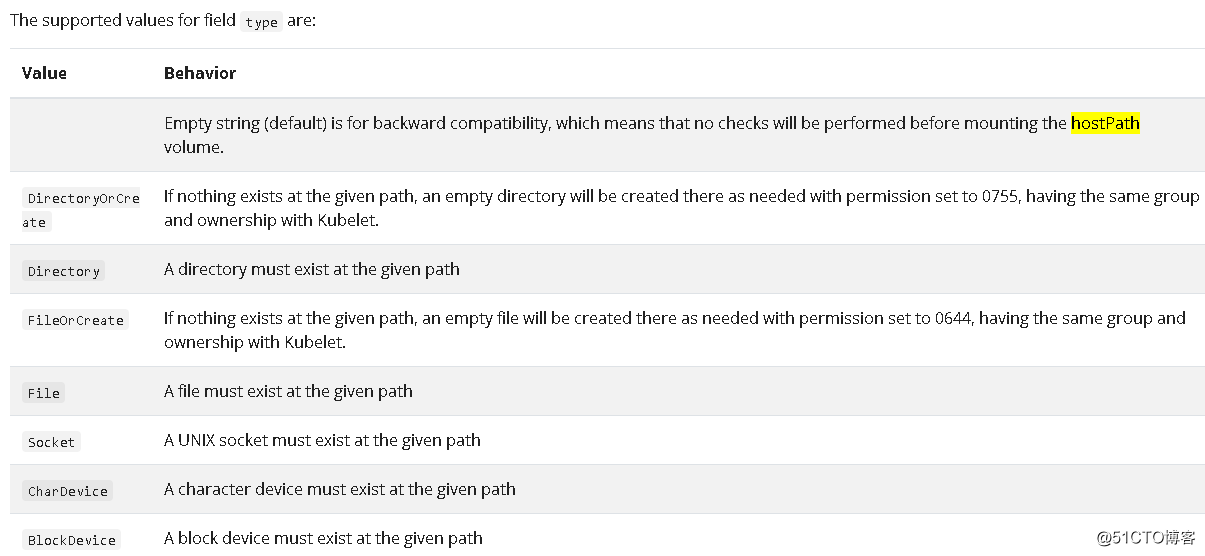

type: DirectoryOrCreatehostPath type 模式如下:

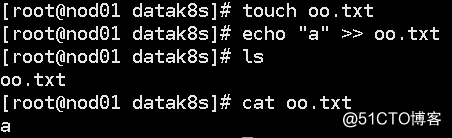

Create a file under /datak8s on the Node.

Delete test-pd-hostpath and modify the YAML file.

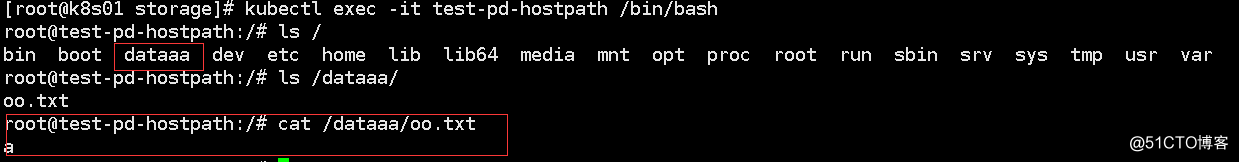

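    kubectl apply -f hostpath.yaml

Check the directory inside the container.

On older versions of one cloud provider's platform, this mapping was used for log collection: every Node uses the same directory, and inside the Pod the application's logback creates the log files.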
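A minimal sketch of that log-collection layout. The image name, Pod name, and both paths are hypothetical and do not come from the original setup; the only assumption about the application is that its logback configuration writes to `/logs`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: app-with-host-logs              # hypothetical name
spec:
  containers:
  - name: app
    image: example.com/my-app:latest    # hypothetical image; logback writes to /logs
    volumeMounts:
    - name: app-logs
      mountPath: /logs
  volumes:
  - name: app-logs
    hostPath:
      path: /var/log/app                # hypothetical; the same directory on every Node
      type: DirectoryOrCreate
```

Because the files land in a fixed directory on each Node, a node-level log agent can pick them up without knowing anything about individual Pods.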

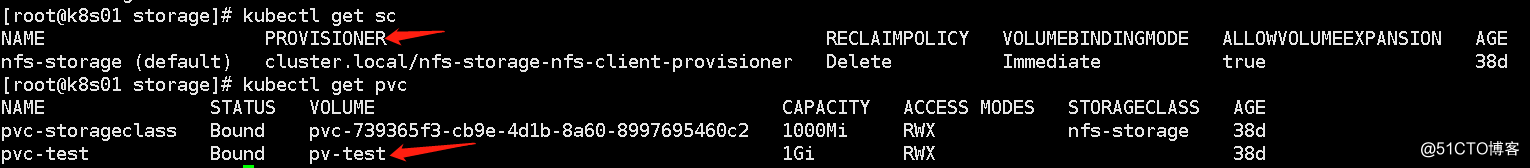

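2) Use an NFS-backed PVC for data storage. In the cloud you can mount cloud storage instead, but that is limited to RWO use cases.

Create rbac.yaml for mounting NFS in k8s: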

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

Create the PV and PVC:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-test
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteMany
      # Recycle is deprecated in recent Kubernetes releases
      persistentVolumeReclaimPolicy: Recycle
      nfs:
        path: /nfs/k8s
        server: 10.0.0.181
    ---
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: pvc-test
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          # must not exceed the capacity of the PV it binds to
          storage: 100Mi
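The PV and PVC pair above is the static path. For the dynamic path mentioned at the start of this post, a rough sketch could look like the following. It assumes an NFS provisioner is already running under the `nfs-client-provisioner` ServiceAccount. The StorageClass name, the provisioner string, and the PVC name are all hypothetical; the provisioner string must match whatever name the running provisioner registers.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client                # hypothetical class name
provisioner: example.com/nfs      # hypothetical; must match the running provisioner's name
reclaimPolicy: Delete
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-dynamic-test          # hypothetical name
spec:
  storageClassName: nfs-client    # ask this class to create the PV on demand
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
```

With this approach no PersistentVolume is written by hand: the provisioner creates one when the claim appears and binds it to the claim.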

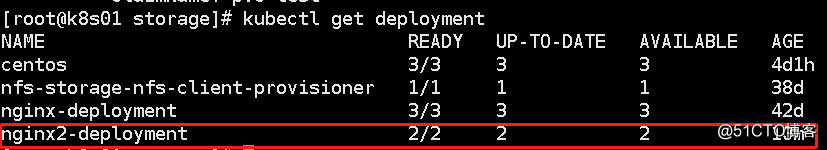

#创建yaml文件并挂载pvc

vim nginx2-pvc-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx2-deployment

labels:

app: nginx2

spec:

replicas: 2

selector:

matchLabels:

app: nginx2

template:

metadata:

labels:

app: nginx2

spec:

containers:

- name: nginx2

image: nginx:1.14.2

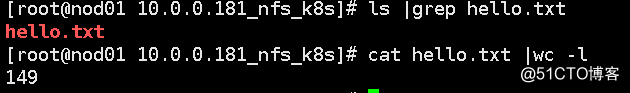

command: [ "sh", "-c", "while [ true ]; do echo ‘Hello‘; sleep 10; done | tee -a /logs/hello.txt" ]

ports:

- containerPort: 80

volumeMounts:

- name: nginx2-pvc-storageclass

mountPath: /logs

volumes:

- name: nginx2-pvc-storageclass

persistentVolumeClaim:

claimName: pvc-test#部署nginx2 deployment

kubectl apply -f nginx2-pvc-deployment.yaml

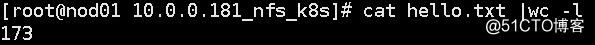

Delete nginx2; the hello.txt file is still there:

    [root@k8s01 storage]# kubectl delete -f nginx2-pvc-deployment.yaml
    deployment.apps "nginx2-deployment" deleted

Original: https://blog.51cto.com/keep11/2622798