This post builds on the Hadoop cluster set up in my previous post (see that post if you need it). Here we set up HBase on top of it.

| Service / Machine | node21 (10.10.26.21) | node245 (10.10.26.245) | node255 (10.10.26.255) |
|---|---|---|---|
| NameNode | Y | | |
| DataNode | Y | Y | Y |
| ZooKeeper | Y | Y | Y |
| RegionServer | Y | Y | Y |
| HBase Master | Y | | |
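All of the configuration below refers to the machines by hostname, so every node must be able to resolve node21, node245 and node255. Assuming this is done through /etc/hosts (the previous post may already have set it up), here is a small sketch that prints the entries matching the table. `hosts_entries` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hostname -> IP mapping, copied from the table above.
declare -A NODES=(
  [node21]=10.10.26.21
  [node245]=10.10.26.245
  [node255]=10.10.26.255
)

# Print one "IP hostname" line per node, in a stable (sorted) order.
# Assumption: names are resolved through /etc/hosts on every node.
hosts_entries() {
  local h
  for h in $(printf '%s\n' "${!NODES[@]}" | LC_ALL=C sort); do
    printf '%s %s\n' "${NODES[$h]}" "$h"
  done
}

hosts_entries
# Review the output, then append it to /etc/hosts on each node
# if the names are not resolvable yet.
```

Run as shown, it prints `10.10.26.21 node21`, `10.10.26.245 node245` and `10.10.26.255 node255`, one per line.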

HBase relies on a ZooKeeper cluster. Among other things, HMaster high availability depends on it. We therefore install a ZooKeeper ensemble first.

ZooKeeper installation:

Note: download the `-bin` package. Only that package contains the executables.

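```shell
cd /opt/
mkdir zookeeper
cd zookeeper
wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.5.9/apache-zookeeper-3.5.9-bin.tar.gz
tar -zxvf apache-zookeeper-3.5.9-bin.tar.gz
# rename so the path matches ZOOKEEPER_HOME configured below
mv apache-zookeeper-3.5.9-bin apache-zookeeper-3.5.9
cd apache-zookeeper-3.5.9/conf
```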
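It is easy to mix up release numbers between the download step, the extract step and the `ZOOKEEPER_HOME` setting. One option is to derive every name from a single version variable. Here is a sketch; the variable names are hypothetical. Mirrors generally keep only current releases, so the base URL may have to point at the Apache archive instead.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Single source of truth for the ZooKeeper release used in this post.
ZK_VERSION=3.5.9
ZK_MIRROR=https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper   # mirror used in the post
ZK_TARBALL="apache-zookeeper-${ZK_VERSION}-bin.tar.gz"
ZK_URL="${ZK_MIRROR}/zookeeper-${ZK_VERSION}/${ZK_TARBALL}"
# The -bin tarball unpacks into a directory named like the tarball without .tar.gz.
ZK_UNPACKED="${ZK_TARBALL%.tar.gz}"
# Final install directory, matching ZOOKEEPER_HOME used later.
ZK_HOME="/opt/zookeeper/apache-zookeeper-${ZK_VERSION}"

echo "download:   $ZK_URL"
echo "unpacks to: $ZK_UNPACKED -> rename to: $ZK_HOME"
# On a real node (not executed here):
#   cd /opt/zookeeper && wget "$ZK_URL" && tar -zxf "$ZK_TARBALL" && mv "$ZK_UNPACKED" "$ZK_HOME"
```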

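```shell
cp zoo_sample.cfg zoo.cfg
vim zoo.cfg
```

Edit `zoo.cfg` so that it contains the following. In particular, set `dataDir` and add the three `server.N` lines at the end: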

```properties
# The number of milliseconds of each tick
tickTime=2000
# The number of ticks that the initial
# synchronization phase can take
initLimit=10
# The number of ticks that can pass between
# sending a request and getting an acknowledgement
syncLimit=5
# the directory where the snapshot is stored.
# do not use /tmp for storage, /tmp here is just
# example sakes.
dataDir=/var/zookeeper
# the port at which the clients will connect
clientPort=2181
# the maximum number of client connections.
# increase this if you need to handle more clients
#maxClientCnxns=60
#
# Be sure to read the maintenance section of the
# administrator guide before turning on autopurge.
#
# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
#
# The number of snapshots to retain in dataDir
#autopurge.snapRetainCount=3
# Purge task interval in hours
# Set to "0" to disable auto purge feature
#autopurge.purgeInterval=1
server.1=node21:2888:3888
server.2=node245:2888:3888
server.3=node255:2888:3888
```
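ZooKeeper only reads the uncommented lines. As a sketch, the same effective settings can also be written without an editor. `write_zoo_cfg` is a hypothetical helper, and the demo writes to a temporary file instead of the real `conf/zoo.cfg`.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write the effective zoo.cfg settings used above.
# $1 = output file, remaining args = ensemble hostnames in server-id order.
write_zoo_cfg() {
  local out="$1"; shift
  {
    echo "tickTime=2000"
    echo "initLimit=10"
    echo "syncLimit=5"
    echo "dataDir=/var/zookeeper"
    echo "clientPort=2181"
    local id=1 host
    for host in "$@"; do
      # server.<myid>=<host>:<follower-to-leader port>:<leader-election port>
      echo "server.${id}=${host}:2888:3888"
      id=$((id + 1))
    done
  } > "$out"
}

# Demo: write to a temporary file instead of the real conf directory.
cfg="$(mktemp)"
write_zoo_cfg "$cfg" node21 node245 node255
cat "$cfg"
```

In `server.N=host:2888:3888`, port 2888 is used by followers to connect to the leader and port 3888 is used for leader election. `N` must match the `myid` of that host.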

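On node21, set this ZooKeeper server's id to 1. The id must equal the `N` in that host's `server.N` line in `zoo.cfg`. Then copy the installation to the other two nodes:

```shell
mkdir -p /var/zookeeper
cd /var/zookeeper
# '>' rather than '>>', so re-running never leaves two ids in the file
echo "1" > ./myid
cd /opt
scp -r zookeeper/ root@node245:/opt
scp -r zookeeper/ root@node255:/opt
```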

On node245, set the ZooKeeper id to 2:

```shell
mkdir -p /var/zookeeper
cd /var/zookeeper
echo "2" > ./myid
```

On node255, set the ZooKeeper id to 3:

```shell
mkdir -p /var/zookeeper
cd /var/zookeeper
echo "3" > ./myid
```
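The value in each `myid` file has to equal the `N` of that host's `server.N` line in `zoo.cfg`. Below is a hedged sketch that derives the id from `zoo.cfg` instead of typing it by hand. `myid_for_host` and `write_myid` are hypothetical helpers, and the sketch assumes hostnames without dots.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print N from the "server.N=<host>:2888:3888" line of zoo.cfg.
# Assumption: hostnames contain no dots (true for node21/node245/node255).
myid_for_host() {
  local cfg="$1" host="$2"
  awk -F'[.=:]' -v h="$host" '$1 == "server" && $3 == h { print $2 }' "$cfg"
}

# Hypothetical helper: write the myid file for a host into its data directory.
write_myid() {
  local cfg="$1" host="$2" dir="$3" id
  id="$(myid_for_host "$cfg" "$host")"
  if [ -z "$id" ]; then
    echo "no server.N entry for $host in $cfg" >&2
    return 1
  fi
  mkdir -p "$dir"
  echo "$id" > "$dir/myid"   # overwrite, never append
}

# Demo against a temporary file holding the three server lines.
tmp="$(mktemp -d)"
printf '%s\n' server.1=node21:2888:3888 server.2=node245:2888:3888 \
  server.3=node255:2888:3888 > "$tmp/zoo.cfg"
write_myid "$tmp/zoo.cfg" node245 "$tmp/data"
cat "$tmp/data/myid"
```

On a real node, the call would be something like `write_myid /opt/zookeeper/apache-zookeeper-3.5.9/conf/zoo.cfg "$(hostname -s)" /var/zookeeper`. This assumes `hostname -s` prints node21, node245 or node255.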

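On each of the three nodes, add ZooKeeper to the `PATH`:

```shell
vim /etc/profile
# add the following lines:
export ZOOKEEPER_HOME=/opt/zookeeper/apache-zookeeper-3.5.9
export PATH=$ZOOKEEPER_HOME/bin:$PATH
# apply the changes
source /etc/profile
```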
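Appending to /etc/profile by hand works once, but re-running a setup script would duplicate the lines. Here is a minimal sketch of an idempotent append. `append_once` is a hypothetical helper, and the demo targets a temporary file instead of /etc/profile.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: append LINE to FILE unless that exact line is already there.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

profile="$(mktemp)"   # stand-in for /etc/profile in this demo
append_once "$profile" 'export ZOOKEEPER_HOME=/opt/zookeeper/apache-zookeeper-3.5.9'
append_once "$profile" 'export PATH=$ZOOKEEPER_HOME/bin:$PATH'
# Running the same call again changes nothing.
append_once "$profile" 'export ZOOKEEPER_HOME=/opt/zookeeper/apache-zookeeper-3.5.9'
cat "$profile"
```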

Then start ZooKeeper on each of the three nodes:

```shell
zkServer.sh start
```

Running `zkServer.sh status` on each node shows that node245 is the leader and the other two nodes are followers, so the ensemble is healthy:

```text
[root@node21 bin]# ./zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/apache-zookeeper-3.5.9/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower

[root@node245 zookeeper]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/apache-zookeeper-3.5.9/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: leader

[root@node255 zookeeper]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /opt/zookeeper/apache-zookeeper-3.5.9/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower
```
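With more nodes, comparing these outputs by eye gets tedious. Here is a sketch that reads the combined `zkServer.sh status` output and checks for exactly one leader, with every other node a follower. `check_ensemble` is a hypothetical helper, and the SSH loop in the comments assumes root SSH access between the nodes.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read "zkServer.sh status" output from all nodes on stdin;
# succeed only if there is exactly one leader and every other node is a follower.
check_ensemble() {
  awk '
    /^Mode:/ {
      n++
      if ($2 == "leader") l++
      else if ($2 == "follower") f++
    }
    END {
      printf "nodes=%d leaders=%d followers=%d\n", n, l, f
      exit (n > 0 && l == 1 && l + f == n) ? 0 : 1
    }'
}

# Demo with the three "Mode:" lines shown above.
printf 'Mode: follower\nMode: leader\nMode: follower\n' | check_ensemble

# On the real cluster (assumes root SSH access to every node):
# for h in node21 node245 node255; do
#   ssh "root@$h" /opt/zookeeper/apache-zookeeper-3.5.9/bin/zkServer.sh status
# done 2>&1 | check_ensemble
```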

HBase installation:

```shell
mkdir -p /opt/hbase
cd /opt/hbase
wget http://mirror.bit.edu.cn/apache/hbase/2.2.6/hbase-2.2.6-bin.tar.gz
tar -zxvf hbase-2.2.6-bin.tar.gz
vim /opt/hbase/hbase-2.2.6/conf/hbase-site.xml
```

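Add the following properties. They go inside the file's `<configuration>` element:

```xml
<configuration>
  <!-- HDFS directory where HBase stores its data -->
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://node21:8020/hbase</value>
  </property>
  <!-- run in fully distributed mode -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <!-- client port and hosts of the ZooKeeper ensemble set up above -->
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>node21,node245,node255</value>
  </property>
  <!-- HMaster web UI port (default 16010) -->
  <property>
    <name>hbase.master.info.port</name>
    <value>9084</value>
  </property>
  <!-- disable the check that filesystem output streams support hflush/hsync -->
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
</configuration>
```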

Note: `hbase.rootdir` must agree with the Hadoop configuration. Its host and port must be the same as those of `fs.defaultFS` in /opt/hadoop/hadoop-3.2.1/etc/hadoop/core-site.xml!
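A rough way to check this note automatically is to extract both values and compare them. This sketch uses naive text matching rather than an XML parser, so it assumes the simple `<name>`/`<value>` layout shown above. `get_prop` and `check_rootdir` are hypothetical helpers, and the demo assumes `fs.defaultFS` is `hdfs://node21:8020`.

```shell
#!/usr/bin/env bash
set -eu

# Print the <value> of property $2 from a Hadoop-style *-site.xml file $1.
# Naive text matching: assumes <name> is directly followed by <value>.
get_prop() {
  local file="$1" name="$2"
  tr -d '\r\n' < "$file" \
    | grep -o '<name>[^<]*</name>[[:space:]]*<value>[^<]*</value>' \
    | sed -n "s|^<name>${name}</name>[[:space:]]*<value>\([^<]*\)</value>\$|\1|p" \
    | head -n 1
}

# Succeed if hbase.rootdir lives on the filesystem named by fs.defaultFS.
check_rootdir() {
  local core="$1" hbase="$2" fs root
  fs="$(get_prop "$core" fs.defaultFS)"
  root="$(get_prop "$hbase" hbase.rootdir)"
  case "$root" in
    "${fs%/}"/*) echo "OK: $root is under $fs" ;;
    *) echo "MISMATCH: hbase.rootdir=$root, fs.defaultFS=$fs" >&2; return 1 ;;
  esac
}

# Demo with temporary stand-in files (fs.defaultFS value is assumed).
tmp="$(mktemp -d)"
cat > "$tmp/core-site.xml" <<'EOF'
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node21:8020</value>
  </property>
</configuration>
EOF
cat > "$tmp/hbase-site.xml" <<'EOF'
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://node21:8020/hbase</value>
  </property>
</configuration>
EOF
check_rootdir "$tmp/core-site.xml" "$tmp/hbase-site.xml"
```

Against the real files, the call would be `check_rootdir /opt/hadoop/hadoop-3.2.1/etc/hadoop/core-site.xml /opt/hbase/hbase-2.2.6/conf/hbase-site.xml`.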

```shell
vim /opt/hbase/hbase-2.2.6/conf/hbase-env.sh
# JDK path
export JAVA_HOME=/opt/jdk1.8
# do not use the ZooKeeper bundled with HBase; use the ensemble set up above
export HBASE_MANAGES_ZK=false
```
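These two settings can also be applied without opening an editor. This sketch assumes the stock `hbase-env.sh` contains commented-out `export` lines for these variables, and the demo uses a stand-in file with such lines. `set_export` is a hypothetical helper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: make "export VAR=value" effective in a shell env file by
# replacing an existing (possibly commented-out) export of VAR, or appending one.
# Assumption: value contains no '|' or '&' (special in the sed command below).
set_export() {
  local file="$1" var="$2" value="$3"
  local pattern="^[[:space:]]*#?[[:space:]]*export[[:space:]]+${var}="
  if grep -qE "$pattern" "$file"; then
    sed -E -i.bak "s|${pattern}.*|export ${var}=${value}|" "$file"
    rm -f "$file.bak"
  else
    printf 'export %s=%s\n' "$var" "$value" >> "$file"
  fi
}

env_file="$(mktemp)"   # stand-in for conf/hbase-env.sh
printf '%s\n' '# export JAVA_HOME=/usr/java/jdk1.8.0/' '# export HBASE_MANAGES_ZK=true' > "$env_file"
set_export "$env_file" JAVA_HOME /opt/jdk1.8
set_export "$env_file" HBASE_MANAGES_ZK false
cat "$env_file"
```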

```shell
vim /opt/hbase/hbase-2.2.6/conf/regionservers
# add the following lines, and delete the default "localhost" entry:
node21
node245
node255
```
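Because the file is a plain list of hostnames, it can also be written in one command. Overwriting instead of appending takes care of the default `localhost` line. Here is a sketch with a hypothetical `write_regionservers` helper and a temporary stand-in file.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write one RegionServer hostname per line.
# '>' overwrites the file, so the default "localhost" entry disappears.
write_regionservers() {
  local file="$1"; shift
  if [ "$#" -eq 0 ]; then
    echo "usage: write_regionservers FILE HOST..." >&2
    return 1
  fi
  printf '%s\n' "$@" > "$file"
}

rs="$(mktemp)"           # stand-in for conf/regionservers
echo localhost > "$rs"   # the default entry mentioned above
write_regionservers "$rs" node21 node245 node255
cat "$rs"
```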

Copy HBase to the other two nodes:

```shell
cd /opt
scp -r hbase/ root@node245:/opt
scp -r hbase/ root@node255:/opt
```
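The same copy step is needed for both ZooKeeper and HBase, so a small loop avoids repeating the `scp` lines. Here is a sketch with a hypothetical `distribute` helper. By default it only prints the commands; set `DRY_RUN=0` to actually run them.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: copy /opt/<dir> to /opt/ on each given host.
# DRY_RUN defaults to 1, which only prints the scp commands.
distribute() {
  local dir="$1"; shift
  local host
  for host in "$@"; do
    local cmd=(scp -r "/opt/${dir}" "root@${host}:/opt/")
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "${cmd[*]}"
    else
      "${cmd[@]}"
    fi
  done
}

distribute zookeeper node245 node255
distribute hbase node245 node255
```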

Add HBase to the `PATH`:

```shell
vim /etc/profile
# add the following lines:
export HBASE_HOME=/opt/hbase/hbase-2.2.6
export PATH=$HBASE_HOME/bin:$PATH
# apply the changes
source /etc/profile
```

Start HBase from node21. This also starts the RegionServers listed in `regionservers`:

```shell
start-hbase.sh
```

```text
[root@node21 conf]# jps -l
2768 sun.tools.jps.Jps
18470 org.apache.hadoop.hdfs.server.datanode.DataNode
1943 org.apache.hadoop.hbase.regionserver.HRegionServer
19144 org.apache.hadoop.yarn.server.nodemanager.NodeManager
18940 org.apache.hadoop.yarn.server.resourcemanager.ResourceManager
18269 org.apache.hadoop.hdfs.server.namenode.NameNode
26989 org.apache.zookeeper.server.quorum.QuorumPeerMain
1757 org.apache.hadoop.hbase.master.HMaster
```

Both HBase services are running on node21: HMaster and HRegionServer.

```text
[root@node245 ~]# jps -l
10689 org.apache.hadoop.hdfs.server.datanode.DataNode
15985 sun.tools.jps.Jps
10810 org.apache.hadoop.yarn.server.nodemanager.NodeManager
14348 org.apache.zookeeper.server.quorum.QuorumPeerMain
15628 org.apache.hadoop.hbase.regionserver.HRegionServer
```

On node245, the HBase HRegionServer has started successfully.

```text
[root@node255 ~]# jps -l
19768 org.apache.hadoop.hdfs.server.datanode.DataNode
19976 org.apache.hadoop.yarn.server.nodemanager.NodeManager
19886 org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode
25758 org.apache.zookeeper.server.quorum.QuorumPeerMain
28014 sun.tools.jps.Jps
27567 org.apache.hadoop.hbase.regionserver.HRegionServer
```

Likewise, on node255 the HBase HRegionServer has started successfully.
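To check each node without scanning the `jps -l` listing by eye, here is a sketch that reads that listing on stdin and reports which expected main classes are present. `expect_procs` is a hypothetical helper; the class names are the ones in the output above.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `jps -l` output on stdin and check that every
# main class given as an argument appears in the second column.
expect_procs() {
  local out cls missing=0
  out="$(cat)"
  for cls in "$@"; do
    if printf '%s\n' "$out" | awk -v c="$cls" '$2 == c { found = 1 } END { exit (found ? 0 : 1) }'; then
      echo "running: $cls"
    else
      echo "MISSING: $cls"
      missing=1
    fi
  done
  return "$missing"
}

# Demo with two lines from the node255 listing above.
printf '%s\n' \
  '27567 org.apache.hadoop.hbase.regionserver.HRegionServer' \
  '25758 org.apache.zookeeper.server.quorum.QuorumPeerMain' \
  | expect_procs org.apache.hadoop.hbase.regionserver.HRegionServer \
                 org.apache.zookeeper.server.quorum.QuorumPeerMain
```

For example, run `jps -l | expect_procs org.apache.hadoop.hbase.master.HMaster org.apache.hadoop.hbase.regionserver.HRegionServer` on node21. On node245 and node255, check only the HRegionServer class.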

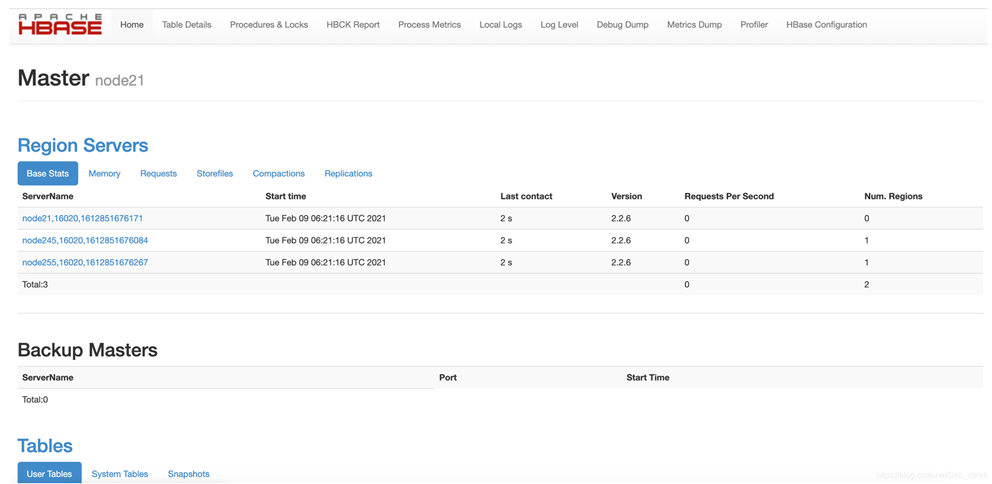

The screenshot shows information for all three HBase nodes.

That completes the setup of the whole HBase cluster!


Original article: https://www.cnblogs.com/hongwen2220/p/14415517.html