import torch

import numpy as np

import torchvision  # torch's vision package

import torchvision.datasets as datasets

import torchvision.transforms as transforms

from torch.utils.data.dataloader import DataLoader

from torchvision.transforms import ToTensor

import matplotlib.pyplot as plt

import PIL.Image as Image

import os

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

import torch.nn as nn

import torch.nn.functional as F

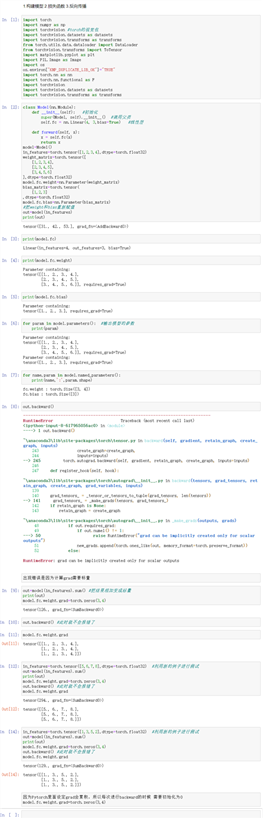

class Model(nn.Module):
    def __init__(self):  # initialize the module
        super(Model, self).__init__()  # call the parent-class constructor
        self.fc = nn.Linear(4, 3, bias=True)  # linear layer: 4 inputs, 3 outputs

    def forward(self, x):
        x = self.fc(x)
        return x

model=Model()

in_features=torch.tensor([1,2,3,4],dtype=torch.float32)

weight_matrix=torch.tensor([
    [1,2,3,4],
    [2,3,4,5],
    [3,4,5,6]
],dtype=torch.float32)

model.fc.weight=nn.Parameter(weight_matrix)

bias_matrix=torch.tensor([1,2,3],dtype=torch.float32)

model.fc.bias=nn.Parameter(bias_matrix)

# Reassign the layer's weight and bias

out=model(in_features)

print(out)

print(model.fc)

print(model.fc.weight)

print(model.fc.bias)

for param in model.parameters():  # print the model's parameters
    print(param)

for name, param in model.named_parameters():
    print(name, ':', param.shape)
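The printed output of the linear layer can be checked by hand, since `nn.Linear` computes `x @ W.T + b` with the weight stored as `(out_features, in_features)`. The snippet below is a minimal sketch that repeats the same numbers without the `Model` class; the names `x`, `W`, `b`, and `manual_out` are illustrative only.

```python
import torch

# Same input, weight, and bias values as in the example above.
x = torch.tensor([1, 2, 3, 4], dtype=torch.float32)
W = torch.tensor([[1, 2, 3, 4],
                  [2, 3, 4, 5],
                  [3, 4, 5, 6]], dtype=torch.float32)
b = torch.tensor([1, 2, 3], dtype=torch.float32)

# Row 1: 1*1 + 2*2 + 3*3 + 4*4 + 1 = 31, and likewise for rows 2 and 3.
manual_out = x @ W.T + b
print(manual_out)  # tensor([31., 42., 53.])
```

This should match what `print(out)` shows for the model after its weight and bias are reassigned.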

out=model(in_features).sum() # sum the outputs into a scalar

print(out)

model.fc.weight.grad=torch.zeros(3,4)

out.backward() # this no longer raises an error

model.fc.weight.grad

in_features=torch.tensor([5,6,7,8],dtype=torch.float32) # test with a new example

out=model(in_features).sum()

print(out)

model.fc.weight.grad=torch.zeros(3,4)

out.backward() # this no longer raises an error

model.fc.weight.grad

in_features=torch.tensor([1,3,5,2],dtype=torch.float32) # test with a new example

out=model(in_features).sum()

print(out)

model.fc.weight.grad=torch.zeros(3,4)

out.backward() # this no longer raises an error

model.fc.weight.grad
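For a loss of the form `sum(W x + b)`, the gradient with respect to each row of `W` is simply `x`, which is why the three experiments above print the input vector repeated three times. The sketch below checks that, and also shows why `.grad` is reset before each `backward()`: PyTorch accumulates gradients across calls. It uses a fresh, randomly initialized `nn.Linear` named `fc` as a stand-in for `model.fc`; all names here are illustrative.

```python
import torch
import torch.nn as nn

fc = nn.Linear(4, 3)  # stand-in for model.fc; the initial weights do not affect this gradient
x = torch.tensor([1, 3, 5, 2], dtype=torch.float32)

fc(x).sum().backward()
expected = x.repeat(3, 1)  # every one of the 3 rows equals x
print(torch.allclose(fc.weight.grad, expected))  # True
print(fc.bias.grad)  # tensor([1., 1., 1.])

# A second backward() without resetting adds to the stored gradient.
first = fc.weight.grad.clone()
fc(x).sum().backward()
print(torch.allclose(fc.weight.grad, 2 * first))  # True
```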

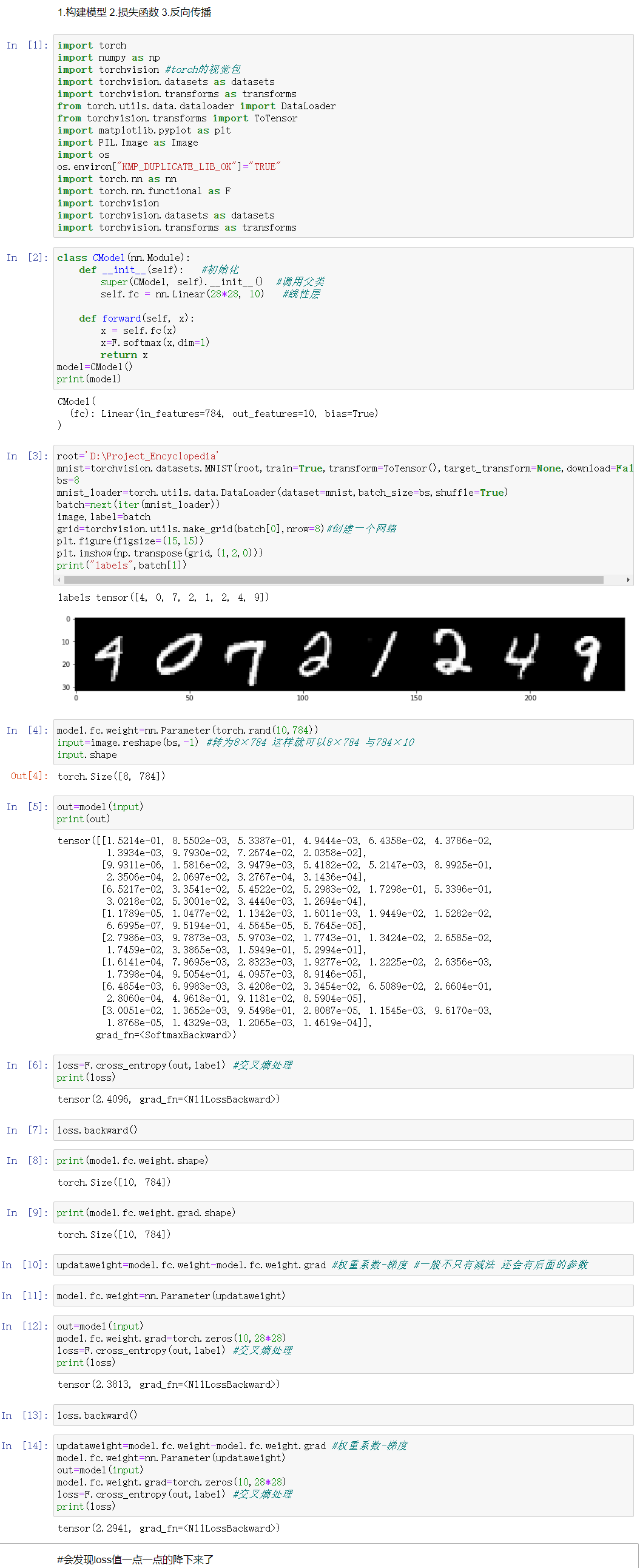

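class CModel(nn.Module):
    def __init__(self):  # initialize the module
        super(CModel, self).__init__()  # call the parent-class constructor
        self.fc = nn.Linear(28*28, 10)  # linear layer: 784 pixels in, 10 classes out

    def forward(self, x):
        x = self.fc(x)
        x = F.softmax(x, dim=1)  # convert scores to per-class probabilities
        return x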

model=CModel()

print(model)

root=r'D:\Project_Encyclopedia'

mnist=torchvision.datasets.MNIST(root,train=True,transform=ToTensor(),target_transform=None,download=False)

bs=8

mnist_loader=torch.utils.data.DataLoader(dataset=mnist,batch_size=bs,shuffle=True)

batch=next(iter(mnist_loader))

image,labels=batch

grid=torchvision.utils.make_grid(batch[0],nrow=8) # arrange the batch into an image grid

plt.figure(figsize=(15,15))

plt.imshow(np.transpose(grid,(1,2,0)))

print("labels",batch[1])

model.fc.weight=nn.Parameter(torch.rand(10,784))

input=image.reshape(bs,-1) # flatten to 8×784 so it can be multiplied by the 784×10 weight

input.shape
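The reshape step can be tried without downloading MNIST. The sketch below assumes a random tensor with the same `(N, C, H, W)` layout as an MNIST batch; `images`, `flat`, `weight`, and `scores` are hypothetical names used only for illustration.

```python
import torch

images = torch.rand(8, 1, 28, 28)           # stand-in batch: 8 grayscale 28x28 images
flat = images.reshape(images.shape[0], -1)  # keep the batch dimension, flatten the rest
print(flat.shape)  # torch.Size([8, 784])

weight = torch.rand(10, 784)  # same shape as the weight of nn.Linear(784, 10)
scores = flat @ weight.T      # (8, 784) @ (784, 10) -> (8, 10)
print(scores.shape)  # torch.Size([8, 10])
```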

out=model(input)

print(out)

loss=F.cross_entropy(out,labels) # cross-entropy loss

print(loss)
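One detail worth checking here: `F.cross_entropy` applies `log_softmax` internally, so it expects raw scores (logits). Because `CModel.forward` already applies `F.softmax`, the loss above is computed on probabilities, which runs softmax a second time. The sketch below illustrates the difference on random data; the tensors `logits` and `targets` are hypothetical stand-ins for the linear layer's output and the MNIST labels.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(8, 10)           # stand-in for the raw output of a linear layer
targets = torch.randint(0, 10, (8,))  # stand-in for a batch of class labels

# cross_entropy on logits equals nll_loss on log_softmax of the same logits.
loss_from_logits = F.cross_entropy(logits, targets)
loss_manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)
print(torch.allclose(loss_from_logits, loss_manual))  # True

# Passing probabilities instead applies softmax twice and gives a different value.
loss_from_probs = F.cross_entropy(F.softmax(logits, dim=1), targets)
print(loss_from_logits.item(), loss_from_probs.item())
```

The code still runs with the extra softmax, but the loss stays in a narrow range and the gradients are flatter, so returning the raw `self.fc(x)` output for training is the more common arrangement.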

loss.backward()

print(model.fc.weight.shape)

print(model.fc.weight.grad.shape)

updataweight=model.fc.weight-model.fc.weight.grad # weights minus gradient

model.fc.weight=nn.Parameter(updataweight)

out=model(input)

model.fc.weight.grad=torch.zeros(10,28*28)

loss=F.cross_entropy(out,labels) # cross-entropy loss

print(loss)

loss.backward()

updataweight=model.fc.weight-model.fc.weight.grad # weights minus gradient

model.fc.weight=nn.Parameter(updataweight)

out=model(input)

model.fc.weight.grad=torch.zeros(10,28*28)

loss=F.cross_entropy(out,labels) # cross-entropy loss

print(loss)
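The repeated forward, backward, and update steps above can be folded into a loop. The following is a rough sketch rather than the original author's method: it swaps in an in-place update under `torch.no_grad()` instead of rebuilding `nn.Parameter`, feeds raw logits to `F.cross_entropy`, and scales the gradient by an assumed learning rate `lr` (the original subtracts the raw gradient, which corresponds to `lr = 1`). The random `inputs` and `labels` are hypothetical stand-ins for a flattened MNIST batch.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
bs = 8
inputs = torch.rand(bs, 28 * 28)      # stand-in for a flattened MNIST batch
labels = torch.randint(0, 10, (bs,))  # stand-in for the batch labels

fc = nn.Linear(28 * 28, 10)
lr = 0.01  # assumed learning rate, not taken from the original

losses = []
for step in range(5):
    loss = F.cross_entropy(fc(inputs), labels)  # raw logits go straight into the loss
    losses.append(loss.item())

    fc.zero_grad()   # clear gradients left over from the previous step
    loss.backward()

    with torch.no_grad():  # update parameters without recording the operation in autograd
        fc.weight -= lr * fc.weight.grad
        fc.bias -= lr * fc.bias.grad

print(losses)
```

With a small enough step size the recorded loss should shrink from one iteration to the next on this fixed batch; in practice `torch.optim.SGD` performs the same update.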

Original: https://www.cnblogs.com/jgg54335/p/14585500.html