etcd data directory:

[root@k8s-master01 ~]# cat /opt/k8s/bin/environment.sh |grep "ETCD_DATA_DIR="

export ETCD_DATA_DIR="/data/k8s/etcd/data"

etcd WAL directory:

[root@k8s-master01 ~]# cat /opt/k8s/bin/environment.sh |grep "ETCD_WAL_DIR="

export ETCD_WAL_DIR="/data/k8s/etcd/wal"

[root@k8s-master01 ~]# ls /data/k8s/etcd/data/

member

[root@k8s-master01 ~]# ls /data/k8s/etcd/data/member/

snap

[root@k8s-master01 ~]# ls /data/k8s/etcd/wal/

0000000000000000-0000000000000000.wal 0.tmp
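The restore step later needs exactly these two paths for `--data-dir` and `--wal-dir`. A small helper (hypothetical name `get_env_value`) can read them from `environment.sh` without sourcing the whole file. This is a sketch that assumes each variable is defined on a single `export NAME="value"` line, as in the grep output above.

```shell
#!/usr/bin/env bash
# Print the value of an `export NAME="value"` line from a file such as
# /opt/k8s/bin/environment.sh, without sourcing the file.
# Assumption (hypothetical helper): the variable sits on one line in exactly
# that form; if it is defined several times, the last definition wins.
get_env_value() {
  local file="$1" name="$2"
  sed -n "s/^export ${name}=\"\(.*\)\"\$/\1/p" "$file" | tail -n 1
}
```

Usage: `ETCD_DATA_DIR=$(get_env_value /opt/k8s/bin/environment.sh ETCD_DATA_DIR)`.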

# mkdir -p /data/etcd_backup_dir

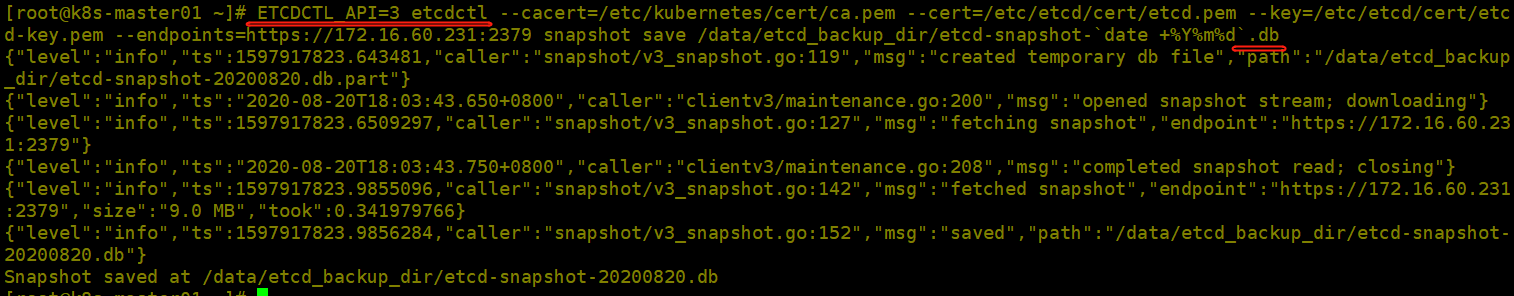

Run the backup on one of the etcd cluster nodes (k8s-master01 here):

[root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --cacert=/etc/kubernetes/cert/ca.pem --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --endpoints=https://172.16.60.231:2379 snapshot save /data/etcd_backup_dir/etcd-snapshot-`date +%Y%m%d`.db
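Before copying the snapshot anywhere, it is worth making sure the file was actually written. The sketch below (hypothetical helpers `snapshot_path` and `check_snapshot_file`) builds the same dated file name as the command above and rejects a missing or zero-byte file. For a deeper check, etcd 3.4's `etcdctl snapshot status <file> -w table` prints the snapshot's hash, revision and key count.

```shell
#!/usr/bin/env bash
# Build the dated snapshot path used in this article:
#   <dir>/etcd-snapshot-YYYYMMDD.db
# The optional second argument overrides the date (useful for testing).
snapshot_path() {
  local dir="${1%/}" day="${2:-$(date +%Y%m%d)}"
  printf '%s/etcd-snapshot-%s.db\n' "$dir" "$day"
}

# Return non-zero if the snapshot file is missing or empty.
check_snapshot_file() {
  local file="$1"
  if [[ ! -s "$file" ]]; then
    echo "snapshot missing or empty: $file" >&2
    return 1
  fi
}
```

Usage: `check_snapshot_file "$(snapshot_path /data/etcd_backup_dir)" && echo "snapshot OK"`.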

[root@k8s-master01 ~]# rsync -e "ssh -p22" -avpgolr /data/etcd_backup_dir/etcd-snapshot-20200820.db root@k8s-master02:/data/etcd_backup_dir/

[root@k8s-master01 ~]# rsync -e "ssh -p22" -avpgolr /data/etcd_backup_dir/etcd-snapshot-20200820.db root@k8s-master03:/data/etcd_backup_dir/
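The per-host copy can also be written as a loop. The sketch below assumes root SSH access to each host and the same `/data/etcd_backup_dir/` layout on every node. `sync_snapshot`, `SSH_PORT` and `DRY_RUN` are hypothetical names; with `DRY_RUN=1` the function only prints the rsync commands.

```shell
#!/usr/bin/env bash
# Copy one snapshot file to each host given after it.
# SSH_PORT defaults to 22 as in the manual commands above;
# DRY_RUN=1 prints the rsync commands instead of running them.
sync_snapshot() {
  local file="$1"; shift
  local port="${SSH_PORT:-22}" host
  local -a cmd
  for host in "$@"; do
    cmd=(rsync -e "ssh -p${port}" -avpgolr "$file" "root@${host}:/data/etcd_backup_dir/")
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      printf '%s\n' "${cmd[*]}"
    else
      "${cmd[@]}" || return 1   # stop at the first failed copy
    fi
  done
}
```

Usage: `sync_snapshot /data/etcd_backup_dir/etcd-snapshot-20200820.db k8s-master02 k8s-master03`.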

The backup command above can be put into a script on k8s-master01 and scheduled with crontab:

[root@k8s-master01 ~]# cat /data/etcd_backup_dir/etcd_backup.sh

#!/usr/bin/bash

date;

CACERT="/etc/kubernetes/cert/ca.pem"

CERT="/etc/etcd/cert/etcd.pem"

KEY="/etc/etcd/cert/etcd-key.pem"

ENDPOINTS="https://172.16.60.231:2379"

ETCDCTL_API=3 /opt/k8s/bin/etcdctl --cacert="${CACERT}" --cert="${CERT}" --key="${KEY}" --endpoints="${ENDPOINTS}" snapshot save /data/etcd_backup_dir/etcd-snapshot-`date +%Y%m%d`.db

# Keep backups for 30 days

find /data/etcd_backup_dir/ -name "*.db" -mtime +30 -exec rm -f {} \;

# Sync to the other two etcd nodes

/bin/rsync -e "ssh -p5522" -avpgolr --delete /data/etcd_backup_dir/ root@k8s-master02:/data/etcd_backup_dir/

/bin/rsync -e "ssh -p5522" -avpgolr --delete /data/etcd_backup_dir/ root@k8s-master03:/data/etcd_backup_dir/

[root@k8s-master01 ~]# chmod 755 /data/etcd_backup_dir/etcd_backup.sh

[root@k8s-master01 ~]# crontab -l

#etcd cluster data backup

0 5 * * * /bin/bash -x /data/etcd_backup_dir/etcd_backup.sh > /dev/null 2>&1
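One weakness of the script above is that the `find ... -mtime +30` cleanup and the rsync calls still run when `snapshot save` fails. After a month of silent failures, the last good snapshots would be pruned locally and, through `rsync --delete`, on the peers too. The following is a more defensive sketch using the same paths. `run_backup`, `BACKUP_DIR`, `ETCDCTL`, `KEEP_DAYS`, `PEERS`, `SSH_PORT`, the `run` argument and the log file path are all hypothetical; the defaults mirror this article's values.

```shell
#!/usr/bin/env bash
# Defensive variant of etcd_backup.sh (sketch): stop before pruning or
# syncing when the snapshot fails, and write to a temporary name first.
BACKUP_DIR="${BACKUP_DIR:-/data/etcd_backup_dir}"
ETCDCTL="${ETCDCTL:-/opt/k8s/bin/etcdctl}"
KEEP_DAYS="${KEEP_DAYS:-30}"
PEERS="${PEERS-k8s-master02 k8s-master03}"   # set PEERS="" to skip syncing
SSH_PORT="${SSH_PORT:-5522}"

run_backup() {
  local target part host
  target="${BACKUP_DIR}/etcd-snapshot-$(date +%Y%m%d).db"
  part="${target}.part"   # temporary name, not matched by the *.db pattern

  # Explicit error handling instead of set -e: errexit is ignored inside
  # a function that is called from an if/||/! context.
  if ! ETCDCTL_API=3 "$ETCDCTL" \
      --cacert=/etc/kubernetes/cert/ca.pem \
      --cert=/etc/etcd/cert/etcd.pem \
      --key=/etc/etcd/cert/etcd-key.pem \
      --endpoints=https://172.16.60.231:2379 \
      snapshot save "$part" || [[ ! -s "$part" ]]; then
    echo "etcd snapshot failed, keeping existing backups" >&2
    rm -f "$part"
    return 1
  fi
  mv -f "$part" "$target" || return 1

  # Retention: only reached after a successful snapshot.
  find "$BACKUP_DIR" -maxdepth 1 -name 'etcd-snapshot-*.db' -mtime +"$KEEP_DAYS" -delete

  # PEERS is deliberately unquoted so it splits into host names.
  for host in $PEERS; do
    rsync -e "ssh -p${SSH_PORT}" -avpgolr --delete \
      "${BACKUP_DIR}/" "root@${host}:${BACKUP_DIR}/" || return 1
  done
}

# Entry point: only runs when called as "etcd_backup.sh run", e.g. from cron:
#   0 5 * * * /bin/bash /data/etcd_backup_dir/etcd_backup.sh run >> /var/log/etcd_backup.log 2>&1
# (the log path is a hypothetical choice; the original sends output to /dev/null)
if [[ "${1:-}" == "run" ]]; then
  run_backup
fi
```

Logging to a file instead of `/dev/null` keeps a record of failed runs, which is what makes the early exit useful.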

II. etcd cluster restore

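Backing up the etcd cluster only has to be done on one etcd node; the backup file is then copied to the other nodes.

Restoring the etcd cluster, however, must be done on every etcd node!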

1) Simulate etcd cluster data loss

Delete the data on all three etcd nodes (or simply delete the data directory):

# rm -rf /data/k8s/etcd/data/*

Check the k8s cluster status:

[root@k8s-master01 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

etcd-2 Unhealthy Get https://172.16.60.233:2379/health: dial tcp 172.16.60.233:2379: connect: connection refused

etcd-1 Unhealthy Get https://172.16.60.232:2379/health: dial tcp 172.16.60.232:2379: connect: connection refused

etcd-0 Unhealthy Get https://172.16.60.231:2379/health: dial tcp 172.16.60.231:2379: connect: connection refused

scheduler Healthy ok

controller-manager Healthy ok

[root@k8s-master01 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

[root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem endpoint health

https://172.16.60.231:2379 is healthy: successfully committed proposal: took = 9.918673ms

https://172.16.60.233:2379 is healthy: successfully committed proposal: took = 10.985279ms

https://172.16.60.232:2379 is healthy: successfully committed proposal: took = 13.422545ms

[root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem member list --write-out=table

+------------------+---------+------------+----------------------------+----------------------------+------------+

| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |

+------------------+---------+------------+----------------------------+----------------------------+------------+

| 1d1d7edbba38c293 | started | k8s-etcd03 | https://172.16.60.233:2380 | https://172.16.60.233:2379 | false |

| 4c0cfad24e92e45f | started | k8s-etcd02 | https://172.16.60.232:2380 | https://172.16.60.232:2379 | false |

| 79cf4f0a8c3da54b | started | k8s-etcd01 | https://172.16.60.231:2380 | https://172.16.60.231:2379 | false |

+------------------+---------+------------+----------------------------+----------------------------+------------+
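A restore needs the full `name=peerURL` list for `--initial-cluster`, and once the data is gone, `member list` can no longer be queried. It can therefore help to save this member table next to each backup and derive the list from it. A sketch (hypothetical function `initial_cluster_from_table`) that parses the table format printed above, assuming one peer URL per member:

```shell
#!/usr/bin/env bash
# Read the output of `etcdctl member list --write-out=table` on stdin and
# print the comma-separated name=peerURL list used by --initial-cluster.
initial_cluster_from_table() {
  awk -F'|' '
    # data rows: | ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS | IS LEARNER |
    NF >= 7 && $2 !~ /ID/ {
      name = $4; peer = $5
      gsub(/ /, "", name); gsub(/ /, "", peer)
      print name "=" peer
    }' | sort | paste -sd, -
}
```

Usage: `ETCDCTL_API=3 etcdctl ... member list --write-out=table | initial_cluster_from_table`. Sorting by name makes the result independent of the order in which etcd lists its members.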

# systemctl restart etcd

[root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl -w table --cacert=/etc/kubernetes/cert/ca.pem --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" endpoint status

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://172.16.60.231:2379 | 79cf4f0a8c3da54b | 3.4.9 | 1.6 MB | true | false | 5 | 24658 | 24658 | |

| https://172.16.60.232:2379 | 4c0cfad24e92e45f | 3.4.9 | 1.6 MB | false | false | 5 | 24658 | 24658 | |

| https://172.16.60.233:2379 | 1d1d7edbba38c293 | 3.4.9 | 1.7 MB | false | false | 5 | 24658 | 24658 | |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
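The endpoint status table doubles as a quick consistency check: exactly one member should report `IS LEADER = true`, and on an idle cluster every member should show the same `RAFT INDEX`. A sketch of that check (hypothetical `check_status_table`) that reads the etcd 3.4.9 table layout shown above on stdin. On a cluster taking writes the raft index can briefly differ between members, so a mismatch there is a reason to re-check rather than proof of a problem.

```shell
#!/usr/bin/env bash
# Read `etcdctl endpoint status -w table` on stdin; succeed only if there is
# exactly one leader and all rows share one RAFT INDEX value.
# Column positions assume the 3.4.9 layout:
# ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | ...
check_status_table() {
  awk -F'|' '
    NF >= 11 && $2 !~ /ENDPOINT/ {
      leader = $6; idx = $9
      gsub(/ /, "", leader); gsub(/ /, "", idx)
      rows++
      if (leader == "true") leaders++
      seen[idx] = 1
    }
    END {
      n = 0
      for (i in seen) n++
      if (rows == 0 || leaders != 1 || n != 1) {
        printf "rows=%d leaders=%d distinct_raft_index=%d\n", rows, leaders, n > "/dev/stderr"
        exit 1
      }
    }'
}
```

Usage: `ETCDCTL_API=3 etcdctl -w table ... endpoint status | check_status_table && echo consistent`.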

[root@k8s-master01 ~]# kubectl get ns

NAME STATUS AGE

default Active 9m47s

kube-node-lease Active 9m39s

kube-public Active 9m39s

kube-system Active 9m47s

[root@k8s-master01 ~]# kubectl get pods -n kube-system

No resources found in kube-system namespace.

[root@k8s-master01 ~]# kubectl get pods --all-namespaces

No resources found

# systemctl stop kube-apiserver

# systemctl stop etcd

# rm -rf /data/k8s/etcd/data && rm -rf /data/k8s/etcd/wal
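`rm -rf` discards the broken data for good. If there is enough free disk space, moving the directories aside keeps them available for later inspection while still satisfying `snapshot restore`, which refuses to run when the target `--data-dir` already exists. A sketch with a hypothetical `move_aside` helper:

```shell
#!/usr/bin/env bash
# Rename each existing directory to <dir>.bak-<timestamp> instead of deleting it.
# Paths that do not exist are skipped silently.
move_aside() {
  local stamp dir
  stamp=$(date +%Y%m%d%H%M%S)
  for dir in "$@"; do
    dir="${dir%/}"   # drop a trailing slash so the new name is a sibling
    if [[ -e "$dir" ]]; then
      mv "$dir" "${dir}.bak-${stamp}" || return 1
      echo "moved $dir -> ${dir}.bak-${stamp}"
    fi
  done
}
```

Usage, with etcd stopped: `move_aside /data/k8s/etcd/data /data/k8s/etcd/wal`.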

On node 172.16.60.231

-------------------------------------------------------

ETCDCTL_API=3 etcdctl --name=k8s-etcd01 --endpoints="https://172.16.60.231:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem --initial-cluster-token=etcd-cluster-0 --initial-advertise-peer-urls=https://172.16.60.231:2380 --initial-cluster=k8s-etcd01=https://172.16.60.231:2380,k8s-etcd02=https://172.16.60.232:2380,k8s-etcd03=https://172.16.60.233:2380 --data-dir=/data/k8s/etcd/data --wal-dir=/data/k8s/etcd/wal snapshot restore /data/etcd_backup_dir/etcd-snapshot-20200820.db

On node 172.16.60.232

-------------------------------------------------------

ETCDCTL_API=3 etcdctl --name=k8s-etcd02 --endpoints="https://172.16.60.232:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem --initial-cluster-token=etcd-cluster-0 --initial-advertise-peer-urls=https://172.16.60.232:2380 --initial-cluster=k8s-etcd01=https://172.16.60.231:2380,k8s-etcd02=https://172.16.60.232:2380,k8s-etcd03=https://172.16.60.233:2380 --data-dir=/data/k8s/etcd/data --wal-dir=/data/k8s/etcd/wal snapshot restore /data/etcd_backup_dir/etcd-snapshot-20200820.db

On node 172.16.60.233

-------------------------------------------------------

ETCDCTL_API=3 etcdctl --name=k8s-etcd03 --endpoints="https://172.16.60.233:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem --initial-cluster-token=etcd-cluster-0 --initial-advertise-peer-urls=https://172.16.60.233:2380 --initial-cluster=k8s-etcd01=https://172.16.60.231:2380,k8s-etcd02=https://172.16.60.232:2380,k8s-etcd03=https://172.16.60.233:2380 --data-dir=/data/k8s/etcd/data --wal-dir=/data/k8s/etcd/wal snapshot restore /data/etcd_backup_dir/etcd-snapshot-20200820.db
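The three restore commands differ only in `--name` and `--initial-advertise-peer-urls`. `--initial-cluster` must be identical on every node, and it is easy to get one address wrong when the commands are edited by hand. The sketch below derives everything from a single node list; `NODES`, `initial_cluster` and `restore_cmd` are hypothetical names, and the values are this article's cluster. It omits `--endpoints` and the TLS flags, since `snapshot restore` only writes local files and does not contact the cluster. It also only prints the command, so it can be reviewed before it is run.

```shell
#!/usr/bin/env bash
# One name=IP entry per etcd member (values from this article's cluster).
NODES=(
  "k8s-etcd01=172.16.60.231"
  "k8s-etcd02=172.16.60.232"
  "k8s-etcd03=172.16.60.233"
)

# name1=https://ip1:2380,name2=https://ip2:2380,...  (peer port 2380 assumed)
initial_cluster() {
  local entry out=""
  for entry in "${NODES[@]}"; do
    out+="${out:+,}${entry%%=*}=https://${entry#*=}:2380"
  done
  printf '%s\n' "$out"
}

# Print the restore command for one node; ETCD_DATA_DIR/ETCD_WAL_DIR
# default to the directories from environment.sh.
restore_cmd() {
  local name="$1" snapshot="$2" ip="" entry
  for entry in "${NODES[@]}"; do
    if [[ "${entry%%=*}" == "$name" ]]; then
      ip="${entry#*=}"
    fi
  done
  if [[ -z "$ip" || -z "$snapshot" ]]; then
    echo "usage: restore_cmd <node-name> <snapshot-file>" >&2
    return 1
  fi
  printf 'ETCDCTL_API=3 etcdctl snapshot restore %s --name=%s --initial-cluster-token=etcd-cluster-0 --initial-advertise-peer-urls=https://%s:2380 --initial-cluster=%s --data-dir=%s --wal-dir=%s\n' \
    "$snapshot" "$name" "$ip" "$(initial_cluster)" \
    "${ETCD_DATA_DIR:-/data/k8s/etcd/data}" "${ETCD_WAL_DIR:-/data/k8s/etcd/wal}"
}
```

Usage on 172.16.60.233: `restore_cmd k8s-etcd03 /data/etcd_backup_dir/etcd-snapshot-20200820.db`, then run the printed command once it looks right.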

# systemctl start etcd

# systemctl status etcd

[root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --cacert=/etc/kubernetes/cert/ca.pem endpoint health

https://172.16.60.232:2379 is healthy: successfully committed proposal: took = 12.837393ms

https://172.16.60.233:2379 is healthy: successfully committed proposal: took = 13.306671ms

https://172.16.60.231:2379 is healthy: successfully committed proposal: took = 13.602805ms

[root@k8s-master01 ~]# ETCDCTL_API=3 etcdctl -w table --cacert=/etc/kubernetes/cert/ca.pem --cert=/etc/etcd/cert/etcd.pem --key=/etc/etcd/cert/etcd-key.pem --endpoints="https://172.16.60.231:2379,https://172.16.60.232:2379,https://172.16.60.233:2379" endpoint status

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://172.16.60.231:2379 | 79cf4f0a8c3da54b | 3.4.9 | 9.0 MB | false | false | 2 | 13 | 13 | |

| https://172.16.60.232:2379 | 4c0cfad24e92e45f | 3.4.9 | 9.0 MB | true | false | 2 | 13 | 13 | |

| https://172.16.60.233:2379 | 5f70664d346a6ebd | 3.4.9 | 9.0 MB | false | false | 2 | 13 | 13 | |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

# systemctl start kube-apiserver

# systemctl status kube-apiserver

[root@k8s-master01 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-2 Unhealthy HTTP probe failed with statuscode: 503

etcd-1 Unhealthy HTTP probe failed with statuscode: 503

etcd-0 Unhealthy HTTP probe failed with statuscode: 503

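Because etcd has only just been restarted, the status takes a moment to recover; run the command a few more times and it becomes healthy: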

[root@k8s-master01 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}
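Re-running `kubectl get cs` by hand until the 503s disappear can be automated with a small retry loop. A sketch; `retry` and `etcd_cs_healthy` are hypothetical helpers, and the check simply greps the `kubectl get cs` output format shown above (ComponentStatus is deprecated in newer Kubernetes releases, so this check is tied to clusters of this vintage).

```shell
#!/usr/bin/env bash
# retry <attempts> <seconds> <command> [args...]
# Runs the command until it succeeds, sleeping between failed attempts.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    (( i < attempts )) && sleep "$delay"
  done
  return 1
}

# Succeeds when `kubectl get cs` shows at least one healthy etcd row
# and no unhealthy ones.
etcd_cs_healthy() {
  local out
  out=$(kubectl get cs 2>/dev/null) || return 1
  grep -qE '^etcd-[0-9]+ +Healthy' <<<"$out" &&
    ! grep -qE '^etcd-[0-9]+ +Unhealthy' <<<"$out"
}
```

Usage: `retry 10 3 etcd_cs_healthy && kubectl get cs`.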

[root@k8s-master01 ~]# kubectl get ns

NAME STATUS AGE

default Active 7d4h

kevin Active 5d18h

kube-node-lease Active 7d4h

kube-public Active 7d4h

kube-system Active 7d4h

[root@k8s-master01 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default dnsutils-ds-22q87 0/1 ContainerCreating 171 7d3h

default dnsutils-ds-bp8tm 0/1 ContainerCreating 138 5d18h

default dnsutils-ds-bzzqg 0/1 ContainerCreating 138 5d18h

default dnsutils-ds-jcvng 1/1 Running 171 7d3h

default dnsutils-ds-xrl2x 0/1 ContainerCreating 138 5d18h

default dnsutils-ds-zjg5l 1/1 Running 0 7d3h

default kevin-t-84cdd49d65-ck47f 0/1 ContainerCreating 0 2d2h

default nginx-ds-98rm2 1/1 Running 2 7d3h

default nginx-ds-bbx68 1/1 Running 0 7d3h

default nginx-ds-kfctv 0/1 ContainerCreating 1 5d18h

default nginx-ds-mdcd9 0/1 ContainerCreating 1 5d18h

default nginx-ds-ngqcm 1/1 Running 0 7d3h

default nginx-ds-tpcxs 0/1 ContainerCreating 1 5d18h

kevin nginx-ingress-controller-797ffb479-vrq6w 0/1 ContainerCreating 0 5d18h

kevin test-nginx-7d4f96b486-qd4fl 0/1 ContainerCreating 0 2d1h

kevin test-nginx-7d4f96b486-qfddd 0/1 Running 0 2d1h

kube-system calico-kube-controllers-578894d4cd-9rp4c 1/1 Running 1 7d3h

kube-system calico-node-d7wq8 0/1 PodInitializing 1 7d3h

Original article: https://www.cnblogs.com/kevingrace/p/14616824.html