这里没有什么反爬措施,所以就不分析了,直接上代码

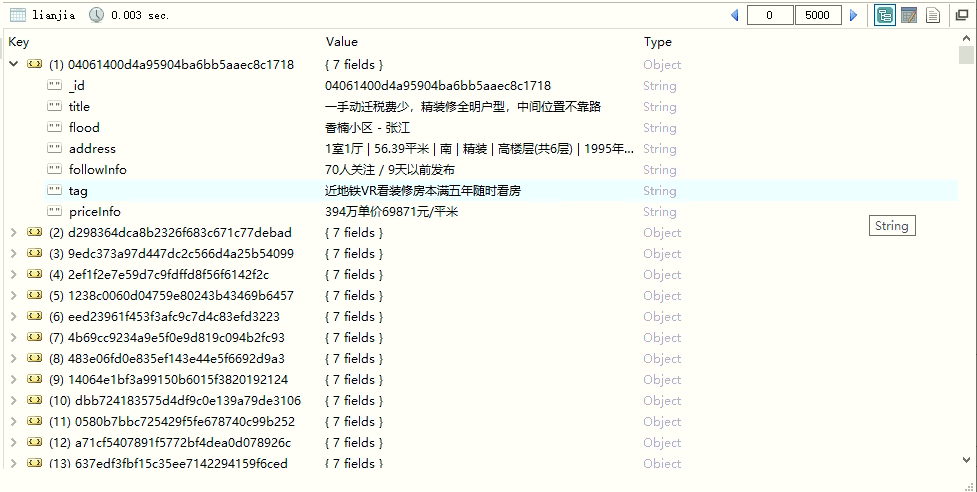

import hashlib import re from concurrent.futures import ThreadPoolExecutor from urllib import parse import requests from bs4 import BeautifulSoup from pymongo import MongoClient session = requests.session() # 创建线程池 pool = ThreadPoolExecutor(10) # Mongo链接 client = MongoClient(‘mongodb://10.0.0.101:27017/‘) db = client[‘db1‘] lianjia = db[‘lianjia‘] headers = { "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.150 Safari/537.36" } # 解析存储 def parse_page(html): if hasattr(html, "_Future__get_result"): html = html.result() soup = BeautifulSoup(html, "lxml") li_list = soup.find("ul", attrs={"class": "sellListContent"}).find_all("li", attrs={ "class": "clear LOGVIEWDATA LOGCLICKDATA"}, recursive=False) for li in li_list: title = li.find("div", attrs={"class": "title"}) flood = li.find("div", attrs={"class": "flood"}) address = li.find("div", attrs={"class": "address"}) followInfo = li.find("div", attrs={"class": "followInfo"}) tag = li.find("div", attrs={"class": "tag"}) priceInfo = li.find("div", attrs={"class": "priceInfo"}) # print(title.text) # print(flood.text) # print(address.text) # print(followInfo.text) # print(tag.text) # print(priceInfo.text) # 将标题进行MD5转换作为id md = hashlib.md5() md.update(title.text.encode("utf-8")) id = md.hexdigest() if not lianjia.find_one({"_id": id}): lianjia.insert({"_id": id, "title": title.text, "flood": flood.text, "address": address.text, "followInfo": followInfo.text, "tag": tag.text,"priceInfo":priceInfo.text}) # 首页内容 def first_page(search_key): res = session.get(url="https://sh.lianjia.com/ershoufang/rs%s" % (search_key)) soup = BeautifulSoup(res.text, "lxml") # 获取房子总数用来判断分页数量 total_num = int(re.search("[0-9]\d*", soup.find("h2", attrs={"class": "total fl"}).text).group()) return res.text, total_num # 分页内容 def range_page(num, search_key): res = session.get(url="https://sh.lianjia.com/ershoufang/pg%srs%s" % (num, search_key)) return res.text def run(search_key, total_num=None): search_key = parse.quote(search_key) if not total_num: html, total_num = first_page(search_key) parse_page(html) for num in range(2, (total_num // 30)+1): # 每页有30条数据,区余后获取分页总数 pool.submit(range_page, num, search_key).add_done_callback(parse_page) else: html, _ = first_page(search_key) parse_page(html) for num in range(2, total_num): pool.submit(range_page, num, search_key).add_done_callback(parse_page) run("浦东")

原文:https://www.cnblogs.com/angelyan/p/14680073.html