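Most commercial websites only let us crawl their content after logging in, so for a crawler, generating cookies to use together with the proxy has become a must. Today let's talk about some problems we ran into when using Selenium to visit a target site.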
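Once the browser has logged in, the crawler reuses the cookies, not the browser. The sketch below is minimal and assumes you already have a logged-in Selenium `driver`, which is not shown. `driver.get_cookies()` returns a list of dicts. That list can be saved as JSON and later joined into a `Cookie` header for an HTTP client. The helper names and the `cookies.json` file name are hypothetical, and the sample cookies are made up.

```python
import json
import os
import tempfile


def selenium_cookies_to_header(cookies):
    """Join Selenium-style cookie dicts into one Cookie header value.

    `cookies` is assumed to look like the list returned by
    driver.get_cookies(): dicts with at least "name" and "value" keys.
    """
    return "; ".join(f"{c['name']}={c['value']}" for c in cookies)


def save_cookies(cookies, path):
    """Persist the cookie list as JSON so later crawler runs can reuse it."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(cookies, f, ensure_ascii=False)


def load_cookies(path):
    """Read back a cookie list written by save_cookies()."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    # Made-up stand-in for driver.get_cookies() after a successful login
    sample = [
        {"name": "session_id", "value": "abc123", "domain": ".example.com"},
        {"name": "lang", "value": "en", "domain": ".example.com"},
    ]
    path = os.path.join(tempfile.mkdtemp(), "cookies.json")
    save_cookies(sample, path)
    print(selenium_cookies_to_header(load_cookies(path)))
    # prints: session_id=abc123; lang=en
```

With `requests`, for instance, the header string could be passed as `headers={"Cookie": ...}`. To restore the cookies into another Selenium session, call `driver.add_cookie()` with one dict at a time, after first opening a page on the same domain.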

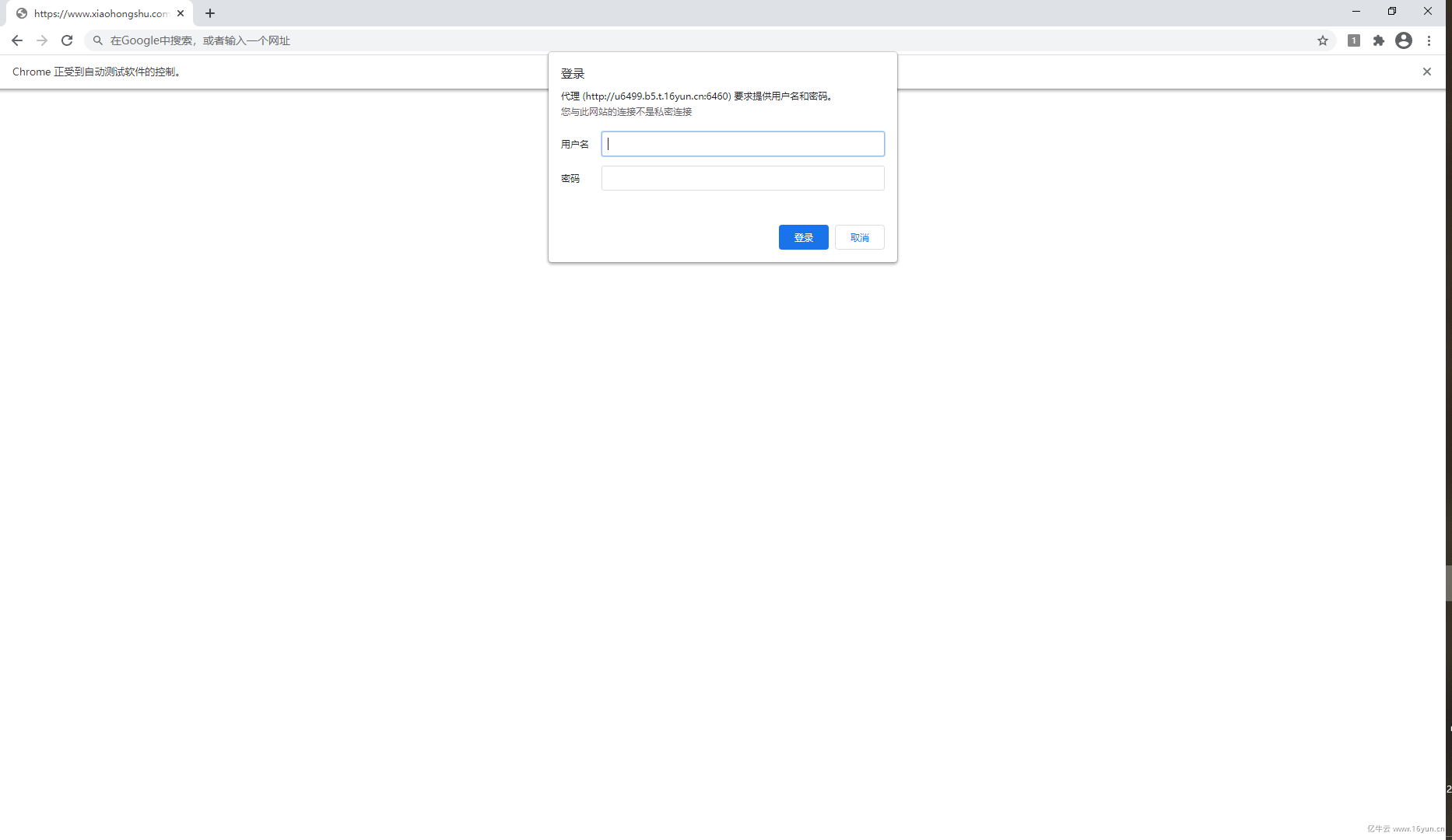

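Because of a business requirement, we needed to collect some data from Xiaohongshu. When the program visited the site through a proxy, a verification dialog popped up, as shown in the figure above.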

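I had never run into this before and assumed it was a proxy problem. Only after asking customer support did I learn that it came from my browser driver and browser version, and updating to a newer version fixed it. So here is how we use ChromeDriver to log in and generate cookies.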
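Since the verification dialog turned out to be a driver/browser version problem, it may be worth checking the versions before logging in. The sketch below is minimal and assumes Selenium 4 with ChromeDriver. A running Chrome session reports the browser version in the `browserVersion` capability (older setups used `version`) and the driver version under `chrome.chromedriverVersion`. The helper names are hypothetical. Comparing only the major version is an assumption, based on ChromeDriver being released per Chrome major version.

```python
def major_version(version_string):
    """Extract the major number from strings such as "114.0.5735.90"
    or "114.0.5735.90 (<build hash and branch>)"."""
    return int(version_string.split(".", 1)[0])


def versions_match(browser_version, driver_version):
    """True when Chrome and ChromeDriver share the same major version."""
    return major_version(browser_version) == major_version(driver_version)


def check_driver(driver):
    """Raise if a running Chrome session's browser and driver majors differ."""
    caps = driver.capabilities
    # W3C sessions report "browserVersion"; older ones used "version"
    browser = caps.get("browserVersion") or caps.get("version")
    chromedriver = caps["chrome"]["chromedriverVersion"]
    if not versions_match(browser, chromedriver):
        raise RuntimeError(
            f"Chrome {browser} vs ChromeDriver {chromedriver}: "
            "update the driver before logging in"
        )
    return browser, chromedriver
```

For example, call `check_driver(webdriver.Chrome())` right after creating the driver. Recent Selenium 4 releases (4.6 and later) also ship Selenium Manager, which can download a matching ChromeDriver automatically when none is found on the PATH.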

```python
import os
import time
import zipfile

from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait


class GenCookies(object):
    # One User-Agent string per line; splitlines() drops the trailing "\n"
    USER_AGENT = open('useragents.txt').read().splitlines()

    # 16yun proxy configuration
    PROXY_HOST = 't.16yun.cn'  # proxy or host
    PROXY_PORT = 31111  # port
    PROXY_USER = 'USERNAME'  # username
    PROXY_PASS = 'PASSWORD'  # password

    @classmethod
    def get_chromedriver(cls, use_proxy=False, user_agent=None):
        # manifest.json of a small Chrome extension that sets the proxy
        manifest_json = """
        {
            "version": "1.0.0",
            "manifest_version": 2,
            "name": "Chrome Proxy",
            "permissions": [
                "proxy",
                "tabs",
                "unlimitedStorage",
                "storage",
                "<all_urls>",
                "webRequest",
                "webRequestBlocking"
            ],
            "background": {
                "scripts": ["background.js"]
            },
            "minimum_chrome_version": "22.0.0"
        }
        """

        # background.js: route traffic through the proxy and answer its
        # authentication challenge with the configured credentials
        background_js = """
        var config = {
            mode: "fixed_servers",
            rules: {
                singleProxy: {
                    scheme: "http",
                    host: "%s",
                    port: parseInt(%s)
                },
                bypassList: ["localhost"]
            }
        };

        chrome.proxy.settings.set({value: config, scope: "regular"}, function() {});

        function callbackFn(details) {
            return {
                authCredentials: {
                    username: "%s",
                    password: "%s"
                }
            };
        }

        chrome.webRequest.onAuthRequired.addListener(
            callbackFn,
            {urls: ["<all_urls>"]},
            ['blocking']
        );
        """ % (cls.PROXY_HOST, cls.PROXY_PORT, cls.PROXY_USER, cls.PROXY_PASS)

        path = os.path.dirname(os.path.abspath(__file__))
        chrome_options = webdriver.ChromeOptions()
        if use_proxy:
            # Pack both files into a zip and load it as a Chrome extension
            pluginfile = 'proxy_auth_plugin.zip'
            with zipfile.ZipFile(pluginfile, 'w') as zp:
                zp.writestr("manifest.json", manifest_json)
                zp.writestr("background.js", background_js)
            chrome_options.add_extension(pluginfile)
```
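The `%` formatting in the extension code pastes the credentials straight into JavaScript string literals. A password containing `"` or `\` would therefore produce a broken `background.js`. The zip is also written into whatever the current working directory happens to be. The sketch below is a minimal alternative that assumes the same Manifest V2 extension layout. `json.dumps()` produces valid JavaScript string literals, and the zip goes into a temporary directory. `build_proxy_extension` is a hypothetical helper, not part of Selenium. Chrome has also been phasing out Manifest V2 extensions, so on recent Chrome versions this proxy-extension approach may stop loading.

```python
import json
import os
import tempfile
import zipfile

# Same Manifest V2 manifest as above, built as a dict so it is always valid JSON
MANIFEST = {
    "version": "1.0.0",
    "manifest_version": 2,
    "name": "Chrome Proxy",
    "permissions": ["proxy", "tabs", "unlimitedStorage", "storage",
                    "<all_urls>", "webRequest", "webRequestBlocking"],
    "background": {"scripts": ["background.js"]},
    "minimum_chrome_version": "22.0.0",
}

# Placeholders are filled with json.dumps() output (already quoted strings)
BACKGROUND_TEMPLATE = """
var config = {
  mode: "fixed_servers",
  rules: {
    singleProxy: {scheme: "http", host: %(host)s, port: %(port)d},
    bypassList: ["localhost"]
  }
};
chrome.proxy.settings.set({value: config, scope: "regular"}, function() {});
chrome.webRequest.onAuthRequired.addListener(
  function (details) {
    return {authCredentials: {username: %(user)s, password: %(password)s}};
  },
  {urls: ["<all_urls>"]},
  ["blocking"]
);
"""


def build_proxy_extension(host, port, user, password, out_dir=None):
    """Write the proxy-auth extension zip and return its path.

    json.dumps() turns each string into a valid JavaScript string literal,
    so quotes or backslashes in the password cannot break background.js.
    """
    background_js = BACKGROUND_TEMPLATE % {
        "host": json.dumps(host),
        "port": int(port),
        "user": json.dumps(user),
        "password": json.dumps(password),
    }
    out_dir = out_dir or tempfile.mkdtemp(prefix="proxy_ext_")
    path = os.path.join(out_dir, "proxy_auth_plugin.zip")
    with zipfile.ZipFile(path, "w") as zp:
        zp.writestr("manifest.json", json.dumps(MANIFEST, indent=2))
        zp.writestr("background.js", background_js)
    return path
```

Inside `get_chromedriver`, this helper could replace the inline zip step, e.g. `chrome_options.add_extension(build_proxy_extension(cls.PROXY_HOST, cls.PROXY_PORT, cls.PROXY_USER, cls.PROXY_PASS))`.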