I. Using the official log-type track, modify a challenge to use parallel so that reads and writes can be benchmarked concurrently against the same index

{

"name": "append-no-conflicts",

"description": "Indexes the whole document corpus using Elasticsearch default settings. We only adjust the number of replicas as we benchmark a single node cluster and Rally will only start the benchmark if the cluster turns green. Document ids are unique so all index operations are append only. After that a couple of queries are run.",

"default": true,

"schedule": [

{

"operation": "delete-index"

},

{

"operation": {

"operation-type": "create-index",

"settings": {{index_settings | default({}) | tojson}}

}

},

{

"name": "check-cluster-health",

"operation": {

"operation-type": "cluster-health",

"index": "logs-*",

"request-params": {

"wait_for_status": "{{cluster_health | default('green')}}",

"wait_for_no_relocating_shards": "true"

},

"retry-until-success": true

}

},

{

"parallel": {

"completed-by": "index-append", {# the whole parallel block is considered complete as soon as index-append finishes #}

"tasks": [

{

"operation": "index-append",

"warmup-time-period": 240,

"clients": {{bulk_indexing_clients | default(8)}}

},

{

"operation": "default",

"clients": 1,

"warmup-iterations": 500,

"iterations": 100,

"target-throughput": 8

},

{

"operation": "term",

"clients": 1,

"warmup-iterations": 500,

"iterations": 100,

"target-throughput": 50

},

{

"operation": "range",

"clients": 1,

"warmup-iterations": 100,

"iterations": 100,

"target-throughput": 1

},

{

"operation": "hourly_agg",

"clients": 1,

"warmup-iterations": 100,

"iterations": 100,

"target-throughput": 0.2

},

{

"operation": "scroll",

"clients": 1,

"warmup-iterations": 100,

"iterations": 200,

"#COMMENT": "Throughput is considered per request. So we issue one scroll request per second which will retrieve 25 pages",

"target-throughput": 1

}

]

}

}

]

},

{

"name": "append-no-conflicts-index-only",

"description": "Indexes the whole document corpus using Elasticsearch default settings. We only adjust the number of replicas as we benchmark a single node cluster and Rally will only start the benchmark if the cluster turns green. Document ids are unique so all index operations are append only.",

"schedule": [

{

"operation": "delete-index"

},

{

"operation": {

"operation-type": "create-index",

"settings": {{index_settings | default({}) | tojson}}

}

},

{

"name": "check-cluster-health",

"operation": {

"operation-type": "cluster-health",

"index": "logs-*",

"request-params": {

"wait_for_status": "{{cluster_health | default('green')}}",

"wait_for_no_relocating_shards": "true"

},

"retry-until-success": true

}

},

{

"operation": "index-append",

"warmup-time-period": 240,

"clients": {{bulk_indexing_clients | default(8)}}

},

{

"name": "refresh-after-index",

"operation": "refresh"

},

{

"operation": {

"operation-type": "force-merge",

"request-timeout": 7200

}

},

{

"name": "refresh-after-force-merge",

"operation": "refresh"

},

{

"name": "wait-until-merges-finish",

"operation": {

"operation-type": "index-stats",

"index": "_all",

"condition": {

"path": "_all.total.merges.current",

"expected-value": 0

},

"retry-until-success": true,

"include-in-reporting": false

}

}

]

},

{

"name": "append-sorted-no-conflicts",

"description": "Indexes the whole document corpus in an index sorted by timestamp field in descending order (most recent first) and using a setup that will lead to a larger indexing throughput than the default settings. Document ids are unique so all index operations are append only.",

"schedule": [

{

"operation": "delete-index"

},

{

"operation": {

"operation-type": "create-index",

"settings": {%- if index_settings is defined %} {{index_settings | tojson}} {%- else %} {

"index.sort.field": "@timestamp",

"index.sort.order": "desc"

}{%- endif %}

}

},

{

"name": "check-cluster-health",

"operation": {

"operation-type": "cluster-health",

"index": "logs-*",

"request-params": {

"wait_for_status": "{{cluster_health | default('green')}}",

"wait_for_no_relocating_shards": "true"

},

"retry-until-success": true

}

},

{

"operation": "index-append",

"warmup-time-period": 240,

"clients": {{bulk_indexing_clients | default(8)}}

},

{

"name": "refresh-after-index",

"operation": "refresh"

},

{

"operation": {

"operation-type": "force-merge",

"request-timeout": 7200

}

},

{

"name": "refresh-after-force-merge",

"operation": "refresh"

},

{

"name": "wait-until-merges-finish",

"operation": {

"operation-type": "index-stats",

"index": "_all",

"condition": {

"path": "_all.total.merges.current",

"expected-value": 0

},

"retry-until-success": true,

"include-in-reporting": false

}

}

]

},

{

"name": "append-index-only-with-ingest-pipeline",

"description": "Indexes the whole document corpus using Elasticsearch default settings. We only adjust the number of replicas as we benchmark a single node cluster and Rally will only start the benchmark if the cluster turns green. Document ids are unique so all index operations are append only. Runs the documents through an ingest node pipeline to parse the http logs. May require --elasticsearch-plugins='ingest-geoip'",

"schedule": [

{

"operation": "delete-index"

},

{

"operation": {

"operation-type": "create-index",

"settings": {{index_settings | default({}) | tojson}}

}

},

{

"name": "check-cluster-health",

"operation": {

"operation-type": "cluster-health",

"index": "logs-*",

"request-params": {

"wait_for_status": "{{cluster_health | default('green')}}",

"wait_for_no_relocating_shards": "true"

},

"retry-until-success": true

}

},

{

"operation": "create-http-log-{{ingest_pipeline | default('baseline')}}-pipeline"

},

{

"operation": "index-append-with-ingest-{{ingest_pipeline | default('baseline')}}-pipeline",

"warmup-time-period": 240,

"clients": {{bulk_indexing_clients | default(8)}}

},

{

"name": "refresh-after-index",

"operation": "refresh"

},

{

"operation": {

"operation-type": "force-merge",

"request-timeout": 7200

}

},

{

"name": "refresh-after-force-merge",

"operation": "refresh"

},

{

"name": "wait-until-merges-finish",

"operation": {

"operation-type": "index-stats",

"index": "_all",

"condition": {

"path": "_all.total.merges.current",

"expected-value": 0

},

"retry-until-success": true,

"include-in-reporting": false

}

}

]

}
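
To get a feel for how much of the indexing phase each query task in the parallel block of append-no-conflicts overlaps with, one can estimate each task's run time from its warmup-iterations, iterations and target-throughput. The sketch below is only a back-of-the-envelope calculation: it assumes that every query keeps up with its target throughput, and the helper names estimated_seconds and estimate_all are made up for illustration and are not part of Rally.

```python
# Rough run-time estimate for the throttled query tasks in the parallel block.
# Assumption: the cluster keeps up with target-throughput, so the run time is
# simply (warmup-iterations + iterations) / target-throughput.

# (warmup-iterations, iterations, target-throughput) copied from the challenge above
QUERY_TASKS = {
    "default": (500, 100, 8),
    "term": (500, 100, 50),
    "range": (100, 100, 1),
    "hourly_agg": (100, 100, 0.2),
    "scroll": (100, 200, 1),
}


def estimated_seconds(warmup_iterations, iterations, target_throughput):
    """Total number of requests divided by requests per second."""
    return (warmup_iterations + iterations) / target_throughput


def estimate_all(tasks):
    """Map each task name to its estimated run time in seconds."""
    return {name: estimated_seconds(*spec) for name, spec in tasks.items()}


if __name__ == "__main__":
    for name, seconds in estimate_all(QUERY_TASKS).items():
        print(f"{name:<12}{seconds:>8.0f} s")
```

Under these assumptions term is done after roughly 12 seconds while hourly_agg needs roughly 1000 seconds, so the short tasks only overlap with the beginning of a long bulk load. Because of completed-by, query tasks that are still running when index-append finishes end together with it. Raising iterations is one way to make the queries cover more of the indexing phase.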

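II. Generate the data and track from real business logs so that benchmark results reflect the actual workload more closely

1. Automatically generate the data and track from an existing cluster

This requires esrally 2.0 or later.

Reference: https://esrally.readthedocs.io/en/2.1.0/adding_tracks.html?highlight=custom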

esrally create-track --track=acme --target-hosts=127.0.0.1:9200 --indices="products,companies" --output-path=~/tracks

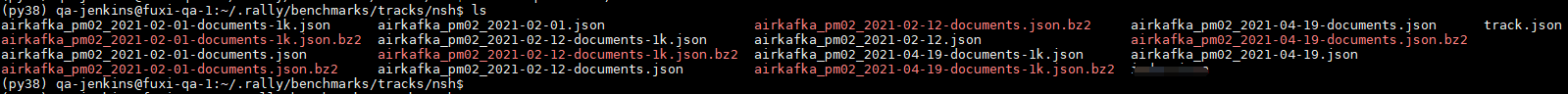

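The generated files look like this:

airkafka_pm02_2021-02-01-documents-1k.json: the -1k suffix marks the reduced data set that is used in test mode (--test-mode); it is small and meant for quick trial runs

airkafka_pm02_2021-02-01.json: the index definition for the corresponding log index

airkafka_pm02_2021-02-01-documents.json.offset: the offset file for the log document file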
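
The track lists a document-count, compressed-bytes and uncompressed-bytes for every corpus file, so it can be useful to recompute these figures from the files themselves before a run. The sketch below assumes a bz2-compressed corpus with one JSON document per line, such as airkafka_pm02_2021-02-01-documents.json.bz2; the function name corpus_stats is made up for illustration and is not part of esrally.

```python
import bz2
import os
import sys


def corpus_stats(path):
    """Return (document_count, uncompressed_bytes, compressed_bytes).

    Assumes a bz2 file that holds one JSON document per line.
    Empty lines are not counted as documents.
    """
    documents = 0
    uncompressed = 0
    with bz2.open(path, "rb") as corpus:
        for line in corpus:
            uncompressed += len(line)
            if line.strip():
                documents += 1
    return documents, uncompressed, os.path.getsize(path)


if __name__ == "__main__" and len(sys.argv) > 1:
    docs, raw, packed = corpus_stats(sys.argv[1])
    print(f"document-count:     {docs}")
    print(f"uncompressed-bytes: {raw}")
    print(f"compressed-bytes:   {packed}")
```

Run it with the path to a corpus file as the only argument and compare the three numbers with the corresponding entry in the track.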

{% import "rally.helpers" as rally with context %}

{

"version": 2,

"description": "Tracker-generated track for nsh",

"indices": [

{

"name": "airkafka_pm02_2021-02-12",

"body": "index.json" {# the JSON file used to create the index; you can edit or trim the index definition yourself #}

},

{

"name": "airkafka_pm02_2021-02-01",

"body": "index.json"

}

],

"corpora": [

{

"name": "nsh", {# the name of the subdirectory under the data directory #}

"documents": [

{

"target-index": "airkafka_pm02_2021-02-12",

"source-file": "airkafka_pm02_2021-02-12-documents.json.bz2",

"document-count": 14960567, {# this count may differ from the number of documents actually generated; if it does, correct it to match #}

"compressed-bytes": 814346714,

"uncompressed-bytes": 12138377222

},

{

"target-index": "airkafka_pm02_2021-02-01",

"source-file": "airkafka_pm02_2021-02-01-documents.json.bz2",

"document-count": 24000503,

"compressed-bytes": 1296215463,

"uncompressed-bytes": 19551041674

}

]

}

],

"schedule": [

{

"operation": "delete-index"

},

{

"operation": {

"operation-type": "create-index",

"settings": {{index_settings | default({}) | tojson}}

}

},

{

"operation": {

"operation-type": "cluster-health",

"index": "airkafka_pm02_2021-02-12,airkafka_pm02_2021-02-01",

"request-params": {

"wait_for_status": "{{cluster_health | default('green')}}",

"wait_for_no_relocating_shards": "true"

},

"retry-until-success": true

}

},

{

"operation": {

"operation-type": "bulk",

"bulk-size": {{bulk_size | default(5000)}},

"ingest-percentage": {{ingest_percentage | default(100)}}

},

"clients": {{bulk_indexing_clients | default(8)}}

},

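{# A generated track does not come with separate operation and challenge files the way the template track does, so everything has to be assembled inline in the track itself. The generated schedule normally ends with the bulk operation; if you need searches, add them yourself. #}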

{

"operation": {

"name": "default",

"operation-type": "search",

"index": "airkafka_pm02*",

"body": {

"query": {

"match_all": {}

}

}

},

"warmup-iterations": 500,

"iterations": 100,

"target-throughput": 8

},

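{

"operation": {

"name": "term",

"operation-type": "search",

"index": "airkafka_pm02*", {# a search operation usually consists of operation-type, index and body #}

"body": {

"query": {

"term": {

"log_id.raw": {

"value": "gm_client_app_profile_log" {# adapt the search condition to your own log data #}

}

}

}

}

},

"warmup-iterations": 500,

"iterations": 100,

"target-throughput": 50 {# If target-throughput is omitted, esrally sends requests as fast as it can, which measures maximum throughput; if it is set, requests are sent at the specified rate. Note that with a target throughput, service time and latency differ: latency also includes the time a request waits before it is sent, so it is at least as large as service time. To judge the actual Elasticsearch performance, look at service time. #}

},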

{

"operation": {

"name": "range",

"operation-type": "search",

"index": "airkafka_pm02*",

"body": {

"query": {

"range": {

"deveice_level": {

"gte": 0,

"lt": 3

}

}

}

}

},

"warmup-iterations": 100,

"iterations": 100,

"target-throughput": 1

},

{

"operation": {

"name": "hourly_agg",

"operation-type": "search",

"index": "airkafka_pm02*",

"body": {

"size": 0,

"aggs": {

"by_hour": {

"date_histogram": {

"field": "@timestamp",

"interval": "hour"

}

}

}

}

},

"warmup-iterations": 100,

"iterations": 100,

"target-throughput": 0.2

},

{

"operation": {

"name": "scroll",

"operation-type": "search",

"index": "airkafka_pm02*",

"pages": 25,

"results-per-page": 500,

"body": {

"query": {

"match_all": {}

}

}

},

"warmup-iterations": 100,

"iterations": 200,

"#COMMENT": "Throughput is considered per request. So we issue one scroll request per second which will retrieve 25 pages",

"target-throughput": 1

}

]

}
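
The difference between service time and latency that appears once target-throughput is set can be illustrated with a small simulation. This is a simplified model under stated assumptions, not Rally's actual implementation: a single client, a fixed request schedule derived from the target throughput, and made-up service times. The function name simulate is hypothetical.

```python
def simulate(target_throughput, service_times):
    """Simulate one client that issues requests on a fixed schedule.

    Request i is scheduled at i / target_throughput seconds, but it can only
    start once the previous request has finished. Service time is measured
    from the actual start, latency from the scheduled time, so latency
    includes the time the request had to wait.

    Returns a list of (service_time, latency) tuples in seconds.
    """
    interval = 1.0 / target_throughput
    free_at = 0.0  # point in time at which the client is idle again
    results = []
    for i, service_time in enumerate(service_times):
        scheduled = i * interval
        start = max(scheduled, free_at)
        free_at = start + service_time
        results.append((service_time, free_at - scheduled))
    return results


if __name__ == "__main__":
    # 2 requests per second; the second request is slow (1.0 s)
    for service_time, latency in simulate(2, [0.25, 1.0, 0.25, 0.25]):
        print(f"service time {service_time:.2f} s, latency {latency:.2f} s")
```

In this example the one slow request delays the two requests scheduled after it: their service time stays at 0.25 s, while their latency rises to 0.75 s and 0.50 s. This is why service time is the better indicator of how Elasticsearch itself performs.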

Original post: https://www.cnblogs.com/to-here/p/14701421.html