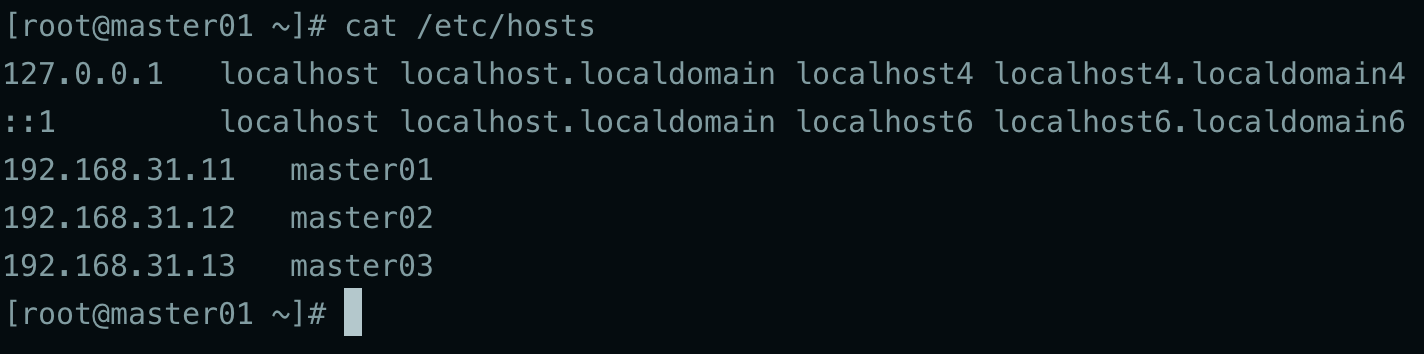

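The three virtual machines have the IP addresses 192.168.31.11, 192.168.31.12, and 192.168.31.13.

The k8s Service subnet also has to be planned. This guide uses 10.0.8.1/12. It is the virtual range from which Service cluster IPs are allocated, not the network the three machines use to talk to each other.

The Pod subnet needs planning as well. This guide uses 172.16.0.0/12, the range used for traffic between Pod containers. Note that the sample kubeadm configuration further down sets podSubnet to 172.16.0.0/24 and serviceSubnet to 10.96.0.0/24; whichever ranges you choose, use them consistently.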
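Before going further it can help to confirm that the planned ranges do not collide with the host network. The following is a minimal sketch in plain POSIX shell. The function names (`ip_to_int`, `cidr_range`, `cidrs_overlap`) are illustrative, and the host range 192.168.31.0/24 is an assumption inferred from the three VM addresses.

```shell
#!/bin/sh
# Hypothetical helpers for sanity-checking the planned subnets.

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for a CIDR such as 172.16.0.0/12.
cidr_range() {
  base=$(ip_to_int "${1%/*}")
  bits=${1#*/}
  size=$(( 1 << (32 - bits) ))
  first=$(( base / size * size ))   # round down to the network address
  echo "$first $(( first + size - 1 ))"
}

# Succeed (exit status 0) if the two CIDRs share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  # [a1,a2] and [b1,b2] intersect when a1 <= b2 and b1 <= a2.
  [ $(( $1 <= $4 && $3 <= $2 )) -eq 1 ]
}

HOST_NET=192.168.31.0/24   # assumption: the VMs sit in a /24
for net in 10.0.8.1/12 172.16.0.0/12; do
  if cidrs_overlap "$HOST_NET" "$net"; then
    echo "WARNING: $net overlaps the host network $HOST_NET"
  else
    echo "OK: $net does not overlap $HOST_NET"
  fi
done
```

`cidr_range` rounds the address down to the network boundary, so an input such as 10.0.8.1/12 is treated as the /12 block that contains it.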

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

systemctl disable --now firewalld

systemctl disable --now dnsmasq

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

# Disable swap

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
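By default kubelet refuses to start while swap is active, so it is worth confirming that nothing in /etc/fstab brings swap back after a reboot. A small sketch, assuming the standard fstab column layout; `active_swap_entries` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the device of every fstab entry of type "swap" that is not commented out.
# The file defaults to /etc/fstab; pass another path for testing.
active_swap_entries() {
  awk '$1 !~ /^#/ && $3 == "swap" { print $1 }' "${1:-/etc/fstab}"
}

# Example: report anything that would re-enable swap at boot.
if [ -r /etc/fstab ]; then
  left=$(active_swap_entries /etc/fstab)
  if [ -n "$left" ]; then
    echo "swap still enabled in /etc/fstab for: $left"
  else
    echo "no active swap entries in /etc/fstab"
  fi
fi
```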

# Install the ntpdate time synchronization tool

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install ntpdate -y

# Synchronize the time on all nodes

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

# Add the following entry to crontab

crontab -e

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com

# Configure limits on all nodes

ulimit -SHn 65535

vim /etc/security/limits.conf

# Append the following at the end of the file

* soft nofile 65536

* hard nofile 131072

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited
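A soft limit cannot exceed its hard limit, so it is worth checking the file after editing. The following is a rough sketch that only compares numeric values and ignores entries such as `unlimited`; `check_limits` is a hypothetical helper name.

```shell
#!/bin/sh
# Report limits.conf items whose numeric soft value is larger than the hard value.
check_limits() {
  awk '
    $1 !~ /^#/ && NF >= 4 && $4 ~ /^[0-9]+$/ {
      key = $1 " " $3                      # e.g. "* nofile"
      if ($2 == "soft") soft[key] = $4 + 0
      if ($2 == "hard") hard[key] = $4 + 0
    }
    END {
      for (key in soft)
        if ((key in hard) && soft[key] > hard[key])
          printf "%s: soft %d > hard %d\n", key, soft[key], hard[key]
    }
  ' "$1"
}

# Example: check the live file if it is readable.
if [ -r /etc/security/limits.conf ]; then
  check_limits /etc/security/limits.conf
fi
```

No output means no numeric soft value is above its hard value.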

ssh-keygen -t rsa

for i in master01 master02 master03;do ssh-copy-id -i .ssh/id_rsa.pub $i;done
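The loop above only works if master01, master02, and master03 resolve to the right machines. If no DNS is available, /etc/hosts entries are one way to do this. A sketch, assuming the names map to 192.168.31.11 through 192.168.31.13 in order (the same mapping the HAProxy backend section of this guide uses); both function names are hypothetical.

```shell
#!/bin/sh
# Print the hosts entries for the three masters.
# Assumption: master01..03 map to 192.168.31.11..13 in that order.
hosts_entries() {
  i=1
  for ip in 192.168.31.11 192.168.31.12 192.168.31.13; do
    printf '%s master%02d\n' "$ip" "$i"
    i=$((i + 1))
  done
}

# Append to the given hosts file only the names that are not in it yet.
add_missing_hosts() {
  hosts_entries | while read -r ip name; do
    grep -qw "$name" "$1" || echo "$ip $name" >> "$1"
  done
}

hosts_entries   # preview only; run `add_missing_hosts /etc/hosts` as root to apply
```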

# Download the installation source files

cd /root/ ; git clone https://github.com/dotbalo/k8s-ha-install.git

# If the download fails, use https://gitee.com/dukuan/k8s-ha-install.git instead

# Upgrade all nodes and reboot

yum update -y --exclude=kernel* && reboot

# On CentOS 7, upgrade the kernel on all machines to 4.19

cd /root

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

# Install the kernel upgrade packages on all nodes

cd /root

yum localinstall -y kernel-ml*

# Change the default boot kernel

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

# Check that the default kernel is 4.19

grubby --default-kernel

# Reboot all nodes, then check the kernel version again

reboot

uname -a

# Install ipvsadm on all nodes

yum install ipvsadm ipset sysstat conntrack libseccomp -y

# Configure the IPVS modules on all nodes. On kernel 4.19 and later use nf_conntrack; on 4.18 and earlier use nf_conntrack_ipv4

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack
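The choice between nf_conntrack and nf_conntrack_ipv4 depends on the running kernel, so it can be derived from `uname -r` rather than typed by hand. A minimal sketch; `conntrack_module` is a hypothetical helper name and the 4.19 threshold is the one stated in the comment above.

```shell
#!/bin/sh
# Print the conntrack module name for a kernel release string.
# Uses the running kernel when no argument is given.
conntrack_module() {
  ver=${1:-$(uname -r)}
  major=${ver%%.*}            # "4" from "4.19.12-1.el7..."
  rest=${ver#*.}              # "19.12-1.el7..."
  minor=${rest%%[!0-9]*}      # "19"
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

echo "kernel $(uname -r) -> $(conntrack_module)"
# To load it: modprobe -- "$(conntrack_module)"
```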

vim /etc/modules-load.d/ipvs.conf

# Add the following content

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

systemctl enable --now systemd-modules-load.service

# Set the kernel parameters a k8s cluster requires; apply on all nodes:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

net.ipv4.conf.all.route_localnet = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
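With a long parameter list it is easy to repeat a key or to include one the running kernel does not expose. The sketch below lists both cases for a sysctl file. The helper names are hypothetical, and the second argument of `missing_sysctl_keys` exists only so the check can be tried against a directory other than /proc/sys.

```shell
#!/bin/sh
# Print every key of a sysctl file, skipping comments and blank lines.
sysctl_keys() {
  awk -F= '
    /^[ \t]*[#;]/ || /^[ \t]*$/ { next }
    { key = $1; gsub(/[ \t]/, "", key); print key }
  ' "$1"
}

# Print keys that are assigned more than once.
duplicate_sysctl_keys() {
  sysctl_keys "$1" | sort | uniq -d
}

# Print keys that have no matching entry below the given root (default /proc/sys).
missing_sysctl_keys() {
  root=${2:-/proc/sys}
  sysctl_keys "$1" | while read -r key; do
    [ -e "$root/$(echo "$key" | tr . /)" ] || echo "$key"
  done
}

# Example:
#   duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
#   missing_sysctl_keys /etc/sysctl.d/k8s.conf
```

A missing net.bridge.* key usually means the br_netfilter kernel module is not loaded yet.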

Install docker-ce

yum install docker-ce-20.10.* docker-ce-cli-20.10.* -y

Newer kubelet versions recommend the systemd cgroup driver, so change Docker's cgroup driver to systemd

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl daemon-reload && systemctl enable --now docker

Install the 1.21.x k8s components

yum install kubeadm-1.21* kubelet-1.21* kubectl-1.21* -y

The default pause image is pulled from the gcr.io registry, which is hosted abroad; configure kubelet to use the Alibaba Cloud pause image instead

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1"

EOF

Enable the kubelet service at boot

systemctl daemon-reload && systemctl enable --now kubelet

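High availability can be provided by a hardware load balancer; on a public cloud, use the provider's load balancing service instead. This guide uses keepalived and HAProxy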

yum install keepalived haproxy -y

After installation, configure HAProxy and keepalived

mkdir -p /etc/haproxy/

vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server master01 192.168.31.11:6443 check

server master02 192.168.31.12:6443 check

server master03 192.168.31.13:6443 check

vim /etc/keepalived/keepalived.conf

Note: change the IP address and the network interface name (interface) to match each machine

Master01

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 192.168.31.11

virtual_router_id 51

priority 101

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.31.200

}

track_script {

chk_apiserver

}

}

Master02

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

mcast_src_ip 192.168.31.12

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.31.200

}

track_script {

chk_apiserver

}

}

Master03

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

mcast_src_ip 192.168.31.13

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.31.200

}

track_script {

chk_apiserver

}

}
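All three keepalived configurations reference /etc/keepalived/check_apiserver.sh, which is not shown in this post. The following is a minimal sketch of what such a check could look like, assuming it is enough to verify that an HAProxy process is alive. The function name, the retry count, and the `RETRY_DELAY` variable are illustrative choices, not the original script.

```shell
#!/bin/sh
# Sketch of /etc/keepalived/check_apiserver.sh (not the original script).

# Run a probe command up to $1 times; succeed as soon as one attempt succeeds.
# RETRY_DELAY (seconds between attempts) defaults to 1.
probe_with_retries() {
  tries=$1
  shift
  n=1
  while [ "$n" -le "$tries" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    n=$((n + 1))
    [ "$n" -le "$tries" ] && sleep "${RETRY_DELAY:-1}"
  done
  return 1
}

# Only act when installed under the name keepalived calls.
# Assumption: HAProxy runs as a process named "haproxy".
case "$0" in
  *check_apiserver.sh)
    probe_with_retries 3 pgrep -x haproxy || exit 1
    ;;
esac
```

The file has to be executable (`chmod +x /etc/keepalived/check_apiserver.sh`). A non-zero exit status marks the check as failed, and keepalived then applies the `weight -5` from the `vrrp_script` block to that node's priority.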

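Create kubeadm-config.yaml on master01. Adjust the hostname, host IP, load balancer IP, k8s version, and the Pod and k8s Service subnets to your environment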

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: 7t2weq.bjbawausm0jaxury

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.31.11

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

name: master01

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

---

apiServer:

certSANs:

- 192.168.31.200

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: 192.168.31.200:16443

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.21.0

networking:

dnsDomain: cluster.local

podSubnet: 172.16.0.0/24

serviceSubnet: 10.96.0.0/24

scheduler: {}
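Since the subnets are easy to mistype, it can help to read them back from the file and compare them with the plan before initializing. A rough sketch that does a plain text match rather than real YAML parsing; `yaml_value` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the value of the first "key: value" line whose key matches.
# This is a plain text match, not a YAML parser.
yaml_value() {
  awk -v key="$1:" '$1 == key { print $2; exit }' "$2"
}

cfg=${1:-kubeadm-config.yaml}
if [ -r "$cfg" ]; then
  echo "podSubnet:            $(yaml_value podSubnet "$cfg")"
  echo "serviceSubnet:        $(yaml_value serviceSubnet "$cfg")"
  echo "controlPlaneEndpoint: $(yaml_value controlPlaneEndpoint "$cfg")"
fi
```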

Migrate the kubeadm configuration file to the current format

kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

Copy the newly generated new.yaml to /root on the other nodes

scp new.yaml master02:/root/

scp new.yaml master03:/root/

Pull the images on all nodes

kubeadm config images pull --config /root/new.yaml

If the pull fails because the coredns image does not exist, pull it from another registry and retag it

docker pull registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.8.0

docker tag registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.8.0 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

Enable kubelet at boot

systemctl enable --now kubelet

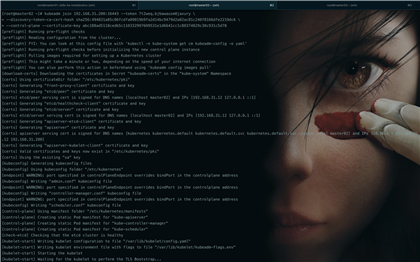

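Initialize the cluster on master01. Initialization generates the certificates and configuration files under /etc/kubernetes; afterwards the other master nodes simply join master01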

kubeadm init --config /root/new.yaml --upload-certs

If initialization fails, reset with the command below and then initialize again

kubeadm reset -f ; ipvsadm --clear ; rm -rf ~/.kube

After a successful initialization, record the generated token

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.31.200:16443 --token 7t2weq.bjbawausm0jaxury --discovery-token-ca-cert-hash sha256:61cbc428acad2f80374d36aea9ee7d69701610ab7ef250f6aaa4c2f22f6c9eff --control-plane --certificate-key 36590af77695b44ab62705187bdc0b9bbeb4df3f82e98087623b8f6df7c3f326

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.31.200:16443 --token 7t2weq.bjbawausm0jaxury --discovery-token-ca-cert-hash sha256:61cbc428acad2f80374d36aea9ee7d69701610ab7ef250f6aaa4c2f22f6c9eff

Configure the environment variable on master01

cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

source /root/.bashrc

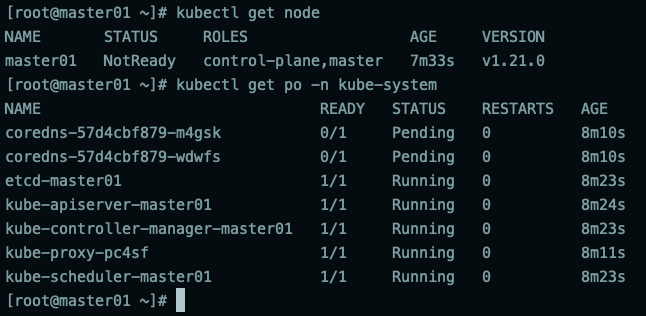

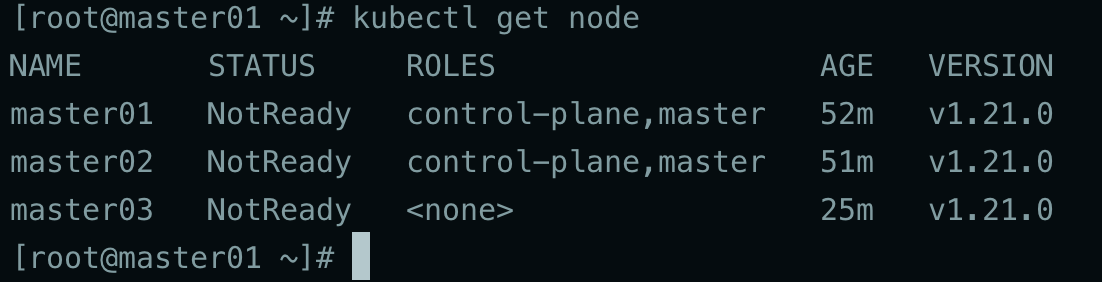

Check the node and Pod status

Once the token has expired, generate a new one with

kubeadm token create --print-join-command

Master nodes additionally need a new --certificate-key

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

kubeadm init phase upload-certs --upload-certs
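As the kubeadm output earlier shows, the control-plane join command is the worker join command plus `--control-plane` and `--certificate-key`. A small sketch of putting the two regenerated pieces together; `control_plane_join` is a hypothetical helper, and taking the key from the last output line of the upload-certs command is an assumption to verify on your own cluster.

```shell
#!/bin/sh
# Build a control-plane join command from a worker join command and a certificate key.
control_plane_join() {
  printf '%s --control-plane --certificate-key %s\n' "$1" "$2"
}

# Example on master01 (assumption: the certificate key is the last line printed):
#   join_cmd=$(kubeadm token create --print-join-command)
#   cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   control_plane_join "$join_cmd" "$cert_key"
```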

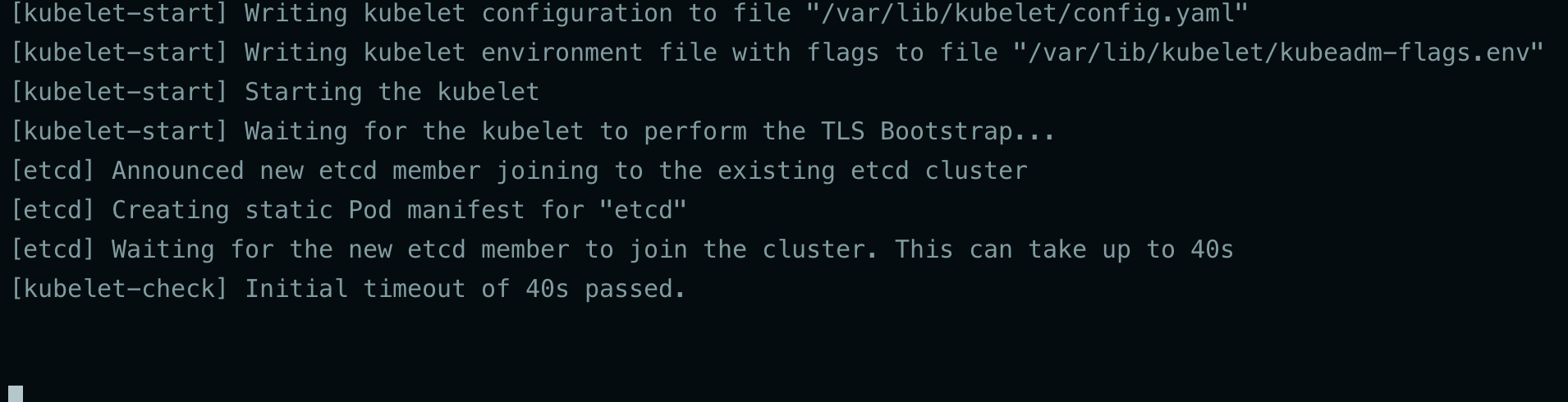

Next, on master02 and master03, join the cluster with the token generated earlier

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.31.200:16443 --token 7t2weq.bjbawausm0jaxury --discovery-token-ca-cert-hash sha256:61cbc428acad2f80374d36aea9ee7d69701610ab7ef250f6aaa4c2f22f6c9eff --control-plane --certificate-key 36590af77695b44ab62705187bdc0b9bbeb4df3f82e98087623b8f6df7c3f326

Worker nodes, if any, join the cluster with the command below

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.31.200:16443 --token 7t2weq.bjbawausm0jaxury --discovery-token-ca-cert-hash sha256:61cbc428acad2f80374d36aea9ee7d69701610ab7ef250f6aaa4c2f22f6c9eff

If joining fails, run the reset command on the machine that failed and then run the join command above again

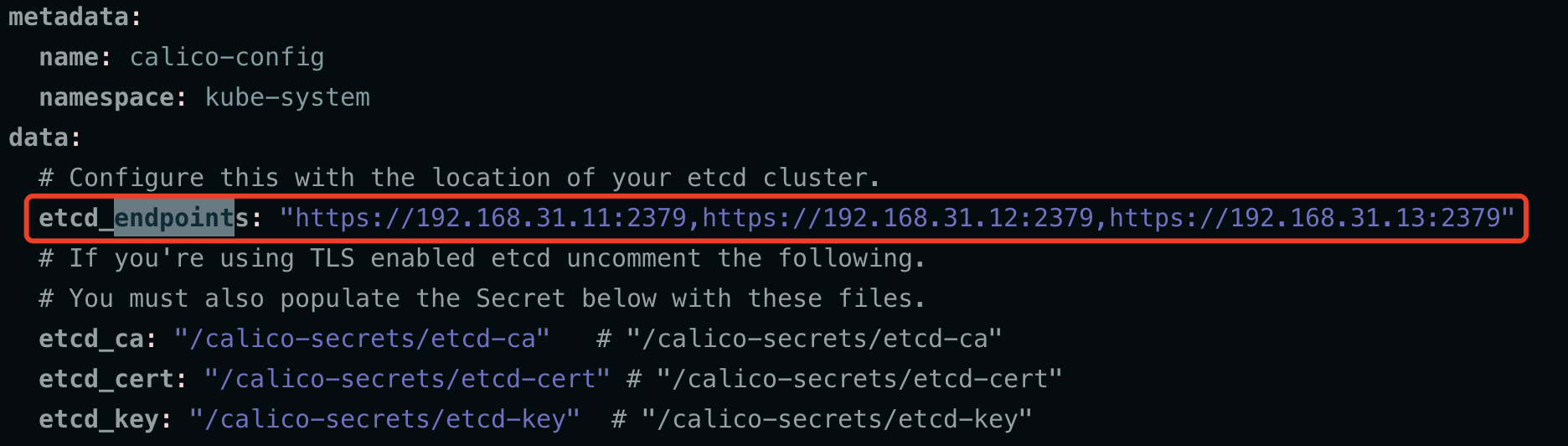

Switch the git branch and edit the configuration file

cd /root/k8s-ha-install && git checkout manual-installation-v1.21.x && cd calico/

# Set the etcd cluster IP addresses

sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.31.11:2379,https://192.168.31.12:2379,https://192.168.31.13:2379"#g' calico-etcd.yaml

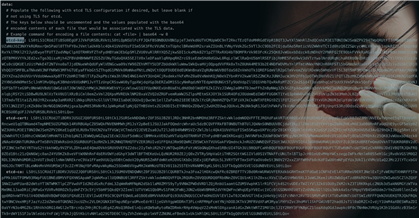

# Add the etcd_ca, etcd_cert, and etcd_key values to the configuration file

ETCD_CA=`cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'`

ETCD_CERT=`cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'`

ETCD_KEY=`cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'`

sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

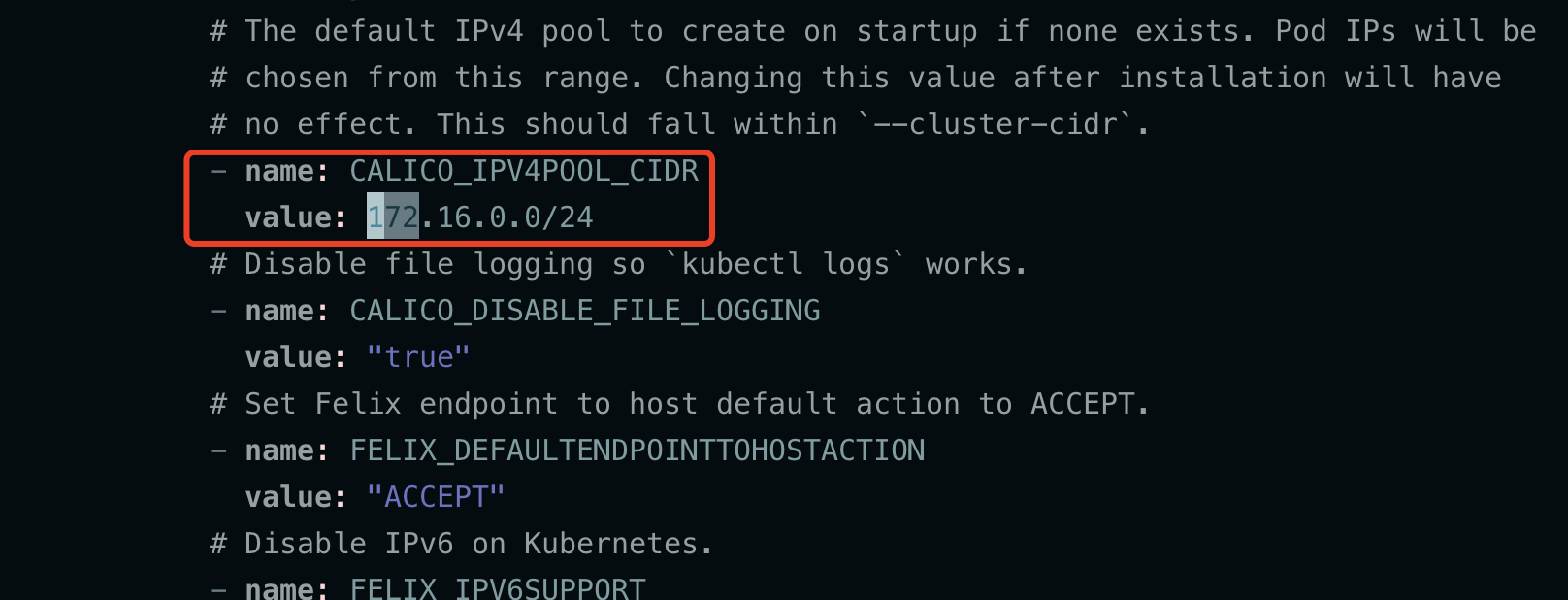

# Set the Pod subnet

POD_SUBNET=`cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'`

sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "172.16.0.0/12"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml
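If the `--cluster-cidr` flag is not found, `POD_SUBNET` ends up empty and the sed above writes an empty value into calico-etcd.yaml. A guarded variant of the extraction could look like this sketch; `cluster_cidr` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the value of --cluster-cidr from a kube-controller-manager manifest.
cluster_cidr() {
  sed -n 's/.*--cluster-cidr=\([^ ]*\).*/\1/p' "$1"
}

manifest=/etc/kubernetes/manifests/kube-controller-manager.yaml
if [ -r "$manifest" ]; then
  POD_SUBNET=$(cluster_cidr "$manifest")
  if [ -z "$POD_SUBNET" ]; then
    echo "cluster-cidr not found in $manifest; leave calico-etcd.yaml untouched" >&2
  else
    echo "Pod subnet: $POD_SUBNET"
  fi
fi
```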

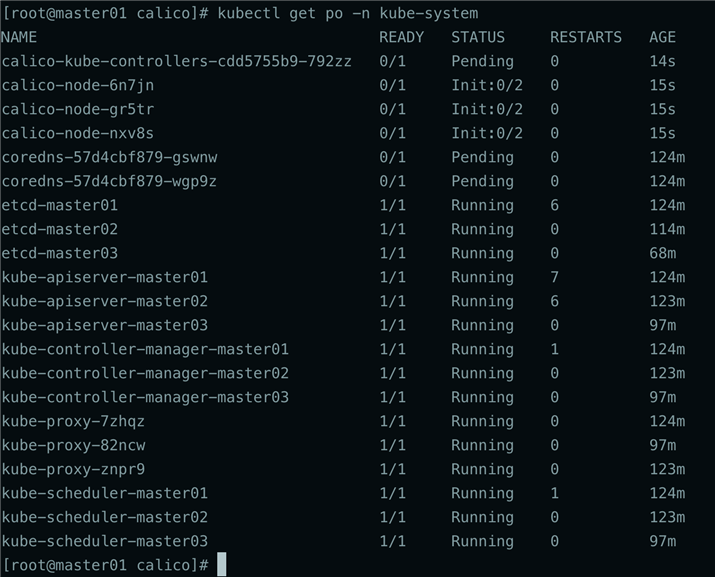

# Install calico from the configuration file

kubectl apply -f calico-etcd.yaml

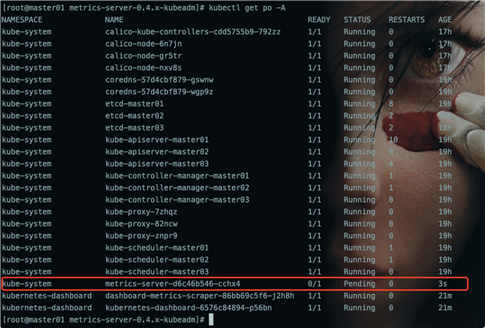

Newer k8s versions use metrics-server to collect memory, disk, CPU, and network usage for nodes and Pods

cd /root/k8s-ha-install/metrics-server-0.4.x-kubeadm/

kubectl create -f comp.yaml

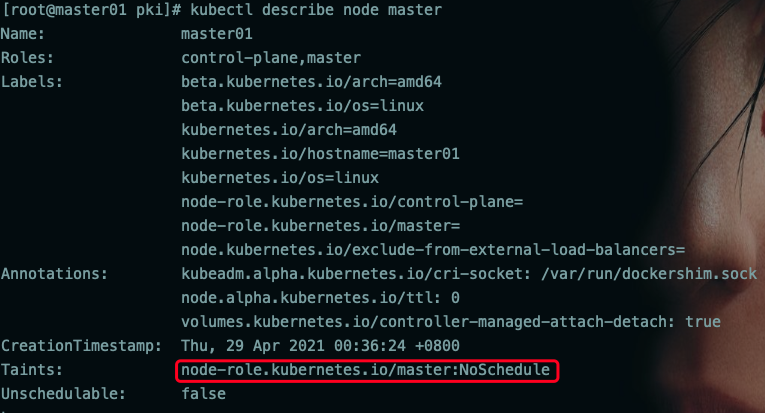

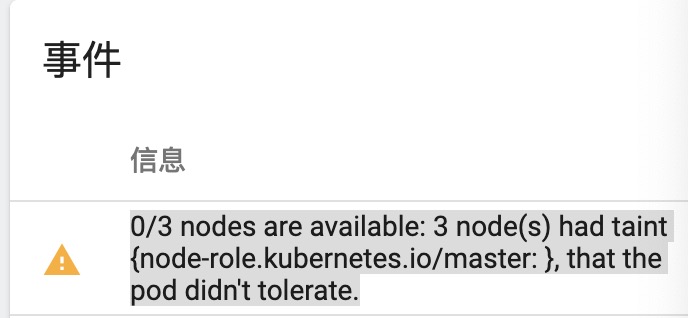

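To allow Pods to be scheduled on the master nodes, remove the taint with the command below

In production, keep the taint so that Pods are not scheduled onto the master nodes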

kubectl taint node -l node-role.kubernetes.io/master node-role.kubernetes.io/master:NoSchedule-

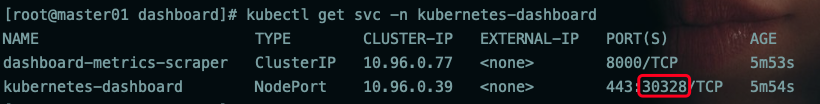

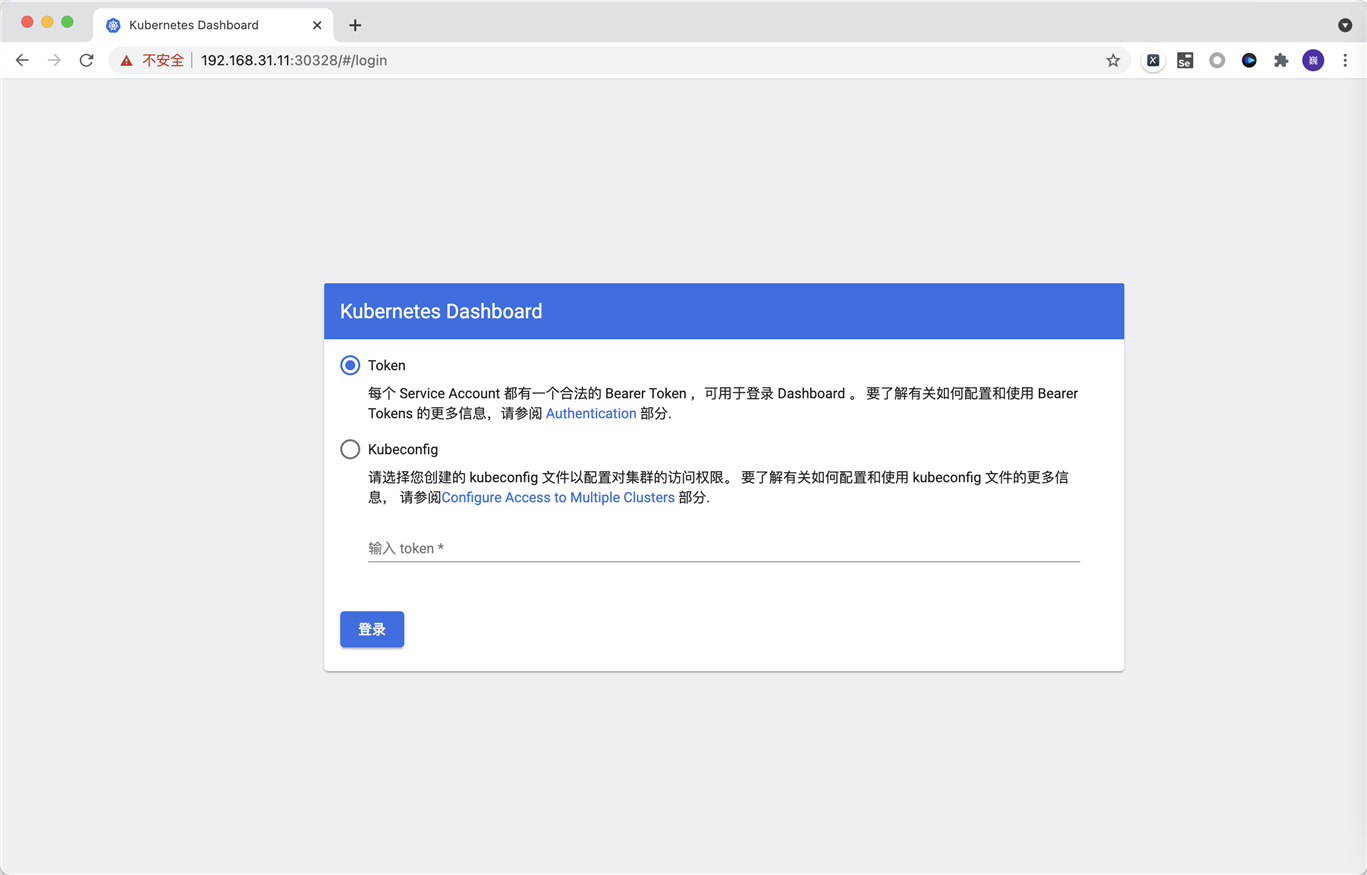

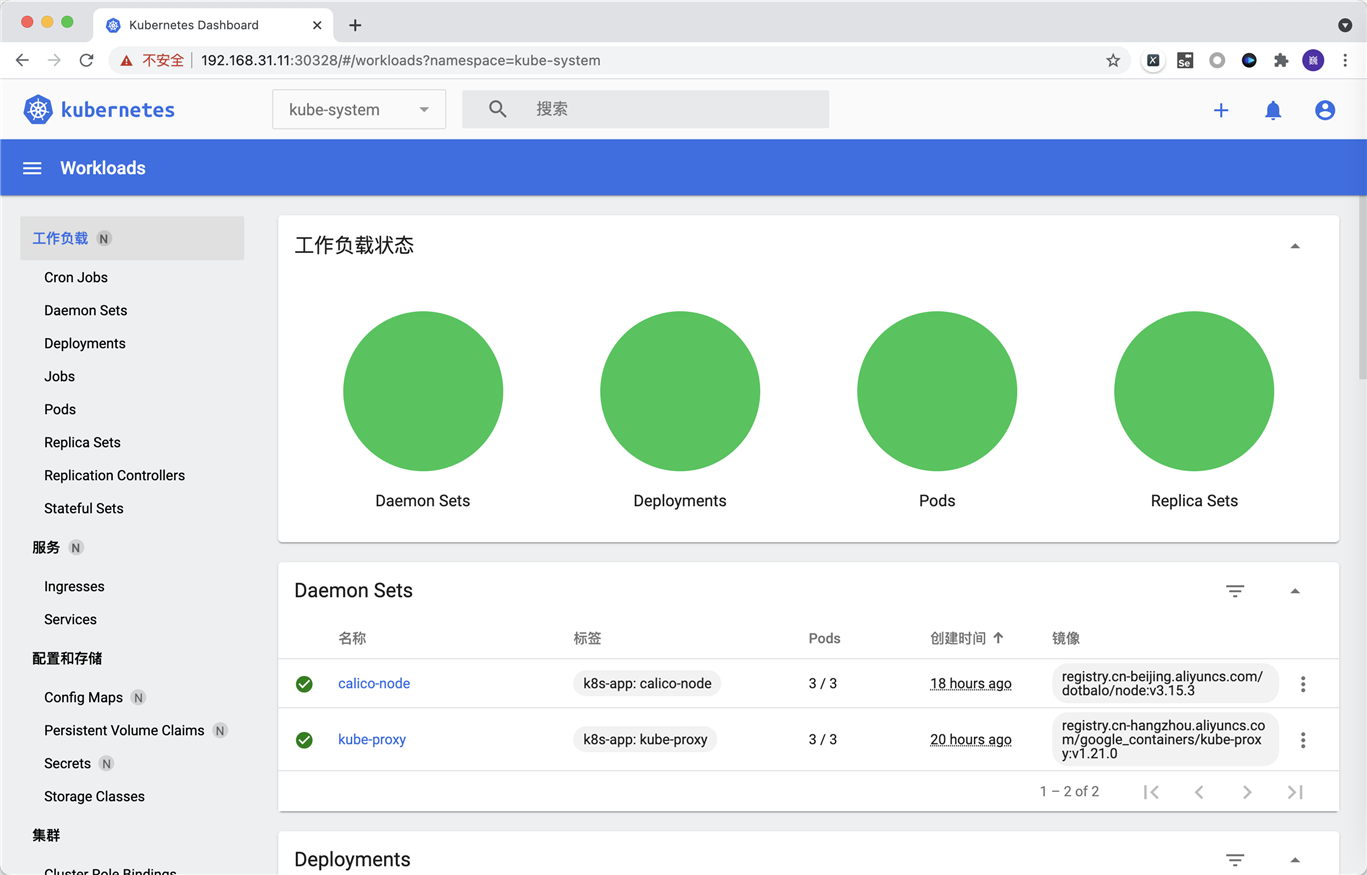

[root@master01 dashboard]# ll

总用量 12

-rw-r--r-- 1 root root 429 4月 29 01:38 dashboard-user.yaml

-rw-r--r-- 1 root root 7627 4月 29 01:38 dashboard.yaml

[root@master01 dashboard]# kubectl create -f .

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

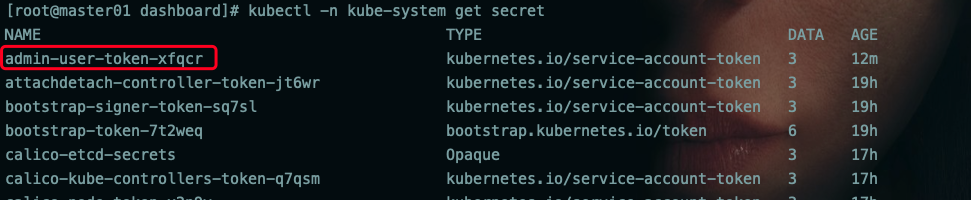

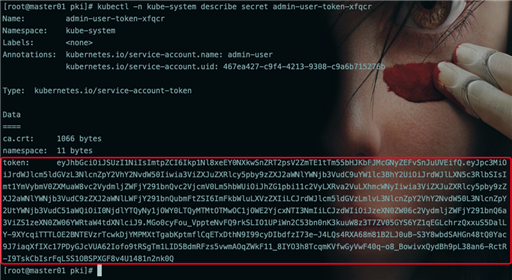

kubectl -n kube-system describe secret admin-user-token-xfqcr
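The suffix of the secret name (`xfqcr` here) is generated per cluster, so the name has to be looked up first. A small sketch that picks it out of the `kubectl get secret` listing; `admin_secret_name` is a hypothetical helper that reads the listing on standard input.

```shell
#!/bin/sh
# Read a `kubectl get secret` listing on stdin and print the first
# secret whose name starts with "admin-user-token-".
admin_secret_name() {
  awk '$1 ~ /^admin-user-token-/ { print $1; exit }'
}

# Example on master01:
#   name=$(kubectl -n kube-system get secret | admin_secret_name)
#   kubectl -n kube-system describe secret "$name"
```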

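1. Pods must be able to resolve Services

2. Pods must be able to resolve Services in other namespaces

3. Every node must be able to reach the kubernetes Service on port 443 and the kube-dns Service on port 53

4. Pods must be able to reach each other

a) within the same namespace

b) across namespaces

c) across machines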
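The checklist above can be turned into a small pass/fail harness. The sketch below defines only the harness. The example checks in the comments rest on assumptions: a Pod named `busybox` that ships `nslookup` is running in the default namespace, and 10.96.0.1 is the cluster IP reported by `kubectl get svc kubernetes`.

```shell
#!/bin/sh
passed=0
failed=0

# Run a command quietly and record whether it succeeded.
check() {
  desc=$1
  shift
  if "$@" >/dev/null 2>&1; then
    passed=$((passed + 1))
    echo "PASS: $desc"
  else
    failed=$((failed + 1))
    echo "FAIL: $desc"
  fi
}

# Print the totals; succeed only if nothing failed.
summary() {
  echo "passed=$passed failed=$failed"
  [ "$failed" -eq 0 ]
}

# Example usage on master01 (see the assumptions in the text above):
#   check "resolve Service in same namespace" kubectl exec busybox -- nslookup kubernetes
#   check "resolve Service across namespaces" kubectl exec busybox -- nslookup kube-dns.kube-system
#   check "kubernetes Service on 443" curl -k -s -m 3 -o /dev/null https://10.96.0.1:443/version
#   summary
```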

k8s study notes: installing k8s 1.21.x with kubeadm (part 1)

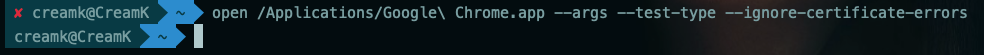

Original post: https://www.cnblogs.com/creamk87/p/14706921.html