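1. Converting between pandas and Spark DataFrames

df_s = spark.createDataFrame(df_p)  # pandas DataFrame -> Spark DataFrame

df_p = df_s.toPandas()  # Spark DataFrame -> pandas DataFrame (collects all rows to the driver)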
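As a rough illustration of the two calls above, the sketch below wraps the round trip in a helper. The names `round_trip` and `demo`, the app name, and the sample values are assumptions made for this example; they are not from the original notes.

```python
def round_trip(spark, df_p):
    """Convert a pandas DataFrame to a Spark DataFrame and back again."""
    # Spark infers the column types from the pandas dtypes.
    df_s = spark.createDataFrame(df_p)
    # toPandas() pulls every row onto the driver, so it only suits small results.
    return df_s.toPandas()


def demo():
    """Hypothetical usage; needs pandas, pyspark, and a Java runtime, so it is not called here."""
    import pandas as pd
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("round-trip-demo").getOrCreate()
    df_p = pd.DataFrame({"name": ["a", "b"], "score": [1, 2]})  # made-up sample data
    print(round_trip(spark, df_p))
    spark.stop()
```

Because `toPandas()` materializes the whole dataset in driver memory, it is usually safer to filter or aggregate in Spark first and convert only the reduced result.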

2. Comparing DataFrames in Spark and pandas

http://www.lining0806.com/spark%E4%B8%8Epandas%E4%B8%ADdataframe%E5%AF%B9%E6%AF%94/

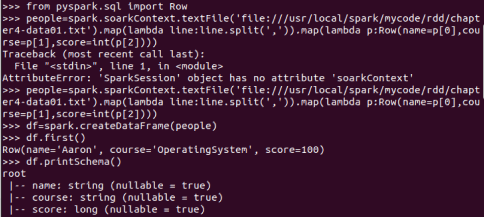

3.1 Inferring the RDD schema via reflection

    from pyspark.sql import Row

    # Parse each "name,course,score" line into a Row; Spark infers the field types from the Row values.
    people = spark.sparkContext.textFile('file:///usr/local/spark/mycode/rdd/chapter4-data01.txt') \
        .map(lambda line: line.split(',')) \
        .map(lambda p: Row(name=p[0], course=p[1], score=int(p[2])))
    df = spark.createDataFrame(people)
    df.first()
    df.printSchema()

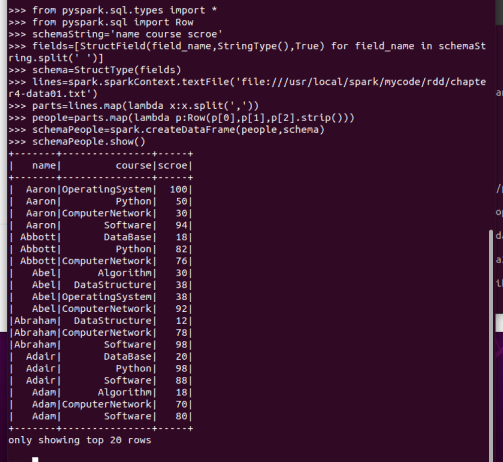
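The reflection approach above fails as soon as a line has a missing field or a non-numeric score, because `int(p[2])` raises inside the task. A minimal sketch of a more defensive variant follows; the helper names `parse_record` and `build_people_df` are hypothetical, and the three-field line layout is taken from the code above.

```python
def parse_record(line):
    """Split one 'name,course,score' line into a dict, or return None if it is malformed."""
    parts = [field.strip() for field in line.split(',')]
    if len(parts) != 3:
        return None
    name, course, score = parts
    try:
        return {"name": name, "course": course, "score": int(score)}
    except ValueError:
        # The score field is not an integer.
        return None


def build_people_df(spark, path):
    """Hypothetical wrapper; needs a live SparkSession, so it is not called here."""
    from pyspark.sql import Row  # imported lazily so parse_record works without Spark

    records = (
        spark.sparkContext.textFile(path)
        .map(parse_record)
        .filter(lambda rec: rec is not None)  # drop malformed lines
        .map(lambda rec: Row(**rec))          # field names become column names
    )
    return spark.createDataFrame(records)
```

Keeping the parsing in a plain function makes it possible to unit-test the line handling without starting Spark.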

3.2 Defining the RDD schema programmatically

    from pyspark.sql.types import StructType, StructField, StringType
    from pyspark.sql import Row

    # Build the schema from a space-separated list of column names; every column is a nullable string.
    schemaString = 'name course score'
    fields = [StructField(field_name, StringType(), True) for field_name in schemaString.split(' ')]
    schema = StructType(fields)

    lines = spark.sparkContext.textFile('file:///usr/local/spark/mycode/rdd/chapter4-data01.txt')
    parts = lines.map(lambda x: x.split(','))
    people = parts.map(lambda p: Row(p[0], p[1], p[2].strip()))
    schemaPeople = spark.createDataFrame(people, schema)
    schemaPeople.show()

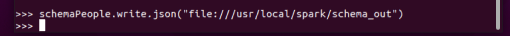

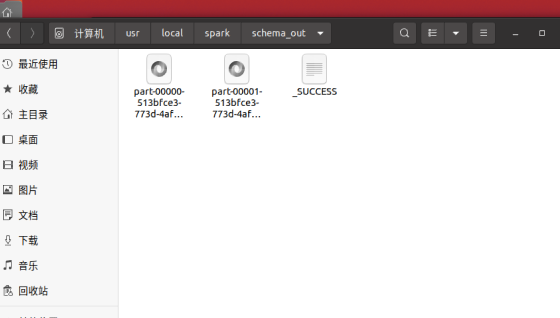
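In the programmatic schema above every column is a `StringType`, so the score is stored as text. If a numeric score is wanted, one option is to declare that field as `IntegerType` and convert the value while parsing. The following is only a sketch under that assumption; `to_typed_tuple` and `build_typed_df` are hypothetical names.

```python
def to_typed_tuple(line):
    """Turn one 'name,course,score' line into a (str, str, int) tuple."""
    name, course, score = line.split(',')
    return (name.strip(), course.strip(), int(score))


def build_typed_df(spark, path):
    """Hypothetical wrapper; needs a live SparkSession, so it is not called here."""
    from pyspark.sql.types import StructType, StructField, StringType, IntegerType

    # Explicit schema: two nullable string columns and one nullable integer column.
    schema = StructType([
        StructField('name', StringType(), True),
        StructField('course', StringType(), True),
        StructField('score', IntegerType(), True),
    ])
    rows = spark.sparkContext.textFile(path).map(to_typed_tuple)
    # The tuples must match the schema order and types, otherwise Spark raises at runtime.
    return spark.createDataFrame(rows, schema)
```

With an explicit schema Spark does not need to sample the data to infer types, and a mismatch between the data and the declared types surfaces as an error instead of a silently wrong column type.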

4. Saving a DataFrame to a file

schemaPeople.write.json("file:///usr/local/spark/schema_out")
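Note that `write.json` produces a directory of part files at the given path, not a single JSON file, and by default it fails if the path already exists. The sketch below shows one way to make the write mode explicit and to read the output back; `save_as_json` and `load_json` are hypothetical helper names.

```python
def save_as_json(df, path, overwrite=False):
    """Write a Spark DataFrame as JSON lines under `path` and return the mode used."""
    # "errorifexists" is Spark's default save mode; "overwrite" replaces existing output.
    mode = "overwrite" if overwrite else "errorifexists"
    df.write.mode(mode).json(path)
    return mode


def load_json(spark, path):
    """Read the JSON output directory back into a Spark DataFrame."""
    return spark.read.json(path)
```

For example, `save_as_json(schemaPeople, "file:///usr/local/spark/schema_out", overwrite=True)` would let the step in this section be rerun without deleting the output directory first.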

Original: https://www.cnblogs.com/jieninice/p/14766707.html