192.168.0.210 deploy
192.168.0.211 node1
192.168.0.212 node2
192.168.0.213 node3

(1) Disable firewalld

systemctl stop firewalld

systemctl disable firewalld

(2) Disable SELinux:

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

(3) Disable NetworkManager

systemctl disable NetworkManager && systemctl stop NetworkManager

(4) Map hostnames to IP addresses:

vim /etc/hosts

172.30.112.78 deploy
172.30.112.179 node1
172.30.112.115 node2
172.30.112.82 node3
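Editing the hosts file by hand on four machines is easy to get wrong, so here is a minimal sketch of an idempotent alternative. `add_host_entry` is a hypothetical helper name, not part of any tool; it takes the target file as an argument so it can be tried on a scratch copy before pointing it at /etc/hosts.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless an uncommented line already maps NAME.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # NAME must appear as a whole whitespace-delimited field,
    # so "node1" does not match an existing "node13".
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

For example, `add_host_entry /etc/hosts 172.30.112.179 node1` can be run any number of times and still leaves a single node1 line.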

(5) Set the hostname (run each command on the matching host):

hostnamectl set-hostname deploy

hostnamectl set-hostname node1

hostnamectl set-hostname node2

hostnamectl set-hostname node3

(6) Synchronize network time and set the time zone

yum install chrony -y

systemctl restart chronyd.service && systemctl enable chronyd.service

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

(7) Raise the file descriptor limits

for i in deploy node1 node2 node3;do ssh $i "echo 'ulimit -SHn 102400' >> /etc/rc.local";done

cat >> /etc/security/limits.conf << EOF
* soft nofile 65535
* hard nofile 65535
EOF

(8) Tune kernel parameters

for i in deploy node1 node2 node3;do ssh $i "echo 'vm.swappiness = 0' >> /etc/sysctl.conf";done

for i in deploy node1 node2 node3;do ssh $i "echo 'kernel.pid_max = 4194303' >> /etc/sysctl.conf";done

for i in deploy node1 node2 node3;do ssh $i "sysctl -p";done
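Appending with `>>` adds a duplicate line to /etc/sysctl.conf every time the step is repeated. The sketch below shows one way to make the change repeatable. `set_sysctl_param` is a hypothetical helper, and it assumes the usual `key = value` layout of sysctl.conf.

```shell
# set_sysctl_param FILE KEY VALUE
# Rewrites an existing "KEY = ..." line in FILE, or appends one if none exists.
set_sysctl_param() {
    local file="$1" key="$2" value="$3" pattern
    # Escape the dots so the key is matched literally rather than as a regex.
    pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=" "$file" 2>/dev/null; then
        # Go through a temporary file instead of relying on sed -i.
        sed "s|^[[:space:]]*${pattern}[[:space:]]*=.*|${key} = ${value}|" "$file" > "$file.tmp" \
            && mv "$file.tmp" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}
```

On a node this would be called as `set_sysctl_param /etc/sysctl.conf vm.swappiness 0`, followed by `sysctl -p` to load the values.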

(9) On deploy, set up passwordless SSH login to node1, node2, and node3

for host in node{1..3}; do ssh-copy-id $host;done

(10) read_ahead: improves disk read performance by prefetching data into RAM

echo "8192" > /sys/block/sda/queue/read_ahead_kb

(11) I/O scheduler: use noop for SSDs and deadline for SATA/SAS disks

echo "deadline" >/sys/block/sd[x]/queue/scheduler

echo "noop" >/sys/block/sd[x]/queue/scheduler
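The SSD versus SATA/SAS choice can be read from the kernel instead of being decided by hand: /sys/block/<dev>/queue/rotational holds 0 for non-rotational devices and 1 for spinning disks. The following is a sketch only. `pick_scheduler` and `apply_scheduler` are hypothetical names, and virtual disks often report 1 whatever the backing storage is, so check the value before trusting it.

```shell
# pick_scheduler ROTATIONAL
# Prints "noop" for non-rotational devices (0) and "deadline" for spinning disks (1).
pick_scheduler() {
    case "$1" in
        0) echo noop ;;
        1) echo deadline ;;
        *) echo "unexpected rotational value: $1" >&2; return 1 ;;
    esac
}

# apply_scheduler DEVICE [SYSFS_ROOT]
# Reads the rotational flag of DEVICE and writes the matching scheduler name.
# SYSFS_ROOT defaults to /sys/block; overriding it allows a dry run on a fake tree.
apply_scheduler() {
    local dev="$1" root="${2:-/sys/block}" rotational sched
    rotational=$(cat "$root/$dev/queue/rotational") || return 1
    sched=$(pick_scheduler "$rotational") || return 1
    echo "$sched" > "$root/$dev/queue/scheduler"
}
```

Running `apply_scheduler sdb` as root would then set the scheduler for /dev/sdb. As with the echo commands above, the setting does not survive a reboot.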

vim /etc/yum.repos.d/ceph.repo

[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/$basearch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]
name=cephnoarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]
name=Ceph source package
baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/SRPMS
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc

for i in deploy node1 node2 node3;do scp /root/ceph.repo root@$i:/etc/yum.repos.d/;done

for i in deploy node1 node2 node3;do scp /root/centos.repo root@$i:/etc/yum.repos.d/;done

for i in deploy node1 node2 node3;do ssh $i "yum clean all";done
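If the repo file is generated from a script instead of typed in vim, the main pitfall is that the shell expands `$basearch` to an empty string unless it is quoted. yum has to see the literal text, because it substitutes the architecture itself. A minimal sketch, with `repo_section` and `write_ceph_repo` as hypothetical helper names and the mirror URL taken from the file above:

```shell
# repo_section ID NAME SUBDIR
# Prints one yum repository stanza for the Nautilus el7 mirror.
repo_section() {
    printf '[%s]\nname=%s\n' "$1" "$2"
    printf 'baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/%s\n' "$3"
    printf 'enabled=1\ngpgcheck=1\ntype=rpm-md\n'
    printf 'gpgkey=https://download.ceph.com/keys/release.asc\n\n'
}

# write_ceph_repo FILE
# Writes the three stanzas (binary, noarch, source) to FILE.
write_ceph_repo() {
    {
        # Single quotes keep $basearch literal for yum to expand later.
        repo_section ceph ceph '$basearch'
        repo_section ceph-noarch cephnoarch 'noarch/'
        repo_section ceph-source 'Ceph source package' SRPMS
    } > "$1"
}
```

`write_ceph_repo /root/ceph.repo` would produce the file that the scp loop then distributes.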

yum install -y epel-release

yum install -y ceph-deploy

1. Install the Ceph packages on all nodes (node1 node2 node3)

for i in node1 node2 node3;do ssh $i "yum install -y epel-release";done

for i in node1 node2 node3;do ssh $i "yum install -y ceph ceph-radosgw";done

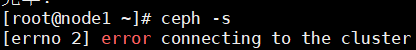

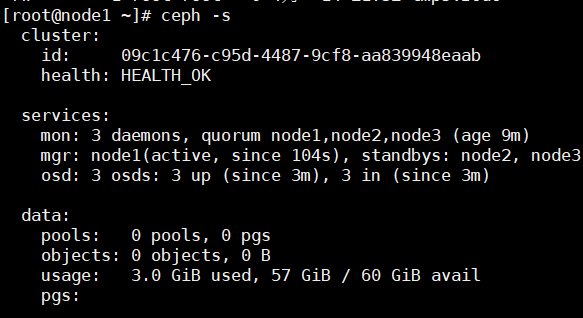

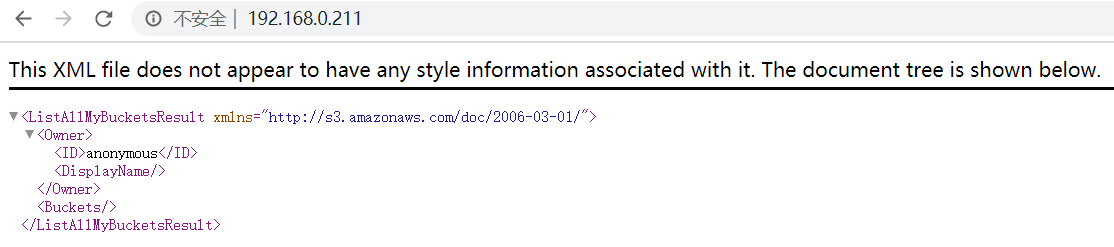

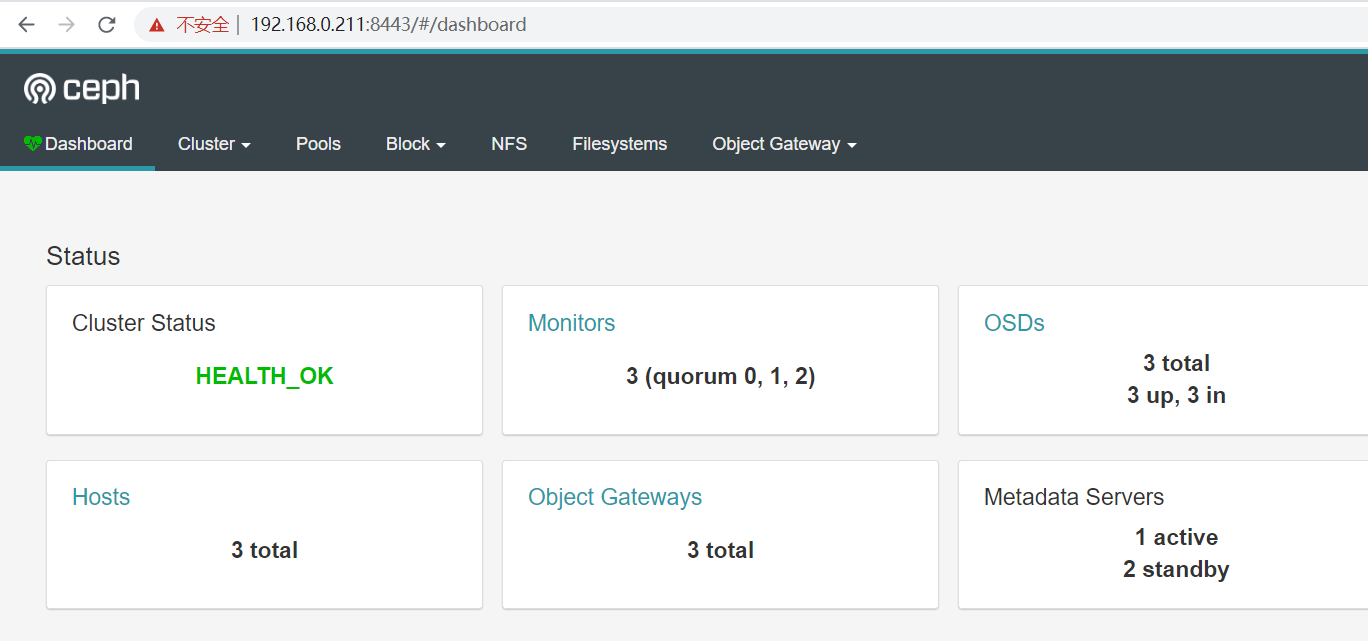

2. Create the cluster

[root@deploy ~]# mkdir /etc/ceph

[root@deploy ~]# ls

[root@deploy ~]# cd /etc/ceph

[root@deploy ceph]# ls

[root@deploy ceph]# ceph-deploy new node1 node2 node3

[root@deploy ceph]# ceph-deploy mon create-initial

[root@deploy ceph]# ceph-deploy osd create node1 --data /dev/sdb

[root@deploy ceph]# ceph-deploy osd create node2 --data /dev/sdb

[root@deploy ceph]# ceph-deploy osd create node3 --data /dev/sdb

[root@deploy ceph]# ceph-deploy mgr create node1 node2 node3

[root@deploy ceph]# ceph-deploy admin node1 node2 node3 # push the admin key
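The three OSD commands differ only in the host name, and a missing space between the host and --data is enough to break one of them. One option is to generate the command lines and review them before running anything. This is a sketch under the assumption that every node uses the same data device; `osd_create_commands` is a hypothetical helper that only prints text.

```shell
# osd_create_commands DEVICE NODE...
# Prints one "ceph-deploy osd create" command line per node; nothing is executed.
osd_create_commands() {
    local device="$1" node
    shift
    for node in "$@"; do
        printf 'ceph-deploy osd create %s --data %s\n' "$node" "$device"
    done
}
```

`osd_create_commands /dev/sdb node1 node2 node3` prints the three commands, which can then be pasted into the /etc/ceph directory session on deploy once they look right.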

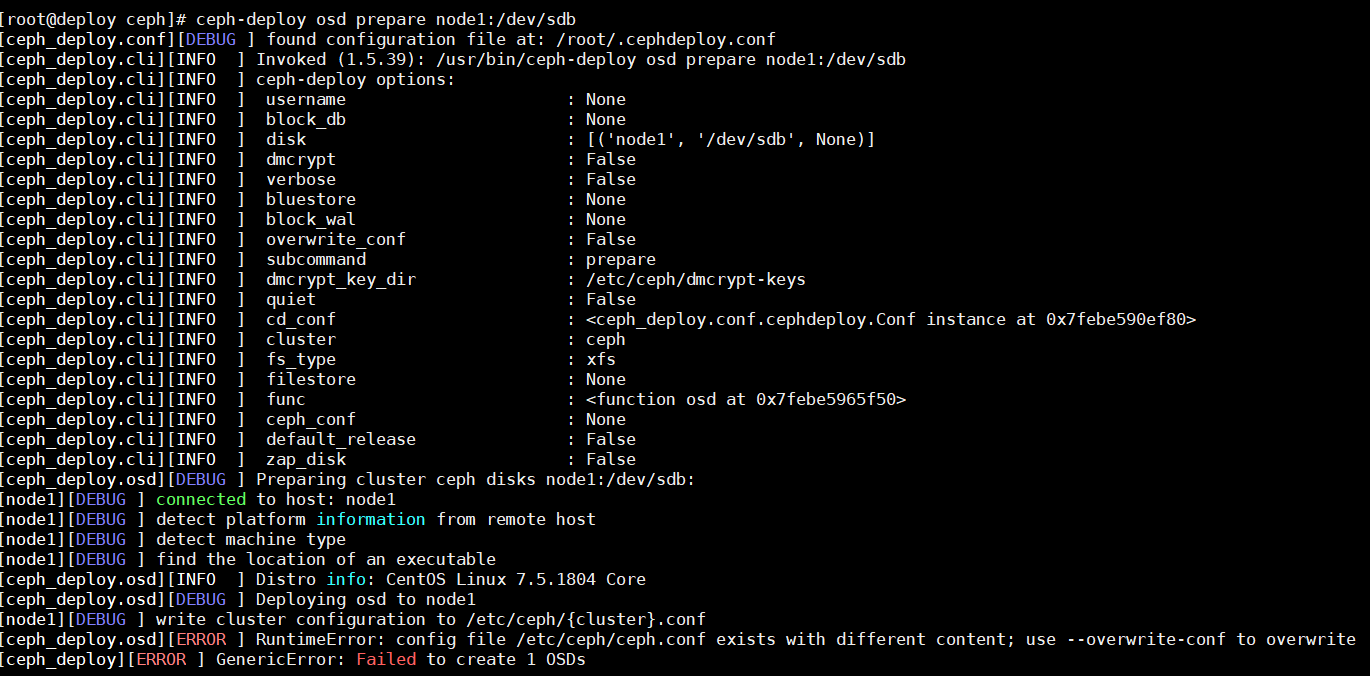

[root@deploy ceph]# ceph-deploy osd prepare node1:/dev/sdb

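So I went looking online for a fix again. The cause appears to be that the conf files were out of sync across the nodes. After several rounds of searching, it turned out that --overwrite is used differently from one write-up to the next.

[root@deploy ceph]# ceph-deploy --overwrite-conf config push deploy node1 node2 node3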

Original: https://www.cnblogs.com/deny/p/14774419.html