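If you have already completed a binary-based installation, building a cluster with kubeadm should no longer be difficult.

The hard part is pulling the images, and a single shell script takes care of that.

This article was written by copying and pasting while I carried out the steps; if you spot an error, please leave a comment.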

Server overview

| Name | IP | Components |
|---|---|---|
| master01 | 192.168.1.20 | docker, etcd, nginx, keepalived |
| master02 | 192.168.1.21 | docker, etcd, nginx, keepalived |
| node01 | 192.168.1.22 | docker, etcd |
| VIP | 192.168.1.26/24 | |

# Disable the firewall
[root@master ~]# systemctl stop firewalld && systemctl disable firewalld

# Disable SELinux
[root@master ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

# Disable swap
[root@master ~]# sed -ri 's/.*swap.*/#&/' /etc/fstab
[root@master ~]# swapoff -a

# Add host name resolution
[root@master ~]# cat >> /etc/hosts << EOF
192.168.1.20 master01
192.168.1.21 master02
192.168.1.22 node01
EOF

# Set the host name
[root@master ~]# echo "master01" >/etc/hostname

# Add the kernel bridge parameters
[root@master ~]# cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# Apply the settings
[root@master ~]# sysctl --system

# DNS
[root@master ~]# echo -e "nameserver 114.114.114.114\nnameserver 114.114.114.115" >/etc/resolv.conf

# Time zone
[root@master ~]# yum install ntpdate -y
[root@master ~]# ntpdate time.windows.com
[root@master ~]# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

# Install essential tools (tab completion)
[root@master ~]# yum install bash-completion wget vim net-tools -y
# source /usr/share/bash-completion/bash_completion
# echo "source <(kubectl completion bash)" >>/etc/bashrc
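The swap `sed` command above comments out every line that mentions swap, including lines that are already commented, so running it twice stacks `#` characters. Below is a minimal sketch of an idempotent variant, assuming a `sed` that supports `-E`. The function name `comment_out_swap` is made up for illustration, and it prints the result instead of editing the file in place.

```shell
#!/bin/bash
# Hypothetical helper: print FILE with active swap entries commented out.
# Lines that already start with '#' are left untouched.
comment_out_swap() {
  sed -E '/^[[:space:]]*#/!s/.*swap.*/#&/' "$1"
}

# Example: preview the change before touching the real file.
# comment_out_swap /etc/fstab
```

Printing to stdout makes it easy to compare the result with the current `/etc/fstab` before overwriting anything.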

[root@master01 ~]# yum install epel-release -y

[root@master01 ~]# yum install nginx keepalived -y

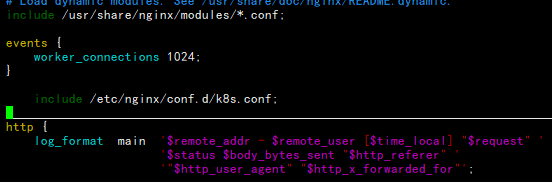

[root@master01 ~]# cat /etc/nginx/conf.d/k8s.conf

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.1.20:6443; # Master1 APISERVER IP:PORT

server 192.168.1.21:6443; # Master2 APISERVER IP:PORT

}

server {

listen 16443; # nginx shares the host with the master node, so this port must not be 6443 or it would conflict

proxy_pass k8s-apiserver;

}

}

The configuration is the same on both machines.
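If more masters are added later, the `upstream` block has to list each apiserver. A minimal sketch of generating that block from a list of IPs follows; the function name `render_upstream` is hypothetical, and port 6443 is simply the apiserver port used in this article.

```shell
#!/bin/bash
# Hypothetical helper: print an nginx "upstream" block for the given
# apiserver IPs; port 6443 is the apiserver port used in this article.
render_upstream() {
  local ip
  echo "upstream k8s-apiserver {"
  for ip in "$@"; do
    echo "    server ${ip}:6443;"
  done
  echo "}"
}

render_upstream 192.168.1.20 192.168.1.21
```

The output is meant to be pasted into the `stream` block by hand; the sketch does not touch the nginx configuration itself.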

! Configuration File for keepalived

global_defs {

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.26/24

}

track_script {

check_nginx

}

}

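Contents of /etc/keepalived/check_nginx.sh:

#!/bin/bash

# Count the processes whose command line contains "nginx".
# This script's own name also contains "nginx", so its processes are counted too,
# which is presumably why the threshold is 2 rather than 0.
count=$(ps -ef | grep -c '[n]ginx')

if [ "$count" -le 2 ]; then

systemctl stop keepalived

fi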
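keepalived also treats a non-zero exit status from a `vrrp_script` as a failed check, so the script does not have to stop keepalived itself. Below is a minimal sketch of such a variant, assuming bash's `/dev/tcp` redirection and the coreutils `timeout` command are available. The function name `port_open` and the choice of probing port 16443 on 127.0.0.1 are assumptions, not part of the original setup.

```shell
#!/bin/bash
# Hypothetical helper: succeed when a TCP connection to HOST:PORT can be opened.
port_open() {
  local host=$1 port=$2
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# As a check script, the exit status reports nginx health to keepalived:
# port_open 127.0.0.1 16443 || exit 1
```

With a check like this keepalived stays running, so it should be able to reclaim the VIP once nginx is listening again, instead of needing a manual `systemctl start keepalived`.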

[root@master01 ~]# chmod +x /etc/keepalived/check_nginx.sh

[root@master01 ~]# systemctl daemon-reload && systemctl start nginx keepalived && systemctl enable nginx keepalived

Backup server configuration

[root@master01 ~]# scp -r /etc/nginx/* root@192.168.1.21:/etc/nginx

[root@master01 ~]# scp -r /etc/keepalived/* root@192.168.1.21:/etc/keepalived/

[root@master02 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id NGINX_BACKUP

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.1.26/24

}

track_script {

check_nginx

}

}

[root@master02 ~]# systemctl daemon-reload && systemctl start nginx keepalived && systemctl enable nginx keepalived

Check the services

[root@master01 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cc:49:b4 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.20/24 brd 192.168.1.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.1.26/24 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::2b40:97fb:af46:680b/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@master01 ~]# systemctl status nginx keepalived|grep Active

Active: active (running) since Mon 2021-05-17 22:58:32 CST; 6min ago

Active: active (running) since Mon 2021-05-17 22:58:32 CST; 6min ago

Test the failover

[root@master01 /etc/keepalived]# systemctl stop nginx

[root@master01 /etc/keepalived]# systemctl status nginx keepalived|grep Active

Active: inactive (dead) since Mon 2021-05-17 23:09:44 CST; 2s ago

Active: inactive (dead) since Mon 2021-05-17 23:09:45 CST; 843ms ago

[root@master01 /etc/keepalived]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cc:49:b4 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.20/24 brd 192.168.1.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet6 fe80::2b40:97fb:af46:680b/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@master02 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8c:5a:12 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.21/24 brd 192.168.1.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.1.26/24 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::4f77:fde8:b2e6:7548/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@master01 ~]# systemctl start nginx keepalived

[root@master01 ~]# ip a s eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cc:49:b4 brd ff:ff:ff:ff:ff:ff

inet 192.168.1.20/24 brd 192.168.1.255 scope global noprefixroute eth0

valid_lft forever preferred_lft forever

inet 192.168.1.26/24 scope global secondary eth0

valid_lft forever preferred_lft forever

inet6 fe80::2b40:97fb:af46:680b/64 scope link noprefixroute

valid_lft forever preferred_lft forever
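Reading the `ip a s eth0` output by eye works for two machines; the same check can be scripted. Here is a minimal sketch that reads `ip addr` output on stdin and reports whether the VIP is present. The function name `holds_vip` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `ip addr` output on stdin and succeed when
# the given IPv4 address is configured on the interface.
holds_vip() {
  local vip=$1
  grep -Fq "inet ${vip}/"
}

# Example (interface and VIP taken from this article):
# ip -4 addr show eth0 | holds_vip 192.168.1.26 && echo "VIP is on this host"
```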

Overview

| Name | IP | Components |
|---|---|---|
| etcd-1 | 192.168.1.20 | etcd |
| etcd-2 | 192.168.1.21 | etcd |
| etcd-3 | 192.168.1.22 | etcd |

[root@master01 ~]# mv cfssl_1.5.0_linux_amd64 /usr/local/bin/cfssl
[root@master01 ~]# mv cfssljson_1.5.0_linux_amd64 /usr/local/bin/cfssljson
[root@master01 ~]# mv cfssl-certinfo_1.5.0_linux_amd64 /usr/bin/cfssl-certinfo

I forgot to grant execute permission here; granting it before moving the files would have been enough.

# mkdir -p ~/TLS/etcd && cd ~/TLS/etcd
[root@master01 ~/TLS/etcd]# chmod +x /usr/local/bin/cfssl*
[root@master01 ~/TLS/etcd]# chmod +x /usr/bin/cfssl*
[root@master01 ~/TLS/etcd]# cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

cat > ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

[root@master01 ~/TLS/etcd]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

cat > server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.1.20",
    "192.168.1.21",
    "192.168.1.22"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

[root@master01 ~/TLS/etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

[root@master01 ~]# mkdir /opt/etcd/{bin,cfg,ssl} -p
[root@master02 ~]# mkdir /opt/etcd/{bin,cfg,ssl} -p
[root@node01 ~]# mkdir /opt/etcd/{bin,cfg,ssl} -p

[root@master01 ~]# tar zxf etcd-v3.4.15-linux-amd64.tar.gz
[root@master01 ~]# mv etcd-v3.4.15-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/

cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.1.20:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.1.20:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.20:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.20:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.1.20:2380,etcd-2=https://192.168.1.21:2380,etcd-3=https://192.168.1.22:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

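On the other two machines, adjust the contents accordingly (ETCD_NAME and the IP address in the listen and advertise URLs).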
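Since only the member name and the local IP differ between the three files, the file can be generated instead of edited by hand. A minimal sketch, assuming the same three-member cluster string as above; the function name `render_etcd_conf` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print etcd.conf for one member.
# Usage: render_etcd_conf NAME IP
render_etcd_conf() {
  local name=$1 ip=$2
  cat << EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.1.20:2380,etcd-2=https://192.168.1.21:2380,etcd-3=https://192.168.1.22:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Example for the second member; redirect the output to
# /opt/etcd/cfg/etcd.conf on that host.
render_etcd_conf etcd-2 192.168.1.21
```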

systemd unit file

cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

[root@master01 ~]# cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/

# systemctl daemon-reload && systemctl start etcd && systemctl enable etcd

[root@master01 ~]# ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.1.20:2379,https://192.168.1.21:2379,https://192.168.1.22:2379" endpoint health --write-out=table
+---------------------------+--------+-------------+-------+
|         ENDPOINT          | HEALTH |    TOOK     | ERROR |
+---------------------------+--------+-------------+-------+
| https://192.168.1.20:2379 |   true | 19.604498ms |       |
| https://192.168.1.21:2379 |   true | 29.896808ms |       |
| https://192.168.1.22:2379 |   true | 30.779205ms |       |
+---------------------------+--------+-------------+-------+

# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
[root@master01 ~]# yum -y install docker-ce
[root@master01 ~]# systemctl daemon-reload && systemctl enable docker && systemctl start docker

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# yum install -y kubelet kubeadm kubectl

[root@master01 ~]# rpm -qa 'kube*'
kubeadm-1.21.1-0.x86_64
kubectl-1.21.1-0.x86_64
kubelet-1.21.1-0.x86_64
kubernetes-cni-0.8.7-0.x86_64

[root@master01 ~]# kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.21.1

k8s.gcr.io/kube-controller-manager:v1.21.1

k8s.gcr.io/kube-scheduler:v1.21.1

k8s.gcr.io/kube-proxy:v1.21.1

k8s.gcr.io/pause:3.4.1

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns/coredns:v1.8.0

#!/bin/bash

images=(

kube-apiserver:v1.21.1

kube-controller-manager:v1.21.1

kube-scheduler:v1.21.1

kube-proxy:v1.21.1

pause:3.4.1

# etcd:3.4.13-0

# coredns/coredns:v1.8.0

)

for imageName in "${images[@]}";

do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

done

docker pull coredns/coredns:1.8.0

docker tag coredns/coredns:1.8.0 k8s.gcr.io/coredns/coredns:v1.8.0

docker rmi coredns/coredns:1.8.0
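The image list in the script above is typed by hand from the `kubeadm config images list` output. As a minimal sketch, the same pull, tag and remove commands can be derived from that output directly. The function name `mirror_commands` is hypothetical; like the script above, it skips etcd (external etcd is used here) and coredns (pulled separately), and it only prints the commands rather than running them.

```shell
#!/bin/bash
# Hypothetical helper: read image names (as printed by
# `kubeadm config images list`) on stdin and print the docker commands
# that fetch them from the Aliyun mirror used in this article.
# etcd and coredns are skipped, as in the script above.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

mirror_commands() {
  local image name
  while read -r image; do
    name=${image#k8s.gcr.io/}
    case $name in
      etcd:*|coredns/*|'') continue ;;
    esac
    echo "docker pull ${MIRROR}/${name}"
    echo "docker tag ${MIRROR}/${name} k8s.gcr.io/${name}"
    echo "docker rmi ${MIRROR}/${name}"
  done
}

# Dry run: review the commands, then pipe them to `bash` to execute.
# kubeadm config images list | mirror_commands
```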

cat > kubeadm-config.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 9037x2.tcaqnpaqkra9vsbw
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.20
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - master01
  - master02
  - 192.168.1.20
  - 192.168.1.21
  - 192.168.1.22
  - 127.0.0.1
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.1.26:16443 # load balancer virtual IP (VIP) and port
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:
    endpoints:
    - https://192.168.1.20:2379
    - https://192.168.1.21:2379
    - https://192.168.1.22:2379
    caFile: /opt/etcd/ssl/ca.pem
    certFile: /opt/etcd/ssl/server.pem
    keyFile: /opt/etcd/ssl/server-key.pem
#imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.21.1
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
EOF

kubeadm init --config kubeadm-config.yaml

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 192.168.1.26:16443 --token 9037x2.tcaqnpaqkra9vsbw --discovery-token-ca-cert-hash sha256:cb9c237f076712f3e90bb3046a917ccb23fc5288c7c02c0bd4249e3d868fb05e --control-plane # join additional masters

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.26:16443 --token 9037x2.tcaqnpaqkra9vsbw --discovery-token-ca-cert-hash sha256:cb9c237f076712f3e90bb3046a917ccb23fc5288c7c02c0bd4249e3d868fb05e

# join worker nodes

# mkdir -p $HOME/.kube

# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 NotReady control-plane,master 3m51s v1.21.1

[root@master01 ~]# scp -r /etc/kubernetes/pki/ root@192.168.1.21:/etc/kubernetes/

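Copy the join command printed on master01 and run it on master02 to add it as a second master.

First pull the images with the same shell script as above.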


This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

[root@master02 ~]# mkdir -p $HOME/.kube

[root@master02 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master02 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

#kubeadm join 192.168.1.26:16443 --token 9037x2.tcaqnpaqkra9vsbw --discovery-token-ca-cert-hash sha256:cb9c237f076712f3e90bb3046a917ccb23fc5288c7c02c0bd4249e3d868fb05e --control-plane

[root@master02 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 NotReady control-plane,master 28m v1.21.1

master02 NotReady control-plane,master 2m3s v1.21.1

[root@master02 ~]# curl -k https://192.168.1.26:16443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.1",

"gitCommit": "5e58841cce77d4bc13713ad2b91fa0d961e69192",

"gitTreeState": "clean",

"buildDate": "2021-05-12T14:12:29Z",

"goVersion": "go1.16.4",

"compiler": "gc",

"platform": "linux/amd64"

}

[root@master01 ~]# curl -k https://192.168.1.26:16443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.1",

"gitCommit": "5e58841cce77d4bc13713ad2b91fa0d961e69192",

"gitTreeState": "clean",

"buildDate": "2021-05-12T14:12:29Z",

"goVersion": "go1.16.4",

"compiler": "gc",

"platform": "linux/amd64"

}

[root@master01 ~]# tail -20 /var/log/nginx/k8s-access.log

192.168.1.20 192.168.1.20:6443 - [18/May/2021:09:35:18 +0800] 200 1473

192.168.1.20 192.168.1.20:6443 - [18/May/2021:09:39:00 +0800] 200 4594

192.168.1.21 192.168.1.20:6443 - [18/May/2021:09:47:39 +0800] 200 728
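To see how connections are spread across the two apiservers, the access log can be summarized per upstream. A minimal sketch, assuming the `main` log format defined earlier, where the second field is `$upstream_addr`; the function name `count_by_upstream` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: count connections per upstream from an access log
# in the "main" format defined earlier (field 2 is $upstream_addr).
# Reads the given files, or stdin when no file is given.
count_by_upstream() {
  awk '{ hits[$2]++ } END { for (u in hits) print u, hits[u] }' "$@" | sort
}

# Example:
# count_by_upstream /var/log/nginx/k8s-access.log
```

For the three log lines shown above, this would report a single upstream, 192.168.1.20:6443, with a count of 3.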

On the worker node (node01), only the kube-proxy and pause images are pulled:

#!/bin/bash

images=(

kube-proxy:v1.21.1

pause:3.4.1

)

for imageName in "${images[@]}";

do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

done

#kubeadm join 192.168.1.26:16443 --token 9037x2.tcaqnpaqkra9vsbw --discovery-token-ca-cert-hash sha256:cb9c237f076712f3e90bb3046a917ccb23fc5288c7c02c0bd4249e3d868fb05e

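The default token is valid for 24 hours; once it expires it can no longer be used. In that case a new token has to be created, and the following command generates one together with the full join command: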

kubeadm token create --print-join-command
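The join command also needs the CA certificate hash. `--print-join-command` already includes it, but when only a token is at hand the hash can be recomputed from the cluster CA certificate. Below is a minimal sketch based on the `openssl` pipeline from the kubeadm documentation, assuming an RSA CA key (the kubeadm default). The function name `ca_cert_hash` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: print the sha256 hash of a CA certificate's public
# key, the value expected after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Example on a control-plane node:
# ca_cert_hash /etc/kubernetes/pki/ca.crt
```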

[root@master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master01 NotReady control-plane,master 36m v1.21.1

master02 NotReady control-plane,master 10m v1.21.1

node01 NotReady <none>
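All nodes report NotReady at this point because no pod network add-on has been deployed yet, as the `kubeadm init` output noted. A minimal sketch for listing the nodes that are still not ready, reading `kubectl get node` output on stdin; the function name `not_ready_nodes` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read `kubectl get node` output on stdin and print
# the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example: list nodes that are still waiting, e.g. for the network add-on.
# kubectl get node | not_ready_nodes
```

An empty result means every listed node reports Ready.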

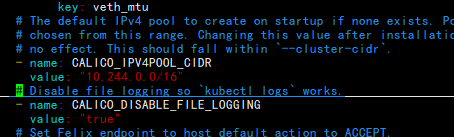

Modify calico.yaml

[root@master01 ~]# kubectl apply -f calico.yaml

[root@master01 ~]# kubectl apply -f recommended.yaml

[root@master01 ~]# kubectl create serviceaccount dashboard-admin -n kube-system

[root@master01 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

[root@master01 ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

[root@master01 ~]# kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

/ #

Original: https://www.cnblogs.com/xyz349925756/p/14780638.html